A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package

Our preprint paper: our preprint paper is available here.

Date of release: our paper is currently under review, and our code will be released after the first round of review if the reviewers' comments are positive.

Our related video: our related video is now available on YouTube (click the image below to open it, or watch it on Bilibili 1, 2):

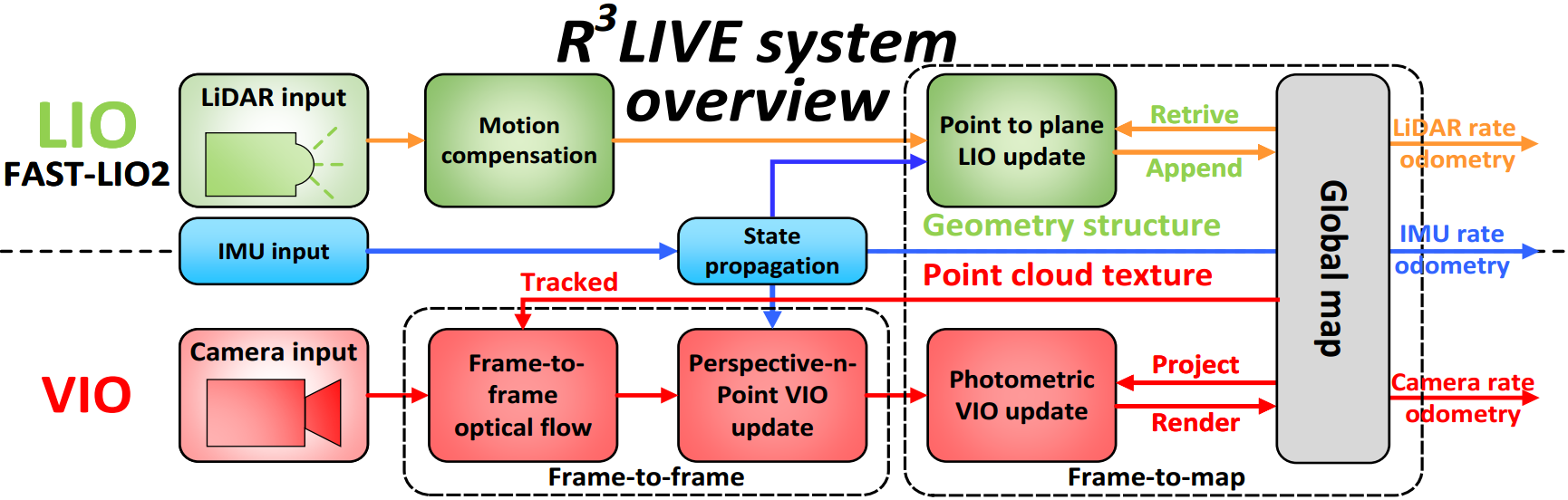

R3LIVE is a novel LiDAR-Inertial-Visual sensor fusion framework that uses measurements from LiDAR, inertial, and visual sensors to achieve robust and accurate state estimation. R3LIVE consists of two subsystems: the LiDAR-inertial odometry (LIO) and the visual-inertial odometry (VIO). The LIO subsystem (FAST-LIO) uses the measurements from the LiDAR and inertial sensors to build the geometric structure of the global map (i.e., the positions of 3D points). The VIO subsystem uses the data from the visual-inertial sensors to render the map's texture (i.e., the colors of 3D points).
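To make the division of labor between the two subsystems concrete, here is a minimal Python sketch of a colored point map in which one step writes point positions and a separate step writes point colors. It is an illustration only, not R3LIVE's implementation: the names `MapPoint`, `ColoredMap`, `add_geometry`, and `add_color` are hypothetical, the voxel-hashed storage, the pinhole projection, and the running-average color update are assumptions chosen for brevity, and the camera is assumed to sit at the origin looking along +Z (in a real system the pose would come from the state estimator).

```python
import math
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class MapPoint:
    xyz: Vec3                    # position: written by the LIO-like step
    rgb: Optional[Vec3] = None   # color: written by the VIO-like step
    n_obs: int = 0               # number of color observations averaged so far


class ColoredMap:
    """Hypothetical global map: at most one point per voxel (an assumption)."""

    def __init__(self, voxel_size: float = 0.1) -> None:
        self.voxel_size = voxel_size
        self.points: Dict[Tuple[int, ...], MapPoint] = {}

    def _voxel_key(self, xyz: Vec3) -> Tuple[int, ...]:
        return tuple(math.floor(c / self.voxel_size) for c in xyz)

    def add_geometry(self, scan: Iterable[Sequence[float]]) -> None:
        """LIO-like step: register 3D points; only geometry is touched."""
        for raw in scan:
            xyz = (float(raw[0]), float(raw[1]), float(raw[2]))
            # Keep the first point that lands in each voxel.
            self.points.setdefault(self._voxel_key(xyz), MapPoint(xyz))

    def add_color(self, image, fx: float, fy: float, cx: float, cy: float) -> None:
        """VIO-like step: project points into the image and update their color.

        `image` is a row-major grid of (r, g, b) tuples. The camera pose is
        assumed to be identity, so map coordinates are camera coordinates.
        """
        height, width = len(image), len(image[0])
        for point in self.points.values():
            x, y, z = point.xyz
            if z <= 0.0:
                continue  # behind the camera
            u = int(round(fx * x / z + cx))  # pinhole projection, column
            v = int(round(fy * y / z + cy))  # pinhole projection, row
            if not (0 <= u < width and 0 <= v < height):
                continue  # outside the field of view
            pixel = image[v][u]
            if point.rgb is None:
                point.rgb = tuple(float(c) for c in pixel)
            else:
                n = point.n_obs
                # Running average over all observations of this point.
                point.rgb = tuple(
                    (old * n + float(new)) / (n + 1)
                    for old, new in zip(point.rgb, pixel)
                )
            point.n_obs += 1
```

In this sketch a point can exist in the map without a color (it has been scanned but never seen by the camera), while a color is never stored without a position, which mirrors the description above: geometry comes from the LiDAR-inertial side and texture from the visual-inertial side.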

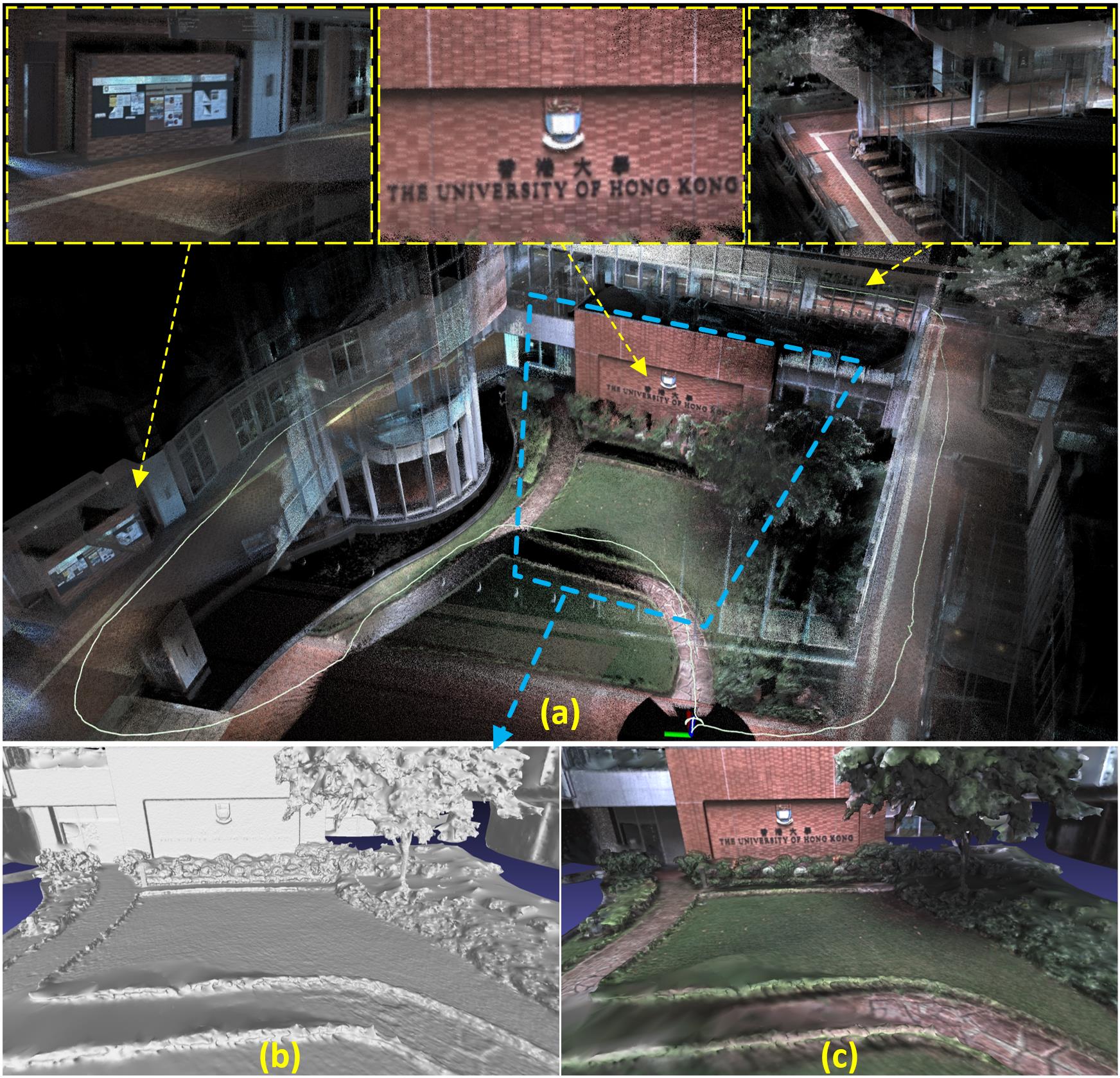

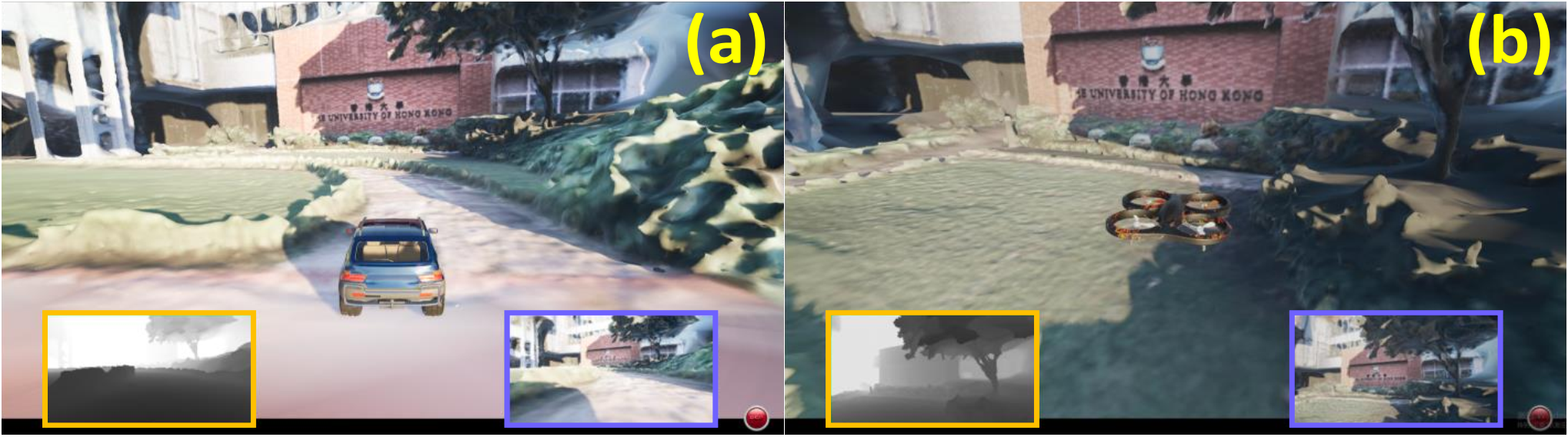

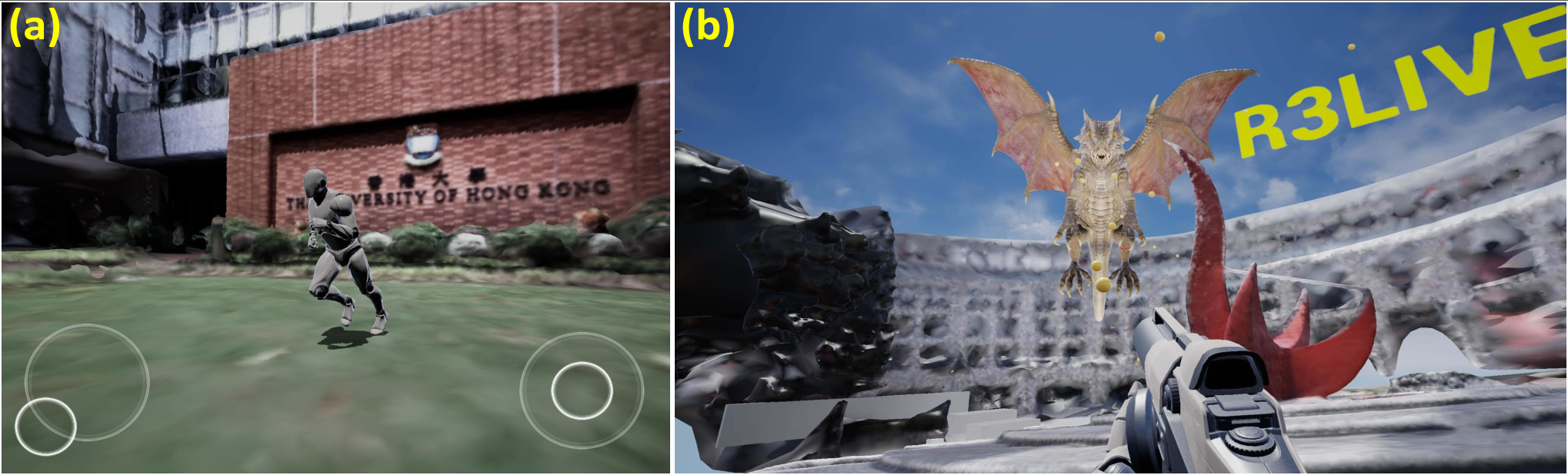

R3LIVE is developed based on our previous work R2LIVE. With careful architecture design and implementation, it is a versatile and well-engineered system for a variety of applications: it can serve as a SLAM system for real-time robotic applications, and it can also reconstruct dense, precise, RGB-colored 3D maps for applications such as surveying and mapping. Moreover, to make R3LIVE more extensible, we develop a series of offline utilities for reconstructing and texturing meshes, which further narrows the gap between R3LIVE and various 3D applications such as simulators and video games.

- FAST-LIO: A computationally efficient and robust LiDAR-inertial odometry package.

- R2LIVE: A robust, real-time tightly-coupled multi-sensor fusion package.

- ikd-Tree: A state-of-the-art dynamic KD-Tree for 3D kNN search.

- LOAM-Livox: A robust LiDAR Odometry and Mapping (LOAM) package for Livox LiDAR.