This is the implementation of our Computer Vision and Image Understanding 2022 work 'A Multi Camera Unsupervised Domain Adaptation Pipeline for Object Detection in Cultural Sites through Adversarial Learning and Self-Training'. The aim is to reduce the gap between the source and target distributions, improving object detector performance on the target domains when training and test data come from different distributions.

If you want to use this code with your own dataset, please follow the guide below.

Please leave a star ⭐ and cite the following paper if you use this repository for your project.

@article{PASQUALINO2022103487,
  title = {A multi camera unsupervised domain adaptation pipeline for object detection in cultural sites through adversarial learning and self-training},
  journal = {Computer Vision and Image Understanding},
  pages = {103487},
  year = {2022},
  issn = {1077-3142},
  doi = {https://doi.org/10.1016/j.cviu.2022.103487},
  url = {https://www.sciencedirect.com/science/article/pii/S1077314222000911},
  author = {Giovanni Pasqualino and Antonino Furnari and Giovanni Maria Farinella}
}

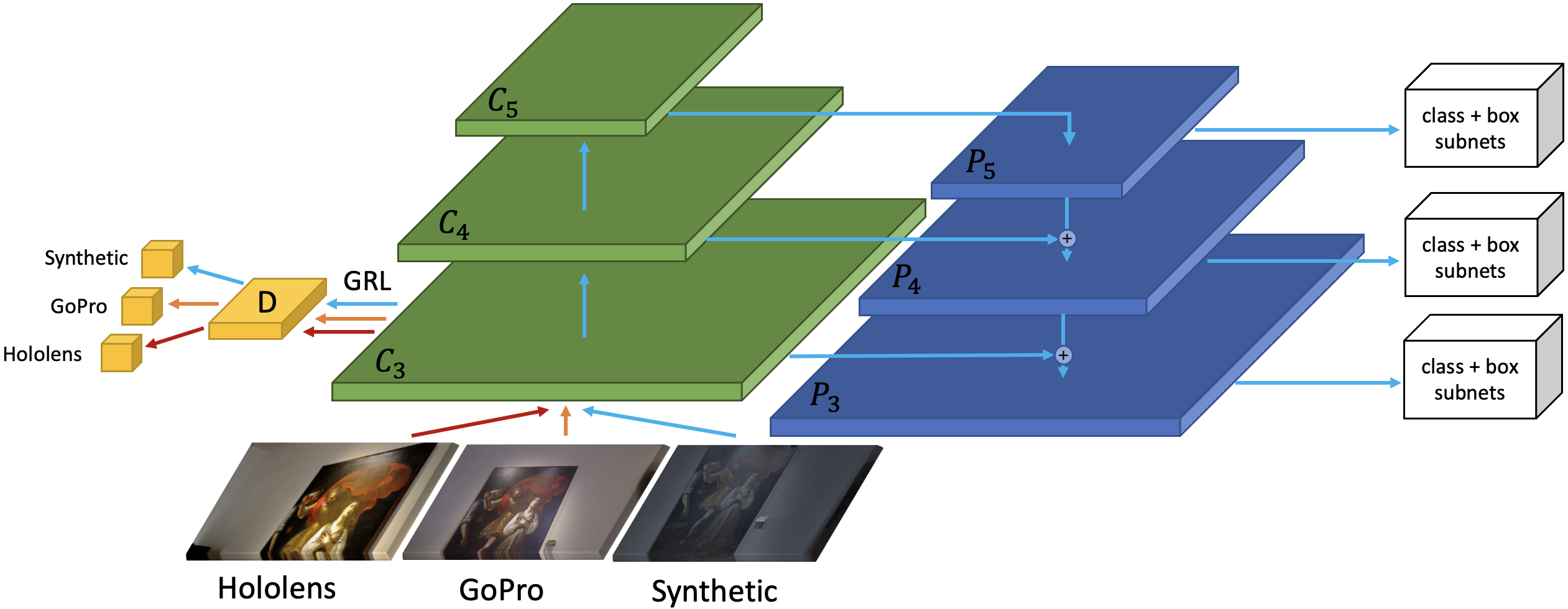

In this step the model is trained using labeled synthetic images (source domain) and unlabeled real images from Hololens and GoPro (target domains). At the end of this step, the model is used to produce pseudo labels for both the Hololens and GoPro images.

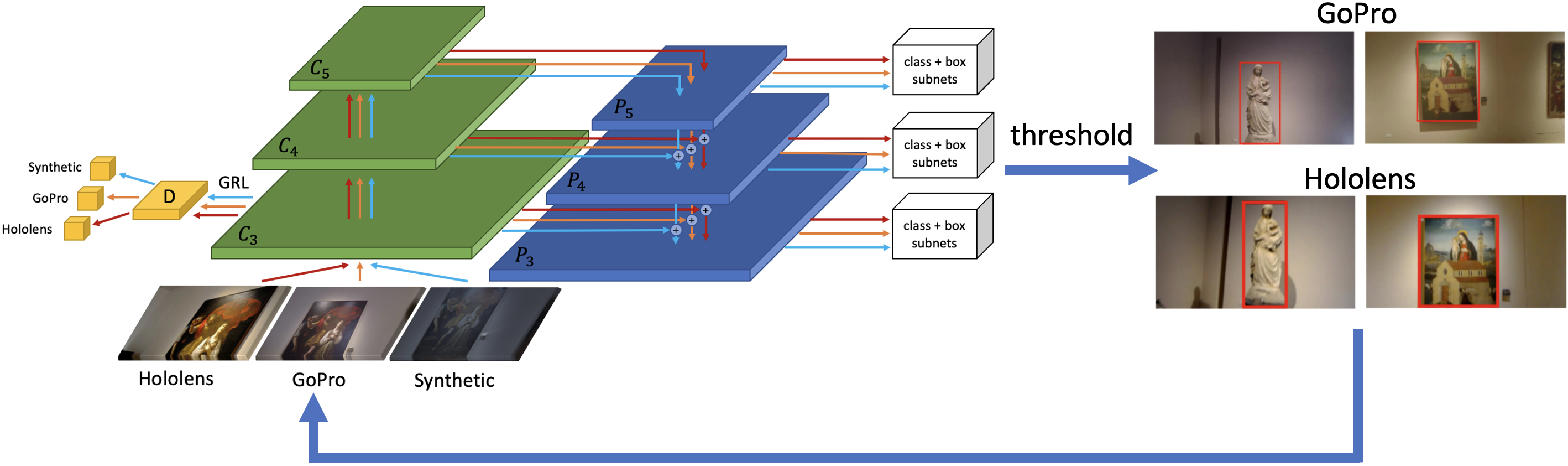

In this step the model is trained using labeled synthetic images (source domain) and the pseudo-labeled real Hololens and GoPro images (target domains) produced in the previous step. This step is iterative: at the end of each iteration, we produce better pseudo labels for the target domains.
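The pseudo-labeling idea can be illustrated with a minimal sketch: keep only the detections whose confidence exceeds a threshold and store them as COCO-style annotations for the next training round. This is not the code used in stmda_train.py; the function name, the detection dictionary keys, and the default threshold of 0.8 are assumptions made for illustration.

```python
from typing import Dict, List


def detections_to_coco_annotations(
    detections: List[Dict],
    score_threshold: float = 0.8,  # assumed value, not taken from the paper
) -> List[Dict]:
    """Keep confident detections and convert them to COCO-style annotations.

    Each detection is a dict with hypothetical keys: image_id, category_id,
    bbox as [x1, y1, x2, y2] in pixels, and score in [0, 1].
    """
    annotations = []
    for det in detections:
        if det["score"] < score_threshold:
            continue  # low-confidence boxes are not used as pseudo labels
        x1, y1, x2, y2 = det["bbox"]
        width, height = x2 - x1, y2 - y1
        annotations.append({
            "id": len(annotations) + 1,
            "image_id": det["image_id"],
            "category_id": det["category_id"],
            "bbox": [x1, y1, width, height],  # COCO uses [x, y, width, height]
            "area": width * height,
            "iscrowd": 0,
        })
    return annotations
```

A higher threshold yields fewer but cleaner pseudo labels, while a lower one keeps more boxes at the cost of more label noise.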

You can use this repo following one of these three methods:

NB: Detectron2 0.2.1 is required; this code will not work with other versions.

Quickstart here 👉

Or load and run the STMDA-RetinaNet.ipynb on Google Colab following the instructions inside the notebook.

Follow the official guide to install Detectron2 0.2.1

Or

Download the official Detectron2 0.2.1 from here

Unzip the file and rename it to detectron2

Run python -m pip install -e detectron2
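Since only Detectron2 0.2.1 is supported, it can help to check the installed version before training. The snippet below is a small sketch, not part of the repository: the helper names are hypothetical, and it assumes the package is registered under the distribution name detectron2 and that Python 3.8 or newer is used.

```python
from importlib import metadata

REQUIRED_VERSION = "0.2.1"


def is_required_version(installed: str, required: str = REQUIRED_VERSION) -> bool:
    """Return True when the versions match, ignoring local build tags such as '+cu101'."""
    return installed.split("+")[0] == required


def installed_detectron2_version():
    """Return the installed Detectron2 version string, or None if it is not installed."""
    try:
        return metadata.version("detectron2")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_detectron2_version()
    if version is None:
        print("Detectron2 is not installed")
    elif is_required_version(version):
        print(f"Detectron2 {version} matches the required version")
    else:
        print(f"Found Detectron2 {version}, but {REQUIRED_VERSION} is required")
```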

Follow these instructions:

cd docker/

# Build

docker build -t detectron2:v0 .

# Launch

docker run --gpus all -it --shm-size=8gb -v /home/yourpath/:/home/yourpath --name=name_container detectron2:v0

If you exit from the container you can restart it using:

docker start name_container

docker exec -it name_container /bin/bash

Dataset is available here

If you want to use this code with your own dataset, arrange the dataset in COCO format. Inside the script stmda_train.py, register your dataset using:

register_coco_instances("dataset_name_source_training",{},"path_annotations","path_images")

register_coco_instances("dataset_name_init_target_training",{},"path_annotations","path_images")

register_coco_instances("dataset_name_init_target2_training",{},"path_annotations","path_images")

The following target training entries are the paths where the annotations produced at the end of step 1 will be saved:

register_coco_instances("dataset_name_target_training",{},"path_annotations","path_images")

register_coco_instances("dataset_name_target2_training",{},"path_annotations","path_images")

register_coco_instances("dataset_name_target_test",{},"path_annotations","path_images")

register_coco_instances("dataset_name_target_test2",{},"path_annotations","path_images")
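For reference, a COCO-format annotation file is a JSON object with images, annotations, and categories lists. The sketch below builds a minimal example and runs a basic consistency check on it. The file name, image size, and category name are placeholders, and find_coco_problems is a hypothetical helper, not something provided by Detectron2 or this repository.

```python
import json

# Minimal COCO-style annotation structure with placeholder values.
minimal_coco = {
    "images": [
        {"id": 1, "file_name": "frame_0001.jpg", "width": 1280, "height": 720},
    ],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,
            "category_id": 1,
            "bbox": [100, 150, 200, 300],  # [x, y, width, height] in pixels
            "area": 60000,
            "iscrowd": 0,
        },
    ],
    "categories": [{"id": 1, "name": "artwork"}],
}


def find_coco_problems(coco: dict) -> list:
    """Return a list of human-readable problems found in a COCO-style dict."""
    problems = []
    for key in ("images", "annotations", "categories"):
        if key not in coco:
            problems.append(f"missing top-level key: {key}")
    image_ids = {img["id"] for img in coco.get("images", [])}
    category_ids = {cat["id"] for cat in coco.get("categories", [])}
    for ann in coco.get("annotations", []):
        if ann["image_id"] not in image_ids:
            problems.append(f"annotation {ann['id']} references unknown image {ann['image_id']}")
        if ann["category_id"] not in category_ids:
            problems.append(f"annotation {ann['id']} references unknown category {ann['category_id']}")
    return problems


if __name__ == "__main__":
    print(json.dumps(minimal_coco, indent=2))
    print(find_coco_problems(minimal_coco) or "no problems found")
```

Running a check like this on each annotation file before registering it can catch mismatched image or category ids early.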

Replace the retinanet.py script at detectron2/modeling/meta_arch/ with our retinanet.py.

Do the same for fpn.py at detectron2/modeling/backbone/, and for evaluator.py and coco_evaluation.py at detectron2/evaluation/.
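The file replacement can also be scripted. The following is a sketch under stated assumptions: the patched files are assumed to sit together in one directory (patch_dir), package_dir is assumed to be the detectron2 Python package directory that contains modeling/ and evaluation/, and install_patched_files is a hypothetical helper. Existing files are backed up with an .orig suffix before being overwritten.

```python
import shutil
from pathlib import Path

# Target subdirectory of each patched file inside the detectron2 package
# (paths as listed in the instructions above).
PATCHED_FILES = {
    "retinanet.py": "modeling/meta_arch",
    "fpn.py": "modeling/backbone",
    "evaluator.py": "evaluation",
    "coco_evaluation.py": "evaluation",
}


def install_patched_files(patch_dir, package_dir, backup=True):
    """Copy the patched files into the detectron2 package, optionally keeping backups."""
    patch_dir, package_dir = Path(patch_dir), Path(package_dir)
    copied = []
    for name, subdir in PATCHED_FILES.items():
        source = patch_dir / name
        target = package_dir / subdir / name
        if not source.is_file():
            raise FileNotFoundError(f"patched file not found: {source}")
        backup_path = target.with_name(target.name + ".orig")
        # Back up only once, so a second run does not overwrite the original copy.
        if backup and target.is_file() and not backup_path.exists():
            shutil.copy2(target, backup_path)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(source, target)
        copied.append(target)
    return copied
```

For example, `install_patched_files(".", "detectron2/detectron2")` would apply when the script is run from a directory holding the four patched files and Detectron2 was unpacked into a folder named detectron2; adjust both paths to your layout.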

Inside the script stmda_train.py you can set the parameters for the second training step, such as the number of iterations and the threshold.

Run the script stmda_train.py

Trained models are available at these links:

MDA-RetinaNet

STMDA-RetinaNet

MDA-RetinaNet-CycleGAN

STMDA-RetinaNet-CycleGAN

If you want to test the model, load the new weights, set the number of iterations to 0, and run stmda_train.py.

Results of baseline and feature alignment methods. S refers to Synthetic, H refers to Hololens and G to GoPro.

| Model | Source | Target | Test H | Test G |
|---|---|---|---|---|
| Faster RCNN | S | - | 7.61% | 30.39% |
| RetinaNet | S | - | 14.10% | 37.13% |
| DA-Faster RCNN | S | H+G merged | 10.53% | 48.23% |
| StrongWeak | S | H+G merged | 26.68% | 48.55% |
| CDSSL | S | H+G merged | 28.66% | 45.33% |
| DA-RetinaNet | S | H+G merged | 31.63% | 48.37% |
| MDA-RetinaNet | S | H, G | 34.97% | 50.81% |
| STMDA-RetinaNet | S | H, G | 54.36% | 59.51% |

Results of baseline and feature alignment methods combined with CycleGAN. H refers to Hololens while G to GoPro. "{G, H}" refers to synthetic images translated to the merged Hololens and GoPro domains.

| Model | Source | Target | Test H | Test G |
|---|---|---|---|---|
| Faster RCNN | {G, H} | - | 15.34% | 63.60% |
| RetinaNet | {G, H} | - | 31.43% | 69.59% |
| DA-Faster RCNN | {G, H} | H+G merged | 32.13% | 65.19% |
| StrongWeak | {G, H} | H+G merged | 41.11% | 66.45% |
| DA-RetinaNet | {G, H} | H+G merged | 52.07% | 71.14% |
| CDSSL | {G, H} | H+G merged | 53.06% | 71.17% |
| MDA-RetinaNet | {G, H} | H, G | 58.11% | 71.39% |
| STMDA-RetinaNet | {G, H} | H, G | 66.64% | 72.22% |