Robinson, Joseph P., Gennady Livitz, Yann Henon, Can Qin, Yun Fu, and Samson Timoner. "Face recognition: too bias, or not too bias?" In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, pp. 0-1. 2020.

@inproceedings{robinson2020face,
  title={Face recognition: too bias, or not too bias?},
  author={Robinson, Joseph P and Livitz, Gennady and Henon, Yann and Qin, Can and Fu, Yun and Timoner, Samson},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops},
  pages={0--1},
  year={2020}
}

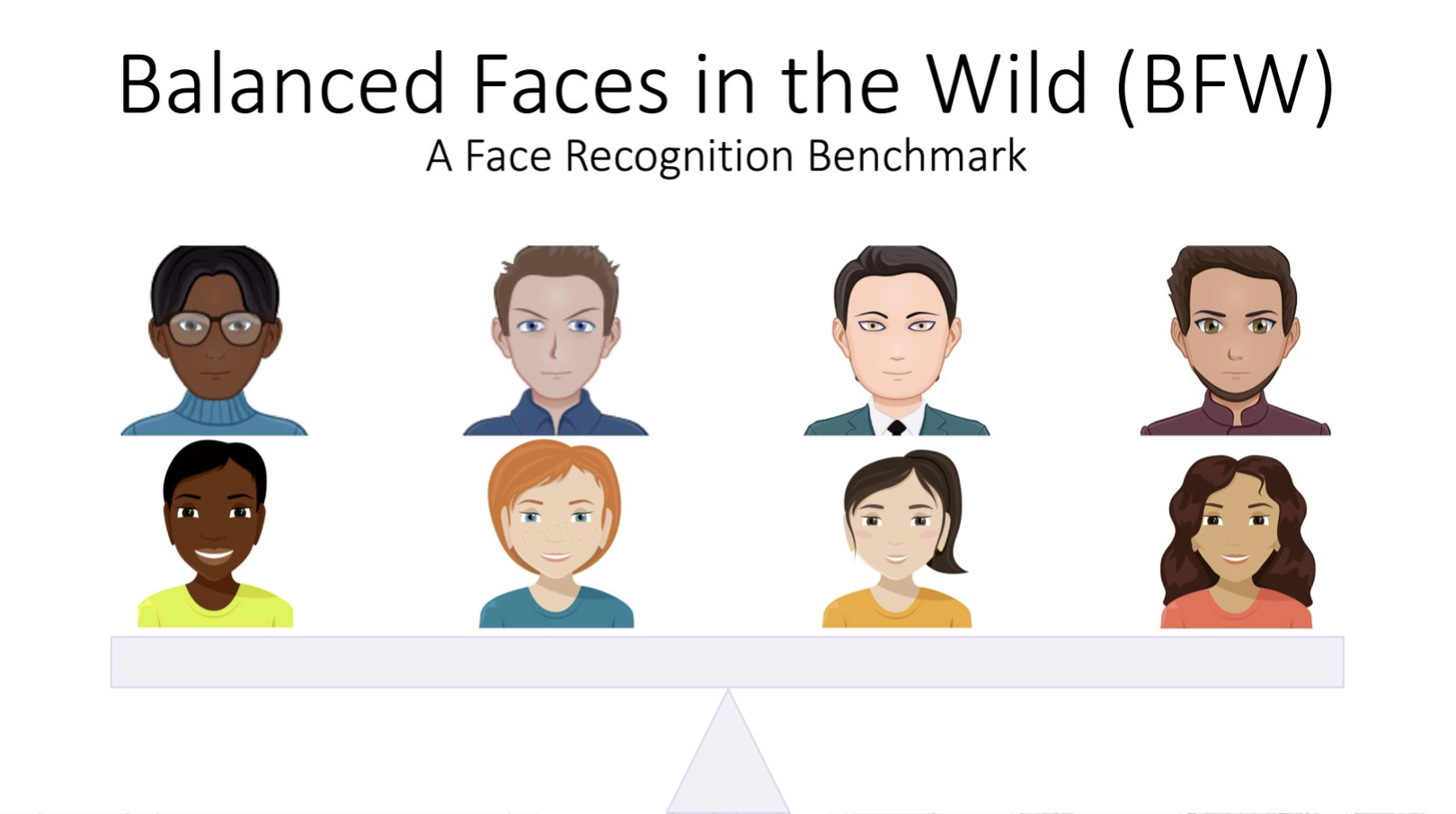

Our findings reveal a bias in scoring sensitivity across different subgroups when verifying the identity of a subject using facial images. In other words, the performance of an FR system on different subgroups (e.g., male vs. female, Asian vs. Black) typically depends on a global threshold (i.e., a decision boundary on scores or distances that determines whether a pair is true or false). Our work uses fundamental signal detection theory to show that the use of a single, global threshold causes a skew in performance ratings across different subgroups. We then demonstrate that subgroup-specific thresholds are optimal in terms of overall performance and balance across subgroups. Furthermore, we built and released the facial image dataset needed to address bias from this view of FR: Balanced Faces in the Wild (BFW).

Check out the research paper: https://arxiv.org/pdf/2002.06483.pdf

See data/README.md for more on BFW.

See code/README.md for more on the 'facebias' package and the experiments contained within.

See results/README.md for a summary of figures and results.

Register and download via this form.

We reveal critical insights into problems of bias in state-of-the-art facial recognition (FR) systems using a novel Balanced Faces in the Wild (BFW) dataset: data balanced for gender and ethnic groups. We show variations in the optimal scoring threshold for face-pairs across different subgroups. Thus, the conventional approach of learning a global threshold for all pairs results in performance gaps between subgroups. By learning subgroup-specific thresholds, we reduce performance gaps and also show a notable boost in overall performance. Furthermore, we conduct a human evaluation to measure bias in humans, which supports the hypothesis that an analogous bias exists in human perception. For the BFW database, source code, and more, visit https://github.com/visionjo/facerec-bias-bfw.
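The effect of a global versus a subgroup-specific threshold can be illustrated with a minimal sketch. It is not the code from the 'facebias' package: the subgroup names, the synthetic score distributions, and the choice of picking each threshold at a fixed target false-positive rate (FPR) are all assumptions made for illustration.

```python
import random


def threshold_at_fpr(impostor_scores, target_fpr):
    """Return a threshold t such that at most target_fpr of the
    impostor scores lie strictly above t."""
    ranked = sorted(impostor_scores, reverse=True)
    k = int(target_fpr * len(ranked))  # impostor pairs allowed above t
    return ranked[min(k, len(ranked) - 1)]


def false_positive_rate(impostor_scores, threshold):
    """Fraction of impostor (different-identity) pairs scored above threshold."""
    return sum(s > threshold for s in impostor_scores) / len(impostor_scores)


rng = random.Random(0)

# Synthetic impostor similarity scores (assumption: made-up subgroups whose
# score distributions differ only by a shift in the mean).
impostors = {
    "group_a": [rng.gauss(0.10, 0.10) for _ in range(5000)],
    "group_b": [rng.gauss(0.20, 0.10) for _ in range(5000)],
}
target_fpr = 0.01

# One global threshold learned on the pooled scores of all subgroups.
pooled = [s for scores in impostors.values() for s in scores]
global_threshold = threshold_at_fpr(pooled, target_fpr)
fpr_global = {
    g: false_positive_rate(s, global_threshold) for g, s in impostors.items()
}

# One threshold per subgroup, each learned on that subgroup's scores only.
subgroup_thresholds = {
    g: threshold_at_fpr(s, target_fpr) for g, s in impostors.items()
}
fpr_subgroup = {
    g: false_positive_rate(s, subgroup_thresholds[g])
    for g, s in impostors.items()
}

for g in impostors:
    print(
        f"{g}: FPR at global threshold = {fpr_global[g]:.4f}, "
        f"FPR at subgroup threshold = {fpr_subgroup[g]:.4f}"
    )
```

In this toy setting the global threshold yields a different FPR for each subgroup, while the per-subgroup thresholds hold every subgroup at or below the target rate.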

All source code used to generate the results and figures in the paper is in the code folder. The calculations and figure generation are all run inside Jupyter notebooks. The data used in this study is provided in data, and the sources for the manuscript text and figures are in manuscript. Results generated by the code are saved in results. See the README.md files in each directory for a full description.

Most processes and experiments depend on a pandas dataframe. The data download includes this data structure as both a csv and a pickle file, and a demo notebook shows the steps for populating it. Furthermore, the documentation in data/README summarizes the details of the data as described in the paper and used in the notebooks.
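As a rough sketch of what working with such a dataframe can look like, the example below builds a tiny table of face pairs, writes it to a csv file, reloads it, and summarizes scores per subgroup. The column names (`path1`, `path2`, `subgroup`, `label`, `score`), the file name `pairs.csv`, and all values are hypothetical; the actual schema is documented in data/README.

```python
import os
import tempfile

import pandas as pd

# Hypothetical toy table: one row per face pair. These columns are
# placeholders for illustration, not the real BFW schema.
pairs = pd.DataFrame(
    {
        "path1": ["a/1.jpg", "a/1.jpg", "b/1.jpg", "b/1.jpg"],
        "path2": ["a/2.jpg", "a/9.jpg", "b/2.jpg", "b/9.jpg"],
        "subgroup": ["group_a", "group_a", "group_b", "group_b"],
        "label": [1, 0, 1, 0],  # 1 = same identity, 0 = different identity
        "score": [0.82, 0.15, 0.77, 0.31],
    }
)

# Round trip through a csv file in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    csv_path = os.path.join(tmp, "pairs.csv")
    pairs.to_csv(csv_path, index=False)
    reloaded = pd.read_csv(csv_path)

# Mean score per subgroup, split by label (one column per label value).
summary = reloaded.groupby(["subgroup", "label"])["score"].mean().unstack("label")
print(summary)
```

Keeping one row per pair with the subgroup as an ordinary column makes per-subgroup analysis a single `groupby` call.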

You can download a copy of all the files in this repository by cloning the git repository:

git clone https://github.com/visionjo/facerec-bias-bfw.git

You'll need a working Python environment to run the code. The recommended way to set up your environment is through the Anaconda Python distribution, which provides the conda package manager. Anaconda can be installed in your user directory and does not interfere with the system Python installation. The required dependencies are specified in the file environment.yml.

We use conda virtual environments to manage the project dependencies in isolation. Thus, you can install our dependencies without causing conflicts with your setup (even with different Python versions).

Run the following command in the repository folder (where environment.yml is located) to create a separate environment and install all required dependencies in it:

conda env create

Before running any code you must activate the conda environment:

source activate ENVIRONMENT_NAME

or, if you're on Windows:

activate ENVIRONMENT_NAME

This will enable the environment for your current terminal session. Any subsequent commands will use software that is installed in the environment.

To build and test the software, produce all results and figures, and compile the manuscript PDF, run this in the top level of the repository:

make all

If all goes well, the manuscript PDF will be placed in manuscript/output.

You can also run individual steps in the process using the Makefiles from the code and manuscript folders. See the respective README.md files for instructions.

Another way of exploring the code results is to execute the Jupyter notebooks individually. To do this, you must first start the notebook server by going into the repository top level and running:

jupyter notebook

This will start the server and open your default web browser to the Jupyter interface. In the page, go into the code/notebooks folder and select the notebook that you wish to view/run.

The notebook is divided into cells (some have text while others have code). Each cell can be executed using Shift + Enter.

Executing a text cell does nothing, while executing a code cell runs the code and produces its output. To execute the whole notebook, run all cells in order.

- Begin Template

- Create demo notebooks

- Add manuscript

- Documentation (sphinx)

- Update README (this)

- Complete test harness

- Modularize (refactor) code

- Add scripts and CLI

All source code is made available under a BSD 3-clause license. You can freely use and modify the code, without warranty, so long as you provide attribution to the authors. See LICENSE.md (LICENSE) for the full license text.

The manuscript text is not open source. The authors reserve the rights to the article content, which is currently submitted for publication in the 2020 IEEE Conference on AMFG.

We would like to thank the PINGA organization on GitHub for the project template used to structure this project.