This repo is the official implementation of the paper:

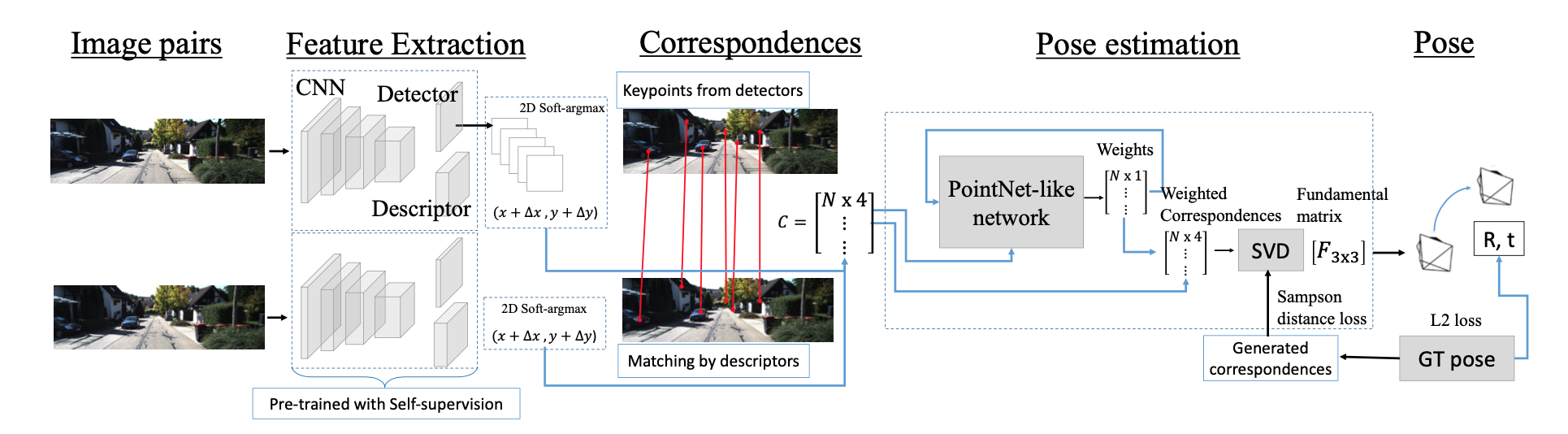

Deep Keypoint-Based Camera Pose Estimation with Geometric Constraints.

You-Yi Jau*, Rui Zhu*, Hao Su, Manmohan Chandraker (*equal contribution)

IROS 2020

See our arxiv, paper and video for more details.

# please clone without git lfs and download from google drive if possible

# "This repository is over its data quota. Purchase more data packs to restore access."

export GIT_LFS_SKIP_SMUDGE=1

git clone https://github.com/eric-yyjau/pytorch-deepFEPE.git

git pull --recurse-submodules

- python == 3.6

- pytorch >= 1.1 (tested in 1.3.1)

- torchvision >= 0.3.0 (tested in 0.4.2)

- cuda (tested in cuda10)

conda create --name py36-deepfepe python=3.6

conda activate py36-deepfepe

pip install -r requirements.txt

pip install -r requirements_torch.txt # install pytorch
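The minimum versions listed above can be checked after installation. The following is a minimal sketch, not part of this repository: `parse_version`, `meets_minimum`, and `unmet` are hypothetical helper names, and the caller is assumed to pass in the installed version strings (for example `torch.__version__`).

```python
import re

# Minimum versions taken from the requirement list above.
MINIMUMS = {"torch": "1.1", "torchvision": "0.3.0"}


def parse_version(text):
    """Turn '1.3.1' or '0.4.2+cu100' into a tuple of ints such as (1, 3, 1)."""
    numbers = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        numbers.append(int(match.group()))
    return tuple(numbers)


def meets_minimum(installed, minimum):
    """Compare two version strings after padding them to the same length."""
    a, b = parse_version(installed), parse_version(minimum)
    width = max(len(a), len(b))
    a = a + (0,) * (width - len(a))
    b = b + (0,) * (width - len(b))
    return a >= b


def unmet(installed):
    """Return the package names whose installed version is below the minimum."""
    return sorted(
        name
        for name, minimum in MINIMUMS.items()
        if not meets_minimum(installed[name], minimum)
    )
```

In the activated environment, something like `unmet({"torch": torch.__version__, "torchvision": torchvision.__version__})` should return an empty list.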

- install superpoint module

git clone https://github.com/eric-yyjau/pytorch-superpoint.git

cd pytorch-superpoint

git checkout module_20200707

# install

pip install --upgrade setuptools wheel

python setup.py bdist_wheel

pip install -e .

- If you get an `ascii` error, set the locale:

export LC_ALL=en_US.UTF-8

export LANG=en_US.UTF-8

export LANGUAGE=en_US.UTF-8

- Data preprocessing

cd deepFEPE_data

- Follow the instructions in the README.

- Process the KITTI dataset for training or testing.

- Process the ApolloScape dataset for testing.

- Key items

dump_root: '[your dumped dataset]' # apollo or kitti

if_qt_loss: true # use pose-loss and F-loss (true) or only F-loss (false)

if_SP: true (use superpoint instead of SIFT)/ false (SIFT)

# deepF

retrain: true (new deepF model)/ false (use pretrained deepF)

train: true (train the model)/ false (freeze weights)

pretrained: [Path to pretrained deepF]

# superpoint

retrain_SP: true (new superpoint model)/ false (use pretrained_SP)

train_SP: true (train SP model)/ false (freeze SP weights)

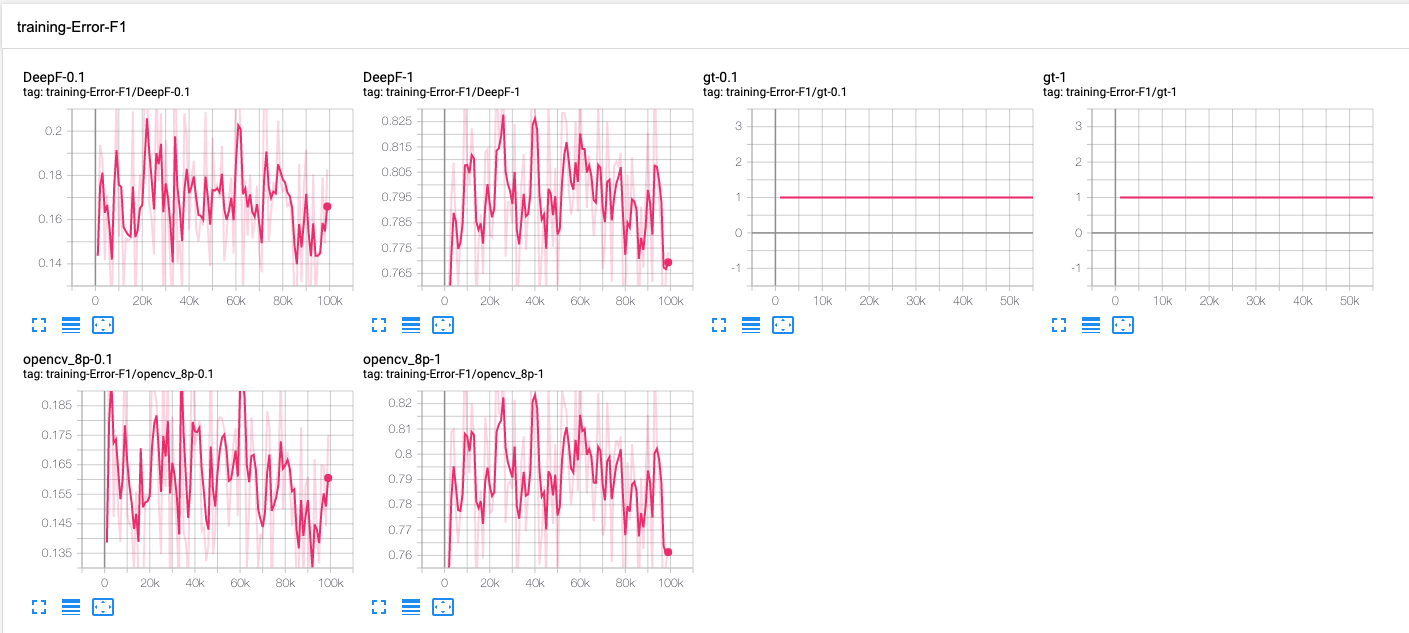

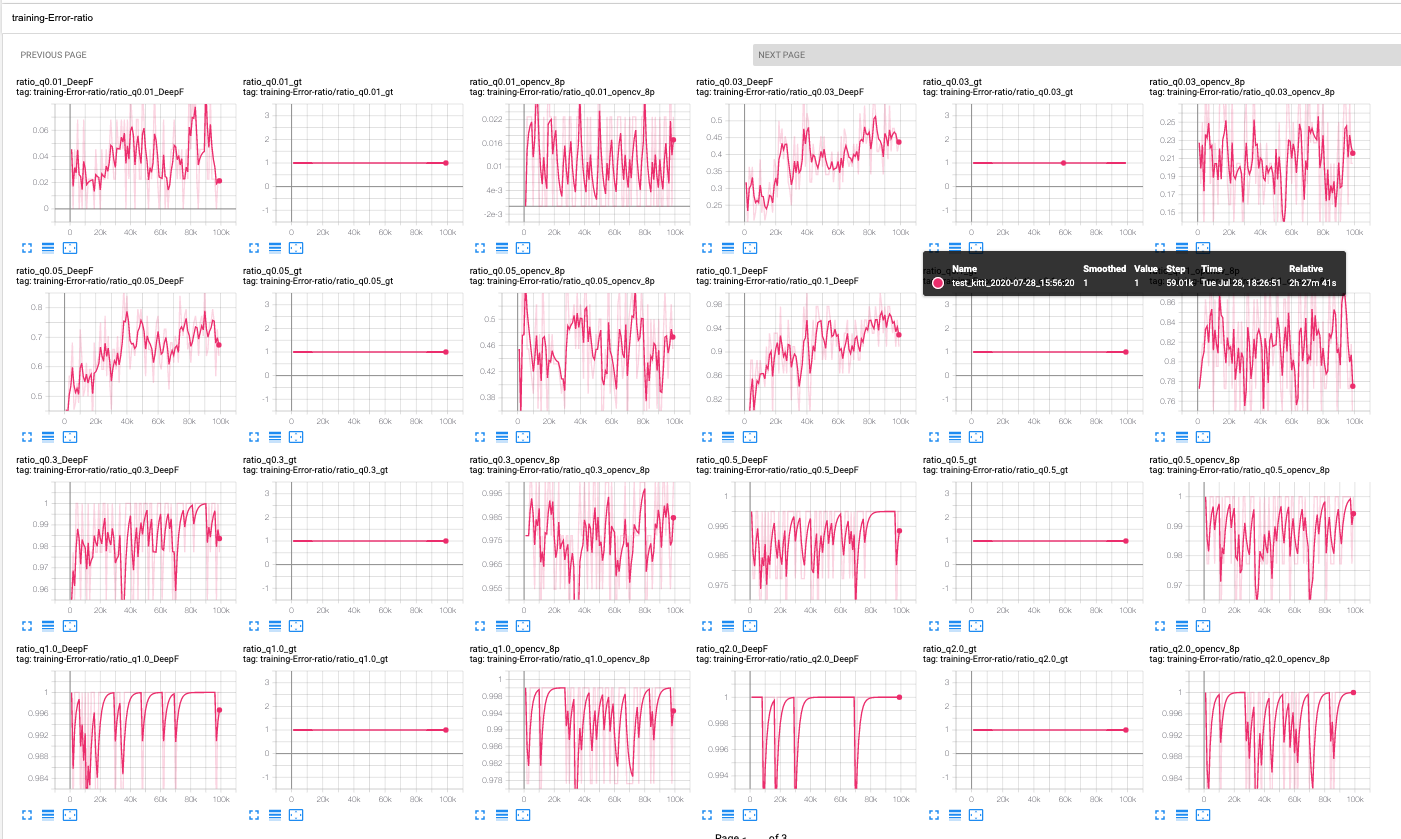

pretrained_SP: [Path to pretrained SP]Prepare the dataset. Use training commands following steps 1 to 3 (skip step 0). Visualize training and validation with Tensorboard.
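To make the interaction of the key config items easier to see, here is a small sketch that turns them into a readable summary. It assumes the keys are available as one flat dict, which may not match how the YAML files nest them; `describe_run` and `missing_paths` are hypothetical helpers, not functions from this repository.

```python
def _model_note(name, retrain, train):
    """Describe one network: where its weights come from and whether they update."""
    start = "new model" if retrain else "pretrained weights"
    update = "trained" if train else "frozen"
    return "{}: {}, {}".format(name, start, update)


def describe_run(cfg):
    """Summarize what a flat dict of the key config items asks for."""
    notes = []
    notes.append("loss: pose-loss and F-loss" if cfg["if_qt_loss"] else "loss: F-loss only")
    notes.append("features: SuperPoint" if cfg["if_SP"] else "features: SIFT")
    notes.append(_model_note("deepF", cfg["retrain"], cfg["train"]))
    if cfg["if_SP"]:
        notes.append(_model_note("SuperPoint", cfg["retrain_SP"], cfg["train_SP"]))
    return notes


def missing_paths(cfg):
    """List path keys that are needed (the matching retrain flag is false) but empty."""
    needed = []
    if not cfg["retrain"] and not cfg.get("pretrained"):
        needed.append("pretrained")
    if cfg["if_SP"] and not cfg["retrain_SP"] and not cfg.get("pretrained_SP"):
        needed.append("pretrained_SP")
    return needed
```

For example, a config with `if_SP: true`, `retrain_SP: false`, and no `pretrained_SP` would be reported by `missing_paths` before a run is started.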

- KITTI

python deepFEPE/train_good.py train_good deepFEPE/configs/kitti_corr_baseline.yaml test_kitti --eval

- ApolloScape

python deepFEPE/train_good.py train_good deepFEPE/configs/apollo_train_corr_baseline.yaml test_apo --eval
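If you want to launch the two training commands above from a script, one option is to assemble the argument list in Python. This is only a sketch: `TRAIN_RUNS` and `train_command` are hypothetical names, and the config paths and experiment names are copied from the commands above.

```python
# Config file and experiment name per dataset, copied from the commands above.
TRAIN_RUNS = {
    "kitti": ("deepFEPE/configs/kitti_corr_baseline.yaml", "test_kitti"),
    "apollo": ("deepFEPE/configs/apollo_train_corr_baseline.yaml", "test_apo"),
}


def train_command(dataset):
    """Build the argument list for the training script (run from the repo root)."""
    config_path, exper_name = TRAIN_RUNS[dataset]
    return [
        "python",
        "deepFEPE/train_good.py",
        "train_good",
        config_path,
        exper_name,
        "--eval",
    ]
```

The list can be passed to `subprocess.run(train_command("kitti"), check=True)` from the repository root.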

- config file: `deepFEPE/configs/kitti_corr_baseline.yaml`

- config:

  - set `dump_root` to `[your dataset path]`

  - set `if_SP` to `False`

- run training script

- Follow the instructions in pytorch-superpoint.

- Or use the pretrained models.

- git lfs file: `deepFEPE/logs/superpoint_models.zip`

  - KITTI: `deepFEPE/logs/superpoint_kitti_heat2_0/checkpoints/superPointNet_50000_checkpoint.pth.tar`

  - Apollo: `deepFEPE/logs/superpoint_apollo_v1/checkpoints/superPointNet_40000_checkpoint.pth.tar`
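Because the repository is cloned with `GIT_LFS_SKIP_SMUDGE=1`, the zip may be a small Git LFS pointer file rather than the real archive, and the checkpoints may not be in place yet. The sketch below checks both; `missing_checkpoints` and `is_lfs_pointer` are hypothetical helpers, and the checkpoint paths are the ones listed above.

```python
from pathlib import Path

# Checkpoint locations listed above, relative to the repository root.
SUPERPOINT_CHECKPOINTS = {
    "kitti": "deepFEPE/logs/superpoint_kitti_heat2_0/checkpoints/superPointNet_50000_checkpoint.pth.tar",
    "apollo": "deepFEPE/logs/superpoint_apollo_v1/checkpoints/superPointNet_40000_checkpoint.pth.tar",
}

# Git LFS pointer files are short text files that begin with this line.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def missing_checkpoints(repo_root):
    """Return the dataset names whose SuperPoint checkpoint file is absent."""
    root = Path(repo_root)
    return sorted(
        name
        for name, relative in SUPERPOINT_CHECKPOINTS.items()
        if not (root / relative).is_file()
    )


def is_lfs_pointer(path):
    """Return True if the file is an LFS pointer instead of the real content."""
    with open(str(path), "rb") as handle:
        head = handle.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX
```

If `is_lfs_pointer("deepFEPE/logs/superpoint_models.zip")` returns `True`, the archive still has to be fetched, either with `git lfs pull` or from Google Drive.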

- config:

  - Set `if_SP` to `True`.

  - Add the `pretrained_SP` path.

- config:

  - Set `train` to `True`.

  - Set `train_SP` to `True`.

  - Set the pretrained paths in `pretrained` and `pretrained_SP`.

  - Set `if_qt_loss: true` for pose-loss.

- KITTI: use `kitti_corr_baselineEval.yaml`

python deepFEPE/train_good.py eval_good deepFEPE/configs/kitti_corr_baselineEval.yaml eval_kitti --test --eval # use testing set (seq 09, 10)

- ApolloScape: use `apollo_train_corr_baselineEval.yaml`

python deepFEPE/train_good.py eval_good deepFEPE/configs/apollo_train_corr_baselineEval.yaml eval_apo --test --eval

You can use our pretrained models for testing. Just put the paths in the config file.

- Download through `git-lfs` or Google Drive

git lfs ls-files # check the files

git lfs fetch

git lfs pull # get the files

git pull

(Refer to the config.yml and checkpoints/ in the folder)

- KITTI models (trained on KITTI):

  - git lfs file: `deepFEPE/logs/kitti_models.zip`

  - SIFT baselines:

    - `baselineTrain_deepF_kitti_fLoss_v1`

    - `baselineTrain_sift_deepF_kittiPoseLoss_v1`

    - `baselineTrain_sift_deepF_poseLoss_v0`

  - SuperPoint baselines:

    - `baselineTrain_kittiSp_deepF_kittiFLoss_v0`

    - `baselineTrain_kittiSp_deepF_kittiPoseLoss_v1`

  - DeepFEPE:

    - `baselineTrain_kittiSp_deepF_end_kittiFLoss_freezeSp_v1`

    - `baselineTrain_kittiSp_deepF_end_kittiFLossPoseLoss_v1_freezeSp`

    - `baselineTrain_kittiSp_kittiDeepF_end_kittiPoseLoss_v0`

- Apollo models (trained on Apollo, under `apollo/`):

  - git lfs file: `deepFEPE/logs/apollo_models.zip`

  - SIFT baselines:

    - `baselineTrain_sift_deepF_fLoss_apolloseq2_v1`

    - `baselineTrain_sift_deepF_poseLoss_apolloseq2_v0`

    - `baselineTrain_sift_deepF_apolloFLossPoseLoss_v0`

  - SuperPoint baselines:

    - `baselineTrain_apolloSp_deepF_fLoss_apolloseq2_v0`

    - `baselineTrain_apolloSp_deepF_poseLoss_apolloseq2_v0`

  - DeepFEPE:

    - `baselineTrain_apolloSp_deepF_fLoss_apolloseq2_end_v0_freezeSp_fLoss`

    - `baselineTrain_apolloSp_deepF_poseLoss_apolloseq2_end_v0`

    - `baselineTrain_apolloSp_deepF_fLossPoseLoss_apolloseq2_end_v0_freezeSp_fLoss`

cd deepFEPE/

python run_eval_good.py --help

- update your dataset path in `configs/kitti_corr_baselineEval.yaml` and `configs/apollo_train_corr_baselineEval.yaml`

- set the model names in `deepFEPE/run_eval_good.py`

def get_sequences(...):

    kitti_ablation = { ... }

    apollo_ablation = { ... }

- check if the models exist

# kitti models on kitti dataset

python run_eval_good.py --dataset kitti --model_base kitti --exper_path logs --check_exist

# kitti models on apollo dataset

python run_eval_good.py --dataset apollo --model_base kitti --exper_path logs --check_exist

# apollo models

python run_eval_good.py --dataset kitti --model_base apollo --exper_path logs/apollo --check_exist

- run the evaluation (dataset should be ready)

python run_eval_good.py --dataset kitti --model_base kitti --exper_path logs --runEval

- open jupyter notebook

- read the sequences from the config file: `table_trans_rot_kitti_apollo.yaml`

base_path: '/home/yoyee/Documents/deepSfm/logs/' # set the base path for checkpoints

seq_dict_test:

  Si-D.k: ['eval_kitti', 'DeepF_err_ratio.npz', '07/29/2020']

- print out the numbers based on the settings in the config file

jupyter notebook

# navigate to `notebooks/exp_process_table.ipynb`

git lfs ls-files # check the files

git lfs fetch

git lfs pull # get the files

- unzip

cd deepFEPE/logs/results/

unzip 1107.zip

unzip 1114.zip

unzip new_1119.zip

- link the extracted files into the same folder

ln -s 1114/* . # do the same for the other folders
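The unzip and symlink steps above can also be scripted. This is a rough sketch under one assumption that the shell commands only imply: each archive extracts into a folder named after the archive (for example `1114.zip` into `1114/`). `unpack_and_link` is a hypothetical helper, not part of this repository.

```python
import os
import zipfile
from pathlib import Path


def unpack_and_link(results_dir, archive_names):
    """Extract each archive, then symlink its folder contents into results_dir."""
    results = Path(results_dir)
    linked = []
    for archive in archive_names:
        with zipfile.ZipFile(str(results / archive)) as zf:
            zf.extractall(str(results))
        # Assumption: the archive unpacks into a folder named like the zip file.
        folder = results / Path(archive).stem
        if not folder.is_dir():
            continue
        for entry in sorted(folder.iterdir()):
            link = results / entry.name
            if os.path.lexists(str(link)):
                continue  # keep whatever is already there
            # Relative target, equivalent to running `ln -s 1114/* .` in results_dir.
            os.symlink(os.path.join(folder.name, entry.name), str(link))
            linked.append(entry.name)
    return linked
```

A call such as `unpack_and_link("deepFEPE/logs/results", ["1107.zip", "1114.zip", "new_1119.zip"])` would cover the three archives listed above. Entries that already exist in the target folder are skipped rather than overwritten.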

- Then, you can follow the instructions in "Read out the results".

- Trajectory results are in `deepFEPE/logs/results/trajectory.zip`.

jupyter notebook

# navigate to `notebooks/exp_process_table.ipynb`

- convert the `relative_pose_body` to absolute poses

- export the poses to two files for sequences `09` and `10`.

- use `kitti-odom-eval` for evaluation.
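The conversion step above can be sketched in plain Python. This is an illustration, not the repository's code, and it rests on an assumption about the convention: each relative pose is taken to be a 4x4 homogeneous transform that maps coordinates of frame i+1 into frame i, so absolute poses are obtained by right-multiplying. If `relative_pose_body` stores the inverse, invert each matrix first. The output format is the KITTI odometry pose format: one line per frame with the first three rows of the 4x4 matrix in row-major order (12 values).

```python
def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
        for i in range(4)
    ]


def identity4():
    """Return a fresh 4x4 identity matrix."""
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def accumulate_poses(relative_poses):
    """Chain relative poses into absolute poses, starting from the identity.

    Assumes relative_poses[i] maps frame i+1 coordinates into frame i.
    """
    poses = [identity4()]
    for relative in relative_poses:
        poses.append(matmul4(poses[-1], relative))
    return poses


def to_kitti_lines(poses):
    """Format each pose as 12 space-separated values (top 3 rows, row-major)."""
    return [
        " ".join("{:.6e}".format(value) for row in pose[:3] for value in row)
        for pose in poses
    ]
```

Writing `"\n".join(to_kitti_lines(poses))` to one text file per sequence (`09` and `10`) gives files in the layout that KITTI odometry evaluation tools read.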

tensorboard --logdir=runs/train_good

- visualization

Please cite the following papers.

- DeepFEPE

@INPROCEEDINGS{2020_jau_zhu_deepFEPE,

author={Y. -Y. {Jau} and R. {Zhu} and H. {Su} and M. {Chandraker}},

booktitle={2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

title={Deep Keypoint-Based Camera Pose Estimation with Geometric Constraints},

year={2020},

volume={},

number={},

pages={4950-4957},

doi={10.1109/IROS45743.2020.9341229}}

- SuperPoint

@inproceedings{detone2018superpoint,

title={Superpoint: Self-supervised interest point detection and description},

author={DeTone, Daniel and Malisiewicz, Tomasz and Rabinovich, Andrew},

booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops},

pages={224--236},

year={2018}

}

- DeepF

@inproceedings{ranftl2018deep,

title={Deep fundamental matrix estimation},

author={Ranftl, Ren{\'e} and Koltun, Vladlen},

booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},

pages={284--299},

year={2018}

}

This implementation is developed by You-Yi Jau and Rui Zhu. Please contact You-Yi for any problems.

DeepFEPE is released under the MIT License.