The goal of the project is to understand how the supply side of vehicle availability affects the electric vehicle market. It tracks all vehicles available for sale on online marketplaces (Cars.com, Autotrader) across the country, using a web scraper that relies on publicly available data.

The main variables that are tracked are:

| Variable | Description |
|---|---|
| first_date | Date this vehicle was first available for sale |
| last_date | Date this vehicle was last available for sale |
| duration | Number of days this vehicle was available for sale |
| price | Price of the vehicle, all price changes are tracked |
| seller_id | Seller of the car, all seller changes are tracked |
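As a rough illustration of how these variables relate, the sketch below models one listing as a plain Python dataclass. The `Listing` class is hypothetical and not part of the project, and it assumes that `duration` is simply the day difference between `last_date` and `first_date`; the project may count days differently.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Listing:
    """Hypothetical in-memory view of one tracked vehicle listing."""

    first_date: date  # date the vehicle was first seen for sale
    last_date: date   # date the vehicle was last seen for sale
    price: int        # most recent price
    seller_id: int    # most recent seller

    @property
    def duration(self) -> int:
        # Assumption: duration is the day difference between the two dates.
        return (self.last_date - self.first_date).days


listing = Listing(date(2020, 3, 1), date(2020, 3, 15), price=32500, seller_id=42)
print(listing.duration)  # 14
```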

There are 4 main project components: pscraper-db, pscraper-lib, pscraper-tool, and pscraper-dashboard.

This is a backend app written in Python 3.7 using Django 3 and Django REST Framework. It provides access to the MySQL database that holds the data through 3 main API endpoints. The backend app is hosted on Heroku, and the MySQL database is hosted on Cloud SQL.

It has 3 main models: vehicle, seller, and history.

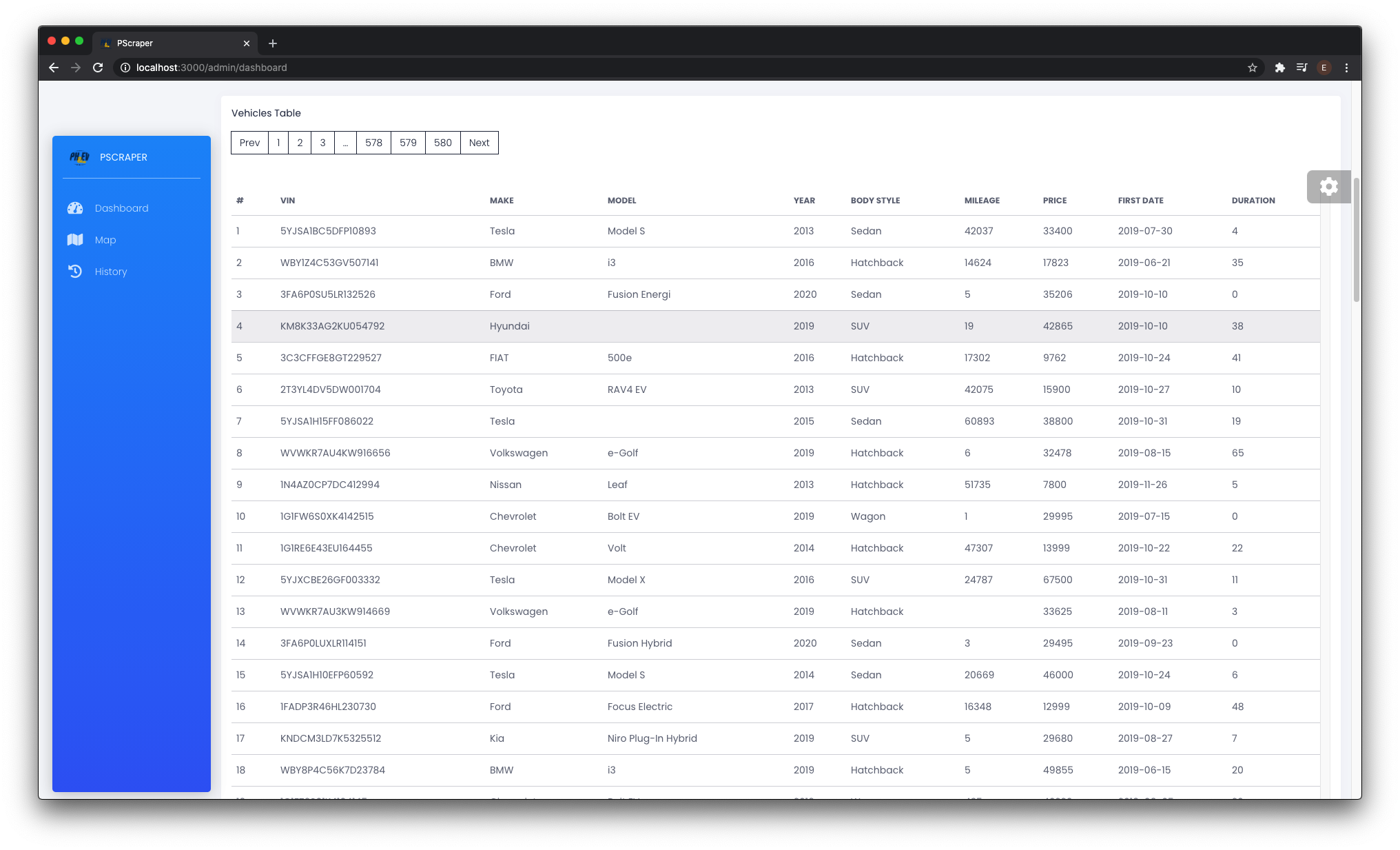

- The vehicle model has the most up-to-date information on the vehicle, such as VIN, Make, Model, Price, etc.

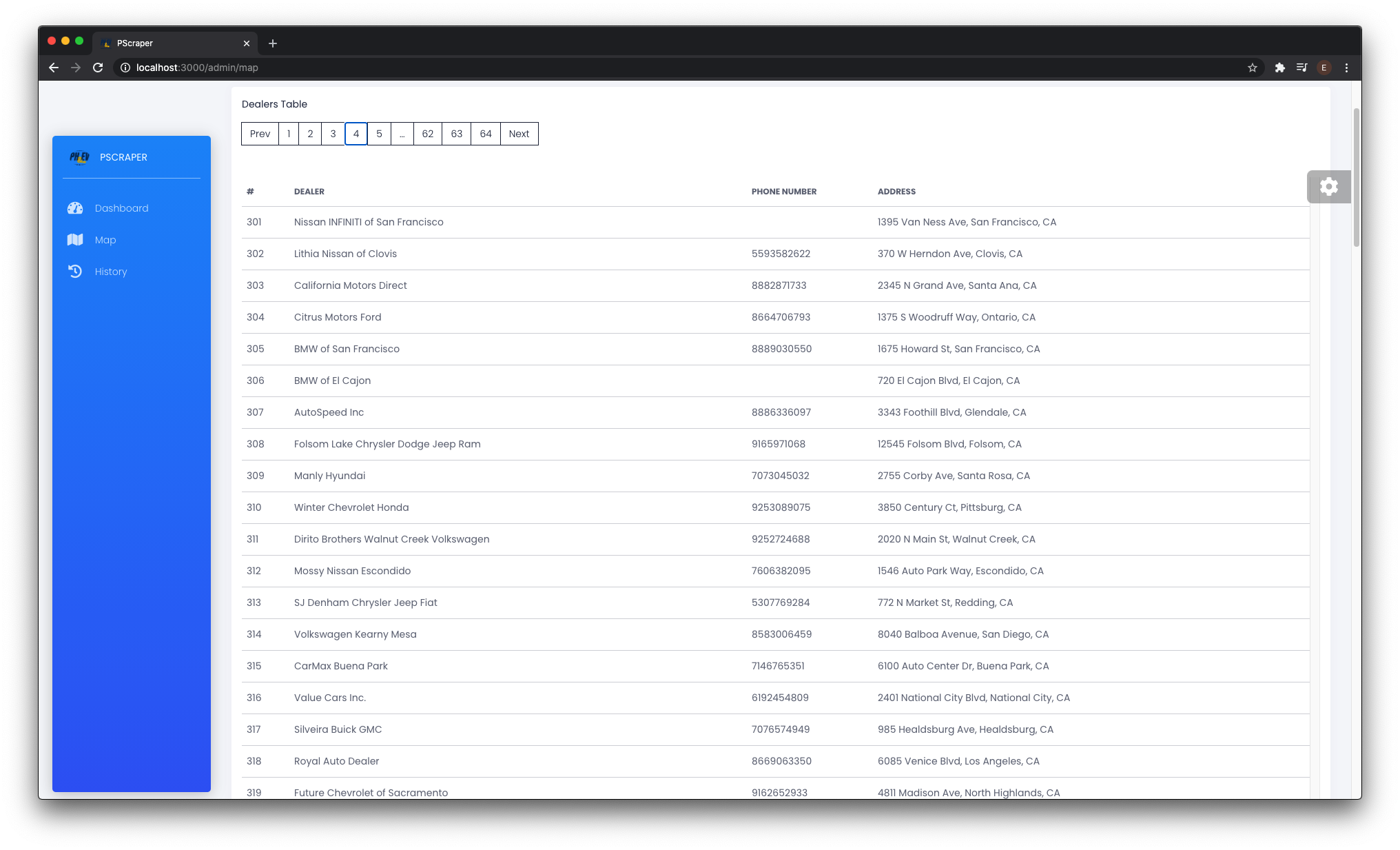

- The seller model has information on the sellers, such as name, address, etc.

- The history model is used to track changes in price and seller.
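The relationship between the vehicle and history models can be sketched in plain Python, without Django. This is only an illustration under assumptions: the `Vehicle` and `HistoryEntry` classes, their fields, and the `update` method are hypothetical and do not reflect the actual schema. The idea shown is that the vehicle keeps the latest values while each change in price or seller is appended to a history log.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class HistoryEntry:
    """One recorded change (hypothetical structure)."""

    changed_on: date
    field_name: str  # "price" or "seller_id"
    old_value: int
    new_value: int


@dataclass
class Vehicle:
    """Holds the most recent values; changes go to `history`."""

    vin: str
    price: int
    seller_id: int
    history: List[HistoryEntry] = field(default_factory=list)

    def update(self, seen_on: date, price: int, seller_id: int) -> None:
        # Compare each tracked field with the stored value and log differences.
        for name, new in (("price", price), ("seller_id", seller_id)):
            old = getattr(self, name)
            if old != new:
                self.history.append(HistoryEntry(seen_on, name, old, new))
                setattr(self, name, new)


car = Vehicle(vin="EXAMPLEVIN0000001", price=30000, seller_id=7)
car.update(date(2020, 4, 1), price=29500, seller_id=7)  # price drop
car.update(date(2020, 4, 8), price=29500, seller_id=9)  # seller change
print([(h.field_name, h.old_value, h.new_value) for h in car.history])
```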

For more information on this project see pscraper-db.

This is a Python library with three packages:

The main package of the library. Among other things, it provides one main API, `scrape`, which runs the scraping process with the given parameters and saves the results to the database. Vehicles are currently processed sequentially; work is in progress to move to a multithreaded approach to improve performance.

The marketplaces from which data is scraped are Cars.com and AutoTrader.
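To illustrate the difference between sequential processing and a multithreaded approach, here is a minimal sketch using the standard library's `ThreadPoolExecutor`. It is not the library's implementation: `scrape_sequential`, `scrape_threaded`, and `fake_process` are hypothetical names, and the per-vehicle work is replaced by a stand-in function.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def scrape_sequential(urls: List[str], process: Callable[[str], str]) -> List[str]:
    # Process one vehicle page after another.
    return [process(url) for url in urls]


def scrape_threaded(
    urls: List[str], process: Callable[[str], str], max_workers: int = 8
) -> List[str]:
    # Process vehicle pages concurrently; map() returns results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(process, urls))


def fake_process(url: str) -> str:
    # Stand-in for the real per-vehicle work (fetch, parse, save).
    return url.rsplit("/", 1)[-1].upper()


urls = [f"https://example.com/listing/{i}" for i in range(5)]
sequential_result = scrape_sequential(urls, fake_process)
threaded_result = scrape_threaded(urls, fake_process)
```

Threads tend to help here because scraping is dominated by network waits rather than CPU work, though the real speedup depends on rate limits and on how the database writes are handled.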

This package has APIs that interact with the endpoints provided by the backend application. It is used to create, update or retrieve records on a vehicle or seller.

Provides several miscellaneous helpers used for the scraping and reporting process.

Since the scraping function is designed to be autonomous, the functions are configured so that if any failure occurs, a Slack message is sent to a specific channel.
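One way to get this behavior is a decorator that reports any exception before re-raising it. The sketch below is an assumption about how such a helper could look, not the library's code: `notify_on_failure`, `post_to_slack`, and `scrape_page` are hypothetical names. `post_to_slack` assumes a Slack incoming webhook, which accepts a JSON body with a `text` field; the webhook URL must be supplied by the caller.

```python
import functools
import json
import urllib.request
from typing import Callable, List


def post_to_slack(webhook_url: str, text: str) -> None:
    """Send `text` to a Slack incoming webhook (URL supplied by the caller)."""
    body = json.dumps({"text": text}).encode("utf-8")
    request = urllib.request.Request(
        webhook_url, data=body, headers={"Content-Type": "application/json"}
    )
    urllib.request.urlopen(request, timeout=10)


def notify_on_failure(notify: Callable[[str], None]):
    """Decorator factory: call `notify` with a message when the function raises."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            try:
                return func(*args, **kwargs)
            except Exception as exc:
                notify(f"{func.__name__} failed: {exc!r}")
                raise  # keep the original error visible to the caller

        return wrapper

    return decorator


# For demonstration, collect messages in a list instead of calling Slack.
messages: List[str] = []


@notify_on_failure(messages.append)
def scrape_page(url: str) -> None:
    raise ValueError("bad page")
```

In real use, the notifier could be `functools.partial(post_to_slack, webhook_url)` so that failures reach the channel tied to that webhook.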

For more information on this project see pscraper-lib.

This project contains the script that performs web scraping daily. At the end of the scraping process, a report is built and sent to a Slack channel. The command to start the scheduled scraping is:

    $ nohup ./scrape.py &

This is run inside a tmux session on a Google Cloud Compute Engine instance so that the process can run without any supervision.

For more information on this project see pscraper-tool.

WIP

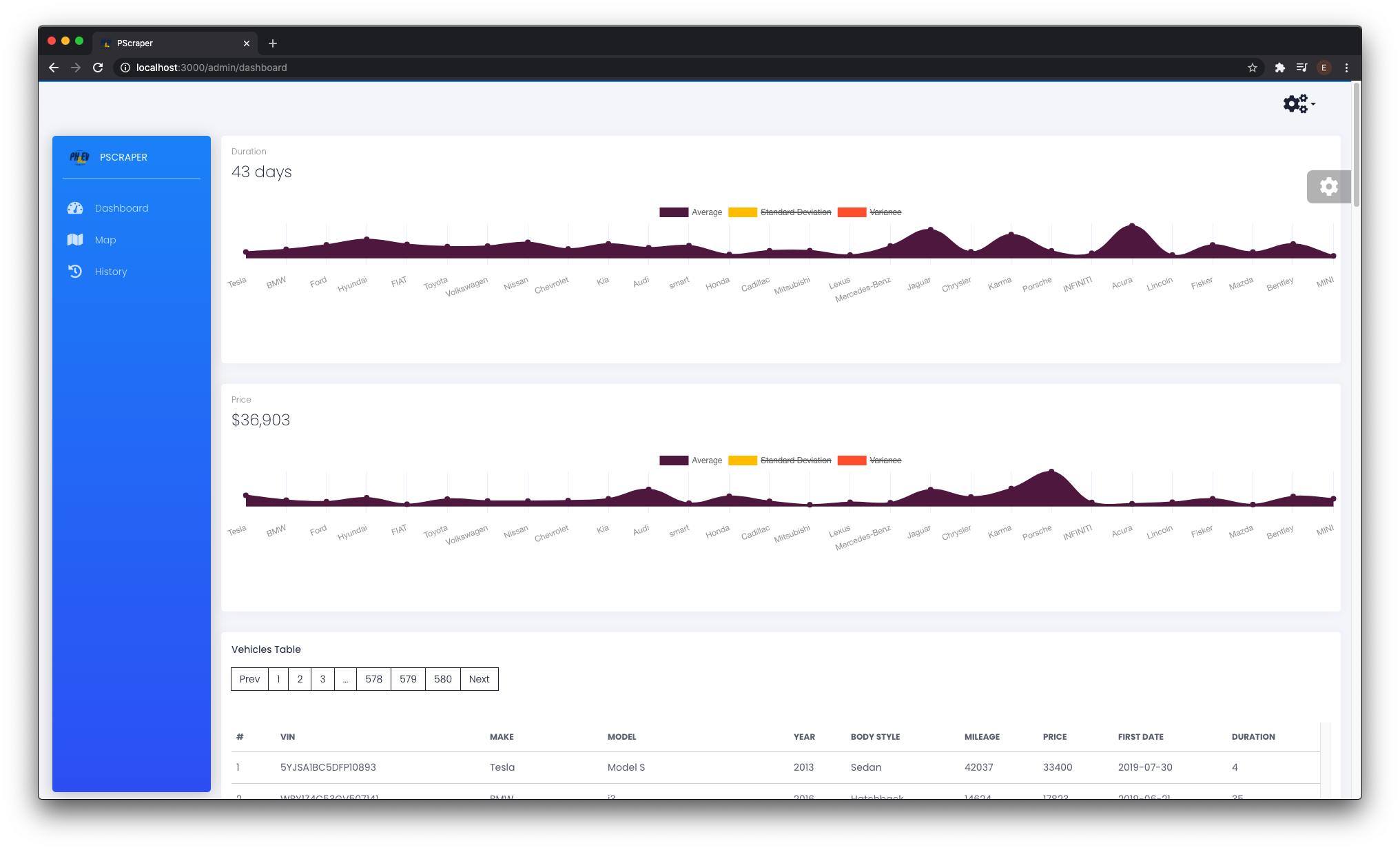

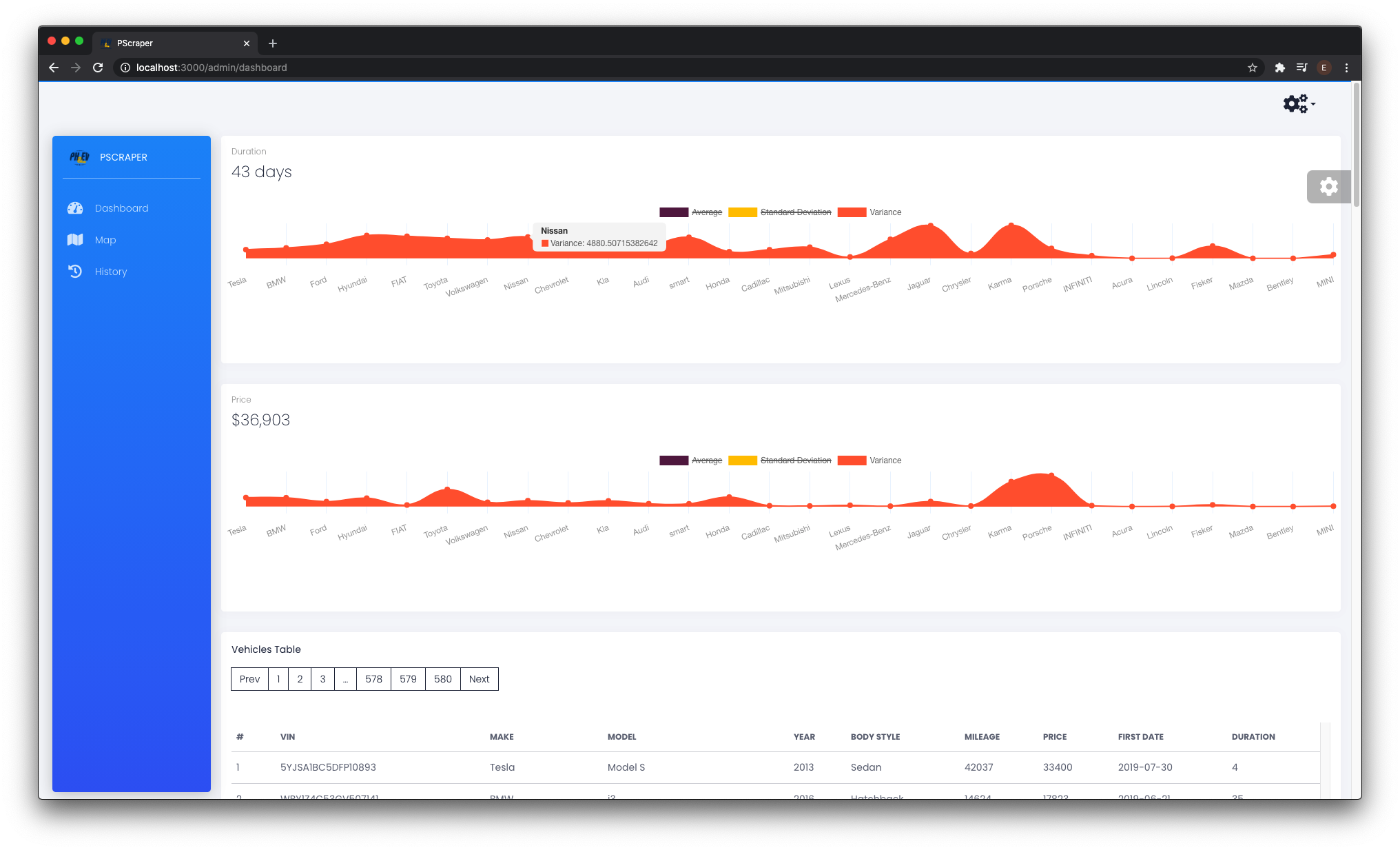

A frontend dashboard built with ReactJS. More uses may come later, but for now it is planned mainly as a data visualization tool. Users are authenticated through the Django framework that is already set up, and the dashboard will show different views and layouts based on the user's permissions.

For more information on this project see pscraper-dashboard.