🚧 This project is still under development

The Arena Evaluation package provides tools to record, evaluate, and plot navigational metrics to evaluate ROS navigation planners. It is best suited for usage with our arena-rosnav repository but can also be integrated into any other ROS-based project.

It consists of 3 parts:

- To integrate arena evaluation into your project, see the guide here

- To use it alongside the arena repository, install the following requirements:

pip install scikit-learn seaborn pandas matplotlib

To record data as a csv file during evaluation runs, set the flag use_recorder:="true" in your roslaunch command. For example:

workon rosnav

roslaunch arena_bringup start_arena_gazebo.launch world:="aws_house" scenario_file:="aws_house_obs05.json" local_planner:="teb" model:="turtlebot3_burger" use_recorder:="true"

The data will be recorded in .../catkin_ws/src/forks/arena-evaluation/01_recording.

The script stops recording as soon as the agent finishes the scenario and stops moving, or once a termination criterion is met. The termination criteria and the recording frequency can be set in data_recorder_config.yaml.

max_episodes: 15 # terminates simulation upon reaching xth episode

max_time: 1200 # terminates simulation after x seconds

record_frequency: 0.2 # time between actions recorded

NOTE: Leaving the simulation running for a long time after finishing the set number of repetitions does not influence the evaluation results as long as the agent stops running. Also, the last episode of every evaluation run is pruned before evaluating the recorded data.
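To make the two termination criteria concrete, here is a minimal Python sketch of how a recorder could check them. The function name should_stop and the exact comparisons (stopping once a limit is reached) are assumptions for illustration, not the package's actual implementation; only the keys max_episodes, max_time and record_frequency come from data_recorder_config.yaml.

```python
# Illustrative sketch only: the real recorder may check these limits differently.
config = {
    "max_episodes": 15,       # terminate upon reaching this episode
    "max_time": 1200,         # terminate after this many seconds
    "record_frequency": 0.2,  # time between recorded actions
}


def should_stop(episode: int, elapsed_seconds: float, cfg: dict) -> bool:
    """Return True once either termination criterion is met (assumed semantics)."""
    return episode >= cfg["max_episodes"] or elapsed_seconds >= cfg["max_time"]


if __name__ == "__main__":
    print(should_stop(3, 60.0, config))    # neither limit reached
    print(should_stop(15, 60.0, config))   # episode limit reached
    print(should_stop(3, 1200.0, config))  # time limit reached
```

Whichever criterion is met first ends the run, so a low max_time can cut a run short before max_episodes is reached.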

NOTE: Git sometimes ignores csv files, in which case you have to force-add them with git add -f. We recommend using the commands below.

roscd arena-evaluation && git add -f .

git commit -m "evaluation run"

git pull

git push

- After finishing all evaluation runs and recording the desired csv files, run the get_metrics.py script in /02_evaluation. This script evaluates the raw data recorded during the evaluation runs and stores it in one or more .ftr files with the following naming convention: data_<planner>_<robot>_<map>_<obstacles>.ftr. During this process, all csv files are moved from /01_recording to /02_evaluation into a directory with the naming convention data_<timestamp>. The ftr file is stored in /02_evaluation.
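The naming convention can be illustrated with a short Python sketch. The helper ftr_filename is hypothetical and not part of the package, and the example values (in particular "obs05" for the obstacles field) are assumptions derived from the roslaunch example in this README.

```python
def ftr_filename(planner: str, robot: str, map_name: str, obstacles: str) -> str:
    """Build a file name following data_<planner>_<robot>_<map>_<obstacles>.ftr."""
    return f"data_{planner}_{robot}_{map_name}_{obstacles}.ftr"


if __name__ == "__main__":
    # Example values are assumptions based on the roslaunch command in this README.
    print(ftr_filename("teb", "turtlebot3_burger", "aws_house", "obs05"))
```

Note that fields such as turtlebot3_burger or aws_house contain underscores themselves, so splitting a file name on "_" does not reliably recover the individual fields.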

Some configurations can be set in the get_metrics_config.yaml file. Those are:

- robot_radius: dictionary of robot radii, relevant for collision measurement

- time_out_treshold: threshold for episode timeout in seconds

- collision_treshold: threshold for the allowed number of collisions until an episode is deemed failed

NOTE: Do NOT change the get_metrics_config_default.yaml!
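As a rough illustration of how the two thresholds could interact, the following Python sketch labels a single episode. The function classify_episode, the label strings, the threshold values and the exact comparison operators are all assumptions made for this example; get_metrics.py may apply the thresholds differently.

```python
def classify_episode(duration_s: float, collisions: int,
                     time_out_treshold: float, collision_treshold: int) -> str:
    """Label one episode using assumed threshold semantics."""
    if duration_s >= time_out_treshold:
        return "timeout"  # assumed: reaching the time limit counts as a timeout
    if collisions > collision_treshold:
        return "failed"   # assumed: more collisions than allowed means failure
    return "success"


if __name__ == "__main__":
    # Threshold values below are made up for illustration, not package defaults.
    print(classify_episode(42.0, 0, time_out_treshold=180, collision_treshold=2))
    print(classify_episode(42.0, 3, time_out_treshold=180, collision_treshold=2))
    print(classify_episode(200.0, 0, time_out_treshold=180, collision_treshold=2))
```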

We recommend using the code below:

workon rosnav && roscd arena-evaluation/02_evaluation && python get_metrics.py

NOTE: If you want to reuse csv files, simply move the desired csv files from the data directory to /01_recording and execute the get_metrics.py script again.

The repository can be used in two ways:

- Firstly, it can be used to evaluate robot performance within a scenario run, e.g. by visualizing the velocity distribution within each simulation run (this usage mode is still under development).

- Secondly, it can be used to compare robot performance between different scenarios. For this use case, continue with the following step 2.

- The observations of the individual runs can be joined into one large dataset, using the following script:

workon rosnav && roscd arena-evaluation/02_evaluation && python combine_into_one_dataset.py

This script will combine all ftr files in the 02_evaluation/ftr_data folder into one large ftr file, taking into account the planner, robot etc.
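Conceptually, combining runs means tagging each run's rows with its metadata and stacking the tables. The pandas sketch below shows that idea on in-memory toy data; it is not the code of combine_into_one_dataset.py, and the helper combine_runs, the column names episode and time, the values and the second robot name are all hypothetical.

```python
import pandas as pd


def combine_runs(runs):
    """Stack per-run tables, adding each run's metadata as extra columns.

    runs: list of (metadata dict, DataFrame) pairs.
    """
    frames = [df.assign(**meta) for meta, df in runs]
    return pd.concat(frames, ignore_index=True)


if __name__ == "__main__":
    # Toy data for illustration only; real runs would be loaded from .ftr files,
    # e.g. with pandas.read_feather (which requires pyarrow).
    run_a = ({"planner": "teb", "robot": "turtlebot3_burger"},
             pd.DataFrame({"episode": [0, 1], "time": [10.0, 12.5]}))
    run_b = ({"planner": "teb", "robot": "other_robot"},
             pd.DataFrame({"episode": [0], "time": [9.0]}))
    print(combine_runs([run_a, run_b]))
```

Keeping planner and robot as columns means the combined table can later be grouped or filtered by them when plotting.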

The data prepared in the previous steps can be visualized in two different modes: automated or custom.

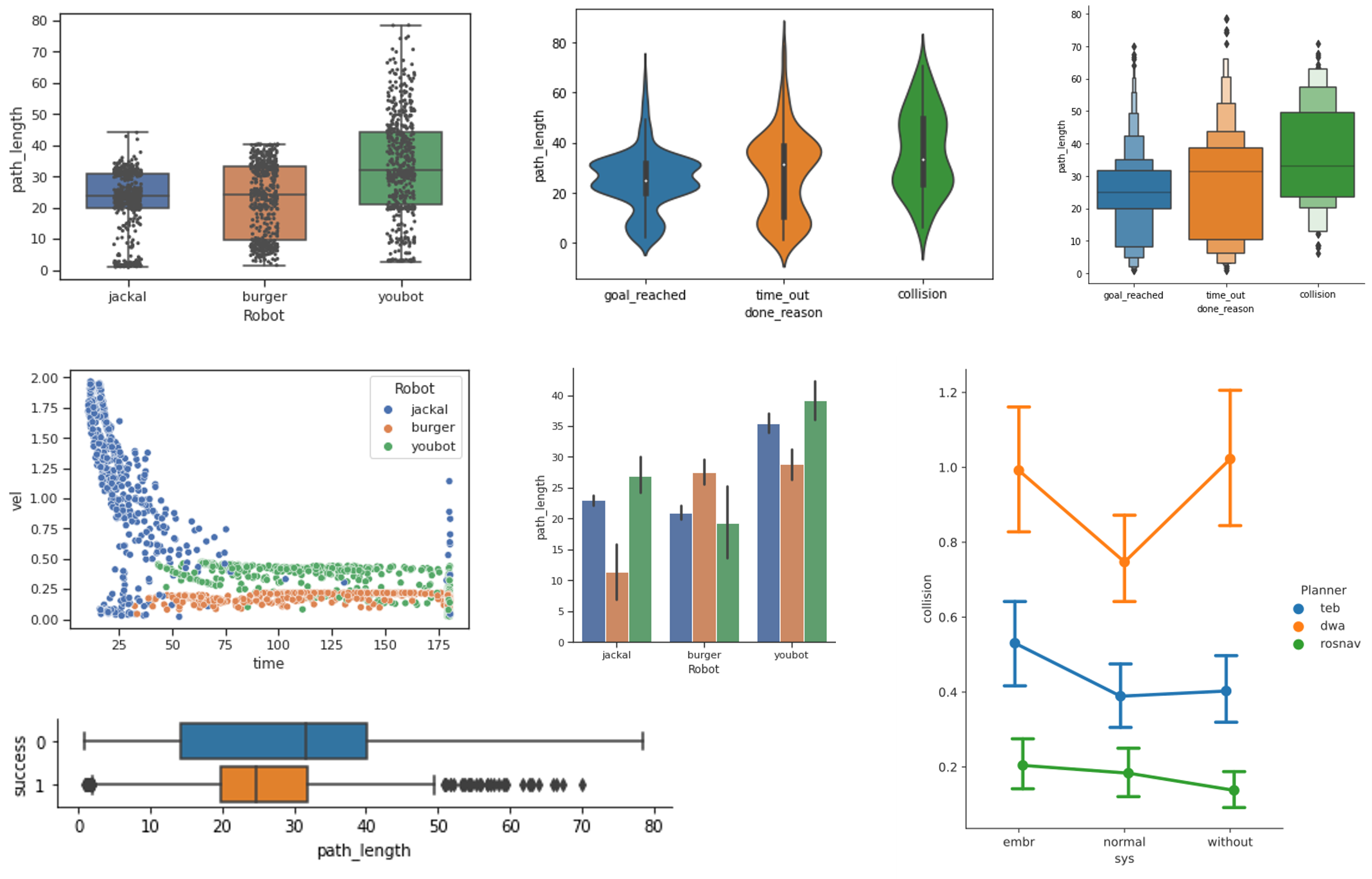

Open the following notebook to visualize your data. It contains a step-by-step guide on how to create an accurate visual representation of your data. For examples of supported plots (and when to use which plot), refer to the documentation here.

- run:

roscd arena-evaluation

- run:

python world_complexity.py --image_path {IMAGE_PATH} --yaml_path {YAML_PATH} --dest_path {DEST_PATH}

with:

IMAGE_PATH: path to the floor plan of your world. Usually in .pgm format

YAML_PATH: path to the .yaml description file of your floor plan

DEST_PATH: location to store the complexity data about your map

Example launch:

python world_complexity.py --image_path ~/catkin_ws/src/forks/arena-tools/aws_house/map.pgm --yaml_path ~/catkin_ws/src/forks/arena-tools/aws_house/map.yaml --dest_path ~/catkin_ws/src/forks/arena-tools/aws_house
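When several maps need to be processed, the command can be assembled programmatically. The Python sketch below builds the argument list for one map folder; the helper world_complexity_cmd is hypothetical, and it assumes that the folder contains map.pgm and map.yaml and that the results should be stored in the same folder, as in the example launch above.

```python
from pathlib import Path


def world_complexity_cmd(map_dir: str) -> list:
    """Build the world_complexity.py call for a folder holding map.pgm and map.yaml."""
    folder = Path(map_dir)
    return [
        "python", "world_complexity.py",
        "--image_path", str(folder / "map.pgm"),
        "--yaml_path", str(folder / "map.yaml"),
        "--dest_path", str(folder),  # assumed: store results next to the map
    ]


if __name__ == "__main__":
    # The folder path is a placeholder; adjust it to your workspace.
    print(" ".join(world_complexity_cmd("/path/to/aws_house")))
```

The returned list can be passed to subprocess.run(cmd, check=True) from within the arena-evaluation directory.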