This repository contains the official implementation of the paper "Cross-modal Learning for Image-Guided Point Cloud Shape Completion" (NeurIPS 2022).

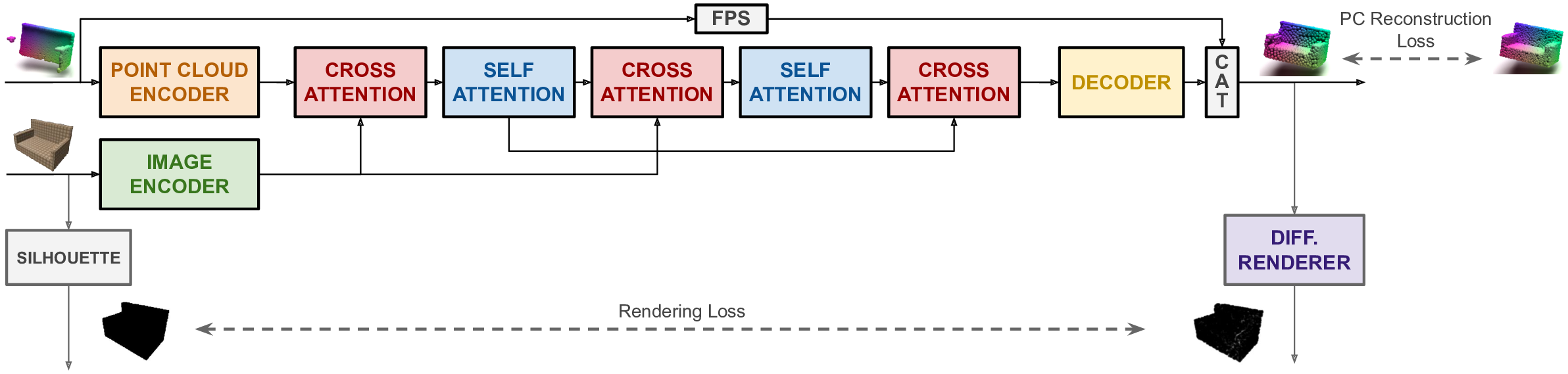

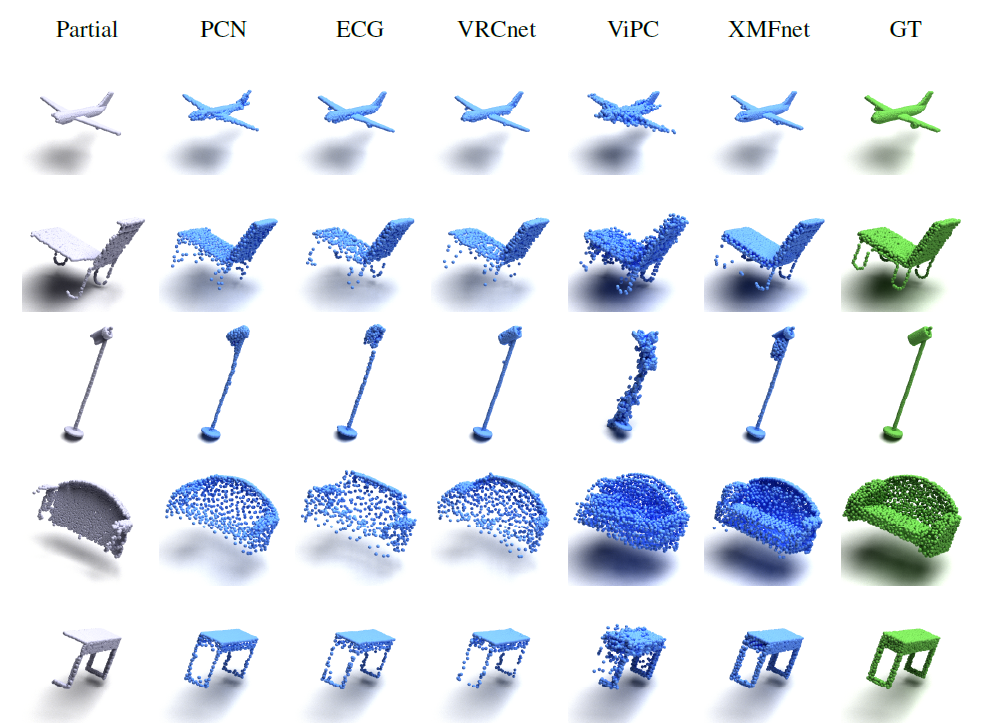

In this paper we explore the recent topic of point cloud completion, guided by an auxiliary image. We show how it is possible to effectively combine the information from the two modalities in a localized latent space, thus avoiding the need for complex point cloud reconstruction methods from single views used by the state-of-the-art. We also investigate a novel weakly-supervised setting where the auxiliary image provides a supervisory signal to the training process by using a differentiable renderer on the completed point cloud to measure fidelity in the image space. Experiments show significant improvements over state-of-the-art supervised methods for both unimodal and multimodal completion. We also show the effectiveness of the weakly-supervised approach which outperforms a number of supervised methods and is competitive with the latest supervised models only exploiting point cloud information.

The code has been developed with the following dependencies:

- Python 3.8

- CUDA version 10.2

- G++ or GCC 7.5.0

- PyTorch 1.10.2
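As a quick sanity check against the versions listed above, the following minimal sketch reports what is installed locally. The function name `environment_report` is hypothetical and not part of this repository; it only prints what it finds and does not enforce any version.

```python
import importlib
import platform
import shutil
import subprocess


def environment_report():
    """Collect the versions of the tools listed in the dependency list.

    Hypothetical helper for illustration; it is not part of this repository.
    """
    report = {"python": platform.python_version(), "torch": None, "cuda": None, "gcc": None}

    # PyTorch is optional here so the check also runs before installation.
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        pass
    else:
        report["torch"] = torch.__version__
        # CUDA version PyTorch was built with (None for CPU-only builds).
        report["cuda"] = torch.version.cuda

    # Report the first line of `gcc --version` if a compiler is on PATH.
    gcc = shutil.which("gcc")
    if gcc is not None:
        result = subprocess.run([gcc, "--version"], capture_output=True, text=True)
        lines = result.stdout.splitlines()
        report["gcc"] = lines[0] if lines else None

    return report


if __name__ == "__main__":
    for name, version in environment_report().items():
        print(f"{name}: {version}")
```

Entries reported as `None` indicate a component that could not be found in the current environment.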

To set up the environment and install all the required packages, run:

sh setup.sh

The script automatically creates the environment and installs all the required packages.

If something goes wrong, follow the steps in setup.sh manually.

The dataset is borrowed from "View-guided point cloud completion".

First, download the ShapeNetViPC-Dataset (Dropbox, Baidu) (143GB, code: ar8l). Then run cat ShapeNetViPC-Dataset.tar.gz* | tar zx to extract the archive. The resulting ShapeNetViPC-Dataset directory contains three folders: ShapeNetViPC-Partial, ShapeNetViPC-GT and ShapeNetViPC-View.
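On systems without cat and tar, the same extraction can be sketched in Python. The shell command concatenates the archive parts in name order and streams the result into a gzip-compressed tar reader; the sketch below does the same. The names `ChainedParts` and `extract_split_archive` are hypothetical, and the only assumption about the part files is that they match the ShapeNetViPC-Dataset.tar.gz* pattern used above.

```python
import glob
import tarfile
from pathlib import Path


class ChainedParts:
    """Minimal read-only file-like object that reads several files back to back."""

    def __init__(self, paths):
        self._paths = list(paths)
        self._index = 0
        self._handle = None

    def read(self, size=-1):
        while True:
            if self._handle is None:
                if self._index >= len(self._paths):
                    return b""  # every part has been consumed
                self._handle = open(self._paths[self._index], "rb")
                self._index += 1
            data = self._handle.read(size)
            if data:
                return data
            # Current part is exhausted: move on to the next one.
            self._handle.close()
            self._handle = None

    def close(self):
        if self._handle is not None:
            self._handle.close()
            self._handle = None


def extract_split_archive(pattern, destination="."):
    """Python equivalent of `cat <pattern> | tar zx`, extracting into `destination`."""
    parts = sorted(glob.glob(pattern))  # same lexicographic order as the shell glob
    if not parts:
        raise FileNotFoundError(f"no archive parts match {pattern!r}")
    destination = Path(destination)
    destination.mkdir(parents=True, exist_ok=True)
    stream = ChainedParts(parts)
    try:
        # "r|gz" reads the archive as a forward-only stream, like a pipe.
        with tarfile.open(fileobj=stream, mode="r|gz") as tar:
            tar.extractall(destination)
    finally:
        stream.close()
    return destination


if __name__ == "__main__":
    extract_split_archive("ShapeNetViPC-Dataset.tar.gz*")
```

Because the parts are streamed, the full archive is never assembled on disk or held in memory.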

For each object, the dataset includes a partial point cloud (ShapeNetViPC-Partial), a complete point cloud (ShapeNetViPC-GT) and the corresponding images (ShapeNetViPC-View) from 24 different views. Details of the 24 camera views are in /ShapeNetViPC-View/category/object_name/rendering/rendering_metadata.txt.
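The layout described above can be sketched as a small path helper. The function name `object_paths` is hypothetical and not part of this repository. Only the ShapeNetViPC-View path is stated explicitly above; that ShapeNetViPC-Partial and ShapeNetViPC-GT follow the same category/object_name layout is an assumption, and the file names and formats inside those folders are deliberately left out.

```python
from pathlib import Path


def object_paths(dataset_root, category, object_name):
    """Build the per-object directories inside an extracted ShapeNetViPC-Dataset.

    Hypothetical helper for illustration. The "partial" and "gt" entries assume
    the same category/object_name layout that is documented for the views.
    """
    root = Path(dataset_root)
    rendering = root / "ShapeNetViPC-View" / category / object_name / "rendering"
    return {
        "partial": root / "ShapeNetViPC-Partial" / category / object_name,  # assumed layout
        "gt": root / "ShapeNetViPC-GT" / category / object_name,  # assumed layout
        "views": rendering,
        "metadata": rendering / "rendering_metadata.txt",
    }


if __name__ == "__main__":
    # "category" and "object_name" are placeholders, as in the path given above.
    for key, path in object_paths("ShapeNetViPC-Dataset", "category", "object_name").items():
        print(f"{key}: {path}")
```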

The "dataset" folder of this project contains the train and test lists, which are the same as the original ones except for their formatting.

Further partialized inputs used for the weakly supervised setting are also available for download (Dropbox).

The file config.py contains the configuration for all the training parameters.

To train the models in the paper, run this command:

python train.py

To evaluate the models (select the specific category in config.py):

python eval.py

You can download pretrained models here:

Some of the code is borrowed from AXform.

Visualizations have been created using Mitsuba 2.

If you find our work useful in your research, please consider citing:

@inproceedings{aiello2022cross,
  author = {Aiello, Emanuele and Valsesia, Diego and Magli, Enrico},
  booktitle = {Advances in Neural Information Processing Systems},
  title = {Cross-modal Learning for Image-Guided Point Cloud Shape Completion},
  year = {2022}
}

Our code is released under the MIT License (see the LICENSE file for details).