Official PyTorch implementation of SelfHDR (ICLR 2024)

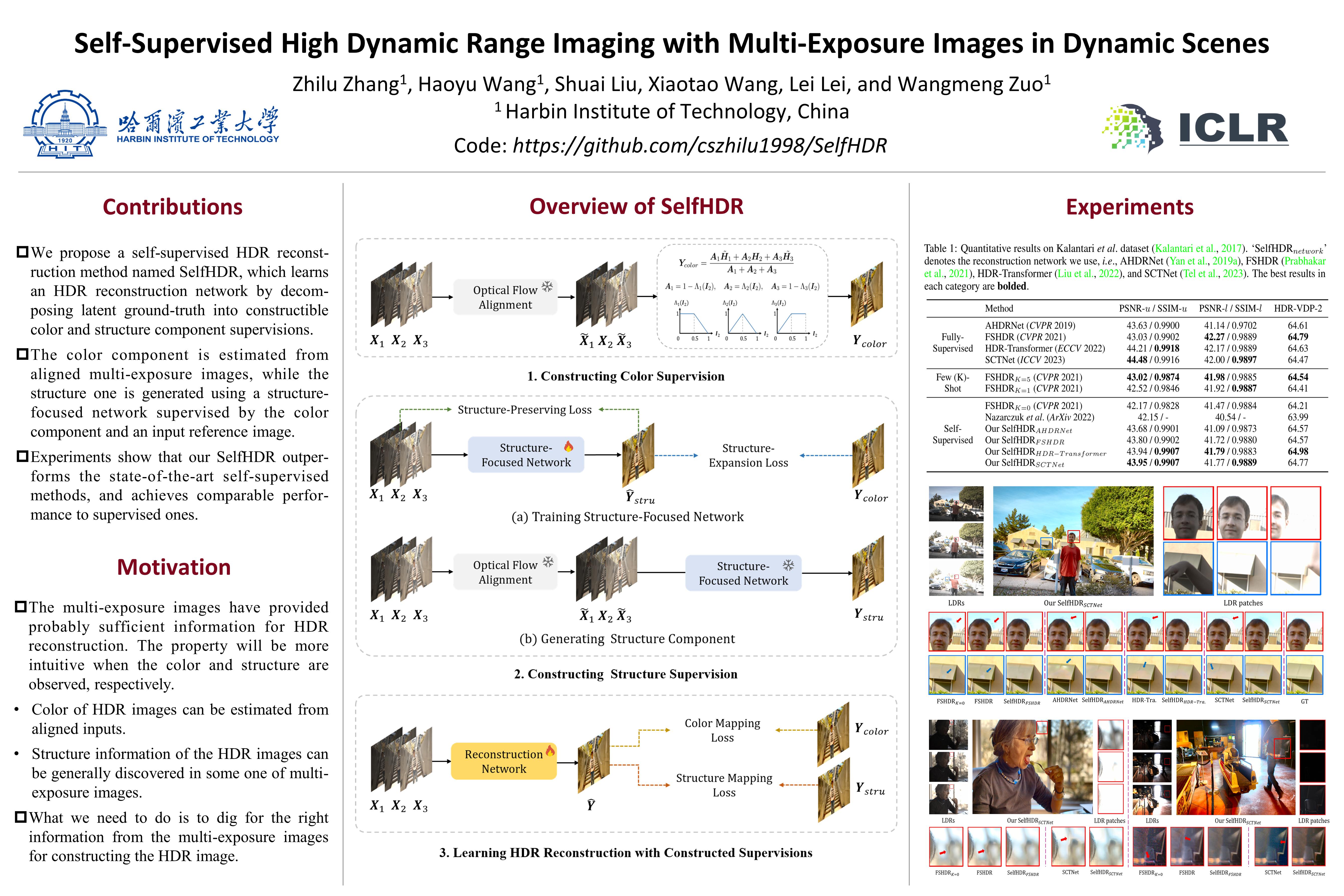

Self-Supervised High Dynamic Range Imaging with Multi-Exposure Images in Dynamic Scenes

ICLR 2024

Zhilu Zhang$^1$, Haoyu Wang$^1$, Shuai Liu, Xiaotao Wang, Lei Lei, Wangmeng Zuo$^1$

$^1$ Harbin Institute of Technology, China

OpenReview

- 2024-05-01: Code for image alignment, HDR image tone-mapping visualization, and HDR-VDP metric calculation is released.

- 2024-02-04: We are organizing the Bracketing Image Restoration and Enhancement Challenge at NTIRE 2024 (CVPR Workshop), including Track 1 (BracketIRE Task) and Track 2 (BracketIRE+ Task). Details can be found in BracketIRE. Welcome to participate!

- 2024-01-24: The basic code and pre-trained models are released. (The code has been restructured. If you run into any problems with it, please contact us.)

- 2024-01-17: Our SelfHDR is accepted as a poster paper at ICLR 2024.

- 2024-01-01: In our latest work, BracketIRE, we use bracketing photography to unify image restoration and enhancement tasks (including denoising, deblurring, high dynamic range imaging, and super-resolution).

Merging multi-exposure images is a common approach for obtaining high dynamic range (HDR) images, with the primary challenge being the avoidance of ghosting artifacts in dynamic scenes. Recent methods have proposed using deep neural networks for deghosting. However, these methods typically rely on sufficient data with HDR ground truths, which are difficult and costly to collect. In this work, to eliminate the need for labeled data, we propose SelfHDR, a self-supervised HDR reconstruction method that only requires dynamic multi-exposure images during training. Specifically, SelfHDR learns a reconstruction network under the supervision of two complementary components, which can be constructed from multi-exposure images and focus on HDR color and structure, respectively. The color component is estimated from aligned multi-exposure images, while the structure component is generated through a structure-focused network that is supervised by the color component and an input reference (e.g., medium-exposure) image. During testing, the learned reconstruction network is directly deployed to predict an HDR image. Experiments on real-world images demonstrate that SelfHDR achieves superior results to state-of-the-art self-supervised methods and performance comparable to supervised ones.
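To make the idea of estimating color from aligned exposures more concrete, the sketch below merges aligned LDR images into one linear HDR estimate with a classic hat-weighted average. It is a generic textbook merge, not SelfHDR's actual color-component construction, and it rests on assumptions not stated in this README: a gamma-2.2 camera response, LDR values in [0, 1], and known exposure times. All function names are hypothetical.

```python
import numpy as np

GAMMA = 2.2  # assumed camera response curve; not taken from the SelfHDR code


def ldr_to_linear(ldr, exposure_time, gamma=GAMMA):
    """Map an LDR image in [0, 1] to linear radiance: H = L**gamma / t."""
    return np.power(ldr, gamma) / exposure_time


def hat_weight(ldr, min_weight=1e-4):
    """Trust mid-tones most; near-black and near-saturated pixels get (almost) no weight."""
    return np.maximum(1.0 - np.abs(2.0 * ldr - 1.0), min_weight)


def naive_merge(aligned_ldrs, exposure_times):
    """Hat-weighted average of aligned exposures in the linear domain (illustrative only)."""
    num = np.zeros_like(aligned_ldrs[0], dtype=np.float64)
    den = np.zeros_like(aligned_ldrs[0], dtype=np.float64)
    for ldr, t in zip(aligned_ldrs, exposure_times):
        w = hat_weight(ldr)
        num += w * ldr_to_linear(ldr, t)
        den += w
    return num / den  # den >= len(aligned_ldrs) * min_weight > 0
```

Pixels saturated in the longer exposures fall back to the shorter ones through the hat weight. A merge like this still ghosts wherever alignment fails, which is the failure mode the abstract above targets.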

- Python 3.x and PyTorch 1.12.

- OpenCV, NumPy, Pillow, timm, tqdm, imageio, lpips, scikit-image and tensorboardX.
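A quick way to confirm the packages above are installed is to look up their import names, which differ from the pip names for a few of them (OpenCV is `cv2`, Pillow is `PIL`, scikit-image is `skimage`). This is a hypothetical convenience script, not part of the repo, and it only checks that each package is present, not versions such as PyTorch 1.12.

```python
import importlib.util

# Import name -> display name for the dependencies listed above.
REQUIRED_MODULES = {
    "torch": "PyTorch",
    "cv2": "OpenCV",
    "numpy": "NumPy",
    "PIL": "Pillow",
    "timm": "timm",
    "tqdm": "tqdm",
    "imageio": "imageio",
    "lpips": "lpips",
    "skimage": "scikit-image",
    "tensorboardX": "tensorboardX",
}


def missing_modules(modules=REQUIRED_MODULES):
    """Return the display names of packages whose import name cannot be found."""
    return [name for mod, name in modules.items() if importlib.util.find_spec(mod) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("All dependencies found." if not missing else "Missing: " + ", ".join(missing))
```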

- The dataset and pre-trained models can be downloaded from this link.

- Place the pre-trained models in the `./pretrained_models/` folder.

- In the dataset, the folders ending with `_align` contain aligned multi-exposure images.

- We adopt the same alignment method as FSHDR. The alignment code can be found at https://github.com/Susmit-A/FSHDR/tree/master/matlab_liu_code.
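Since the aligned inputs are identified only by the `_align` suffix, a small helper like the one below can list them before training. It is a sketch: `find_aligned_dirs` is a hypothetical name, and it assumes the `_align` folders sit somewhere below the dataset root passed as `dataroot`, whose exact layout is not specified here.

```python
from pathlib import Path


def find_aligned_dirs(dataroot):
    """Return sorted directories anywhere under `dataroot` whose names end with '_align'."""
    root = Path(dataroot)
    # rglob searches recursively; the is_dir() check skips files that happen to match.
    return sorted(p for p in root.rglob("*_align") if p.is_dir())
```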

- Modify `dataroot`, `name`, `net`, `batch_size`, `niter`, `lr_decay_iters`, and `lr` in `train.sh`:
  - Training settings for AHDRNet and FSHDR: `batch_size=16`, `niter=150`, `lr_decay_iters=50`, `lr=0.0001`
  - Training settings for HDR-Transformer and SCTNet: `batch_size=8`, `niter=200`, `lr_decay_iters=100`, `lr=0.0002`

- Run `sh train.sh`
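The recommended settings can be kept in one place and turned into command-line arguments. This is a hypothetical sketch: it assumes `train.sh` forwards its variables to the Python entry point as CycleGAN-style `--key value` flags with the same names, and the `net` keys below are the README's display names, which may not match the exact strings the code expects.

```python
import shlex

# Recommended settings from this README, keyed by network display name
# (the exact `net` strings expected by the code are an assumption).
TRAIN_SETTINGS = {
    "AHDRNet": dict(batch_size=16, niter=150, lr_decay_iters=50, lr=1e-4),
    "FSHDR": dict(batch_size=16, niter=150, lr_decay_iters=50, lr=1e-4),
    "HDR-Transformer": dict(batch_size=8, niter=200, lr_decay_iters=100, lr=2e-4),
    "SCTNet": dict(batch_size=8, niter=200, lr_decay_iters=100, lr=2e-4),
}


def train_args(net, dataroot, name):
    """Return ['--dataroot', ..., '--lr', ...] for the chosen network (flag names assumed)."""
    opts = {"dataroot": dataroot, "name": name, "net": net, **TRAIN_SETTINGS[net]}
    return [tok for key, val in opts.items() for tok in (f"--{key}", str(val))]


if __name__ == "__main__":
    # Print a shell-safe argument string for inspection.
    print(shlex.join(train_args("AHDRNet", "/path/to/dataset", "selfhdr_ahdrnet")))
```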

- Modify `dataroot`, `name`, `net`, and `iter` in `test.sh`.

- Run `sh test.sh`

- We adopt the `hdrvdp-2.2.2` toolkit, which you can download from this link.

- We then write the code in `Contents.m` and execute it.

- Code example in `Contents.m`:

```matlab
% Load HDR images
target = double(hdrread('target.hdr'));
out = double(hdrread('output.hdr'));

% Tone-map the HDR output for visualization
tonemap_img = tonemap(out, 'AdjustLightness', [0,1], 'AdjustSaturation', 5.3);
imwrite(tonemap_img, 'tonemap.png');

% Calculate the HDR-VDP metric (24-inch display, 0.5 m viewing distance)
ppd = hdrvdp_pix_per_deg(24, [size(out,2) size(out,1)], 0.5);
metric = hdrvdp(target, out, 'sRGB-display', ppd);

% Print the HDR-VDP quality score
metric.Q
```
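For readers without MATLAB, the display-dependent parts of that snippet can be approximated in Python. The sketch swaps in the mu-law operator, a simple log compression often used in HDR deghosting work, so its images will not match MATLAB's `tonemap`. The pixels-per-degree function follows the standard display geometry that `hdrvdp_pix_per_deg` is assumed to use (diagonal in inches, resolution as width and height, viewing distance in meters). Function names are hypothetical, and this does not compute HDR-VDP itself.

```python
import math

import numpy as np


def mu_law_tonemap(hdr, mu=5000.0):
    """Log-compress linear HDR values: T(H) = log(1 + mu*H) / log(1 + mu).

    Assumes `hdr` is scaled to [0, 1]; values outside that range are clipped.
    """
    hdr = np.clip(hdr, 0.0, 1.0)
    return np.log1p(mu * hdr) / np.log1p(mu)


def to_uint8(img):
    """Quantize a [0, 1] float image to 8-bit with rounding, e.g. for saving as PNG."""
    return (np.clip(img, 0.0, 1.0) * 255.0 + 0.5).astype(np.uint8)


def pixels_per_degree(diagonal_in, resolution, viewing_distance_m):
    """Angular resolution of a display viewed from `viewing_distance_m` meters.

    `resolution` is (width, height) in pixels, like [size(out,2) size(out,1)].
    """
    width_px, height_px = resolution
    aspect = width_px / height_px
    # Physical screen height from the diagonal and aspect ratio (1 inch = 25.4 mm).
    height_mm = math.sqrt((diagonal_in * 25.4) ** 2 / (1.0 + aspect ** 2))
    # Visual angle subtended by the screen height, in degrees.
    height_deg = 2.0 * math.degrees(math.atan(0.5 * height_mm / (viewing_distance_m * 1000.0)))
    return height_px / height_deg
```

To mirror the MATLAB call for a 24-inch display at 0.5 m, use `pixels_per_degree(24, (width, height), 0.5)`.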

- You can specify which GPUs to use with `--gpu_ids`, e.g., `--gpu_ids 0,1`, `--gpu_ids 3`, or `--gpu_ids -1` (for CPU mode). By default, all GPUs are used.

- You can refer to options for more arguments.
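The `--gpu_ids` values can be interpreted as in the CycleGAN framework this repo builds on, where the comma-separated string is split into integers and negative ids are discarded, leaving an empty list for CPU mode. The sketch below is illustrative: `parse_gpu_ids` and `primary_device` are hypothetical names, and how the repo represents its all-GPUs default is not shown here.

```python
def parse_gpu_ids(gpu_ids):
    """Turn a string like '0,1', '3' or '-1' into a list of non-negative GPU ids."""
    ids = [int(part) for part in gpu_ids.split(",") if part.strip()]
    return [i for i in ids if i >= 0]  # '-1' -> [] means run on the CPU


def primary_device(ids):
    """Device string for the first listed GPU, or 'cpu' when the list is empty."""
    return f"cuda:{ids[0]}" if ids else "cpu"
```

The string returned by `primary_device` can be passed directly to `torch.device`.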

If you find it useful in your research, please consider citing:

```bibtex
@inproceedings{SelfHDR,
  title={Self-Supervised High Dynamic Range Imaging with Multi-Exposure Images in Dynamic Scenes},
  author={Zhang, Zhilu and Wang, Haoyu and Liu, Shuai and Wang, Xiaotao and Lei, Lei and Zuo, Wangmeng},
  booktitle={ICLR},
  year={2024}
}
```

If you are interested in our follow-up work, i.e., Exposure Bracketing is All You Need, please consider citing:

```bibtex
@article{BracketIRE,
  title={Exposure Bracketing is All You Need for Unifying Image Restoration and Enhancement Tasks},
  author={Zhang, Zhilu and Zhang, Shuohao and Wu, Renlong and Yan, Zifei and Zuo, Wangmeng},
  journal={arXiv preprint arXiv:2401.00766},
  year={2024}
}
```

This repo is built upon the CycleGAN framework, and we borrow some code from AHDRNet, FSHDR, HDR-Transformer, and SCTNet. Thanks for their excellent work!