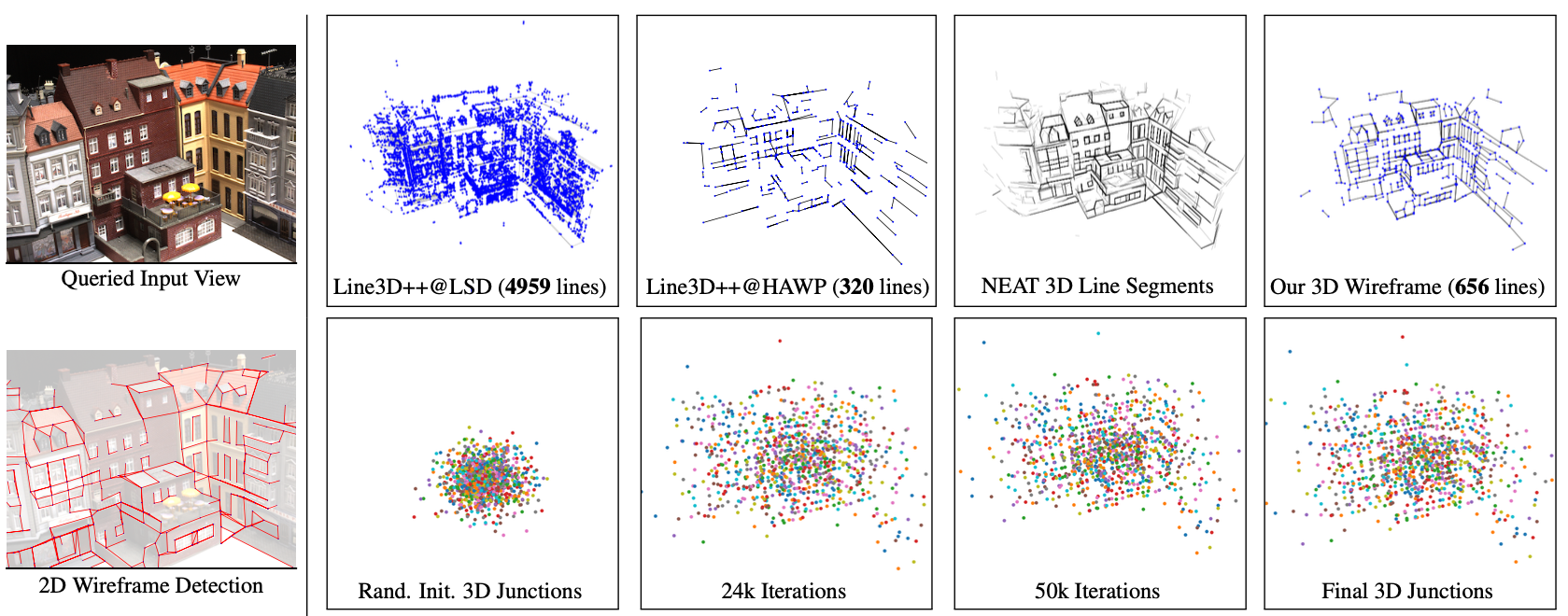

NEAT: Distilling 3D Wireframes from Neural Attraction Fields (To be updated)

Nan Xue, Bin Tan, Yuxi Xiao, Liang Dong, Gui-Song Xia, Tianfu Wu, Yujun Shen

2024

Preprint / Code / Video / Processed Data (4.73 GB) / Precomputed Results (3.01 GB)

git clone https://github.com/cherubicXN/neat.git --recursive

conda create -n neat python=3.10

conda activate neat

pip install torch==1.13.1+cu117 torchvision==0.14.1+cu117 torchaudio==0.13.1 --extra-index-url https://download.pytorch.org/whl/cu117
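Once the install finishes, a quick sanity check can confirm which PyTorch build is active in the `neat` environment. The snippet below is a minimal sketch and not part of the repository; `describe_torch` is a hypothetical helper name. With the command above, one would expect a version string starting with `1.13.1` and a CUDA build of `11.7`.

```python
import importlib


def describe_torch():
    """Report the installed PyTorch build without failing if torch is absent."""
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return {"installed": False}
    return {
        "installed": True,
        "version": torch.__version__,            # version string of the installed wheel
        "cuda_build": torch.version.cuda,         # CUDA version the wheel was built for (None on CPU builds)
        "cuda_available": torch.cuda.is_available(),  # whether a usable GPU is visible
    }


if __name__ == "__main__":
    for key, value in describe_torch().items():
        print(f"{key}: {value}")
```

If `cuda_available` is reported as false, the GPU driver or the selected wheel is the first thing to check before starting training.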

3. Install hawp from third-party/hawp

cd third-party/hawp

pip install -e .

cd ../..

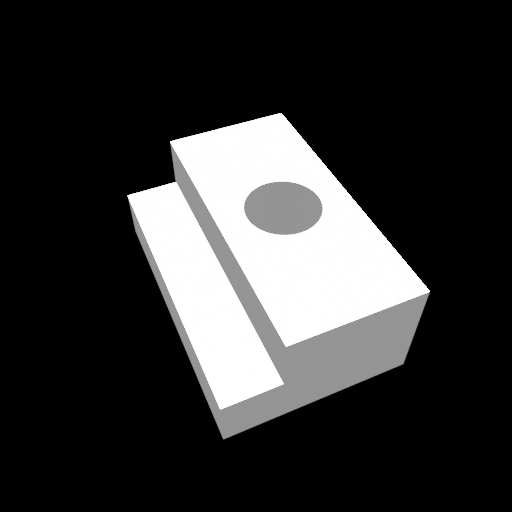

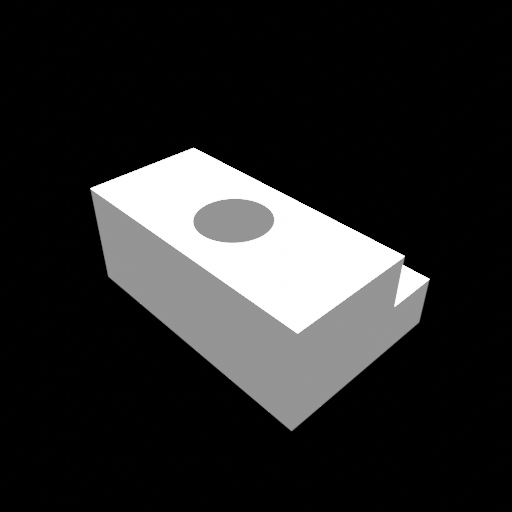

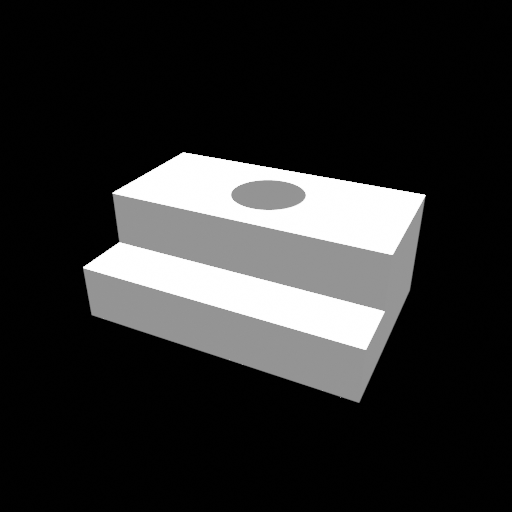

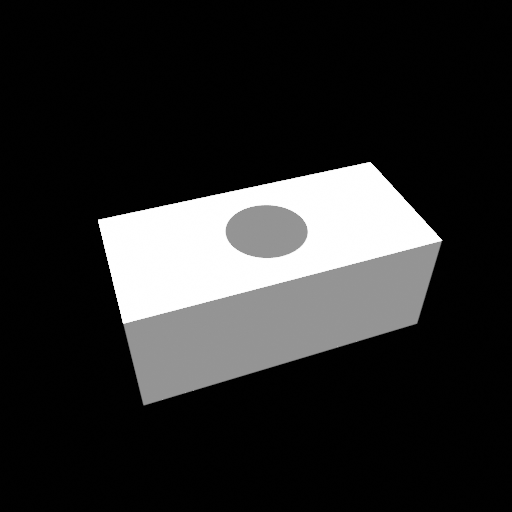

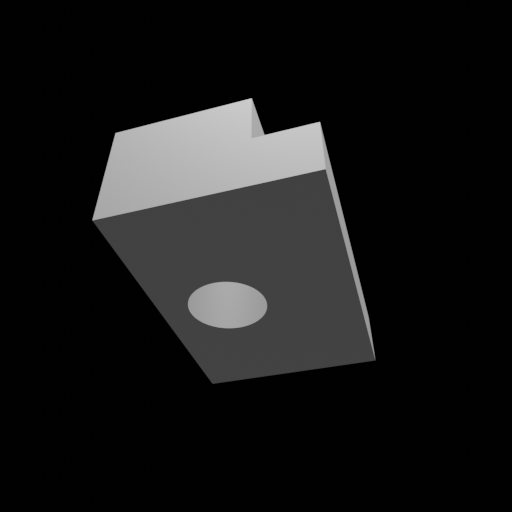

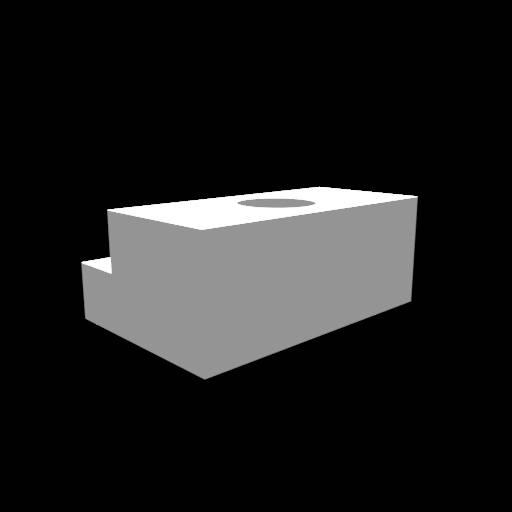

pip install -r requirements.txt

A toy example on a simple object from the ABC dataset

Step 1: Training or Optimization

python training/exp_runner.py --conf confs/abc-neat-a.conf --nepoch 2000 --tbvis # --tbvis will use tensorboard for visualization
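The next step needs the `{timestamp}` folder created by this run. As a convenience, the following sketch picks the most recently modified run directory. It assumes that runs are written to `../exps/abc-neat-a/{timestamp}`, as the path in Step 2 suggests; `latest_run_dir` is a hypothetical helper, not a script shipped with the code.

```python
from pathlib import Path


def latest_run_dir(exp_root):
    """Return the most recently modified sub-directory of exp_root, or None."""
    root = Path(exp_root)
    if not root.is_dir():
        return None
    runs = [p for p in root.iterdir() if p.is_dir()]
    if not runs:
        return None
    # Use modification time rather than parsing the name, because the
    # timestamp format is not specified here.
    return max(runs, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    # Assumed location, relative to the directory used in Step 2.
    print(latest_run_dir("../exps/abc-neat-a"))
```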

Step 2: Finalize the NEAT wireframe model

python neat-final-parsing.py --conf ../exps/abc-neat-a/{timestamp}/runconf.conf --checkpoint 1000

After running the above command, you will get four files in `../exps/abc-neat-a/{timestamp}/wireframes` with the prefix `{epoch}-{hash}*`, where `{epoch}` is the checkpoint you evaluated and `{hash}` is a hash of the hyperparameters used for finalization. The four files differ in their suffixes:

- `{epoch}-{hash}-all.npz` stores all line segments from the NEAT field,
- `{epoch}-{hash}-wfi.npz` stores the initial wireframe model without visibility checking, which contains some artifacts in the wireframe edges,
- `{epoch}-{hash}-wfi_checked.npz` stores the wireframe model after visibility checking, which reduces the edge artifacts,
- `{epoch}-{hash}-neat.pth` stores the above three files and some other information in the `pth` format.
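To see what a finalization run produced, the output files can be grouped by suffix and the `.npz` archives inspected. This is a minimal sketch under assumptions: the helper names are hypothetical, the `{epoch}` string must be passed exactly as it appears in the file names, and the array names stored inside each archive are not documented here, so the code only lists whatever it finds.

```python
from pathlib import Path

import numpy as np

# Suffixes described above for one finalized checkpoint.
SUFFIXES = ("all.npz", "wfi.npz", "wfi_checked.npz", "neat.pth")


def find_outputs(wireframe_dir, epoch):
    """Map each known suffix to the matching files for one checkpoint."""
    folder = Path(wireframe_dir)
    return {
        suffix: sorted(folder.glob(f"{epoch}-*-{suffix}"))
        for suffix in SUFFIXES
    }


def summarize_npz(path):
    """Return {array_name: shape} for every array stored in an .npz file."""
    # Object arrays would need allow_pickle=True; plain numeric arrays are assumed.
    with np.load(path) as data:
        return {name: data[name].shape for name in data.files}


if __name__ == "__main__":
    # Replace {timestamp} with the actual run folder before using this path.
    outputs = find_outputs("../exps/abc-neat-a/{timestamp}/wireframes", "1000")
    for suffix, paths in outputs.items():
        for path in paths:
            print(path.name)
            if path.suffix == ".npz":
                print("  ", summarize_npz(path))
```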


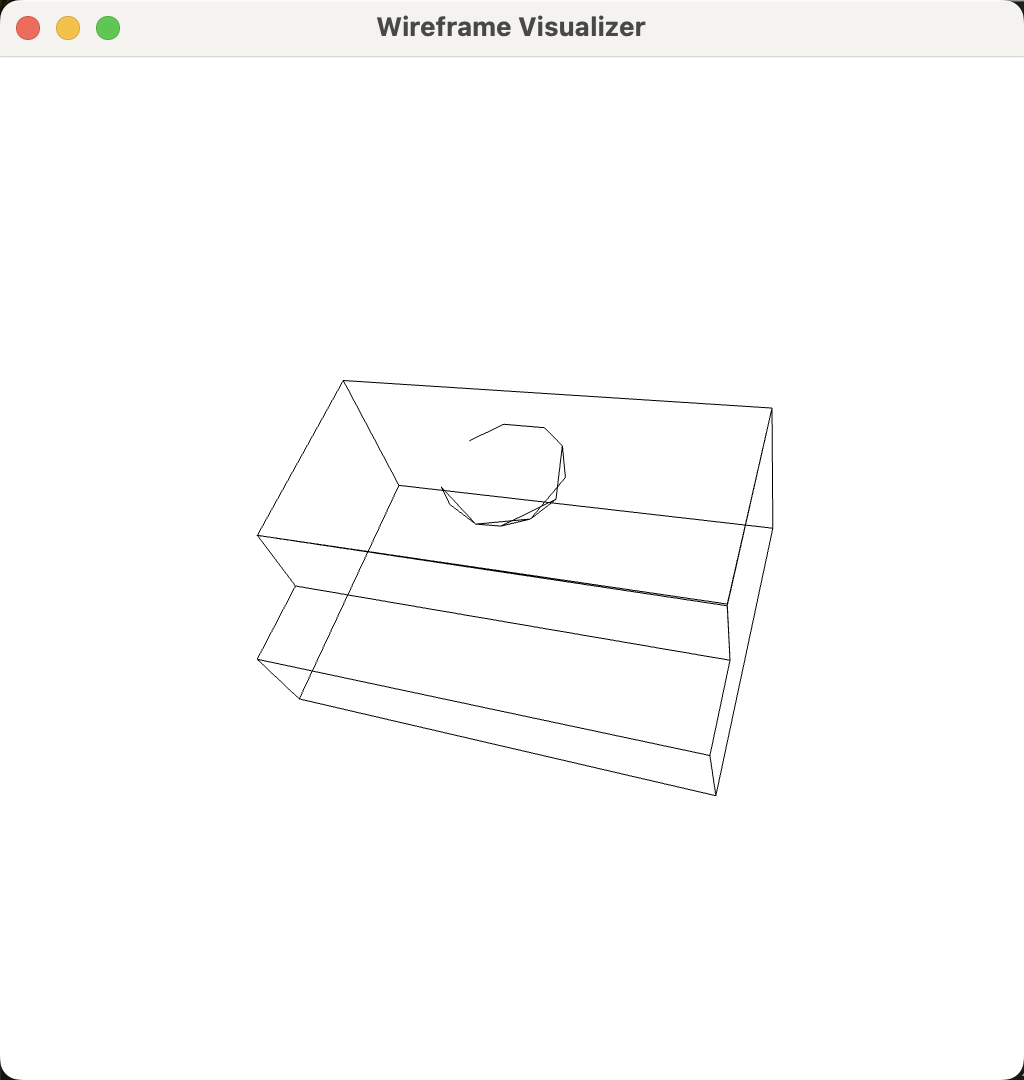

Step 3: Visualize the 3D wireframe model by

python visualization/show.py --data ../exps/abc-neat-a/{timestamp}/wireframe/{filename}.npz

- Currently, the visualization script only supports local runs.

- The open3d (v0.17) plugin for tensorboard is slow.

- Precomputed results can be downloaded from url-results

- Processed data can be downloaded from url-data, which are organized with the following structure:

data

├── BlendedMVS

│ ├── process.py

│ ├── scan11

│ ├── scan13

│ ├── scan14

│ ├── scan15

│ └── scan9

├── DTU

│ ├── bbs.npz

│ ├── scan105

│ ├── scan16

│ ├── scan17

│ ├── scan18

│ ├── scan19

│ ├── scan21

│ ├── scan22

│ ├── scan23

│ ├── scan24

│ ├── scan37

│ ├── scan40

│ └── scan65

├── abc

│ ├── 00004981

│ ├── 00013166

│ ├── 00017078

│ └── 00019674

└── preprocess

    ├── blender.py

    ├── extract_monocular_cues.py

    ├── monodepth.py

    ├── normalize.py

    ├── normalize_cameras.py

    ├── parse_cameras_blendedmvs.py

    └── readme.md
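After unpacking the processed data, the layout above can be verified with a short script. The sketch below is illustrative and not part of the repository: `missing_entries` is a hypothetical helper, and it assumes that every scan or object entry in the tree is a directory.

```python
from pathlib import Path

# Scene folders taken from the directory tree above.
EXPECTED = {
    "BlendedMVS": ["scan9", "scan11", "scan13", "scan14", "scan15"],
    "DTU": [
        "scan16", "scan17", "scan18", "scan19", "scan21", "scan22",
        "scan23", "scan24", "scan37", "scan40", "scan65", "scan105",
    ],
    "abc": ["00004981", "00013166", "00017078", "00019674"],
}


def missing_entries(data_root):
    """List the expected scene folders that are absent under data_root."""
    root = Path(data_root)
    return [
        f"{dataset}/{scene}"
        for dataset, scenes in EXPECTED.items()
        for scene in scenes
        if not (root / dataset / scene).is_dir()
    ]


if __name__ == "__main__":
    missing = missing_entries("data")
    print("all scenes found" if not missing else f"missing: {missing}")
```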

- Evaluation code (To be updated)

If you find our work useful in your research, please consider citing

@article{NEAT-arxiv,

author = {Nan Xue and

Bin Tan and

Yuxi Xiao and

Liang Dong and

Gui{-}Song Xia and

Tianfu Wu and

Yujun Shen

},

title = {Volumetric Wireframe Parsing from Neural Attraction Fields},

journal = {CoRR},

volume = {abs/2307.10206},

year = {2023},

url = {https://doi.org/10.48550/arXiv.2307.10206},

doi = {10.48550/arXiv.2307.10206},

eprinttype = {arXiv},

eprint = {2307.10206}

}

This project is built on volsdf. We also thank the four anonymous reviewers for their feedback on the paper writing, listed as follows (copied from the CMT system):