This is the code base for our ACM CSCS 2019 paper,

RobustTP: End-to-End Trajectory Prediction for Heterogeneous Road-Agents in Dense Traffic with Noisy Sensor Inputs.

Rohan Chandra, Uttaran Bhattacharya, Christian Roncal, Aniket Bera, Dinesh Manocha

Please cite our work if you found this useful:

@article{chandra2019robusttp,

title={RobustTP: End-to-End Trajectory Prediction for Heterogeneous Road-Agents in Dense Traffic with Noisy Sensor Inputs},

author={Chandra, Rohan and Bhattacharya, Uttaran and Roncal, Christian and Bera, Aniket and Manocha, Dinesh},

journal={arXiv preprint arXiv:1907.08752},

year={2019}

}

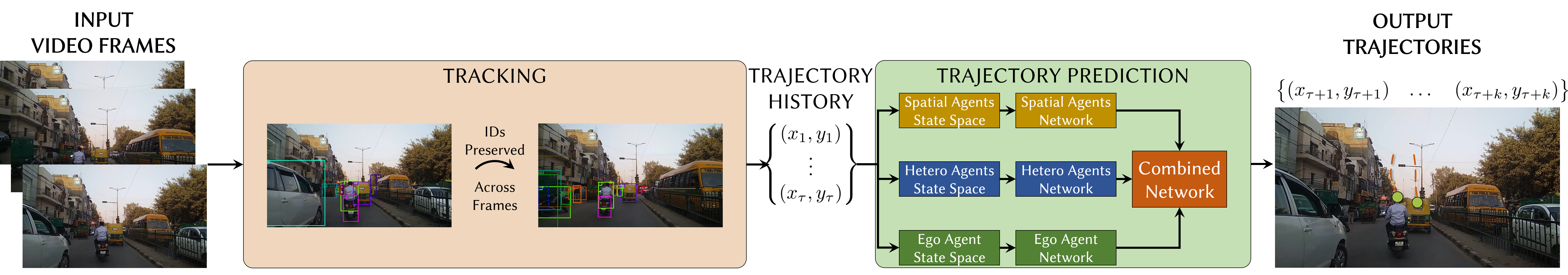

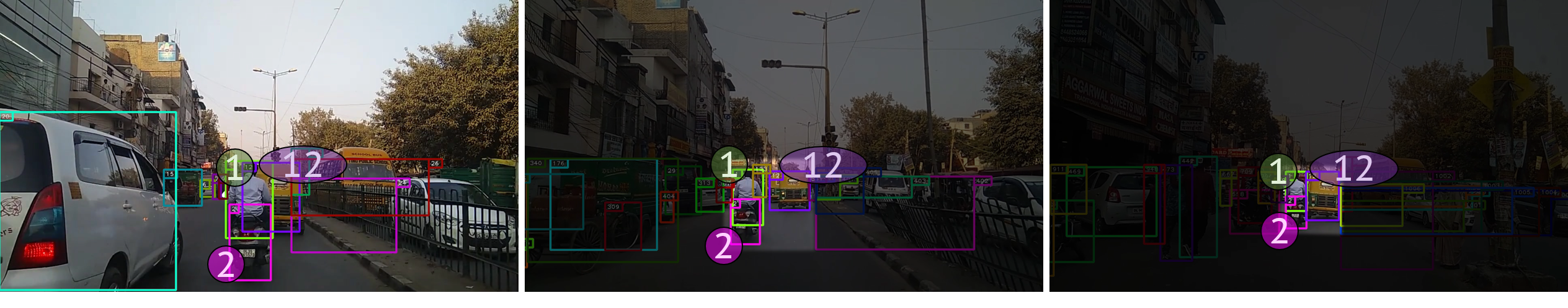

We present RobustTP, an end-to-end algorithm for predicting future trajectories of road-agents in dense traffic with noisy sensor input trajectories obtained from RGB cameras (either static or moving) through a tracking algorithm. Here, noise is the deviation of a tracked trajectory from the ground truth trajectory, and the amount of noise depends on the accuracy of the tracking algorithm. Our approach is designed for dense heterogeneous traffic, where the road-agents are a mixture of buses, cars, scooters, bicycles, and pedestrians. RobustTP first computes trajectories using a combination of a non-linear motion model and a deep learning-based instance segmentation algorithm. Next, an LSTM-CNN neural network architecture that models the interactions between road-agents in dense and heterogeneous traffic is trained on these noisy trajectories. We also release a software framework, TrackNPred. The framework consists of implementations of state-of-the-art tracking and trajectory prediction methods, together with tools to benchmark and evaluate them on real-world dense traffic datasets.

TrackNPred currently supports the following:

- Tracking by Detection:
  - YOLO + DeepSORT
  - Mask R-CNN + DeepSORT

- Trajectory Prediction:
  - Social GAN: Socially Acceptable Trajectories with Generative Adversarial Networks, CVPR'18. Agrim Gupta, Justin Johnson, Fei-Fei Li, Silvio Savarese, Alexandre Alahi.
  - Convolutional Social Pooling for Vehicle Trajectory Prediction, CVPRW'18. Nachiket Deo and Mohan M. Trivedi.
  - TraPHic: Trajectory Prediction in Dense and Heterogeneous Traffic Using Weighted Interactions, CVPR'19. Rohan Chandra, Uttaran Bhattacharya, Aniket Bera, Dinesh Manocha.

If you want to use TraPHic ONLY, then use this repository as it's easier.

TrackNPred supports Python 3.5 and above. For a list of dependencies, see requirements.txt.

- Create a conda environment:

  `conda create --name <env name e.g. tnp>`

- Source into the environment:

  `source activate <env name>`

- Install the set of requirements:

  `cd /path/to/requirements_file`

  `conda install --file requirements.txt`

Note that many of the requirements will not be installed by default. These need to be installed manually, as described later.

- Currently, we only accept .mp4 files.

- The name of the .mp4 file must consist of a string followed by a number.

- Create a folder that has the same name as the video file (without the extension) and put the video in that folder.

- The data folder needs to exist in the directory specified in the GUI or on the command line.

  Example: if the data directory is `resources/data/TRAF` and the video name is `TRAFx.mp4`, then the location of the video should be `resources/data/TRAF/TRAFx/TRAFx.mp4`.

- Note that currently, all the data has to reside inside `resources/data`.

- All of the generated files will be placed in the corresponding data folder.
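The layout rules above can be checked before launching the pipeline. The following is a minimal sketch, not part of TrackNPred: the helper names `expected_video_path` and `check_layout` and the exact file-name pattern (letters or underscores followed by digits) are assumptions made for illustration.

```python
import re
from pathlib import Path

# Assumed pattern for "<string><number>.mp4", e.g. "TRAF11.mp4".
# The repository may accept a wider set of names.
VIDEO_NAME = re.compile(r"^([A-Za-z_]+)(\d+)\.mp4$")


def expected_video_path(data_dir, video_name):
    """Return the path where the video is expected, or raise ValueError."""
    if VIDEO_NAME.match(video_name) is None:
        raise ValueError(f"{video_name!r} is not of the form <string><number>.mp4")
    stem = Path(video_name).stem  # "TRAF11.mp4" -> "TRAF11"
    # The video lives in a folder named after the file, inside the data directory.
    return Path(data_dir) / stem / video_name


def check_layout(data_dir, video_name):
    """Return True if the video file exists at the expected location."""
    return expected_video_path(data_dir, video_name).is_file()


if __name__ == "__main__":
    print(expected_video_path("resources/data/TRAF", "TRAF11.mp4").as_posix())
```

Calling `check_layout("resources/data/TRAF", "TRAF11.mp4")` then reports whether the file is where the rules above say it should be.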

1. Inside the conda environment, run `python setup.py` to get the resources folder containing the dataset and model weights.

2. Try to run `python main.py`. It will run into an error stating that a dependency was not found.

3. Manually install the dependency with `conda install DEPENDENCY` (occasionally with `conda install -c CHANNEL DEPENDENCY`, where the channel name can be found by searching for the dependency in the Anaconda cloud).

4. Repeat Steps 2-3 until all dependency issues are resolved and the GUI opens.
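To shorten the run-fail-install loop described above, it may help to list several missing modules at once. The sketch below is an illustration only and is not shipped with TrackNPred: `missing_modules` is a hypothetical helper, and the module names in the example are placeholders rather than the contents of requirements.txt. Note that a conda package name can differ from its import name (for instance, `cv2` is provided by the OpenCV package).

```python
import importlib.util


def missing_modules(module_names):
    """Return the top-level module names that cannot be found for import."""
    missing = []
    for name in module_names:
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Placeholder list: replace with the modules named in the errors you see.
    candidates = ["numpy", "torch", "cv2"]
    for name in missing_modules(candidates):
        print(f"missing: {name}")
```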

- We provide the options of GUI and command-line control.

  Examples:

  - Run `python main.py` to get the GUI, which shows the options you can choose.
  - Run `python main_cmd.py -l 11 -l 12` to run datasets TRAF11 and TRAF12 from the command line.
  - Run `python main_cmd.py -l 11 --detection False --tracking False --train True --eval True` to run only prediction training and evaluation on dataset TRAF11 from the command line.

- `-l` is required. It takes a series of numbers that specify which datasets you want to run.

- The other flags are optional; they correspond to the options provided in the GUI. Please see how they are defined in `main_cmd.py`.
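For readers unfamiliar with this flag style, the sketch below shows one way a repeatable `-l` flag and `True`/`False` string flags can be parsed with `argparse`. It is an assumption-laden illustration, not the contents of `main_cmd.py`: the `str2bool` helper, the `datasets` destination name, and the default of `True` for every stage are all hypothetical.

```python
import argparse


def str2bool(text):
    """Parse 'True'/'False' style strings into booleans."""
    value = text.strip().lower()
    if value in ("true", "1", "yes"):
        return True
    if value in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"expected True or False, got {text!r}")


def build_parser():
    parser = argparse.ArgumentParser(description="Illustrative TrackNPred-style CLI")
    # -l may be repeated: "-l 11 -l 12" is collected as [11, 12].
    parser.add_argument("-l", dest="datasets", type=int, action="append", required=True)
    # Assumed default: every stage runs unless it is switched off explicitly.
    for stage in ("detection", "tracking", "train", "eval"):
        parser.add_argument(f"--{stage}", type=str2bool, default=True)
    return parser


args = build_parser().parse_args(["-l", "11", "--detection", "False", "--tracking", "False"])
print(args.datasets, args.detection, args.tracking, args.train, args.eval)
```

A custom converter is used here because passing `type=bool` to `argparse` would treat the string `"False"` as true: any non-empty string is truthy in Python.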

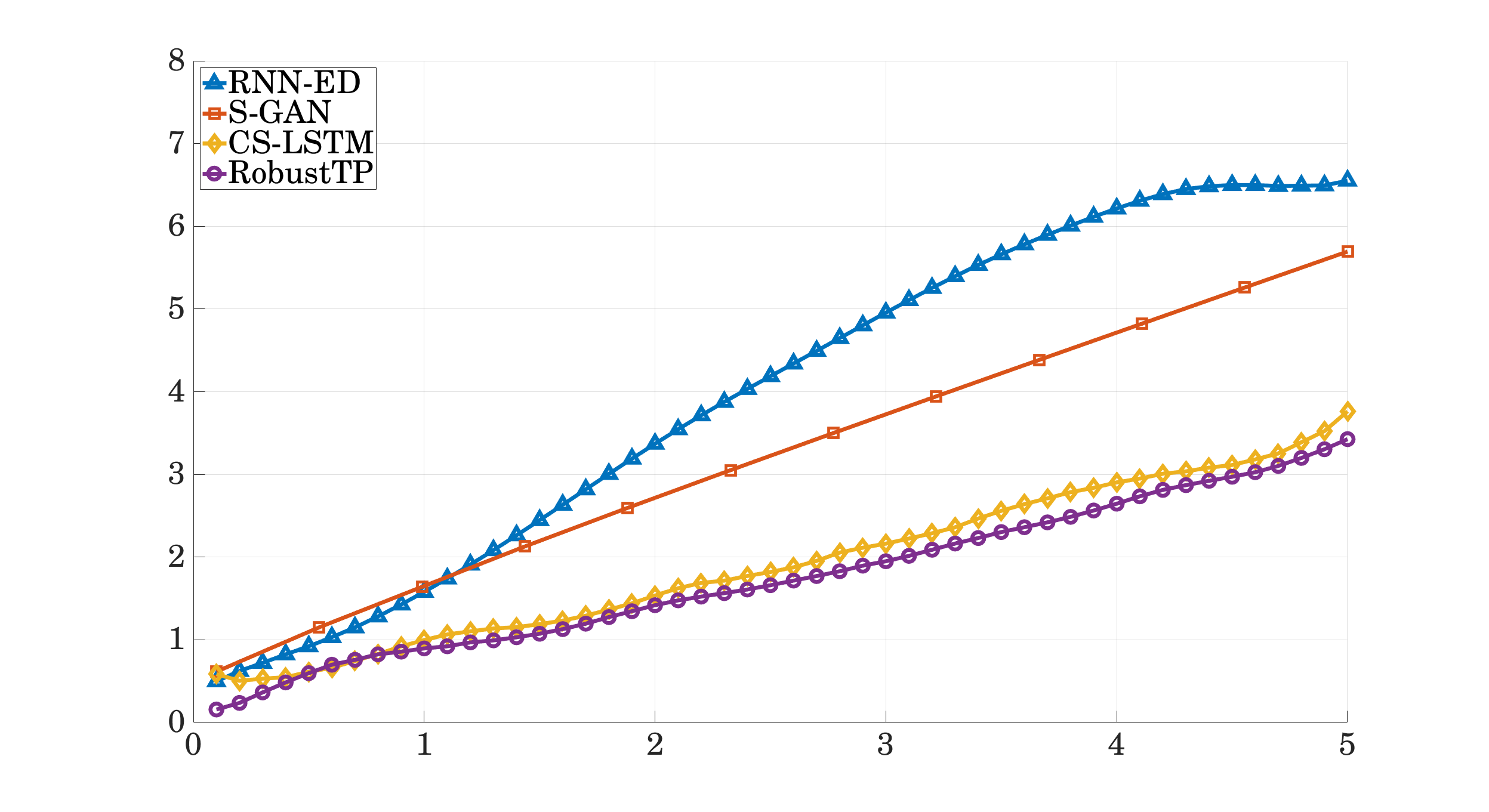

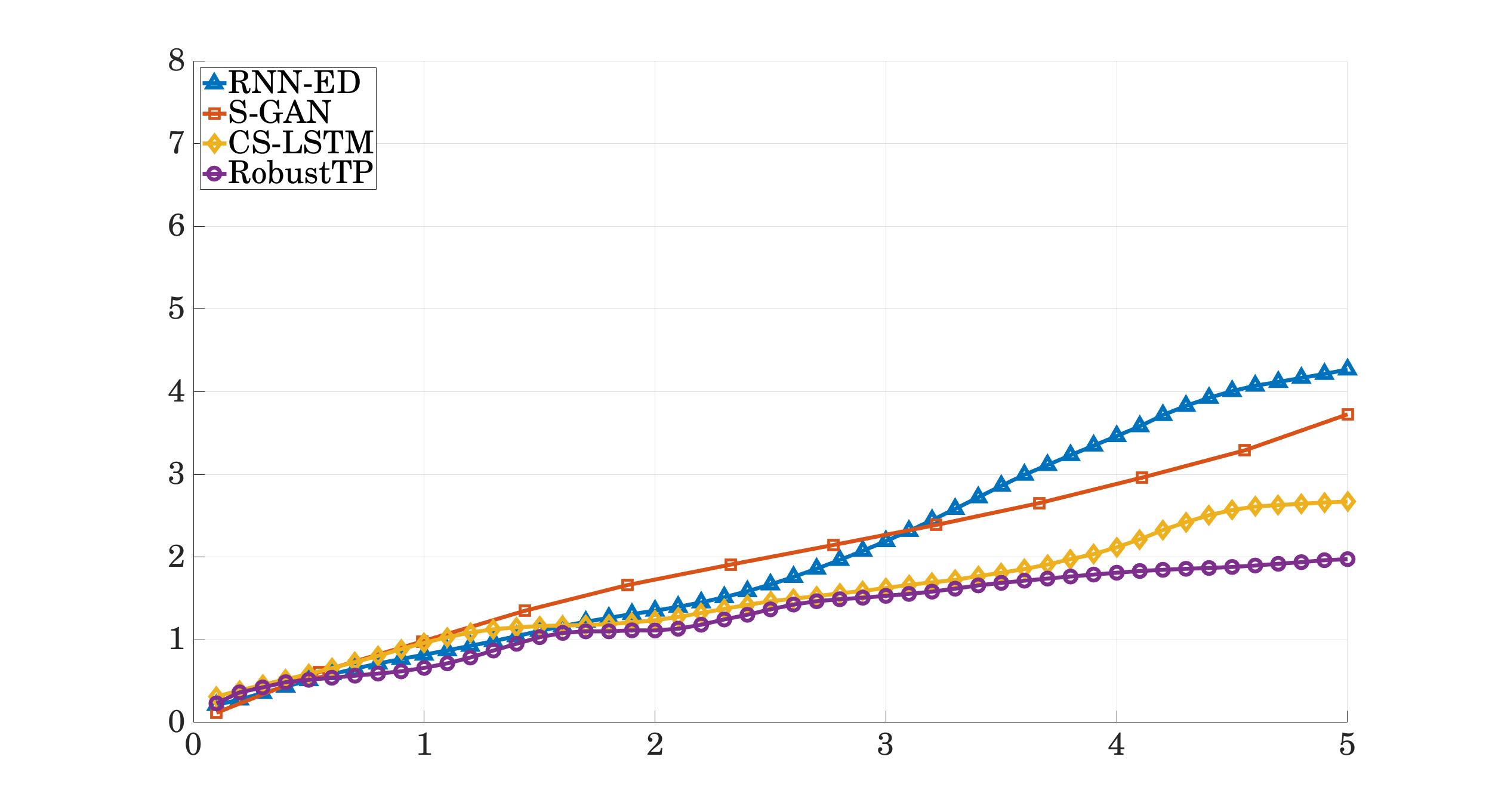

Our trajectory prediction algorithm outperforms state-of-the-art methods for end-to-end trajectory prediction using sensor inputs. We achieve an improvement of up to 18% in average displacement error and of up to 35.5% in final displacement error at the end of the prediction window (5 seconds) over the next best method. All experiments were run on an Nvidia TITAN Xp GPU.

We compare RobustTP with methods that use manually annotated trajectory histories on the TRAF dataset. The results are reported in the format ADE/FDE, where ADE is the average displacement RMSE over the 5 seconds of prediction and FDE is the final displacement RMSE at the end of 5 seconds. We observe that RobustTP is on par with the state-of-the-art:

| RNN-ED | S-GAN | CS-LSTM | TraPHic | RobustTP |
|---|---|---|---|---|
| 3.24/5.16 | 2.76/4.79 | 1.15/3.35 | 0.78/2.44 | 1.75/3.42 |
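As a reading aid for the ADE/FDE format, the following is a minimal sketch of how the two metrics could be computed as RMSE values from predicted and ground truth positions. It is an illustration under stated assumptions, not the evaluation code of the framework: the function name `displacement_errors`, the list-of-(x, y) input layout, and the pooling of all agents and time steps into one mean are hypothetical choices.

```python
import math


def displacement_errors(predicted, ground_truth):
    """Return (ADE, FDE) as RMSE values.

    Both arguments are lists of trajectories, one per road-agent. Each
    trajectory is a list of (x, y) points sampled at the same time steps
    over the prediction window.
    """
    all_sq, final_sq = [], []
    for pred, truth in zip(predicted, ground_truth):
        # Squared Euclidean displacement at every predicted time step.
        sq = [(px - tx) ** 2 + (py - ty) ** 2
              for (px, py), (tx, ty) in zip(pred, truth)]
        all_sq.extend(sq)
        final_sq.append(sq[-1])
    ade = math.sqrt(sum(all_sq) / len(all_sq))      # over all steps and agents
    fde = math.sqrt(sum(final_sq) / len(final_sq))  # last step only
    return ade, fde


if __name__ == "__main__":
    truth = [[(0, 0), (1, 0), (2, 0)]]
    pred = [[(0, 0), (1, 1), (2, 2)]]
    print(displacement_errors(pred, truth))
```

In this toy example the squared displacements are 0, 1, and 4, so ADE is the square root of 5/3 and FDE is 2.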

We also evaluate RobustTP using trajectory histories that are computed by two detection methods: Mask R-CNN and YOLO:

| Using Mask R-CNN | Using YOLO |
|---|---|
|  |  |