Official repository of "Transformer-based model for monocular visual odometry: a video understanding approach"

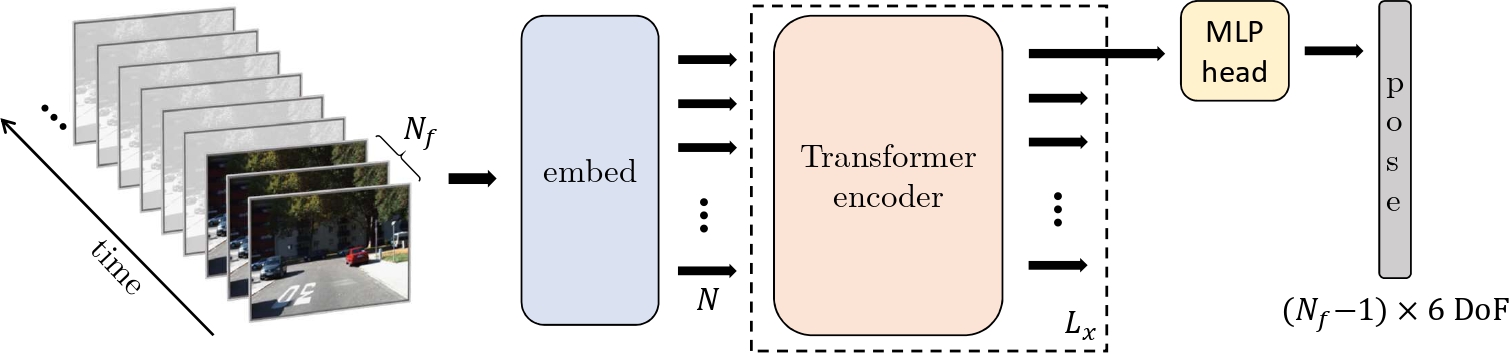

Estimating the camera pose from the images of a single camera is a traditional task in mobile robots and autonomous vehicles. This problem is called monocular visual odometry, and it often relies on geometric approaches that require engineering effort for each specific scenario. Deep learning methods have been shown to generalize well, given proper training and a considerable amount of available data. Transformer-based architectures have dominated the state of the art in natural language processing and computer vision tasks, such as image and video understanding. In this work, we treat monocular visual odometry as a video understanding task to estimate the camera's 6-DoF pose. We contribute the TSformer-VO model, which uses spatio-temporal self-attention mechanisms to extract features from clips and estimate the motions in an end-to-end manner. Our approach achieved competitive state-of-the-art performance compared with geometry-based and deep learning-based methods on the KITTI visual odometry dataset, outperforming DeepVO, an implementation widely accepted in the visual odometry community.

Download the KITTI odometry dataset (grayscale).

In this work, we use the .jpg format. You can convert the dataset to .jpg format with png_to_jpg.py.
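The conversion step can be pictured with the minimal sketch below. It is not the repository's png_to_jpg.py: it assumes the original PNG frames sit under a `sequences` folder and mirrors them into `sequences_jpg` with the same subfolders, and it assumes Pillow is installed (imported only when converting). The helper names `jpg_path_for` and `convert_tree` are hypothetical.

```python
from pathlib import Path


def jpg_path_for(png_path: Path, src_root: Path, dst_root: Path) -> Path:
    """Hypothetical helper: map <src_root>/.../000000.png to <dst_root>/.../000000.jpg."""
    relative = png_path.relative_to(src_root)
    return (dst_root / relative).with_suffix(".jpg")


def convert_tree(src_root: Path, dst_root: Path, quality: int = 95) -> int:
    """Re-encode every PNG under src_root as a JPEG under dst_root; return the count."""
    from PIL import Image  # Pillow is an assumed dependency, imported lazily

    count = 0
    for png_path in sorted(src_root.rglob("*.png")):
        out_path = jpg_path_for(png_path, src_root, dst_root)
        out_path.parent.mkdir(parents=True, exist_ok=True)
        with Image.open(png_path) as img:
            # Grayscale KITTI frames are mode "L", which JPEG stores directly;
            # any other mode is converted to RGB before saving.
            if img.mode not in ("L", "RGB"):
                img = img.convert("RGB")
            img.save(out_path, "JPEG", quality=quality)
        count += 1
    return count
```

With placeholder paths, a call would look like `convert_tree(Path("data/sequences"), Path("data/sequences_jpg"))`. Keeping the relative folder structure means the sequence and camera folders stay the same after conversion.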

Create a symbolic link to the dataset in the data folder:

- On Windows: `mklink /D <path_to_your_project>\TSformer-VO\data <path_to_your_downloaded_data>`
- On Linux: `ln -s <path_to_your_downloaded_data> <path_to_your_project>/TSformer-VO/data`

The data structure should be as follows:

- TSformer-VO
  - data
    - sequences_jpg
      - 00
        - image_0
          - 000000.jpg
          - 000001.jpg
          - ...
        - image_1
          - ...
        - image_2
          - ...
        - image_3
          - ...
      - 01
      - ...
    - poses
      - 00.txt
      - 01.txt
      - ...
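To check that a local copy matches this layout before training, a small script like the one below can help. It is a hypothetical sanity check, not part of the repository. It assumes the frames in each camera folder are JPEGs named by a zero-padded six-digit index, and that each `poses/<seq>.txt` holds one line per frame, which is the case for the KITTI ground-truth poses of sequences 00 to 10. Sequences without a pose file are only checked for their frames.

```python
from pathlib import Path


def check_sequence(data_dir: Path, seq: str, camera: str = "image_0") -> int:
    """Return the number of frames in a sequence, raising if the layout looks wrong."""
    image_dir = data_dir / "sequences_jpg" / seq / camera
    frames = sorted(image_dir.glob("*.jpg"))
    if not frames:
        raise FileNotFoundError(f"no .jpg frames found in {image_dir}")

    # Frames are expected to be numbered 000000.jpg, 000001.jpg, ... without gaps.
    expected = [f"{i:06d}.jpg" for i in range(len(frames))]
    if [f.name for f in frames] != expected:
        raise ValueError(f"frame names in {image_dir} are not contiguous")

    # Ground-truth poses (if present) should have exactly one line per frame.
    pose_file = data_dir / "poses" / f"{seq}.txt"
    if pose_file.exists():
        with pose_file.open() as fh:
            n_poses = sum(1 for line in fh if line.strip())
        if n_poses != len(frames):
            raise ValueError(f"{pose_file}: {n_poses} poses vs {len(frames)} frames")
    return len(frames)
```

For example, `check_sequence(Path("data"), "00")` would return the frame count of sequence 00 if the folders are in place.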

Here you can find the checkpoints of our trained models. The architectures vary according to the number of frames (Nf) in the input clip, which also affects the last MLP head.

Google Drive folder: link to checkpoints in GDrive

| Model | Nf | Checkpoint (.pth) | Args (Model Parameters) |
|---|---|---|---|
| TSformer-VO-1 | 2 | checkpoint_model1 | args.pkl |
| TSformer-VO-2 | 3 | checkpoint_model2 | args.pkl |
| TSformer-VO-3 | 4 | checkpoint_model3 | args.pkl |
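One way to see why Nf changes the last MLP head: if the head regresses one 6-DoF relative motion (3 translation and 3 rotation parameters) for each pair of consecutive frames in the clip, its output size is 6(Nf - 1). This is an assumption made for illustration; the args.pkl file of each checkpoint is the place to confirm the actual values. The helper names below are hypothetical.

```python
from typing import List

DOF = 6  # 3 translation + 3 rotation parameters per relative motion


def head_output_size(num_frames: int) -> int:
    """Pose-head output size, assuming one 6-DoF motion per consecutive frame pair."""
    if num_frames < 2:
        raise ValueError("a clip needs at least two frames to define a motion")
    return DOF * (num_frames - 1)


def split_motions(head_output: List[float], num_frames: int) -> List[List[float]]:
    """Reshape a flat head output into (Nf - 1) motions of DOF values each."""
    expected = head_output_size(num_frames)
    if len(head_output) != expected:
        raise ValueError(f"expected {expected} values, got {len(head_output)}")
    return [head_output[i:i + DOF] for i in range(0, expected, DOF)]


for name, nf in [("TSformer-VO-1", 2), ("TSformer-VO-2", 3), ("TSformer-VO-3", 4)]:
    print(name, nf, head_output_size(nf))
# TSformer-VO-1 2 6
# TSformer-VO-2 3 12
# TSformer-VO-3 4 18
```

Under this assumption, a longer clip adds one more 6-value motion per extra frame, so each checkpoint's head is tied to the Nf it was trained with.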

- Create a virtual environment using Anaconda and activate it: `conda create -n tsformer-vo python==3.8.0`, then `conda activate tsformer-vo`
- Install the dependencies (with the environment activated): `pip install -r requirements.txt`

PS: For now, settings and hyperparameters are changed directly in the variables and dictionaries of each script. As future work, we plan to use preset configurations with the argparse module to provide a more user-friendly interface.

In train.py:

- Manually set the configuration in `args` (Python dict);
- Manually set the model hyperparameters in `model_params` (Python dict);
- Save and run train.py.
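As a rough illustration of this workflow, and of the argparse interface mentioned as future work, the sketch below keeps the two dictionaries and lets any key of `args` be overridden from the command line. All key names and default values are hypothetical placeholders, not the repository's actual settings, and `build_model_params` assumes the 6-DoF-per-frame-pair head discussed for the checkpoints.

```python
import argparse
from typing import List, Optional

# Hypothetical defaults standing in for the dictionary edited in train.py.
args = {
    "data_dir": "data",
    "window_size": 2,  # Nf, number of frames per input clip
    "batch_size": 4,
    "lr": 1e-4,
    "epochs": 100,
    "checkpoint_path": "checkpoints/Exp1",
}


def build_model_params(cfg: dict) -> dict:
    """Derive hypothetical model hyperparameters that depend on the run configuration."""
    nf = cfg["window_size"]
    return {
        "num_frames": nf,
        "num_outputs": 6 * (nf - 1),  # assumes one 6-DoF motion per frame pair
        "image_size": (192, 640),     # hypothetical input resolution
        "patch_size": 16,             # hypothetical patch size
    }


def parse_overrides(cfg: dict, argv: Optional[List[str]] = None) -> dict:
    """Return a copy of cfg with any keys given on the command line replaced."""
    parser = argparse.ArgumentParser(description="Hypothetical TSformer-VO training CLI")
    for key, value in cfg.items():
        # Reuse each default's type so "--lr 3e-4" is parsed as a float, etc.
        parser.add_argument(f"--{key}", type=type(value), default=value)
    return vars(parser.parse_args(argv))
```

A script calling `parse_overrides(args)` could then be run as `python train.py --window_size 3 --lr 3e-4`, while keys that are not passed keep their dictionary defaults. Deriving `model_params` from `args` keeps Nf and the head size consistent instead of editing them in two places.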

In predict_poses.py:

- Manually set the variables to read the checkpoint and sequences.

| Variables | Info |
|---|---|
| checkpoint_path | String with the path to the trained model you want to use for inference. Ex: checkpoint_path = "checkpoints/Model1" |
| checkpoint_name | String with the name of the desired checkpoint (name of the .pth file). Ex: checkpoint_name = "checkpoint_model2_exp19" |
| sequences | List with strings representing the KITTI sequences. Ex: sequences = ["03", "04", "10"] |
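For context on what inference has to produce: the model estimates relative motions, while the KITTI evaluation toolbox expects absolute poses, one line per frame containing the 12 entries of the 3x4 matrix [R | t] in row-major order. The sketch below shows only the accumulation step, in plain Python. It assumes each relative motion is already available as a 4x4 homogeneous transform that maps points from frame k+1 into frame k; how predict_poses.py actually converts the network output into such matrices is not shown, and the helper names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def identity4() -> Matrix:
    return [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]


def matmul4(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def accumulate(relative: List[Matrix]) -> List[Matrix]:
    """Chain relative transforms into absolute poses, starting at the identity.

    relative[k] is assumed to map points from frame k+1 into frame k, so the
    absolute pose of frame k+1 is poses[k] @ relative[k]. Each absolute pose then
    maps frame-k coordinates into frame-0 coordinates, as in KITTI pose files.
    """
    poses = [identity4()]
    for rel in relative:
        poses.append(matmul4(poses[-1], rel))
    return poses


def to_kitti_line(pose: Matrix) -> str:
    """Flatten the top 3x4 block of a pose row-major, as in KITTI pose files."""
    return " ".join(f"{v:.6e}" for row in pose[:3] for v in row)


# Example: two steps of 1 m straight ahead along the camera z axis.
step = identity4()
step[2][3] = 1.0
for line in map(to_kitti_line, accumulate([step, step])):
    print(line)
```

The order of the product matters: right-multiplying by each new relative motion expresses it in the current camera frame, so a turn followed by a forward step moves the camera along its new heading.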

In plot_results.py:

- Manually set the variables for the checkpoint and the desired sequences, as in predict_poses.py.
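A top-down KITTI trajectory plot is usually drawn from the x and z translation components of each pose, since the KITTI cameras look along +z with y pointing down. The reader below is a minimal sketch, independent of the repository code, that extracts those components from pose lines in the KITTI format; the file path in the usage comment is a placeholder, and the plotting itself (e.g. with matplotlib) is left out.

```python
from typing import List, Tuple


def read_xz(lines: List[str]) -> Tuple[List[float], List[float]]:
    """Extract the x and z translations from KITTI pose lines (12 floats each)."""
    xs: List[float] = []
    zs: List[float] = []
    for line in lines:
        values = [float(v) for v in line.split()]
        if not values:
            continue  # tolerate blank lines
        if len(values) != 12:
            raise ValueError(f"expected 12 values per pose, got {len(values)}")
        # Row-major 3x4 [R | t]: t_x is entry 3 and t_z is entry 11.
        xs.append(values[3])
        zs.append(values[11])
    return xs, zs


# Usage with a real file (path is a placeholder):
# with open("data/poses/00.txt") as fh:
#     xs, zs = read_xz(fh.readlines())
```

Reading the ground truth and the predictions with the same function keeps both curves in the same coordinate convention when they are overlaid.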

The evaluation is done with the KITTI odometry evaluation toolbox. Please go to the evaluation repository to see more details about the evaluation metrics and how to run the toolbox.

Please cite our paper if you find this research useful in your work:

@article{Francani2023,
  title={Transformer-based model for monocular visual odometry: a video understanding approach},
  author={Fran{\c{c}}ani, Andr{\'e} O and Maximo, Marcos ROA},
  journal={arXiv preprint arXiv:2305.06121},
  year={2023}
}

Code adapted from TimeSformer.

Check out our previous work on monocular visual odometry: DPT-VO