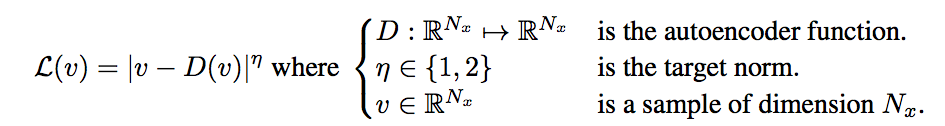

Unlike standard generative adversarial networks (Goodfellow et al. 2014), boundary equilibrium generative adversarial networks (BEGAN) use an auto-encoder as a disciminator. An auto-encoder loss is defined, and an approximation of the Wasserstein distance is then computed between the pixelwise auto-encoder loss distributions of real and generated samples.

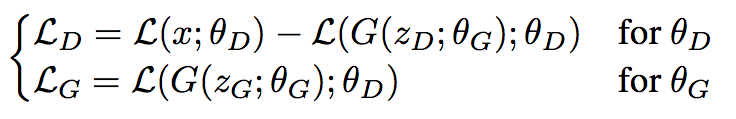

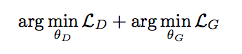

With the auto-encoder loss defined (above), the Wasserstein distance approximation simplifies to a loss function wherein the discriminating auto-encoder aims to perform well on real samples and poorly on generated samples, while the generator aims to produce adversarial samples which the discriminator can't help but perform well upon.

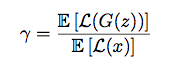

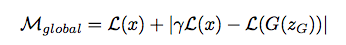

Additionally, a hyper-parameter gamma is introduced which gives the user the power to control sample diversity by balancing the discriminator and generator.

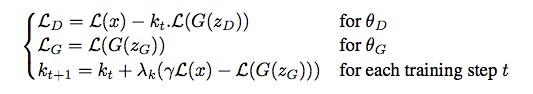

Gamma is put into effect through the use of a weighting parameter k which gets updated while training to adapt the loss function so that our output matches the desired diversity. The overall objective for the network is then:

Unlike most generative adversarial network architectures, where we need to update G and D independently, the Boundary Equilibrium GAN has the nice property that we can define a global loss and train the network as a whole (though we still have to make sure to update parameters with respect to the relative loss functions)

The final contribution of the paper is a derived convergence measure M which gives a good indicator as to how the network is doing. We use this parameter to track performance, as well as control learning rate.

The overall result is a surprisingly effective model which produces samples well beyond the previous state of the art.

128x128 samples generated from random points in Z, from (Berthelot, Schumm and Metz, 2017).

I have used source code from artcg