SelectionGAN for Cross-View Image Translation

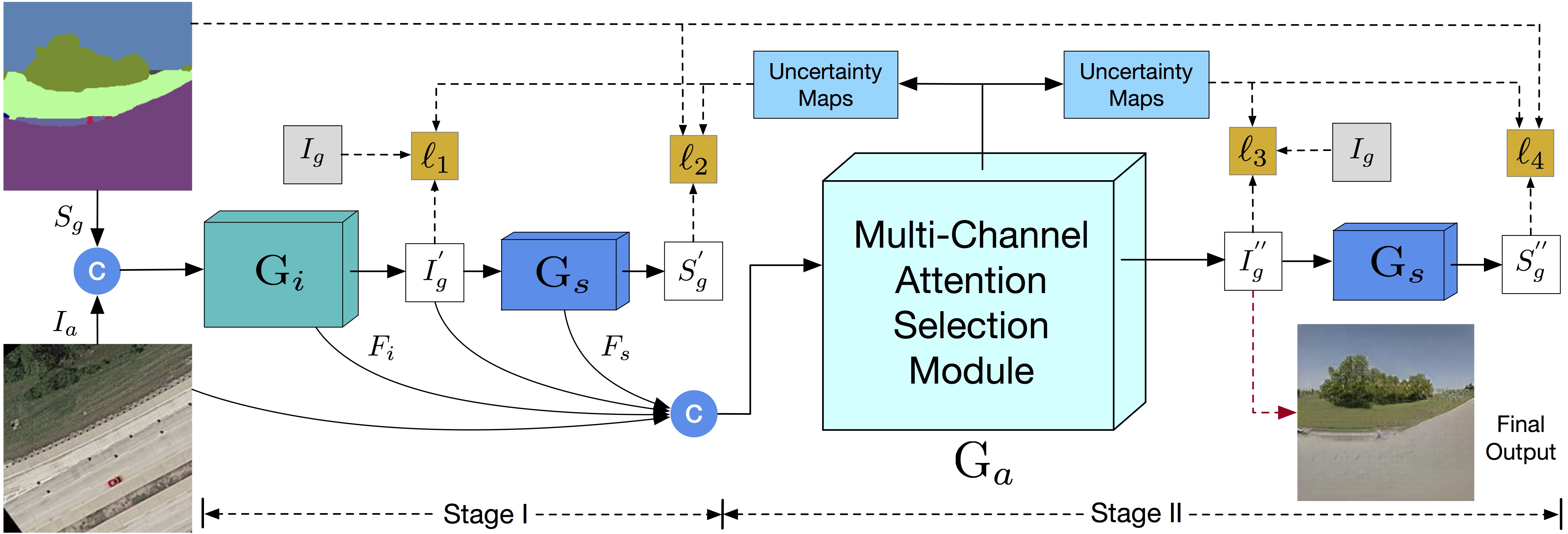

SelectionGAN Framework

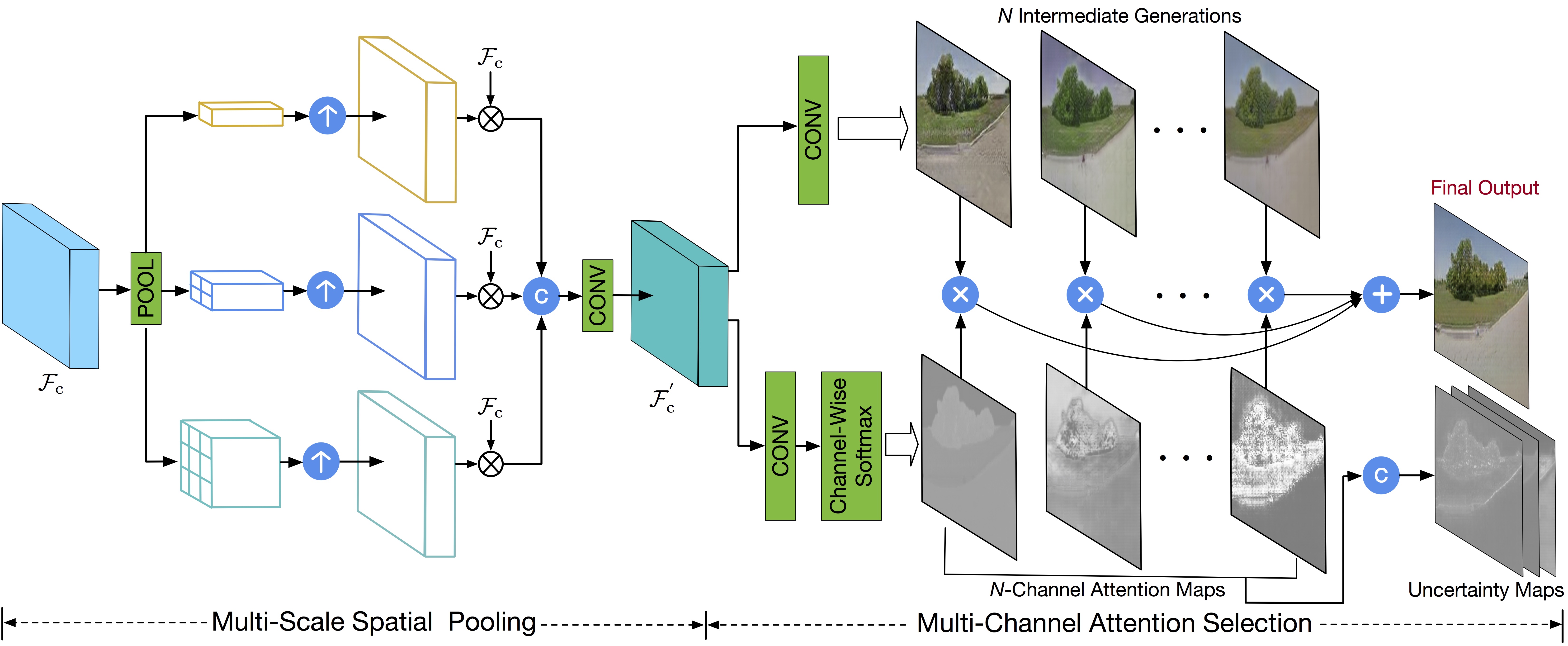

Multi-Channel Attention Selection Module
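As we read the paper's description of this module, it fuses N intermediate generations with N attention maps. The maps are normalized by a softmax across the N channels at every pixel, so the final image is a per-pixel weighted sum of the candidates. The testing step later in this README saves 10 of each. The NumPy sketch below illustrates only that fusion step under these assumptions. The function name `attention_select` and the array layouts are hypothetical, and the repository's own PyTorch code remains the authoritative implementation.

```python
import numpy as np

def attention_select(intermediates, attention_logits):
    """Fuse N candidate images with N attention maps (illustrative sketch).

    intermediates:    array of shape (N, H, W, C), the N intermediate images
    attention_logits: array of shape (N, H, W), unnormalized attention scores
    Returns an (H, W, C) image in which every pixel is a convex combination
    of the N candidates, weighted by a softmax over the N attention channels.
    """
    # Subtract the per-pixel maximum before exponentiating for numerical stability.
    logits = attention_logits - attention_logits.max(axis=0, keepdims=True)
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)  # softmax across N at every pixel
    # Broadcast the (N, H, W, 1) weights over the colour channels and sum over N.
    return (weights[..., None] * intermediates).sum(axis=0)

rng = np.random.default_rng(0)
fused = attention_select(rng.random((10, 4, 4, 3)), rng.standard_normal((10, 4, 4)))
print(fused.shape)  # (4, 4, 3)
```

Because the weights at each pixel sum to one, the fused pixel always lies within the range spanned by the candidates at that location.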


Project page | Paper | Slides | Video | Poster

Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation.

Hao Tang (1, 2)*, Dan Xu (3)*, Nicu Sebe (1, 4), Yanzhi Wang (5), Jason J. Corso (6) and Yan Yan (2). (* Equal contribution.)

(1) University of Trento, Italy; (2) Texas State University, USA; (3) University of Oxford, UK;

(4) Huawei Technologies Ireland, Ireland; (5) Northeastern University, USA; (6) University of Michigan, USA

In CVPR 2019 (Oral).

The repository offers the official implementation of our paper in PyTorch.

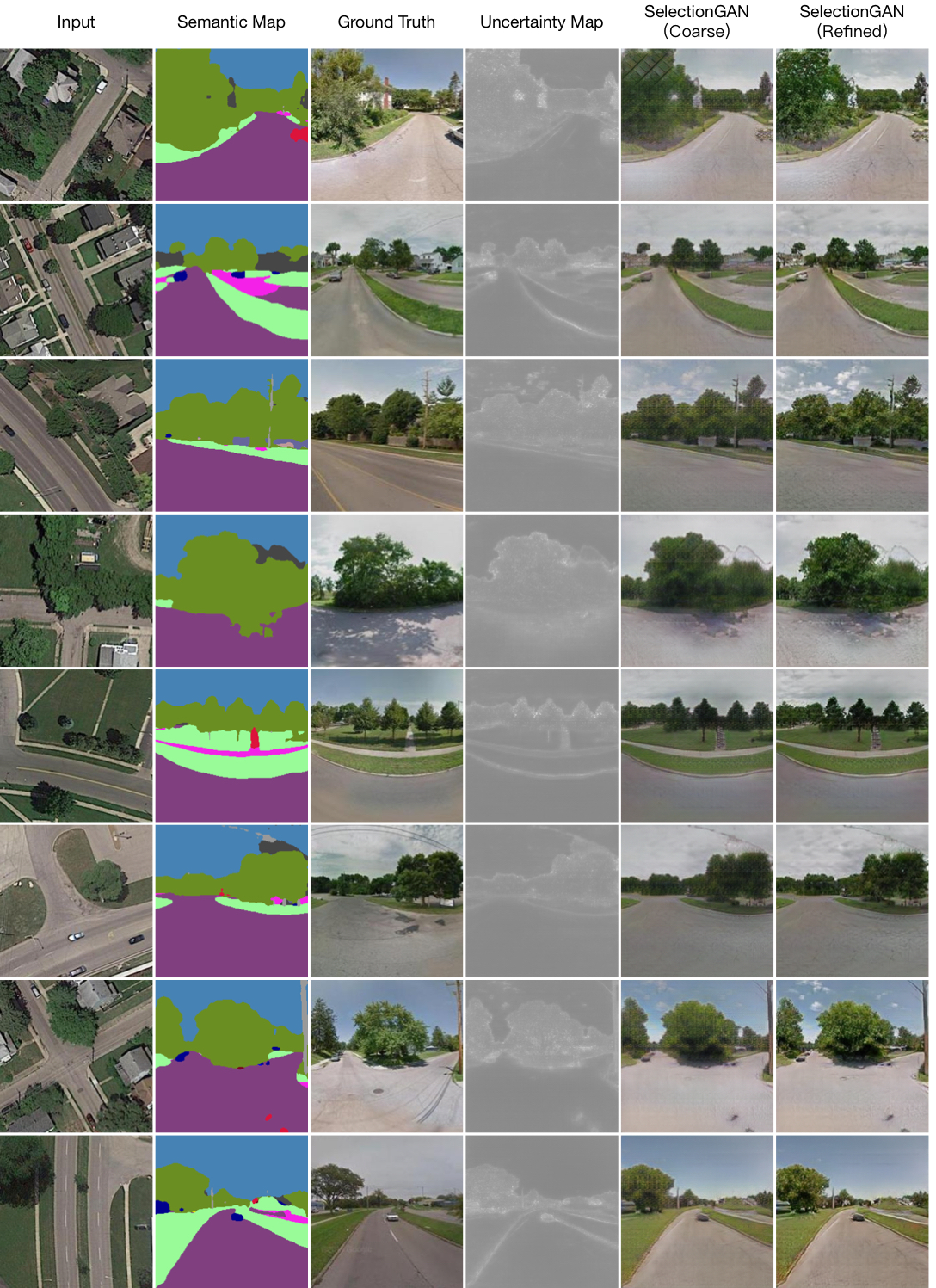

Given an image and some novel semantic maps, SelectionGAN is able to generate the same scene image but with different viewpoints.


License

Copyright (C) 2019 University of Trento, Italy and Texas State University, USA.

All rights reserved. Licensed under the CC BY-NC-SA 4.0 (Attribution-NonCommercial-ShareAlike 4.0 International).

The code is released for academic research use only. For commercial use, please contact hao.tang@unitn.it.

Installation

Clone this repo.

```bash
git clone https://github.com/Ha0Tang/SelectionGAN
cd SelectionGAN/
```

This code requires PyTorch 0.4.1 and Python 3.6+. Please install dependencies by

```bash
pip install -r requirements.txt  # for pip users
```

or

```bash
./scripts/conda_deps.sh  # for Conda users
```

To reproduce the results reported in the paper, you would need an NVIDIA GeForce GTX 1080 Ti GPU with 11GB memory.

Dataset Preparation

For Dayton, CVUSA, or Ego2Top, the datasets must be downloaded beforehand. Please download them from their respective webpages. In addition, we include a few sample images in this repository. Please cite their papers if you use the data.

Preparing Ablation Dataset. We conduct the ablation study in the a2g (aerial-to-ground) direction on the Dayton dataset. To reduce training time, we randomly select 1/3 of the 55,000 training and 21,048 testing samples, i.e., around 18,334 samples for training and 7,017 samples for testing. The training and testing splits can be downloaded here.
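To reproduce the ablation numbers, use the released splits. Purely to illustrate the subsampling step described above, the sketch below draws a reproducible random one-third subset from a list of sample IDs. The helper `sample_third`, the seed, and the ID format are hypothetical, and the subset it draws will not match the released split.

```python
import random

def sample_third(sample_ids, seed=0):
    """Return a reproducible random subset with 1/3 of sample_ids (rounded), sorted."""
    rng = random.Random(seed)         # a fixed seed gives the same subset on every run
    k = round(len(sample_ids) / 3)    # number of samples to keep
    return sorted(rng.sample(sample_ids, k))

# Hypothetical zero-padded IDs for the full Dayton training set.
train_ids = [f"{i:05d}" for i in range(55000)]
ablation_train = sample_third(train_ids)
print(len(ablation_train))
```

Sorting the result keeps the split file stable and easy to diff, independent of the order in which `random.sample` returns items.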

Preparing Dayton Dataset. The dataset can be downloaded here. In particular, you will need to download dayton.zip. Ground-truth semantic maps are not available for this dataset. We use RefineNet trained on the Cityscapes dataset to generate semantic maps and use them as training data in our experiments. Please cite their papers if you use this dataset. Train/test splits for the Dayton dataset can be downloaded from here.

Preparing CVUSA Dataset. The dataset can be downloaded here (originally released on this page). After unzipping the dataset, prepare the training and testing data as described in our paper. We also convert the semantic maps to color maps using this script. Since there are no semantic maps for the aerial images in this dataset, we use black images as placeholder aerial semantic maps.

Preparing Ego2Top Dataset. The dataset can be downloaded here; it comes from this paper. We further use this tool to generate the semantic maps for training. The training and testing splits can be downloaded here.

Preparing Your Own Datasets. You can also use SelectionGAN on your own datasets and tasks. Each training sample contains {Ia, Ig, Sa, Sg}, where Ia is the aerial image, Ig is the ground image, Sa is the semantic map of the aerial image, and Sg is the semantic map of the ground image.
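pix2pix's aligned dataset mode reads each training pair as a single image with the inputs placed side by side. Assuming SelectionGAN's aligned loader follows the same idea for the four images {Ia, Ig, Sa, Sg}, a sample could be assembled as in the sketch below. The [Ia | Ig | Sa | Sg] order, the equal-size requirement, and the helper name `make_sample` are all assumptions. Check the loader in data/ and the sample images shipped with the repo before converting a whole dataset. Passing `sa=None` substitutes the black placeholder that the CVUSA preparation uses for the missing aerial semantic maps.

```python
import numpy as np

def make_sample(ia, ig, sa, sg):
    """Hypothetical helper: place {Ia, Ig, Sa, Sg} side by side as one H x 4W x C array.

    ASSUMPTION: the loader expects the order [Ia | Ig | Sa | Sg]; verify against data/.
    If sa is None, a black image with Ia's shape and dtype is used as a placeholder.
    """
    if sa is None:
        sa = np.zeros_like(ia)  # black placeholder aerial semantic map (CVUSA-style)
    parts = [ia, ig, sa, sg]
    shapes = {p.shape for p in parts}
    if len(shapes) != 1:
        raise ValueError(f"all four images must share one shape, got {shapes}")
    return np.concatenate(parts, axis=1)  # concatenate along the width axis
```

The resulting uint8 array can then be written with any image library, for example PIL's `Image.fromarray(sample).save(path)`.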

Generating Images Using Pretrained Model

Once the dataset is ready, the result images can be generated using the pretrained models.

- You can download a pretrained model (e.g. cvusa) with the following script:

```bash
bash ./scripts/download_selectiongan_model.sh cvusa
```

The pretrained model is saved at ./checkpoints/[type]_pretrained. Check here for all the available SelectionGAN models.

- Generate images using the pretrained model.

```bash
python test.py --dataroot [path_to_dataset] \
  --name [type]_pretrained \
  --model selectiongan \
  --which_model_netG unet_256 \
  --which_direction AtoB \
  --dataset_mode aligned \
  --norm batch \
  --gpu_ids 0 \
  --batchSize [BS] \
  --loadSize [LS] \
  --fineSize [FS] \
  --no_flip \
  --eval
```

[path_to_dataset] is the path to the dataset; the dataset can be one of dayton, cvusa, or ego2top. [type]_pretrained is the directory name of the checkpoint downloaded in Step 1, which should be one of dayton_a2g_64_pretrained, dayton_g2a_64_pretrained, dayton_a2g_256_pretrained, dayton_g2a_256_pretrained, cvusa_pretrained, and ego2top_pretrained. If you are running in CPU mode, change --gpu_ids 0 to --gpu_ids -1. For [BS, LS, FS] (batch size, load size, and crop size), use:

- dayton_a2g_64_pretrained: [16, 72, 64]
- dayton_g2a_64_pretrained: [16, 72, 64]
- dayton_a2g_256_pretrained: [4, 286, 256]
- dayton_g2a_256_pretrained: [4, 286, 256]
- cvusa_pretrained: [4, 286, 256]
- ego2top_pretrained: [8, 286, 256]

Note that testing requires a large amount of disk space, because the model writes 10 intermediate images and 10 attention maps to disk for each input. If you don't have enough space, append --saveDisk to the command line.

- The output images are stored at ./results/[type]_pretrained/ by default. You can view them using the autogenerated HTML file in that directory.
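When switching between the six pretrained checkpoints, it is easy to pair a model with the wrong [BS, LS, FS] values. The sketch below fills them in from the per-checkpoint list and assembles the test.py command using only the flags shown in this README. The helper `build_test_cmd`, the `SIZES` table name, and the example dataset path are hypothetical.

```python
import shlex

# [BS, LS, FS] per pretrained checkpoint, taken from the list in this README.
SIZES = {
    "dayton_a2g_64_pretrained": (16, 72, 64),
    "dayton_g2a_64_pretrained": (16, 72, 64),
    "dayton_a2g_256_pretrained": (4, 286, 256),
    "dayton_g2a_256_pretrained": (4, 286, 256),
    "cvusa_pretrained": (4, 286, 256),
    "ego2top_pretrained": (8, 286, 256),
}

def build_test_cmd(dataroot, name, use_gpu=True):
    """Hypothetical helper: return the test.py argument list for one pretrained model."""
    bs, ls, fs = SIZES[name]  # raises KeyError for an unknown checkpoint name
    return [
        "python", "test.py",
        "--dataroot", dataroot,
        "--name", name,
        "--model", "selectiongan",
        "--which_model_netG", "unet_256",
        "--which_direction", "AtoB",
        "--dataset_mode", "aligned",
        "--norm", "batch",
        "--gpu_ids", "0" if use_gpu else "-1",  # -1 selects CPU mode
        "--batchSize", str(bs),
        "--loadSize", str(ls),
        "--fineSize", str(fs),
        "--no_flip",
        "--eval",
    ]

print(shlex.join(build_test_cmd("./datasets/cvusa", "cvusa_pretrained")))
```

From the repository root, the returned list can be passed directly to `subprocess.run(cmd, check=True)`, which avoids shell-quoting problems with unusual dataset paths.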

Training New Models

New models can be trained with the following commands.

- Prepare the dataset.

- Train.

```bash
# To train on the dayton dataset at 64×64 resolution:
python train.py --dataroot [path_to_dayton_dataset] \
  --name [experiment_name] \
  --model selectiongan \
  --which_model_netG unet_256 \
  --which_direction AtoB \
  --dataset_mode aligned \
  --norm batch \
  --gpu_ids 0 \
  --batchSize 16 \
  --niter 50 \
  --niter_decay 50 \
  --loadSize 72 \
  --fineSize 64 \
  --no_flip \
  --lambda_L1 100 \
  --lambda_L1_seg 1 \
  --display_winsize 64 \
  --display_id 0
```

```bash
# To train on the datasets at 256×256 resolution:
python train.py --dataroot [path_to_dataset] \
  --name [experiment_name] \
  --model selectiongan \
  --which_model_netG unet_256 \
  --which_direction AtoB \
  --dataset_mode aligned \
  --norm batch \
  --gpu_ids 0 \
  --batchSize [BS] \
  --loadSize [LS] \
  --fineSize [FS] \
  --no_flip \
  --display_id 0 \
  --lambda_L1 100 \
  --lambda_L1_seg 1
```

- For the dayton dataset, [BS, LS, FS] = [4, 286, 256]; append --niter 20 --niter_decay 15.
- For the cvusa dataset, [BS, LS, FS] = [4, 286, 256]; append --niter 15 --niter_decay 15.
- For the ego2top dataset, [BS, LS, FS] = [8, 286, 256]; append --niter 5 --niter_decay 5.
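Following the pix2pix convention this code builds on, training runs for --niter + --niter_decay epochs in total: 100 for dayton at 64×64, and 35, 30, and 10 for dayton, cvusa, and ego2top at 256×256 with the settings above. Assuming SelectionGAN keeps pix2pix's default linear-decay learning-rate rule (the `lambda` policy; check whether options/ exposes `--lr_policy`), the multiplier applied to the base learning rate can be sketched as follows. The formula mirrors pix2pix's lambda rule, and the function name `lr_factor` is ours.

```python
def lr_factor(epoch, niter, niter_decay, epoch_count=1):
    """Multiplier on the base learning rate under a pix2pix-style linear policy.

    `epoch` is the scheduler's 0-based epoch index. The factor stays at 1.0
    early on and then decreases linearly, reaching 0.0 at the final epoch.
    """
    return 1.0 - max(0, epoch + 1 + epoch_count - niter) / float(niter_decay + 1)

# Dayton at 256x256: --niter 20 --niter_decay 15, i.e. 35 epochs in total.
schedule = [lr_factor(e, niter=20, niter_decay=15) for e in range(20 + 15)]
print(schedule[0], schedule[-1])  # 1.0 0.0
```

Because `epoch_count` enters the formula, resuming with a larger --epoch_count shifts the schedule so the decay continues from where the earlier run stopped.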

There are many options you can specify; run python train.py --help to list them. The specified options are printed to the console. To choose which GPUs to use, set export CUDA_VISIBLE_DEVICES=[GPU_ID]. Training takes about one week with the default --batchSize on one NVIDIA GeForce GTX 1080 Ti GPU, so we suggest using a larger --batchSize; note, however, that performance has not been tested with a larger --batchSize.

To view training results and loss plots on your local computer, set --display_id to a non-zero value, run python -m visdom.server in a new terminal, and open the URL http://localhost:8097.

On a remote server, replace localhost with your server's name, such as http://server.trento.cs.edu:8097.

Can I continue/resume my training?

To fine-tune a pre-trained model or resume a previous training run, use the flags --continue_train --which_epoch <int> --epoch_count <int+1>. The program then loads the model saved at epoch <int>, as set by --which_epoch <int>. Setting --epoch_count <int+1> makes the epoch count start from <int+1> instead of the default.
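Picking the right <int> by hand is error-prone when many checkpoints exist. The sketch below scans a checkpoint directory for the newest numbered checkpoint and returns the resume flags. It assumes pix2pix's file naming `<epoch>_net_<name>.pth` inside ./checkpoints/[experiment_name]/. The helper `resume_flags` and its default `net_name="G"` are hypothetical, so confirm the file names in your own checkpoint folder first.

```python
import re
import tempfile
from pathlib import Path

def resume_flags(checkpoint_dir, net_name="G"):
    """Hypothetical helper: build resume flags from the newest '<epoch>_net_<name>.pth'.

    'latest_net_<name>.pth' is ignored because it carries no epoch number.
    """
    pattern = re.compile(rf"^(\d+)_net_{re.escape(net_name)}\.pth$")
    epochs = [int(m.group(1)) for p in Path(checkpoint_dir).iterdir()
              if (m := pattern.match(p.name))]
    if not epochs:
        raise FileNotFoundError(f"no numbered *_net_{net_name}.pth files in {checkpoint_dir}")
    last = max(epochs)
    return ["--continue_train", "--which_epoch", str(last), "--epoch_count", str(last + 1)]

# Demo on a throwaway directory with pix2pix-style file names.
demo = tempfile.mkdtemp()
for fname in ["5_net_G.pth", "20_net_G.pth", "latest_net_G.pth"]:
    (Path(demo) / fname).touch()
print(resume_flags(demo))  # ['--continue_train', '--which_epoch', '20', '--epoch_count', '21']
```

Append the returned flags to the original training command, keeping --name and all other options unchanged.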

Testing

Testing is similar to testing pretrained models.

```bash
python test.py --dataroot [path_to_dataset] \
  --name [type]_pretrained \
  --model selectiongan \
  --which_model_netG unet_256 \
  --which_direction AtoB \
  --dataset_mode aligned \
  --norm batch \
  --gpu_ids 0 \
  --batchSize [BS] \
  --loadSize [LS] \
  --fineSize [FS] \
  --no_flip \
  --eval
```

Use --how_many to specify the maximum number of images to generate. By default, the latest checkpoint is loaded; use --which_epoch to load a different one.

Code Structure

- train.py, test.py: the entry points for training and testing.
- models/selectiongan_model.py: creates the networks and computes the losses.
- models/networks/: defines the architecture of all models for SelectionGAN.
- options/: creates option lists using the argparse package. More options are dynamically added in other files as well.
- data/: defines the classes for loading images and semantic maps.
- scripts/evaluation: source code for several evaluation metrics.

Evaluation Code

We use several metrics to evaluate the quality of the generated images.

- Inception Score (IS): requires Python 2.7
- Top-k prediction accuracy (Acc): requires Python 2.7
- KL score (KL): requires Python 2.7
- Structural Similarity (SSIM): requires Lua
- Peak Signal-to-Noise Ratio (PSNR): requires Lua
- Sharpness Difference (SD): requires Lua
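The numbers in the paper come from the Python 2.7 and Lua evaluation code listed above. For a quick sanity check in Python 3, the standard PSNR definition, PSNR = 10 · log10(MAX² / MSE), can be sketched as below. The sketch assumes 8-bit images (MAX = 255) compared over all channels. It may differ from the official scripts in details such as color conversion or cropping, so its values should not be mixed with those reported in the paper. The function name `psnr` is ours.

```python
import numpy as np

def psnr(generated, target, max_value=255.0):
    """Peak Signal-to-Noise Ratio in dB between two images of the same shape."""
    g = np.asarray(generated, dtype=np.float64)
    t = np.asarray(target, dtype=np.float64)
    if g.shape != t.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((g - t) ** 2)  # mean squared error over all pixels and channels
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))
```

Casting to float64 before subtracting avoids the silent wrap-around that uint8 arithmetic would cause.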

We also provide the image IDs used in our paper here for further qualitative comparison.

Citation

If you use this code for your research, please cite our paper.

```bibtex
@inproceedings{tang2019multichannel,
  title={Multi-Channel Attention Selection GAN with Cascaded Semantic Guidance for Cross-View Image Translation},
  author={Tang, Hao and Xu, Dan and Sebe, Nicu and Wang, Yanzhi and Corso, Jason J. and Yan, Yan},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2019}
}
```

Acknowledgments

This source code borrows heavily from Pix2pix. We thank the authors of X-Fork & X-Seq for providing the evaluation code. This research was partially supported by National Institute of Standards and Technology Grant 60NANB17D191 (YY, JC), Army Research Office W911NF-15-1-0354 (JC) and a gift donation from Cisco Inc. (YY).

Related Projects

- X-Seq & X-Fork (CVPR 2018, Torch)

- Pix2pix (CVPR 2017, PyTorch)

- CrossNet (CVPR 2017, Tensorflow)

- GestureGAN (ACM MM 2018, PyTorch)

To Do List

- SelectionGAN--

- SelectionGAN

- SelectionGAN++

- Pix2pix++

- X-Fork++

- X-Seq++

Contributions

If you have any questions, comments, or bug reports, feel free to open a GitHub issue or pull request, or e-mail the author Hao Tang (hao.tang@unitn.it).