This repo reproduces the original World Models implementation using TensorFlow 2.2.

We set up the project and ran through the complete CarRacing learning pipeline.

`extract.py` uses 4 workers that each generate 160 scenes (`.npz` files), 640 scenes altogether. Each scene contains at least 100 and at most 1000 frames and is saved in the `record` folder.
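As a rough illustration of what one scene file could look like, the sketch below saves a single rollout with `np.savez_compressed` and enforces the 100 to 1000 frame bounds described for extract.py. The `obs`/`action` key names, the 64x64x3 frame shape, the 3-dimensional action, and the `save_scene` helper are assumptions for illustration, not the repo's actual file format.

```python
import os
import tempfile

import numpy as np

# Frame-count bounds quoted for extract.py in this README.
MIN_FRAMES, MAX_FRAMES = 100, 1000


def save_scene(record_dir, scene_id, frames, actions):
    """Save one rollout as a compressed .npz file (hypothetical layout)."""
    n = len(frames)
    if not MIN_FRAMES <= n <= MAX_FRAMES:
        raise ValueError(f"scene has {n} frames, expected {MIN_FRAMES}-{MAX_FRAMES}")
    path = os.path.join(record_dir, f"{scene_id}.npz")
    # Assumed keys: uint8 frames under "obs", float32 actions under "action".
    np.savez_compressed(
        path,
        obs=np.asarray(frames, dtype=np.uint8),
        action=np.asarray(actions, dtype=np.float32),
    )
    return path


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_frames = 120
    frames = rng.integers(0, 256, size=(n_frames, 64, 64, 3), dtype=np.uint8)
    actions = rng.uniform(-1.0, 1.0, size=(n_frames, 3))
    with tempfile.TemporaryDirectory() as record_dir:
        path = save_scene(record_dir, "worker0_scene0", frames, actions)
        with np.load(path) as data:
            print(data["obs"].shape, data["action"].shape)  # (120, 64, 64, 3) (120, 3)
```

With 4 workers producing 160 such files each, the `record` folder ends up with 640 scene files.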

`vae_train.py` loads and unpacks all the `.npz` files from the `record` folder and trains the VAE with `batch_size=100`, `learning_rate=0.0001`, `kl_tolerance=0.5`, and `epoch_num=10`. The TensorFlow training summary is here, and the saved model is here.
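The `kl_tolerance` argument points to a floored KL term. Below is a minimal NumPy sketch of such a VAE loss, assuming the clamp used in the original World Models code (each example's KL is floored at `kl_tolerance * z_size`) and a summed squared-error reconstruction term; `vae_loss` is a hypothetical name, and the repo's vae_train.py may differ in detail.

```python
import numpy as np


def vae_loss(x, x_recon, mu, logvar, kl_tolerance=0.5):
    """Squared-error reconstruction loss plus a floored KL term (NumPy sketch).

    x, x_recon: (batch, H, W, C) images; mu, logvar: (batch, z_size).
    """
    # Sum squared error over pixels and channels, then average over the batch.
    r_loss = np.mean(np.sum(np.square(x - x_recon), axis=(1, 2, 3)))
    # Per-example KL(N(mu, exp(logvar)) || N(0, I)), summed over latent dims.
    kl = -0.5 * np.sum(1.0 + logvar - np.square(mu) - np.exp(logvar), axis=1)
    # Floor each example's KL at kl_tolerance * z_size before averaging.
    kl = np.maximum(kl, kl_tolerance * mu.shape[1])
    kl_loss = np.mean(kl)
    return r_loss + kl_loss, r_loss, kl_loss


if __name__ == "__main__":
    x = np.zeros((2, 64, 64, 3))
    mu, logvar = np.zeros((2, 32)), np.zeros((2, 32))  # assumed z_size = 32
    print(vae_loss(x, x, mu, logvar))
```

With `mu = 0` and `logvar = 0` the raw KL is 0, so with an assumed 32-dimensional latent the floor reports 0.5 * 32 = 16. In a TensorFlow version of this loss, the KL term contributes no gradient while it sits below that floor, which leaves the encoder free to use that much information.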

`series.py` handles encoding and decoding of the batch data; the resulting series data is here.
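One common way to use such series data is to store each frame's VAE `mu` and `logvar` and sample latent vectors from them when training the RNN. The sketch below shows that encode-then-sample step, assuming the series data holds per-frame `mu` and `logvar`; `encode_episode`, `sample_z`, `toy_encoder`, and the 32-dimensional latent are hypothetical stand-ins, not the repo's code.

```python
import numpy as np


def encode_episode(frames, encoder):
    """Turn uint8 frames into per-frame (mu, logvar) with a given encoder."""
    x = frames.astype(np.float32) / 255.0  # scale pixels to [0, 1]
    return encoder(x)


def sample_z(mu, logvar, rng):
    """Reparameterised sample: z = mu + exp(logvar / 2) * eps, eps ~ N(0, I)."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(logvar / 2.0) * eps


def toy_encoder(x, z_size=32):
    # Hypothetical stand-in for the trained VAE encoder: takes the first
    # z_size flattened pixel values as mu and uses unit variance (logvar = 0).
    mu = x.reshape(len(x), -1)[:, :z_size]
    return mu, np.zeros_like(mu)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.integers(0, 256, size=(10, 64, 64, 3), dtype=np.uint8)
    mu, logvar = encode_episode(frames, toy_encoder)
    z = sample_z(mu, logvar, rng)
    print(mu.shape, z.shape)  # (10, 32) (10, 32)
```

Storing `mu` and `logvar` instead of a single `z` lets each training pass draw fresh latent samples from the same encoded episode.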

`rnn_train.py` trains the RNN on the series data with `steps=4000`, `max_seq_len=1000`, `rnn_size=256`, `learning_rate=0.001`, `decay_rate=1.0`, and `temperature=1.0`. The TensorFlow training summary is here, and the saved model is here.
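In World Models the RNN is an MDN-RNN: for each latent dimension it predicts a Gaussian mixture, and the `temperature` argument controls how random samples from it are. A NumPy sketch of temperature-scaled mixture sampling in the style of the original World Models code, assuming `(z_dim, n_mix)` arrays of log mixture weights, means, and log standard deviations; `sample_mdn` and the sizes in the demo are hypothetical.

```python
import numpy as np


def sample_mdn(logmix, mean, logstd, temperature, rng):
    """Draw one latent vector from per-dimension Gaussian mixtures.

    logmix, mean, logstd: arrays of shape (z_dim, n_mix).
    """
    # Temperature-scaled softmax over the mixture components of each dimension.
    logits = logmix / temperature
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)

    z_dim, n_mix = mean.shape
    rows = np.arange(z_dim)
    # Choose one component per latent dimension.
    idx = np.array([rng.choice(n_mix, p=probs[i]) for i in rows])
    # Widen (temperature > 1) or narrow (< 1) the chosen Gaussian by sqrt(temperature).
    std = np.exp(logstd[rows, idx]) * np.sqrt(temperature)
    return mean[rows, idx] + std * rng.standard_normal(z_dim)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    z_dim, n_mix = 32, 5  # assumed sizes
    logmix = rng.standard_normal((z_dim, n_mix))
    mean = rng.standard_normal((z_dim, n_mix))
    logstd = np.full((z_dim, n_mix), -1.0)
    print(sample_mdn(logmix, mean, logstd, temperature=1.0, rng=rng).shape)  # (32,)
```

Dividing the logits by τ flattens (τ > 1) or sharpens (τ < 1) the choice of component, and the chosen component's standard deviation is scaled by √τ, so `temperature=1.0` samples from the model as trained.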

`train.py` creates the real CarRacing environment and trains the controller with 4 workers. All evaluations and results are saved here.

Logs can be found here.
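In World Models the controller is a single linear layer on the concatenated latent `z` and RNN hidden state `h`, and its flat parameter vector is what the evolution strategy (CMA-ES in the original paper) optimizes across workers. A simplified sketch, assuming a 32-dimensional `z`, the `rnn_size=256` hidden state, and 3 CarRacing actions; it omits any action post-processing the real train.py may apply, and the helper names are hypothetical.

```python
import numpy as np

Z_SIZE = 32      # assumed VAE latent size
RNN_SIZE = 256   # rnn_size used by rnn_train.py
ACTION_SIZE = 3  # CarRacing actions: steering, gas, brake


def num_params(z_size=Z_SIZE, h_size=RNN_SIZE, a_size=ACTION_SIZE):
    """Weights plus biases of one linear layer from [z, h] to the action."""
    return (z_size + h_size) * a_size + a_size


def controller_action(params, z, h, a_size=ACTION_SIZE):
    """a = tanh(W [z; h] + b), with W and b unpacked from a flat vector."""
    inp = np.concatenate([z, h])
    n_w = inp.size * a_size
    W = params[:n_w].reshape(a_size, inp.size)
    b = params[n_w:n_w + a_size]
    return np.tanh(W @ inp + b)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    params = rng.standard_normal(num_params()) * 0.1  # one candidate solution
    z, h = rng.standard_normal(Z_SIZE), np.zeros(RNN_SIZE)
    print(num_params(), controller_action(params, z, h))
```

Under these assumptions `num_params()` gives (32 + 256) * 3 + 3 = 867, the controller size reported in the World Models paper for CarRacing. Keeping the controller this small is what makes a population-based optimizer practical to run across a handful of workers.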

`gan.py` or `vae-gan.ipynb`: we also looked into a GAN-based method and adapted it to our setting so that it trains on the data generated in the `record` folder, with `batch_size=32`, `Depth=32`, `Latent_depth=512`, `K_size=10`, `learning_rate=0.0001`, `normal_coefficient=0.1`, and `kl_coefficient=0.01`. The Discriminator, Encoder, and Generator weights can be found in the `saved-models` folder, and the training logs can be found here.

The easiest way to handle dependencies is with Nvidia-Docker. Follow the instructions below to build the image and attach to the container.

docker image build -t wm:1.0 -f docker/Dockerfile.wm .

docker container run -p 8888:8888 --gpus '"device=0"' --detach -it --name wm wm:1.0

docker attach wm

To visualize the environment from the agent's perspective, or to generate synthetic observations, use the visualizations Jupyter notebook. It can be launched from your container with the following command:

xvfb-run -s "-screen 0 1400x900x24" jupyter notebook --port=8888 --ip=0.0.0.0 --allow-root

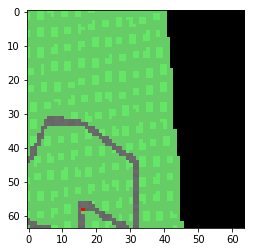

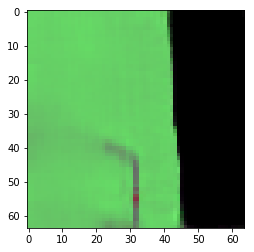

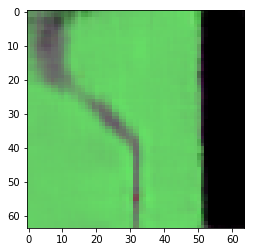

| Real Frame Sample | Reconstructed Real Frame | Imagined Frame |
|---|---|---|

| Ground Truth (CarRacing) | Reconstructed |
|---|---|

These instructions assume a machine with a 64-core CPU and a GPU. If you are running in the cloud, it will likely be cheaper to run the extraction and controller processes on a CPU machine and the VAE, preprocessing, and RNN tasks on a GPU machine.

CAUTION: The Doom environment leaves some processes running. In addition to running the Doom experiments, the script kills every process whose name includes 'vizdoom' (be careful with this if you are not running in a container). To reproduce the results for DoomTakeCover-v0, run the following bash script:

bash launch_scripts/wm_doom.bash

To reproduce the results for CarRacing-v0, run the following bash script:

bash launch_scripts/carracing.bash

I have not run this long enough (~45 days of wall-clock time) to verify that we reproduce the original implementation's results on CarRacing-v0.

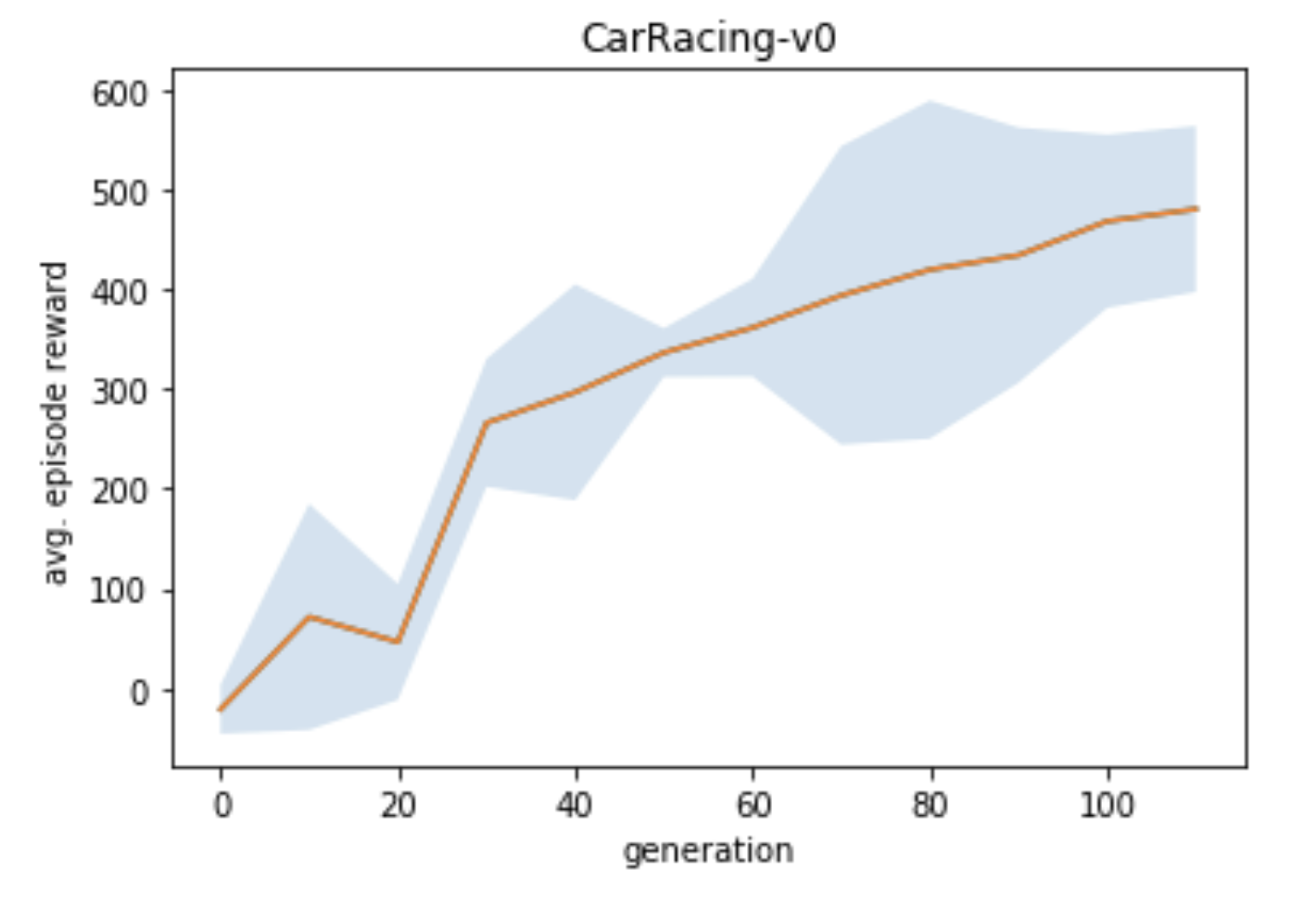

Average return curves comparing the original implementation and ours. The shaded area spans one standard deviation above and below the mean.
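To redraw such a curve from your own logs, the band is the per-point mean plus and minus one standard deviation. A sketch assuming a `(n_runs, n_points)` array of returns and the population standard deviation (`ddof=0`); `mean_std_band` is a hypothetical helper, and its outputs can be passed to matplotlib's `fill_between`.

```python
import numpy as np


def mean_std_band(returns):
    """Per-point mean and the mean +/- one standard deviation band.

    returns: array-like of shape (n_runs, n_points).
    """
    returns = np.asarray(returns, dtype=np.float64)
    mean = returns.mean(axis=0)
    std = returns.std(axis=0)  # population std (ddof=0), an assumption
    return mean, mean - std, mean + std


if __name__ == "__main__":
    mean, lower, upper = mean_std_band([[0.0, 2.0], [2.0, 4.0]])
    print(mean, lower, upper)  # [1. 3.] [0. 2.] [2. 4.]
```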

For simplicity, our Doom experiment implementation differs slightly from the original:

- We do not use weighted cross entropy loss for done predictions

- We train the RNN with sequences that always begin at the start of an episode (as opposed to random subsequences)

- We sample whether the agent dies (as opposed to a deterministic cut-off)
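The last difference above can be sketched as two ways of turning the RNN's predicted done probability into an episode end in the dream environment. The 0.5 threshold and both function names are assumptions for illustration.

```python
import numpy as np


def done_deterministic(p_done, threshold=0.5):
    """Deterministic cut-off: end the dream episode once p_done exceeds a threshold."""
    return p_done > threshold


def done_sampled(p_done, rng):
    """Sampled death: end the episode with probability p_done (a Bernoulli draw)."""
    return rng.random() < p_done


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    draws = [done_sampled(0.3, rng) for _ in range(10_000)]
    # The deterministic rule never ends at p_done = 0.3; sampling ends ~30% of steps.
    print(done_deterministic(0.3), np.mean(draws))
```

Sampling keeps some chance of death even when the predicted probability is low, so the agent cannot rely on the dream episode never ending just below the threshold.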

| Implementation | Temperature (τ) | Returns Dream Environment | Returns Actual Environment |
|---|---|---|---|
| D. Ha Original | 1.0 | 1145 +/- 690 | 868 +/- 511 |
| Eager | 1.0 | 1465 +/- 633 | 849 +/- 499 |