This repository contains an unofficial implementation of the paper "Phase Transitions, Distance Functions, and Implicit Neural Representations". It is built on top of the IGR codebase.

Representing surfaces as zero level sets of neural networks recently emerged as a powerful modeling paradigm, named Implicit Neural Representations (INRs), serving numerous downstream applications in geometric deep learning and 3D vision. Training INRs previously required choosing between occupancy and distance function representation and different losses with unknown limit behavior and/or bias. In this paper we draw inspiration from the theory of phase transitions of fluids and suggest a loss for training INRs that learns a density function that converges to a proper occupancy function, while its log transform converges to a distance function. Furthermore, we analyze the limit minimizer of this loss showing it satisfies the reconstruction constraints and has minimal surface perimeter, a desirable inductive bias for surface reconstruction. Training INRs with this new loss leads to state-of-the-art reconstructions on a standard benchmark.
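To make the "log transform" mentioned above concrete, here is a minimal NumPy sketch. It assumes the transform has the form w = -sqrt(eps) * log(1 - |u|) * sign(u), which is how I recall it from the paper; check the paper for the exact definition and sign convention. The function names, the parameter `eps`, and the 1D profile are illustrative and are not taken from this repository.

```python
import numpy as np


def log_transform(u, eps):
    """Map a smoothed occupancy u in (-1, 1) to an approximate signed distance.

    Assumed form (not taken from this repository):
        w = -sqrt(eps) * log(1 - |u|) * sign(u)
    """
    u = np.asarray(u, dtype=np.float64)
    return -np.sqrt(eps) * np.log(1.0 - np.abs(u)) * np.sign(u)


def smoothed_occupancy_1d(x, eps):
    """Illustrative 1D profile with a 'surface' at x = 0.

    It tends to sign(x), a sharp occupancy function, as eps -> 0.
    """
    x = np.asarray(x, dtype=np.float64)
    return np.sign(x) * (1.0 - np.exp(-np.abs(x) / np.sqrt(eps)))


if __name__ == "__main__":
    x = np.linspace(-1.0, 1.0, 11)
    u = smoothed_occupancy_1d(x, eps=0.01)
    w = log_transform(u, eps=0.01)
    # For this particular profile the transform recovers the signed distance x.
    print(np.max(np.abs(w - x)))
```

For this hand-picked profile the transform returns exactly the signed distance to the point x = 0, which illustrates the relationship between the occupancy-like density and the distance function described in the abstract.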

For more details:

- Changes to the IGR codebase that incorporate PHASE are confined to the following files:
  - reconstruction/run.py
  - reconstruction/setup.conf
  - model/network.py
  - utils/general.py

These changes are enclosed between the following markers in each file:

    # PHASE IMPLEMENTATION: START
    ...
    # PHASE IMPLEMENTATION: END

The code is compatible with Python 3.7 and PyTorch 1.2. In addition, the following packages are required:

    pip install numpy scipy pyhocon plotly scikit-image trimesh GPUtil open3d

PHASE-INR can be used to reconstruct a single surface given a point cloud with or without normal data. In reconstruction/setup.conf, set the input path to the location of the input 2D/3D point cloud:

    train {
        ...
        d_in = D
        ...
        input_path = your_path
        ...
    }

Here D is 3 for 3D data and 2 for 2D data. The supported input formats are xyz, npy, npz, and ply.
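As a rough illustration of how such inputs can be read, the sketch below loads a point cloud by file extension and splits it into points and optional normals. It is not the loader used in this repository: the function names are hypothetical, and the column layout (the first `d_in` columns are coordinates, the next `d_in` columns are normals if present) and the choice of the first array in an npz archive are assumptions.

```python
import numpy as np


def split_points_and_normals(data, d_in=3):
    """Split an (N, C) array into points and optional normals.

    Assumed layout: columns [0, d_in) are coordinates and, if the array is
    wide enough, columns [d_in, 2 * d_in) are normals.
    """
    data = np.asarray(data, dtype=np.float32)
    if data.ndim != 2 or data.shape[1] < d_in:
        raise ValueError("expected an (N, C) array with C >= d_in")
    points = data[:, :d_in]
    normals = data[:, d_in:2 * d_in] if data.shape[1] >= 2 * d_in else None
    return points, normals


def load_point_cloud(path, d_in=3):
    """Hypothetical loader that dispatches on the file extension."""
    ext = path.rsplit(".", 1)[-1].lower()
    if ext == "xyz":
        # Plain text, one point per line, whitespace-separated values.
        data = np.atleast_2d(np.loadtxt(path))
    elif ext == "npy":
        data = np.load(path)
    elif ext == "npz":
        with np.load(path) as archive:
            # Assumption: the first stored array holds the point cloud.
            data = archive[archive.files[0]]
    elif ext == "ply":
        import trimesh  # imported lazily; only needed for ply input
        data = np.asarray(trimesh.load(path).vertices)
    else:
        raise ValueError("unsupported extension: " + ext)
    return split_points_and_normals(data, d_in)
```

A call such as `points, normals = load_point_cloud("your_path", d_in=3)` would then return `normals` as `None` when the file carries no normal data.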

Additional hyperparameters can be tweaked in the reconstruction/setup.conf file.

Then, run training:

    cd ./code
    python reconstruction/run.py

Finally, to produce the meshed surface, run:

    cd ./code
    python reconstruction/run.py --eval --checkpoint CHECKPOINT

where CHECKPOINT is the epoch you wish to evaluate. Omit --checkpoint CHECKPOINT to evaluate the 'latest' epoch.

Chamfer distance is implemented in utils/general.py and can be called to evaluate the quality of the reconstructed mesh against a target mesh.
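For readers unfamiliar with the metric, the following is a minimal NumPy sketch of one common Chamfer distance convention: the average of the two mean nearest-neighbour distances between point sets sampled from each mesh. It is not the function in utils/general.py, which may use a different normalisation or squared distances, and the name `chamfer_distance` here is illustrative.

```python
import numpy as np


def chamfer_distance(a, b):
    """Symmetric Chamfer distance between point sets a (N, D) and b (M, D).

    Convention used in this sketch: mean nearest-neighbour Euclidean distance
    in each direction, averaged over the two directions.
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    # Pairwise Euclidean distances, shape (N, M). Brute force, so memory
    # grows as N * M; a KD-tree is preferable for large samples.
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    a_to_b = d.min(axis=1).mean()  # each point of a to its nearest point in b
    b_to_a = d.min(axis=0).mean()  # each point of b to its nearest point in a
    return 0.5 * (a_to_b + b_to_a)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    target = rng.normal(size=(200, 3))
    noisy = target + 0.01 * rng.normal(size=target.shape)
    print(chamfer_distance(noisy, target))
```

In practice the two point sets would be sampled from the surfaces of the reconstructed and target meshes, with a lower value indicating a closer match.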

A test run of this implementation is available in this Colab Notebook.

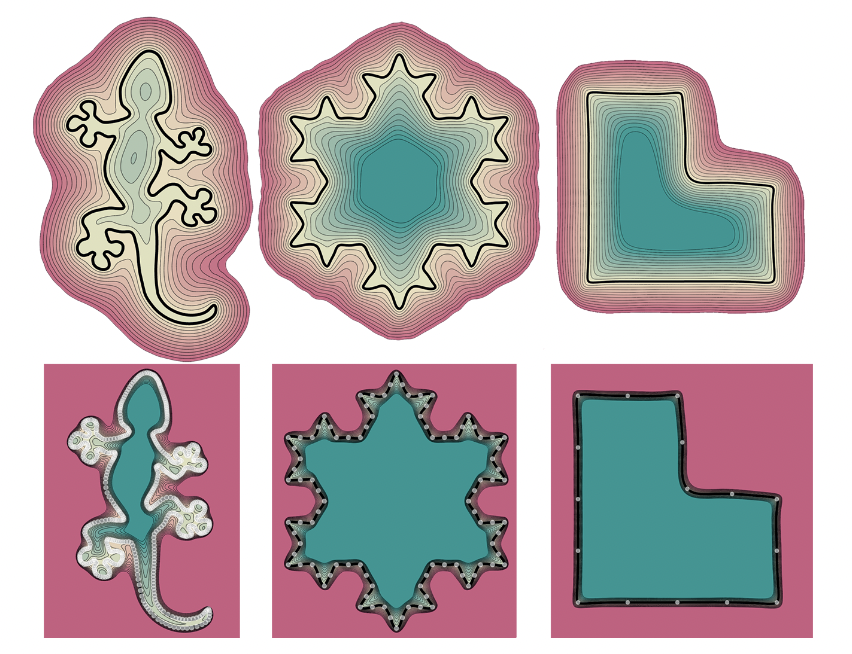

Because of computational constraints and access to only the Colab environment, I could only test it with a shorter training duration and a smaller network size (for more details, see the Implementation Paper). Below are some preliminary results after just 1000 epochs each for the vanilla PHASE implementation (left), the target mesh (middle), and PHASE with Fourier features (right).

To cite this implementation, use: