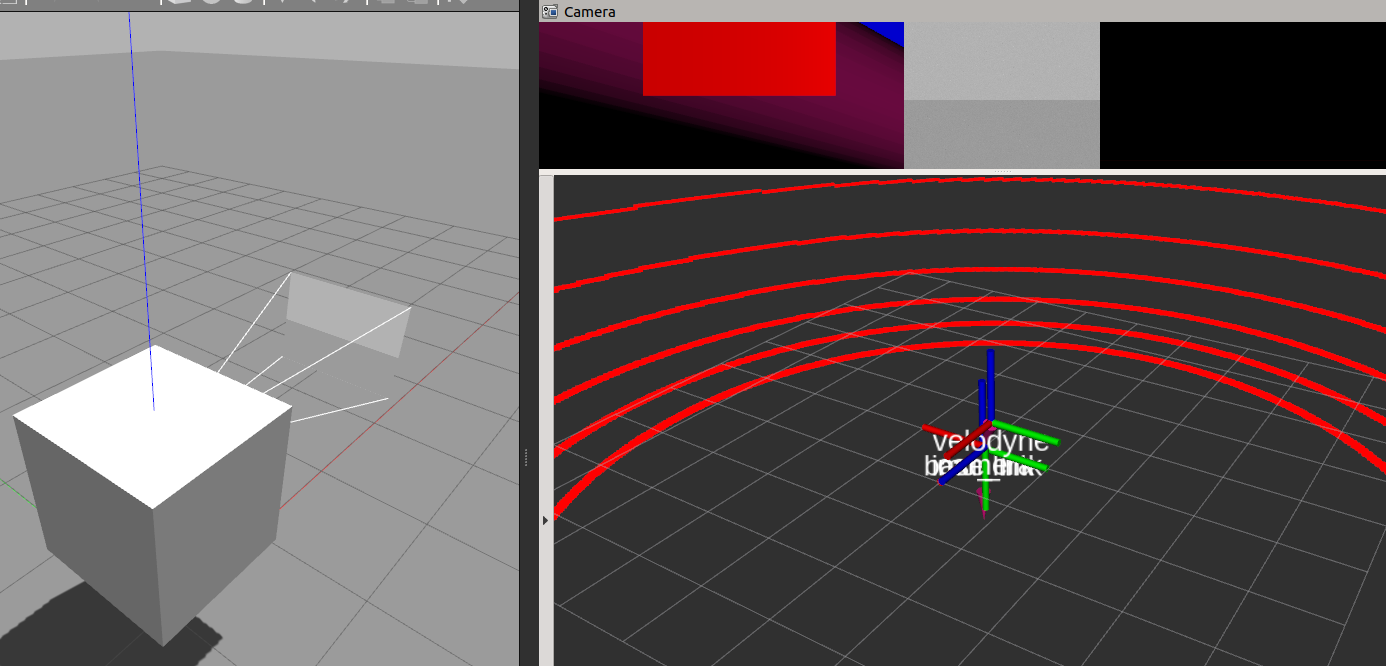

Developing or evaluating calibration algorithms requires ground truth to compare against. However, an exactly accurate reference is impossible to obtain with real sensors, whereas in simulation the ground truth is easy to get. This repo provides a cube carrying sensors that you can move however you like from an interactive terminal. It moves in all 6 DoF, so you can excite every axis and collect enough observations for calibration.

Dependencies:

- ROS (Melodic and Noetic are tested)

- Gazebo, in the version matching your ROS distribution

- (optional) Hector IMU Gazebo plugin

- (optional) Velodyne Gazebo plugin with GPU support

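Build the workspace (`catkin build` is provided by catkin_tools):

```bash
mkdir -p catkin_ws/src
cd catkin_ws/src
git clone <this repo.>
cd .. && catkin build
```

Run the demo:

```bash
cd catkin_ws
source devel/setup.bash
roslaunch obj_with_6dof demo.launch
```

You should then see an interactive terminal for controlling the motion of the platform: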

```text
Control Your Vehicle!
---------------------------
Moving around:
W/S: X-Axis
A/D: Y-Axis
X/Z: Z-Axis
Q/E: Yaw
I/K: Pitch
J/L: Roll
Trans Speed:
Slow / Fast: 1 / 2
Rot Speed:
Slow / Fast: 3 / 4
CTRL-C to quit
pose xyz: [0.0, 0.0, 0.0]
pose rpy: [0.0, 0.0, 0.0]
trans speed: 0.1, rot speed: 0.5
```

The package also provides `run.launch`:

```bash
roslaunch obj_with_6dof run.launch
```

The sensors and their extrinsics are defined in `urdf/robot.xacro`:

```xml
<!-- velodyne and its parameters-->
<xacro:include filename="$(find obj_with_6dof)/urdf/velodyne.xacro"/>
<xacro:VLP name="rslidar16" topic="lidar_points" organize_cloud="false" hz="10" gpu="true" visualize="false">
  <!-- extrinsics to imu -->
  <origin xyz="0.1 0.2 0.5" rpy="0 0 0" />
</xacro:VLP>

<!-- camera and its parameters-->
<xacro:include filename="$(find obj_with_6dof)/urdf/CameraSensor.xacro"/>
<xacro:Macro_CameraROS name="realsense" parent="base_link" frame="camera_fake" image_topic="image" fov="1.047198" hz="20" width="640" height="480">
  <!-- extrinsics to imu -->
  <origin xyz="0.1 0.1 0.1" rpy="0 0 0" />
</xacro:Macro_CameraROS>
```

If you don't need both sensors, feel free to comment out the one you don't need.
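The comments in the snippet state that both `<origin>` tags are extrinsics relative to the IMU. The ground-truth extrinsic between the two sensors themselves (for example, for LiDAR-camera calibration) then follows by composing transforms: T_lidar_cam = inverse(T_imu_lidar) · T_imu_cam. The plain-Python sketch below illustrates this under two assumptions: the `rpy` values follow URDF's fixed-axis roll-pitch-yaw convention, and the IMU frame is the parent of both sensor origins. The helper names (`rpy_to_matrix`, `from_origin`, `compose`, `invert`) are hypothetical and not part of this package, and the result refers to the sensor link frames as written in the xacro, not to any camera optical frame (z forward) the camera plugin may use.

```python
import math

# Rigid transforms are stored as (R, t): R is a 3x3 rotation (list of rows),
# t is the translation. T_a_b means "pose of frame b expressed in frame a".


def rpy_to_matrix(roll, pitch, yaw):
    """Rotation for URDF fixed-axis RPY: R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]


def from_origin(xyz, rpy):
    """Build (R, t) from the xyz/rpy attributes of a URDF <origin> tag."""
    return rpy_to_matrix(*rpy), list(xyz)


def compose(A, B):
    """Return A * B, i.e. T_a_c from T_a_b and T_b_c."""
    Ra, ta = A
    Rb, tb = B
    R = [[sum(Ra[i][k] * Rb[k][j] for k in range(3)) for j in range(3)] for i in range(3)]
    t = [sum(Ra[i][k] * tb[k] for k in range(3)) + ta[i] for i in range(3)]
    return R, t


def invert(T):
    """Inverse of a rigid transform: (R^T, -R^T t)."""
    R, t = T
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]
    return Rt, [-sum(Rt[i][k] * t[k] for k in range(3)) for i in range(3)]


# Values copied from the <origin> tags in urdf/robot.xacro.
T_imu_lidar = from_origin((0.1, 0.2, 0.5), (0.0, 0.0, 0.0))
T_imu_cam = from_origin((0.1, 0.1, 0.1), (0.0, 0.0, 0.0))

# Camera pose expressed in the LiDAR frame.
T_lidar_cam = compose(invert(T_imu_lidar), T_imu_cam)
print([round(v, 3) for v in T_lidar_cam[1]])  # → [0.0, -0.1, -0.4]
```

With the zero `rpy` values used here the result reduces to a difference of translations, but the helpers build full rotation matrices so the same composition stays correct once you set non-zero `rpy` in `robot.xacro`.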

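Record the sensor data to a bag:

```bash
rosbag record -O <your bag> /imu/data /lidar_points /image
```

The ground-truth extrinsics are available from TF. For example, to get the LiDAR frame with respect to the IMU frame: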

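```bash
rosrun tf tf_echo /imu_link /velodyne
```

which prints:

```text
At time 0.000
- Translation: [0.100, 0.200, 0.500]
- Rotation: in Quaternion [0.000, 0.000, 0.000, 1.000]
            in RPY (radian) [0.000, -0.000, 0.000]
            in RPY (degree) [0.000, -0.000, 0.000]
```

This matches the `<origin xyz="0.1 0.2 0.5" rpy="0 0 0" />` set for the LiDAR in `urdf/robot.xacro`.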
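`tf_echo` reports the rotation both as a quaternion in (x, y, z, w) order and as RPY. If you set a non-zero `rpy` in `urdf/robot.xacro`, the standard fixed-axis RPY-to-quaternion conversion tells you which quaternion to expect. The sketch below is plain Python written for illustration; the function name `rpy_to_quaternion` is hypothetical and is not an API of this package or of tf.

```python
import math


def rpy_to_quaternion(roll, pitch, yaw):
    """Quaternion (x, y, z, w) for fixed-axis RPY, i.e. q = q_z(yaw) * q_y(pitch) * q_x(roll)."""
    cr, sr = math.cos(roll / 2), math.sin(roll / 2)
    cp, sp = math.cos(pitch / 2), math.sin(pitch / 2)
    cy, sy = math.cos(yaw / 2), math.sin(yaw / 2)
    x = sr * cp * cy - cr * sp * sy
    y = cr * sp * cy + sr * cp * sy
    z = cr * cp * sy - sr * sp * cy
    w = cr * cp * cy + sr * sp * sy
    return (x, y, z, w)


# rpy="0 0 0" as in the LiDAR <origin>: matches tf_echo's [0.000, 0.000, 0.000, 1.000].
print(rpy_to_quaternion(0.0, 0.0, 0.0))  # → (0.0, 0.0, 0.0, 1.0)

# A hypothetical 90-degree yaw, rounded like tf_echo's output.
print([round(c, 3) for c in rpy_to_quaternion(0.0, 0.0, math.pi / 2)])  # → [0.0, 0.0, 0.707, 0.707]
```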