This repository provides PyTorch implementations, with training and testing code, of various human pose estimation architectures.

Authors: Naman Jain and Sahil Shah

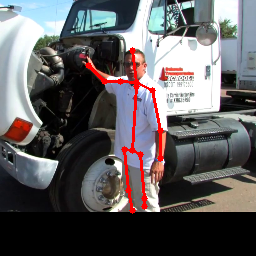

Some visualizations from pretrained models:

- DeepPose: Human Pose Estimation via Deep Neural Networks
  - Multiple ResNet/Inception base networks [Pretrained Models Available (MPII and COCO)]
- Stacked Hourglass Networks for Human Pose Estimation
  - Standard hourglass architecture [Pretrained Models Available (MPII and COCO)]
- Chained Predictions Using Convolutional Neural Networks
  - Sequential prediction of joints [Pretrained Models Available (MPII and COCO)]
- Multi-Context Attention for Human Pose Estimation (Pose-Attention)
  - Uses soft attention in heatmaps to locate joints
- Learning Feature Pyramids for Human Pose Estimation (PyraNet)
  - Pyramid residual modules, fractional max-pooling
- Human Pose Estimation with Iterative Error Feedback (IEF)
- Deeply Learned Compositional Models for Human Pose Estimation (DLCM)
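DeepPose regresses joint coordinates directly. Heatmap-based architectures such as the Stacked Hourglass instead predict one heatmap per joint. The sketch below is a minimal, framework-free illustration of that idea and is not taken from this repository. It assumes a 2D Gaussian target centered on the joint, where `sigma` is an arbitrary choice, and it decodes a joint by taking the heatmap's argmax. The function names `gaussian_heatmap` and `argmax_decode` are hypothetical.

```python
import math
from typing import List, Tuple

Heatmap = List[List[float]]  # indexed as heatmap[y][x]


def gaussian_heatmap(height: int, width: int, center: Tuple[float, float],
                     sigma: float = 1.0) -> Heatmap:
    """Render an unnormalized 2D Gaussian (peak value 1.0) centered at (x, y)."""
    cx, cy = center
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def argmax_decode(heatmap: Heatmap) -> Tuple[int, int]:
    """Return the (x, y) pixel holding the largest heatmap value."""
    best_value = -math.inf
    best_xy = (0, 0)
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value > best_value:
                best_value, best_xy = value, (x, y)
    return best_xy


if __name__ == "__main__":
    # A 64x64 target for a joint at x=20, y=37 decodes back to the same pixel.
    target = gaussian_heatmap(64, 64, center=(20, 37), sigma=2.0)
    print(argmax_decode(target))  # (20, 37)
```

Many heatmap implementations also refine the argmax with a sub-pixel offset. This sketch leaves that step out to keep the encode/decode round trip easy to follow.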

- pytorch == 0.4.1

- torchvision == 0.2.0

- scipy

- configargparse

- progress

- json_tricks

- Cython

Install them with:

```
pip install -r requirements.txt
```

To set up the MPII dataset, please follow this link and update the dataDir parameter in the mpii.defconf configuration file. Also download and unzip this folder, then update the paths for worldCoors and headSize in the same config file.

To set up the COCO dataset, please follow this link and update the dataDir parameter in coco.defconf.

Two parameters are required for running: DataConfig and ModelConfig.

Config files for both datasets (MPII and COCO) are provided in the conf/datasets folder.

Config files for all implemented models are provided in the conf/models folder.

To train a model, use:

```
python main.py -DataConfig conf/datasets/[DATA].defconf -ModelConfig conf/models/[MODEL_NAME].defconf
```

- `-ModelConfig`: config file for the model to use
- `-DataConfig`: config file for the dataset to use

To continue training a pretrained model, use:

```
python main.py -DataConfig conf/datasets/[DATA].defconf -ModelConfig conf/models/[MODEL_NAME].defconf --loadModel [PATH_TO_MODEL]
```

- `-ModelConfig` and `-DataConfig`: as described for training
- `--loadModel`: path to the .pth file for the model, which contains the state dicts of the model and optimizer, plus the epoch number

Add `-test` to run only the test epoch.
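The command-line flags described above can be mirrored with Python's standard `argparse` module. This is a hypothetical sketch for illustration only. The repository's requirements list `configargparse`, which can also read values from config files, and the real `main.py` may define these flags differently. Only the flag names come from this README; `build_parser`, `parse` and the example paths are placeholders.

```python
import argparse
from typing import List, Optional


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the flags documented for main.py."""
    parser = argparse.ArgumentParser(description="Train or test a pose estimation model")
    # argparse accepts single-dash multi-character options such as -DataConfig;
    # the attribute name is the option string without its leading dash(es).
    parser.add_argument("-DataConfig", required=True,
                        help="config file for the dataset to use")
    parser.add_argument("-ModelConfig", required=True,
                        help="config file for the model to use")
    parser.add_argument("--loadModel", default=None,
                        help="path to a .pth checkpoint (model, optimizer, epoch)")
    parser.add_argument("-test", action="store_true",
                        help="run only the test epoch")
    return parser


def parse(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Parse argv, or sys.argv[1:] when argv is None."""
    return build_parser().parse_args(argv)


if __name__ == "__main__":
    args = parse([
        "-DataConfig", "conf/datasets/mpii.defconf",       # placeholder dataset config
        "-ModelConfig", "conf/models/MODEL_NAME.defconf",  # placeholder model config
        "--loadModel", "checkpoint.pth",                   # placeholder checkpoint path
        "-test",
    ])
    print(args.DataConfig, args.ModelConfig, args.loadModel, args.test)
```

When the two config flags are marked `required=True`, argparse exits with a usage message if either one is missing. That behavior matches the README's statement that both parameters are needed.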

Further options can (and should!) be tweaked in the model and data config files (in the conf folder).

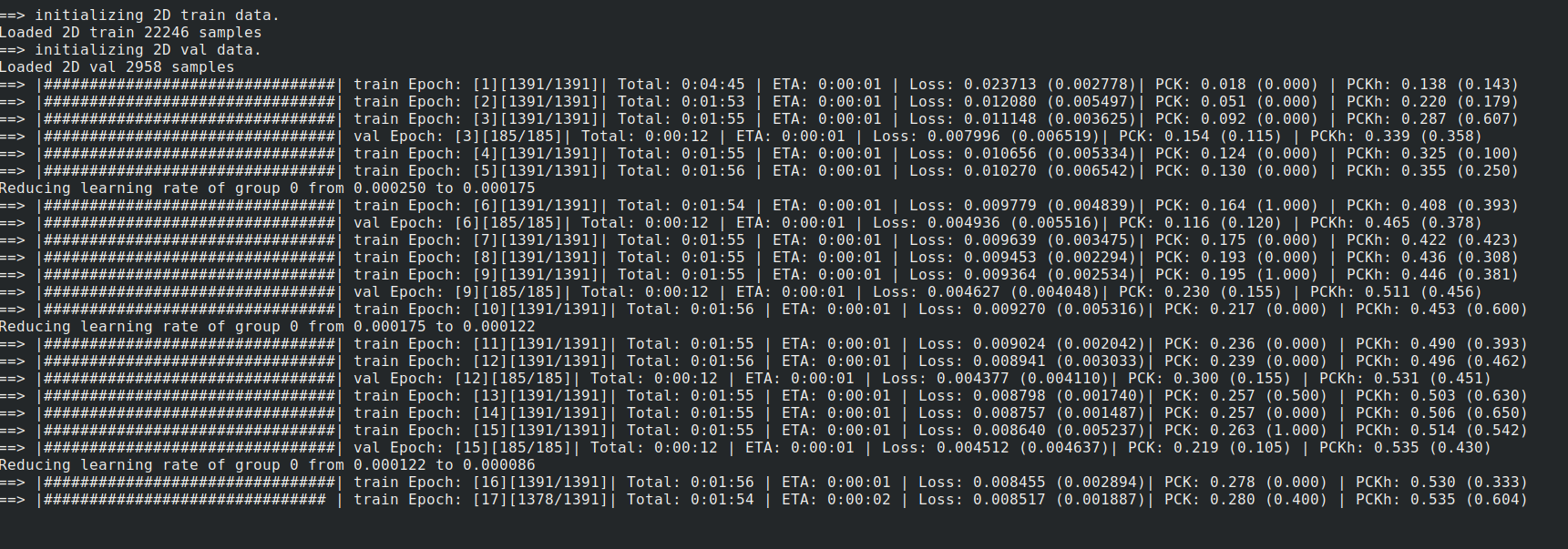

The training window looks like this (Live-Updating Progress Bar Support):

To download the pretrained models, please use this link.

| Model | Dataset | Performance |
|---|---|---|
| ChainedPredictions | MPII | PCKh: 81.8 |
| StackedHourGlass | MPII | PCKh: 87.6 |
| DeepPose | MPII | PCKh: 54.2 |
| ChainedPredictions | COCO | PCK: 82 |
| StackedHourGlass | COCO | PCK: 84.7 |
| DeepPose | COCO | PCK: 70.4 |
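The MPII rows above report PCKh. This metric counts a predicted joint as correct when its distance to the ground truth is at most a fraction alpha of the person's head segment length. The `headSize` path mentioned in the MPII setup presumably points to these lengths. The table does not state alpha, so the sketch below assumes the common PCKh@0.5, where alpha = 0.5. It is a minimal standard-library illustration, not this repository's evaluation code. The 0.6 scaling of the head-box diagonal follows the standard MPII evaluation convention, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# Factor the standard MPII evaluation applies to the head bounding-box diagonal.
HEAD_SIZE_SCALE = 0.6


def head_size_from_box(top_left: Point, bottom_right: Point) -> float:
    """Head segment length: 0.6 times the diagonal of the annotated head box."""
    return HEAD_SIZE_SCALE * math.hypot(bottom_right[0] - top_left[0],
                                        bottom_right[1] - top_left[1])


def pckh(preds: Sequence[Sequence[Point]],
         gts: Sequence[Sequence[Point]],
         head_sizes: Sequence[float],
         visible: Sequence[Sequence[bool]],
         alpha: float = 0.5) -> float:
    """Percentage of visible joints predicted within alpha * head size of the ground truth."""
    correct = 0
    total = 0
    for pred_joints, gt_joints, head, vis in zip(preds, gts, head_sizes, visible):
        for (px, py), (gx, gy), is_visible in zip(pred_joints, gt_joints, vis):
            if not is_visible:
                continue  # unannotated joints are excluded from the score
            total += 1
            if math.hypot(px - gx, py - gy) <= alpha * head:
                correct += 1
    return 100.0 * correct / total if total else 0.0


if __name__ == "__main__":
    head = head_size_from_box((0.0, 0.0), (30.0, 40.0))  # 0.6 * 50, i.e. 30 pixels
    preds = [[(10.0, 10.0), (50.0, 50.0), (0.0, 0.0)]]
    gts = [[(12.0, 10.0), (80.0, 50.0), (5.0, 5.0)]]
    vis = [[True, True, False]]
    print(pckh(preds, gts, [head], vis))  # 50.0: one of two visible joints within 15 px
```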

We drew on various open-source implementations. We would like to thank Microsoft Human Pose Estimation for the COCO dataloader, Xingyi Zhou's 3D Hourglass repo for the MPII dataloader, and the HourGlass PyTorch codebase. We would also like to thank PyraNet and Attention-HourGlass for open-sourcing their Lua code.

- Implement code for reporting mAP performance on the COCO dataset

- Add visualization code

- Add more models

- Add visdom support

We plan to complete these very soon!