This repository contains the code and model for the ground-view geo-localization method described in: Stochastic Attraction-Repulsion Embedding for Large Scale Image Localization, ICCV 2019.

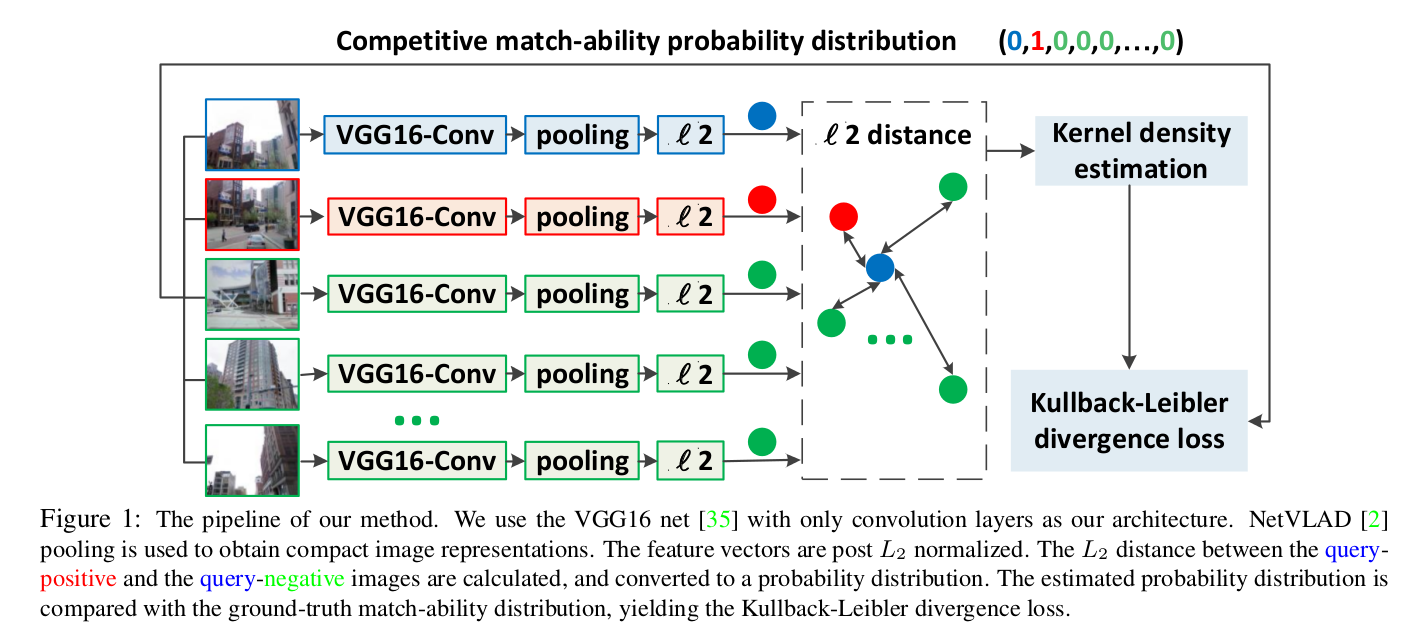

This paper tackles the problem of large-scale image-based localization (IBL), where the spatial location of a query image is determined by finding the most similar reference images in a large database. A critical task in solving this problem is learning a discriminative image representation that captures information relevant for localization. We propose a novel representation learning method with higher location-discriminating power. It makes the following contributions: 1) we represent a place (location) as a set of exemplar images depicting the same landmarks and aim to maximize similarities among intra-place images while minimizing similarities among inter-place images; 2) we model a similarity measure as a probability distribution on

Our model is implemented in MatConvNet 1.0-beta25. Other versions should also work. You first need to download the NetVLAD code (https://github.com/Relja/netvlad), then run our training code. Our model pre-trained with the Gaussian kernel defined SARE loss (Our-Ind.) is available at: https://drive.google.com/file/d/1riu7rJEH4Eh5vhQ_tM8dBtUfwhKilqhq/view?usp=sharing

The model is VGG-16 + NetVLAD + whitening, trained on Pitts30k. The feature dimension is 4096. If you need a feature embedding of smaller dimension, you can simply take the top-K elements of the descriptor and then L2-normalize the result.
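The dimension reduction described above can be sketched in a few lines of MATLAB. This is only an illustration: it assumes "top-K" means the first K entries of the whitened descriptor, and the variable names (`feats`, `featsK`, `K`) and the random input are hypothetical stand-ins for descriptors produced by the network.

```matlab
% Hypothetical input: one 4096-D descriptor per column (random stand-in data).
rng(0);
feats = randn(4096, 5, 'single');

K = 512;  % illustrative target dimension, not a value from the paper

% Assumption: "top-K" is read as the first K rows of the whitened descriptor.
featsK = feats(1:K, :);

% L2-normalize every column; max(..., eps) guards against division by zero.
norms  = sqrt(sum(featsK.^2, 1));
featsK = bsxfun(@rdivide, featsK, max(norms, eps('single')));
```

After this step each column of `featsK` has unit length, so dot products between columns can be used directly as cosine similarities.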

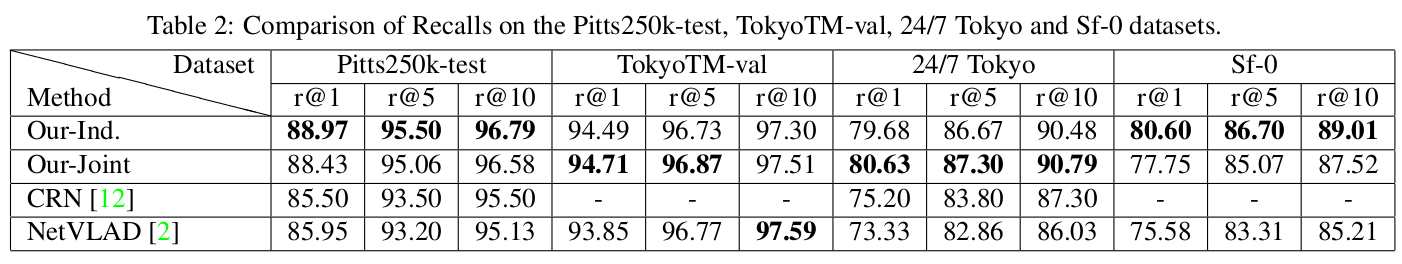

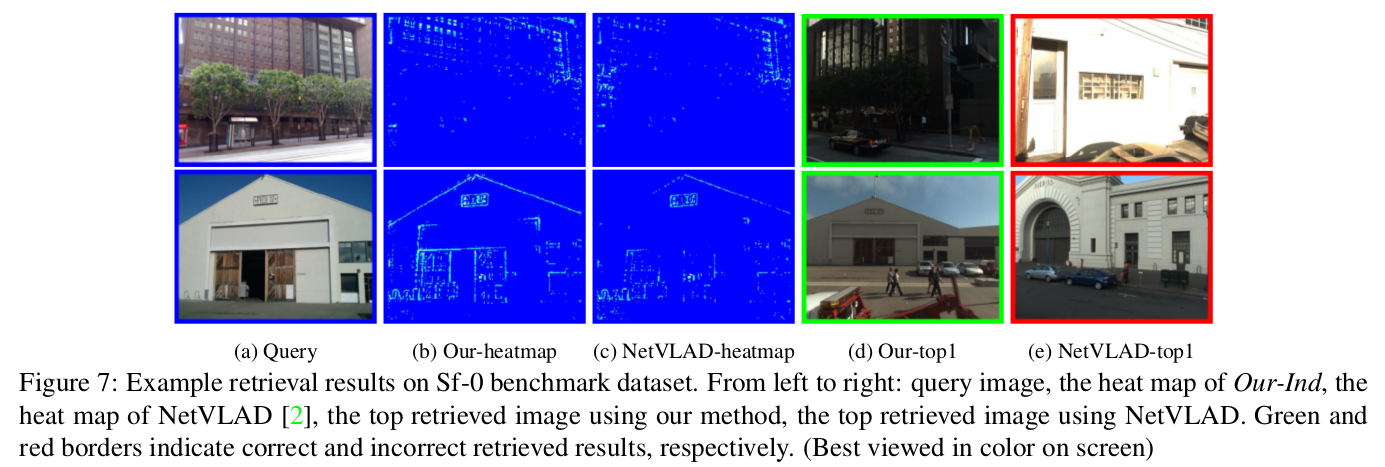

From the results table, we can see that our method significantly outperforms the original NetVLAD on the challenging Tokyo 24/7 and Sf-0 datasets. The accompanying figure gives some intuition for why our trained CNN performs better:

As can be seen, our method focuses on regions that are useful for image geo-localization while emphasizing the distinctive details on buildings. On the other hand, the original NetVLAD emphasizes local features, not the overall building style.

If you want to run experiments on the four datasets above, please contact the original authors, not me. I would like to thank Prof. Akihiko Torii, who generously provided me with the data.

    setup;
    doPitts250k= false;
    lr= 0.001;
    dbTrain= dbPitts(doPitts250k, 'train');
    dbVal= dbPitts(doPitts250k, 'val');
    % Gaussian kernel defined SARE loss (Our-Ind.)
    sessionID= trainGaussKernalInd(dbTrain, dbVal, ...
        'netID', 'vd16','layerName', 'conv5_3', 'backPropToLayer', 'conv5_1', ...
        'method', 'vlad_preL2_intra', ...
        'learningRate', lr, ...
        'doDraw', true);
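For intuition about what the Gaussian kernel defined SARE loss optimizes, here is a toy single-triplet sketch. It is not the code inside `trainGaussKernalInd`, and this README does not spell out the loss. The form used below (a softmax over negative squared Euclidean distances, followed by a negative log) is my reading of the paper and should be treated as an assumption. The vectors `q`, `p`, and `n` are made-up 2-D embeddings for a query, a matching image, and a non-matching image.

```matlab
% Made-up unit-length embeddings: query, positive (same place), negative.
q = [1.0; 0.0];
p = [0.8; 0.6];
n = [0.0; 1.0];

dPos = sum((q - p).^2);   % squared distance query -> positive
dNeg = sum((q - n).^2);   % squared distance query -> negative

% Assumed form: probability that the query is matched to the positive,
% using a Gaussian kernel exp(-d) on squared distances.
probMatch = exp(-dPos) / (exp(-dPos) + exp(-dNeg));

% Loss is the negative log-probability of the correct match.
loss = -log(probMatch);

% Algebraically equivalent form that depends only on the distance gap.
lossAlt = log(1 + exp(dPos - dNeg));
```

Pulling the positive closer (smaller `dPos`) or pushing the negative away (larger `dNeg`) both lower the loss, which is the attraction and repulsion behaviour the method's name refers to.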

Training can usually be stopped early, after about 4 epochs. For example, using the function pickBestNet, I obtained the result below.

    53e0 Best epoch: 4 (out of 7)
    ===========================================
    Recall@N       0001 0002 0003 0004 0005 0010
    ===========================================
    off-the-shelf  0.80 0.86 0.90 0.92 0.93 0.96
    our trained    0.89 0.94 0.95 0.96 0.97 0.98
    trained/shelf  1.12 1.09 1.06 1.05 1.05 1.02
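To make the Recall@N rows concrete: a query is usually counted as correctly localized at N if at least one of its top-N retrieved database images is a true match. The sketch below illustrates that computation on made-up data. It is not the repository's pickBestNet or evaluation code, and the `isCorrect` matrix is a hypothetical input.

```matlab
% Hypothetical input: isCorrect(i, r) is true when the r-th ranked database
% image for query i is a true match (4 queries, 10 ranks, made-up values).
isCorrect = false(4, 10);
isCorrect(1, 1) = true;   % query 1: first retrieval is correct
isCorrect(2, 3) = true;   % query 2: first correct match at rank 3
isCorrect(3, 7) = true;   % query 3: first correct match at rank 7
                          % query 4: no correct match in the top 10

ns = [1 2 3 4 5 10];      % the N values reported in the table

% hitByRank(i, r) is true if query i has a correct match within its top r.
hitByRank = cumsum(double(isCorrect), 2) > 0;

% Recall@N is the fraction of queries with a hit within the top N.
recalls = mean(hitByRank(:, ns), 1);
```

The trained/shelf row is then simply the element-wise ratio of two such recall vectors.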

- Since the released model already includes the PCA whitening weights, please do not add a PCA layer again. That is, you need to comment out the line below in your testing code:

      net= addPCA(net, dbTrain, 'doWhite', true, 'pcaDim', 4096);

- To run experiments on the Tokyo 24/7 dataset, you need to resize the query images (only the queries; do not resize the database images):

      ims_= vl_imreadjpeg(thisImageFns, 'numThreads', opts.numThreads, 'Resize', 640);

You also need to convert all .png images to .jpg images to enable vl_imreadjpeg. You can use convertPngtoJPG.m to do that.
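If convertPngtoJPG.m is not at hand, the conversion could look roughly like the sketch below. It is not the repository's script: the folder names, the dummy input image, and the JPEG quality setting are all assumptions made so that the example runs on its own.

```matlab
% Hypothetical folders; replace with your own query image locations.
srcDir = fullfile(tempdir, 'png_queries');
dstDir = fullfile(tempdir, 'jpg_queries');
if ~exist(srcDir, 'dir'), mkdir(srcDir); end
if ~exist(dstDir, 'dir'), mkdir(dstDir); end

% Write one small dummy PNG so the sketch runs end to end.
imwrite(uint8(255 * rand(32, 48, 3)), fullfile(srcDir, 'example.png'));

pngFiles = dir(fullfile(srcDir, '*.png'));
for i = 1:numel(pngFiles)
    im = imread(fullfile(srcDir, pngFiles(i).name));
    if size(im, 3) == 1
        im = repmat(im, [1 1 3]);   % expand grayscale to three channels
    end
    [~, base] = fileparts(pngFiles(i).name);
    % Quality 95 is an arbitrary choice, not a value from the repository.
    imwrite(im, fullfile(dstDir, [base '.jpg']), 'Quality', 95);
end
```

Indexed-color PNGs would additionally need their colormap applied before writing; the sketch ignores that case.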

If you like, you can cite the following publication:

Liu Liu; Hongdong Li; Yuchao Dai. Stochastic Attraction-Repulsion Embedding for Large Scale Image Localization. In IEEE International Conference on Computer Vision (ICCV), October 2019.

    @InProceedings{Liu_2019_ICCV,
      author    = {Liu, Liu and Li, Hongdong and Dai, Yuchao},
      title     = {Stochastic Attraction-Repulsion Embedding for Large Scale Image Localization},
      booktitle = {The IEEE International Conference on Computer Vision (ICCV)},
      month     = {October},
      year      = {2019}
    }

and also the following prior work:

- Arandjelovic, Relja, et al. "NetVLAD: CNN architecture for weakly supervised place recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

If you have any questions, drop me an email (Liu.Liu@anu.edu.au).