Imitation learning algorithms (using SAC [HZA18, HZH18] as the base RL algorithm):

- AdRIL [SCB21]

- DRIL [BSH20] (dropout version)

- GAIL [HE16] (a.k.a. DAC/SAM when using an off-policy algorithm [KAD18, BK18])

- GMMIL [KP18]

- PWIL [DHG20] (nofill version)

- RED [WCA19]

General options include:

- BC [P91] (pre)training: `bc_pretraining.iterations: >= 0; default 0`

- State-only imitation learning: `imitation.state-only: true/false; default false`

- Absorbing state indicator [KAD18]: `imitation.absorbing: true/false; default true`

- Training on a mix of agent and expert data: `imitation.mix_expert_data: none/mixed_batch/prefill_memory; default none`

- BC auxiliary loss: `imitation.bc_aux_loss: true/false; default false` (`true` for DRIL)
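The absorbing state indicator [KAD18] can be pictured as one extra state dimension that is 0 for ordinary states and 1 for a dedicated absorbing state entered after termination. The following is a minimal sketch of that idea only; the helper name `add_absorbing_indicator` and the all-zeros encoding of the absorbing state are assumptions for illustration, not this library's implementation.

```python
from typing import List


def add_absorbing_indicator(state: List[float], absorbing: bool = False) -> List[float]:
    """Append an indicator dimension to a state (hypothetical helper).

    Ordinary states keep their values and gain a trailing 0.
    The absorbing state is encoded as all zeros with a trailing 1.
    """
    if absorbing:
        return [0.0] * len(state) + [1.0]
    return list(state) + [0.0]


# An ordinary 3-dimensional state gains a trailing 0
print(add_absorbing_indicator([0.5, -1.2, 3.0]))
# The absorbing state ignores the original values
print(add_absorbing_indicator([0.5, -1.2, 3.0], absorbing=True))
```

With this encoding a learned reward can assign a value to the absorbing state explicitly, rather than implicitly treating termination as zero reward.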

DRIL, GAIL and RED include several options for their trained discriminators.

AdRIL options include:

- Balanced sampling: `imitation.balanced: true/false` (alternate sampling expert and agent data batches vs. mixed batches)

- Discriminator update frequency: `imitation.update_freq: >= 0` (set to 0 for SQIL [RDL19])
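For intuition on the SQIL [RDL19] special case: SQIL uses no learned discriminator and instead assigns a constant reward of 1 to expert transitions and 0 to agent transitions. The sketch below illustrates only that relabelling; the transition dictionaries and the function name `sqil_relabel` are hypothetical and do not mirror this library's replay memory.

```python
from typing import Any, Dict, List

Transition = Dict[str, Any]


def sqil_relabel(expert: List[Transition], agent: List[Transition]) -> List[Transition]:
    """Return copies of all transitions with SQIL's constant rewards (hypothetical helper)."""
    labelled = [dict(t, reward=1.0) for t in expert]  # expert data: reward 1
    labelled += [dict(t, reward=0.0) for t in agent]  # agent data: reward 0
    return labelled


expert_data = [{"state": [0.0], "action": [1.0]}]
agent_data = [{"state": [0.2], "action": [-1.0]}, {"state": [0.4], "action": [0.5]}]
for transition in sqil_relabel(expert_data, agent_data):
    print(transition["reward"], transition["state"])
```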

DRIL options include:

- Quantile cutoff: `imitation.quantile_cutoff: >= 0, <= 1`
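As a rough illustration of what a quantile cutoff does in DRIL [BSH20]: the policy's predictive uncertainty is thresholded at a quantile of the uncertainties measured on expert data, giving a reward of +1 below the threshold and -1 above it. The sketch below assumes a nearest-rank quantile and pre-computed uncertainty values; both function names are hypothetical, and the library may compute the quantile differently.

```python
import math
from typing import Sequence


def quantile(values: Sequence[float], q: float) -> float:
    """Nearest-rank quantile of a non-empty sequence (an assumption, for illustration)."""
    if not 0.0 <= q <= 1.0:
        raise ValueError("q must lie in [0, 1]")
    ordered = sorted(values)
    index = min(len(ordered) - 1, max(0, math.ceil(q * len(ordered)) - 1))
    return ordered[index]


def clipped_reward(uncertainty: float, threshold: float) -> float:
    """+1 if the uncertainty is within the expert threshold, otherwise -1."""
    return 1.0 if uncertainty <= threshold else -1.0


# Hypothetical uncertainties measured on expert state-action pairs
expert_uncertainties = [0.4, 0.1, 0.3, 0.2]
threshold = quantile(expert_uncertainties, 0.75)
print(threshold, clipped_reward(0.25, threshold), clipped_reward(0.9, threshold))
```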

GAIL options include:

- Reward shaping (AIRL) [FLL17]: `imitation.model.reward_shaping: true/false`

- Subtract log π(a|s) (AIRL) [FLL17]: `imitation.model.subtract_log_policy: true/false`

- Reward functions (GAIL/AIRL/FAIRL) [HE16, FLL17, GZG19]: `imitation.model.reward_function: AIRL/FAIRL/GAIL`

- Gradient penalty [KAD18, BK18]: `imitation.grad_penalty: >= 0`

- Spectral normalisation [BSK20]: `imitation.spectral_norm: true/false`

- Entropy bonus [ORH21]: `imitation.entropy_bonus: >= 0`

- Loss functions (BCE/Mixup/nn-PUGAIL) [HE16, CNN20, XD19]: `imitation.loss_function: BCE/Mixup/PUGAIL`

- Additional hyperparameters for the loss functions: `imitation.mixup_alpha: >= 0`, `imitation.pos_class_prior: >= 0, <= 1`, `imitation.nonnegative_margin: >= 0`
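To make the three reward function choices concrete, the sketch below writes them in terms of the discriminator logit h, assuming the discriminator output D = sigmoid(h) is the probability that a sample comes from the expert. These are the forms commonly given in the literature [HE16, FLL17, GZG19]; the function name `discriminator_reward` is hypothetical, and the library's sign conventions may differ.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


def discriminator_reward(logit: float, reward_function: str) -> float:
    """Map a discriminator logit h to a reward (illustrative, assumes D = sigmoid(h))."""
    if reward_function == "AIRL":
        return logit  # log D - log(1 - D) = h
    if reward_function == "GAIL":
        return softplus(logit)  # -log(1 - D)
    if reward_function == "FAIRL":
        return -logit * math.exp(logit)  # -h * exp(h)
    raise ValueError(f"Unknown reward function: {reward_function}")


for name in ("AIRL", "FAIRL", "GAIL"):
    print(name, [round(discriminator_reward(h, name), 3) for h in (-2.0, 0.0, 2.0)])
```

Under these forms the GAIL reward is always non-negative, the AIRL reward can take either sign, and the FAIRL reward is largest for slightly negative logits, which affects how each behaves in environments with early termination.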

PWIL options include:

- Reward scale α: `imitation.reward_scale: >= 0`

- Reward bandwidth scale β: `imitation.reward_bandwidth_scale: >= 0`
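The roles of α and β are easiest to see in the reward from the PWIL paper [DHG20], r = α exp(-β T c / sqrt(|S| + |A|)), where c is the transport cost assigned to a transition, T is the episode horizon and |S|, |A| are the state and action dimensionalities. The sketch below evaluates that formula directly; the function name and the numeric values in the example are assumptions for illustration, not this library's defaults.

```python
import math


def pwil_reward(cost: float, reward_scale: float, reward_bandwidth_scale: float,
                horizon: int, state_dim: int, action_dim: int) -> float:
    """PWIL reward: alpha * exp(-beta * T / sqrt(|S| + |A|) * cost)."""
    bandwidth = reward_bandwidth_scale * horizon / math.sqrt(state_dim + action_dim)
    return reward_scale * math.exp(-bandwidth * cost)


# Hypothetical settings: alpha = 5, beta = 5, T = 1000, |S| = 11, |A| = 3
for cost in (0.0, 0.0005, 0.005):
    print(cost, round(pwil_reward(cost, 5.0, 5.0, 1000, 11, 3), 4))
```

A zero cost yields the maximum reward α, and larger β makes the reward decay faster as the agent's transitions move away from the expert's.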

RED options include:

- Reward bandwidth scale σ1: `imitation.reward_bandwidth_scale: >= 0`
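In the RED paper [WCA19], σ1 scales the prediction error between a trained predictor network and a fixed, randomly initialised target network: r = exp(-σ1 ||f_pred(s, a) - f_target(s, a)||²). The sketch below applies that formula to already-computed network outputs; the function name `red_reward` and the use of plain lists in place of network outputs are assumptions for illustration.

```python
import math
from typing import Sequence


def red_reward(prediction: Sequence[float], target: Sequence[float],
               reward_bandwidth_scale: float) -> float:
    """RED reward: exp(-sigma_1 * squared L2 distance between the two embeddings)."""
    squared_error = sum((p - t) ** 2 for p, t in zip(prediction, target))
    return math.exp(-reward_bandwidth_scale * squared_error)


# Matching embeddings (expert-like input) give the maximum reward of 1
print(red_reward([1.0, 2.0], [1.0, 2.0], reward_bandwidth_scale=2.0))
# Mismatched embeddings (unfamiliar input) give a lower reward
print(red_reward([1.0, 0.0], [0.0, 0.0], reward_bandwidth_scale=2.0))
```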

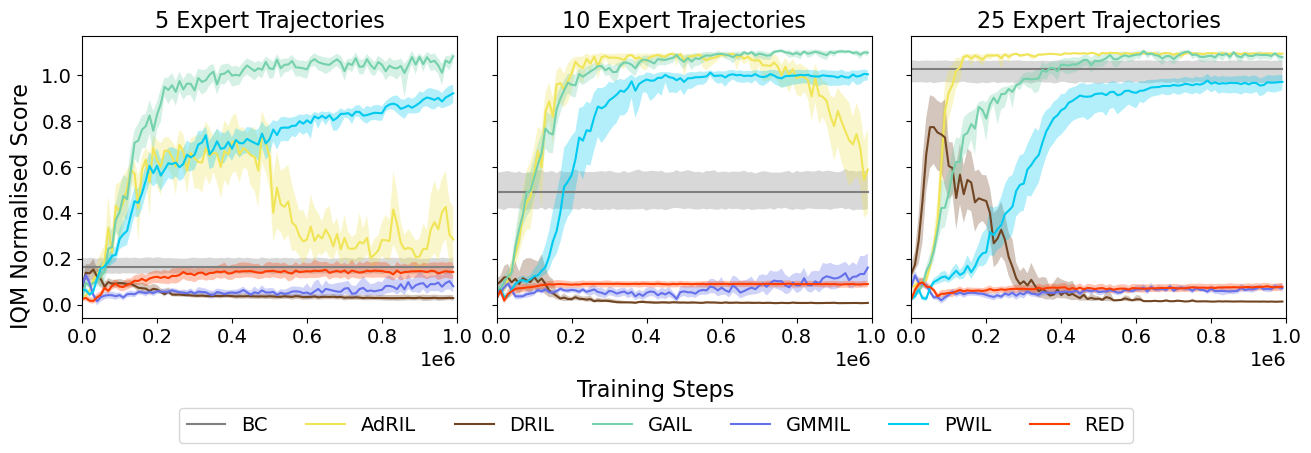

Benchmarked on Gym MuJoCo environments with D4RL "expert-v2" data.

Requirements can be installed with:

    pip install -r requirements.txt

Notable required packages are PyTorch, OpenAI Gym, D4RL and Hydra. Ax and the Hydra Ax Sweeper plugin are required for hyperparameter optimisation.

The training of each imitation learning algorithm (or SAC with the real environment reward) can be started with:

    python train.py algorithm=<ALG> env=<ENV>

where `<ALG>` is one of `AdRIL/BC/DRIL/GAIL/GMMIL/PWIL/RED/SAC` and `<ENV>` is one of `ant/halfcheetah/hopper/walker2d`. For example:

    python train.py algorithm=GAIL env=hopper

Results will be saved in `outputs/<ALG>_<ENV>/m-d_H-M-S`, with the last subfolder indicating the current datetime.
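To locate a run's results programmatically, the directory name can be rebuilt from the algorithm, environment and start time. The sketch below assumes that `m-d_H-M-S` corresponds to the `strftime` pattern `%m-%d_%H-%M-%S` (month-day_hour-minute-second); the helper name `output_dir` is hypothetical.

```python
from datetime import datetime
from pathlib import PurePosixPath


def output_dir(algorithm: str, env: str, started: datetime) -> str:
    """Build the results path for a single run (assumed naming pattern)."""
    timestamp = started.strftime("%m-%d_%H-%M-%S")
    return str(PurePosixPath("outputs") / f"{algorithm}_{env}" / timestamp)


# The year is not part of the directory name
print(output_dir("GAIL", "hopper", datetime(2023, 3, 9, 19, 13, 21)))
```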

Hyperparameters can be found in `conf/config.yaml` and `conf/algorithm/<ALG>.yaml`. To use hyperparameters tuned for a specific algorithm and number of expert trajectories [AL23], add the option `optimised_hyperparameters=<ALG>_<NUM_TRAJECTORIES>_trajectories` (note that `algorithm=<ALG>` also needs to be specified to load the other algorithm-specific hyperparameters). For example:

    python train.py algorithm=AdRIL optimised_hyperparameters=AdRIL_5_trajectories env=halfcheetah

Running the algorithm on all environments in parallel can be achieved with:

    python train_all.py algorithm=<ALG> env=<ENV>

with results saved in `outputs/<ALG>_all/m-d_H-M-S`, containing subdirectories for each environment.

A hyperparameter sweep can be performed using -m and a series of hyperparameter values. For example:

    python train.py -m algorithm=PWIL env=walker2d reinforcement.discount=0.97,0.98,0.99

Results will be saved in `outputs/<ALG>_<ENV>_sweep/m-d_H-M-S` with a subdirectory (named by job number) for each run.
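A grid sweep of this kind runs one job per combination of the comma-separated values. The sketch below mimics that expansion in plain Python to show which jobs a given command line produces; `expand_sweep` is a hypothetical helper written for illustration, not part of Hydra or this library, and it does not handle values that themselves contain commas.

```python
from itertools import product
from typing import List


def expand_sweep(overrides: List[str]) -> List[List[str]]:
    """Expand key=v1,v2,... overrides into one override list per job."""
    keys, value_lists = [], []
    for override in overrides:
        key, _, values = override.partition("=")
        keys.append(key)
        value_lists.append(values.split(","))
    # Cartesian product over every override's list of values
    return [[f"{k}={v}" for k, v in zip(keys, combo)] for combo in product(*value_lists)]


jobs = expand_sweep(["algorithm=PWIL", "env=walker2d", "reinforcement.discount=0.97,0.98,0.99"])
for job_number, job in enumerate(jobs):
    print(job_number, " ".join(job))
```

Sweeping two options at once multiplies the job count, so ten seeds over three discount values would give thirty runs.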

Seed sweeps with optimised hyperparameters can therefore be run as follows:

    python train.py -m algorithm=RED optimised_hyperparameters=RED_25_trajectories env=halfcheetah seed=1,2,3,4,5,6,7,8,9,10

Bayesian hyperparameter optimisation (jointly, over all environments) can be run with:

    python train_all.py -m algorithm=<ALG>

This command is used to optimise hyperparameters for a given number of expert trajectories, for example:

    python train_all.py -m algorithm=GAIL imitation.trajectories=5

To view the results of the optimisation process, run:

    python scripts/print_plot_sweep_results.py --path <PATH>

for example:

    python scripts/print_plot_sweep_results.py --path outputs/BC_all_sweeper/03-09_19-13-21

- @ikostrikov for https://github.com/ikostrikov/pytorch-a2c-ppo-acktr-gail

- @RuohanW for https://github.com/RuohanW/RED

- @jonathantompson for https://github.com/google-research/google-research/tree/master/dac

- @gkswamy98 for https://github.com/gkswamy98/pillbox

- @ddsh for https://github.com/google-research/google-research/tree/master/pwil

If you find this work useful and would like to cite it, please use the following:

    @inproceedings{arulkumaran2023pragmatic,
      author = {Arulkumaran, Kai and Ogawa Lillrank, Dan},
      title = {A Pragmatic Look at Deep Imitation Learning},
      booktitle = {Asian Conference on Machine Learning},
      year = {2023}
    }

v1.0 of the library contains on-policy IL algorithms. v2.0 of the library contains off-policy IL algorithms.

[AL23] A Pragmatic Look at Deep Imitation Learning

[BK18] Sample-Efficient Imitation Learning via Generative Adversarial Nets

[BSH20] Disagreement-Regularized Imitation Learning

[BSK20] Lipschitzness Is All You Need To Tame Off-policy Generative Adversarial Imitation Learning

[CNN20] Batch Exploration with Examples for Scalable Robotic Reinforcement Learning

[DHG20] Primal Wasserstein Imitation Learning

[FLL17] Learning Robust Rewards with Adversarial Inverse Reinforcement Learning

[GZG19] A Divergence Minimization Perspective on Imitation Learning Methods

[HE16] Generative Adversarial Imitation Learning

[HZA18] Soft Actor-Critic: Off-Policy Maximum Entropy Deep Reinforcement Learning with a Stochastic Actor

[HZH18] Soft Actor-Critic Algorithms and Applications

[KAD18] Discriminator-Actor-Critic: Addressing Sample Inefficiency and Reward Bias in Adversarial Imitation Learning

[KP18] Imitation Learning via Kernel Mean Embedding

[ORH21] What Matters for Adversarial Imitation Learning?

[P91] Efficient Training of Artificial Neural Networks for Autonomous Navigation

[RDL19] SQIL: Imitation Learning via Reinforcement Learning with Sparse Rewards

[SCB21] Of Moments and Matching: A Game-Theoretic Framework for Closing the Imitation Gap

[WCA19] Random Expert Distillation: Imitation Learning via Expert Policy Support Estimation

[XD19] Positive-Unlabeled Reward Learning