- This is a GUI program for pose estimation and action recognition based on OpenPose.

- You can visualize key-points on an image or a camera stream and save the key-point data (in npy format) at the same time.

- You can train a deep learning model for action (or gesture or emotion) recognition with the data collected by this program.

- My platform is Windows 10. I have compiled OpenPose with the Python API, and the compiled binary files are provided below, so you don't have to compile OpenPose from scratch.

- numpy==1.14.2

- PyQt5==5.11.3

- opencv-python==4.1.0.25

- torch==1.0.1 (only for gesture recognition)

-

Install cuda10 and [cudnn7]. Or here is my BaiduDisk password:

4685. -

Run

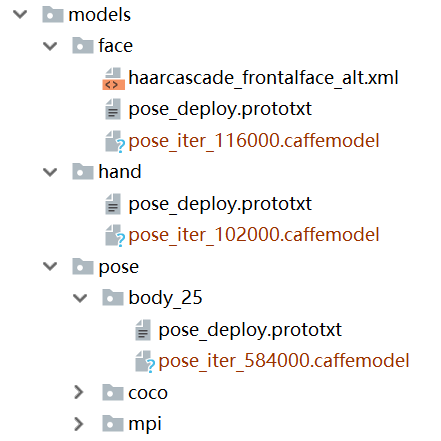

models/getModels.batto get model. Or here is my BaiduDisk password:rmknand put models in the corresponding position -

Download 3rd-party dlls from my BaiduDisk password:

64sgand unzip in your 3rdparty folder.

- Save the current result

- Save the result every interval while the camera or a video is open

- Open the camera

- Show the setting view

- Show the file-tree view

- First, select which kinds of key-points you want to visualize or collect by checking the checkboxes (`Body`, `Hand`, `Face`).

- The threshold of each of the three models can be controlled by dragging the corresponding slider.

- Change the save interval.

- Change the net resolution to fit a smaller GPU memory, but this will reduce the accuracy.

- The `gesture recognition` function can only be used when the hand checkbox is on. My model is only a 2-layer MLP, and the data was collected with a front camera and the left hand, so it may have many limitations. You can train your own model and replace it.

- `action recognition`

- `emotion recognition`
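To make the "2-layer MLP" for gesture recognition more concrete, here is a minimal sketch of the forward pass of such a network on hand key-points. It is not the model shipped with this program: the layer sizes, the number of classes, the random weights and the choice to drop the score column are all assumptions for illustration, and it uses plain NumPy rather than torch so it runs without a GPU.

```python
import numpy as np

def mlp_forward(x, w1, b1, w2, b2):
    """Forward pass of a 2-layer MLP: linear -> ReLU -> linear -> softmax."""
    hidden = np.maximum(x @ w1 + b1, 0.0)                 # first layer + ReLU
    logits = hidden @ w2 + b2                             # second layer
    logits = logits - logits.max(axis=1, keepdims=True)   # numerical stability
    exp = np.exp(logits)
    return exp / exp.sum(axis=1, keepdims=True)           # class probabilities

# Hypothetical sizes: 21 hand key-points x (x, y) = 42 inputs, 32 hidden units, 5 classes
rng = np.random.RandomState(0)
n_in, n_hidden, n_classes = 21 * 2, 32, 5
w1 = rng.randn(n_in, n_hidden) * 0.1
b1 = np.zeros(n_hidden)
w2 = rng.randn(n_hidden, n_classes) * 0.1
b2 = np.zeros(n_classes)

# Stand-in for one detected hand: (person_num, key_points_num, x_y_score)
hand = rng.rand(1, 21, 3)
features = hand[:, :, :2].reshape(1, -1)   # drop the score column, flatten to (1, 42)

probs = mlp_forward(features, w1, b1, w2, b2)
print(probs.shape, probs.argmax(axis=1))
```

With trained weights in place of the random ones, `probs.argmax(axis=1)` would be the predicted gesture index.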

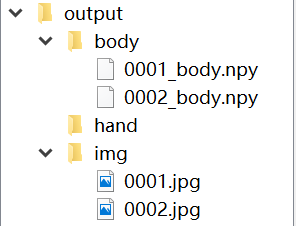

You will get an output folder like the one in the following figure. The count is set to 0 when the program starts and increases automatically with the number of images saved.

```python
import numpy as np

# Load the key-points saved by the program
data_body = np.load('body/0001_body.npy')
data_hand = np.load('hand/0001_hand.npy')
data_face = np.load('face/0001_face.npy')

print(data_body.shape)
# (1, 25, 3) : person_num x key_points_num x x_y_score

print(data_hand.shape)
# (2, 1, 21, 3) : left_right x person_num x key_points_num x x_y_score

print(data_face.shape)
# (1, 70, 3) : person_num x key_points_num x x_y_score
```
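Since the last axis holds `x`, `y` and `score`, the coordinates and the confidence can be separated with plain slicing. The sketch below uses a synthetic array in place of a real `body/0001_body.npy` file, and the `threshold` value is a hypothetical cut-off, not a value taken from this program.

```python
import numpy as np

# Synthetic stand-in for np.load('body/0001_body.npy'):
# 1 person, 25 key-points, (x, y, score)
data_body = np.zeros((1, 25, 3), dtype=np.float32)
data_body[0, 0] = [320.0, 120.0, 0.9]    # a confident key-point
data_body[0, 1] = [315.0, 200.0, 0.05]   # a low-confidence key-point

xy = data_body[..., :2]      # (person_num, key_points_num, 2)
score = data_body[..., 2]    # (person_num, key_points_num)

threshold = 0.1              # hypothetical cut-off, chosen only for this example
valid = score > threshold    # boolean mask of key-points worth keeping

print(xy.shape, score.shape)
print(int(valid.sum()))      # number of key-points above the threshold
```

The same slicing applies to the hand and face arrays. The hand array just has one extra leading axis for left and right.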

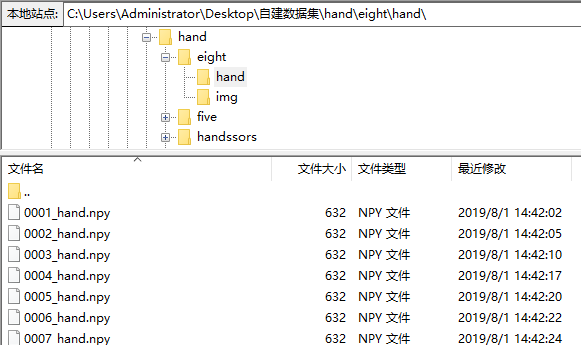

- Collect data and make a folder for every class.

- Run `python train.py -p C:\Users\Administrator\Desktop\自建数据集\hand` to train your model (replace the path with your dataset path).
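As an illustration of the "one folder per class" layout, the sketch below reads every `.npy` file under each class folder and builds a feature matrix with integer labels. This is not necessarily what `train.py` does internally: the `load_dataset` helper, the class names `fist` and `palm`, and the flattening of each sample are assumptions made for this example, and the data is randomly generated so the code runs without real recordings.

```python
import os
import tempfile
import numpy as np

def load_dataset(root):
    """Load every .npy file under root/<class_name>/ into (features, labels, class_names)."""
    class_names = sorted(
        d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
    )
    features, labels = [], []
    for label, name in enumerate(class_names):
        class_dir = os.path.join(root, name)
        for fname in sorted(os.listdir(class_dir)):
            if fname.endswith('.npy'):
                sample = np.load(os.path.join(class_dir, fname))
                features.append(sample.reshape(-1))   # flatten key-points to a 1-D vector
                labels.append(label)
    return np.stack(features), np.array(labels), class_names

# Build a tiny synthetic dataset: two hypothetical classes, three samples each
root = tempfile.mkdtemp()
for name in ('fist', 'palm'):
    os.makedirs(os.path.join(root, name))
    for i in range(3):
        path = os.path.join(root, name, '%04d_hand.npy' % i)
        np.save(path, np.random.rand(2, 1, 21, 3))    # same shape as a saved hand result

X, y, classes = load_dataset(root)
print(X.shape, y.tolist(), classes)
```

Folder names become the class labels in sorted order, so renaming or adding a class folder changes the label indices.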