
This project aims to achieve two goals:

Modularization of functionalities from state-of-the-art projects in Automatic Speech Recognition (ASR), Text-to-Speech (TTS), and Wav2Lip, allowing independent usage of each module.

The project focuses on extracting the functionalities of leading ASR, TTS, and Wav2Lip projects and making them available as individual modules. This lets developers integrate each module into their own applications as a standalone component, without extensive modifications or a dependency on the entire upstream project.

Integration of multiple projects into a comprehensive end-to-end solution.

This project aims to combine the functionalities of these projects into a single, unified solution. By integrating ASR, TTS, and Wav2Lip, it is intended to provide one pipeline that goes from text to synthesized speech with synchronized lip movement (Wav2Lip integration is still on the roadmap). This end-to-end setup simplifies the overall workflow and spares users from manually coordinating several projects.

By offering both independent modules and an integrated solution, this project provides flexibility and convenience for developers and researchers working in the field of digital humans. It enables them to leverage the best features from various projects and seamlessly integrate them into their own applications or research work.

This project uses GPT-SoVITS as a submodule for voice cloning. Please follow the GPT-SoVITS installation guide to set up the environment.

Note that I've renamed GPT-SoVITS to GPTSoVITS by removing the dash, because a dash is not a valid character in a Python package name.
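For illustration, Python's own rules show why the dash matters: an `import` statement needs a valid identifier, and `str.isidentifier()` reports whether a name qualifies. `is_importable_name` is a hypothetical helper written for this note, not part of the project.

```python
import keyword


def is_importable_name(name: str) -> bool:
    """Return True if `name` can appear in a plain `import name` statement."""
    # Identifiers may contain letters, digits and underscores but no dashes,
    # and must not be reserved words such as `class` or `import`.
    return name.isidentifier() and not keyword.iskeyword(name)


for candidate in ("GPT-SoVITS", "GPTSoVITS"):
    print(f"{candidate}: {is_importable_name(candidate)}")
# GPT-SoVITS: False
# GPTSoVITS: True
```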

```shell
git submodule add git@github.com:RVC-Boss/GPT-SoVITS.git GPTSoVITS
```

For high-quality Wav2Lip output, I also added Wav2Lip-GFPGAN as a submodule and symlinked its two sub-projects into the repository root:

```shell
git submodule add git@github.com:ajay-sainy/Wav2Lip-GFPGAN.git Wav2Lip-GFPGAN
ln -s Wav2Lip-GFPGAN/Wav2Lip-master ./Wav2Lip
ln -s Wav2Lip-GFPGAN/GFPGAN-master ./GFPGAN
git add Wav2Lip
git add GFPGAN
```
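Because `Wav2Lip` and `GFPGAN` are symlinks into the submodule, they end up dangling if the submodule content is missing (for example, when it was never fetched). A minimal check, assuming it runs from the repository root; `broken_links` and `EXPECTED_LINKS` are hypothetical names that simply mirror the `ln -s` commands above.

```python
from pathlib import Path

# Link name -> link target, mirroring the `ln -s` commands above (hypothetical helper data).
EXPECTED_LINKS = {
    "Wav2Lip": "Wav2Lip-GFPGAN/Wav2Lip-master",
    "GFPGAN": "Wav2Lip-GFPGAN/GFPGAN-master",
}


def broken_links(repo_root: str = ".") -> list[str]:
    """Return the link names that are missing or do not resolve to a directory."""
    root = Path(repo_root)
    problems = []
    for link_name in EXPECTED_LINKS:
        # Path.is_dir() follows symlinks, so a dangling link reports False.
        if not (root / link_name).is_dir():
            problems.append(link_name)
    return problems


if __name__ == "__main__":
    missing = broken_links()
    print("Symlinks OK" if not missing else f"Broken or missing: {', '.join(missing)}")
```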

- Install ffmpeg

- Pretrained models: download the pretrained models from GPT-SoVITS Models and place them in `GPTSoVITS/GPT_SoVITS/pretrained_models`.
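Both prerequisites can be checked from Python before going further. A minimal sketch, assuming the repository root as working directory; `check_prerequisites` is a hypothetical helper, and it only confirms that the model folder is non-empty, not that the right checkpoints are inside.

```python
import shutil
from pathlib import Path

# Location from the instructions above, relative to the repository root.
PRETRAINED_DIR = Path("GPTSoVITS") / "GPT_SoVITS" / "pretrained_models"


def check_prerequisites(repo_root: str = ".") -> list[str]:
    """Return a list of problems; an empty list means both checks passed."""
    problems = []
    # shutil.which returns None when no ffmpeg executable is found on PATH.
    if shutil.which("ffmpeg") is None:
        problems.append("ffmpeg not found on PATH")
    models = Path(repo_root) / PRETRAINED_DIR
    # Only checks that the folder exists and contains at least one entry.
    if not models.is_dir() or not any(models.iterdir()):
        problems.append(f"no pretrained models found in {models}")
    return problems


if __name__ == "__main__":
    for problem in check_prerequisites():
        print("Missing prerequisite:", problem)
```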

- Create an isolated Python environment.

```shell
conda create -n ai_twins python=3.9
conda activate ai_twins
```

- Clone the repo

```shell
git clone https://github.com/Jeru2023/ai_twins.git
cd ai_twins
```

- Install packages

```shell
pip install -r requirements.txt
pip install -r GPTSoVITS/requirements.txt
```

- Overwrite config.py under GPTSoVITS

```shell
cp config-GPTSoVITS.py GPTSoVITS/config.py
```

- To run the tests or the WebUI demos, create three empty folders under `output`: `slice_trunks`, `tts` and `upload`.
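If you prefer to script the folder setup, here is a minimal sketch, assuming it is run from the repository root (the `ai_twins` folder); `prepare_output_dirs` is a hypothetical helper, not part of the project.

```python
from pathlib import Path

# Folder names taken from the installation step above.
OUTPUT_SUBFOLDERS = ("slice_trunks", "tts", "upload")


def prepare_output_dirs(repo_root: str = ".") -> list[Path]:
    """Create output/<name> for each required folder and return their paths.

    exist_ok=True makes the call safe to repeat: existing folders are left as they are.
    """
    paths = []
    for name in OUTPUT_SUBFOLDERS:
        folder = Path(repo_root) / "output" / name
        folder.mkdir(parents=True, exist_ok=True)
        paths.append(folder)
    return paths
```

Calling `prepare_output_dirs()` once from the repository root creates all three folders; calling it again is harmless.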

Please refer to the test files in test folder.

from infer.tts_model import TTSModel

import utils

from infer.persona_enum import PersonaEnum

import os

tts_model = TTSModel()

root_path = utils.get_root_path()

persona_name = PersonaEnum.NORMAL_FEMALE.get_name()

text = '今天天气不错呀,我真的太开心了。'

uuid = utils.generate_unique_id(text)

# en for english

text_language = 'zh'

output_path = os.path.join(root_path, 'output', 'tts', f'{uuid}.wav')

tts_model.inference(persona_name, text, text_language, output_path)import utils

import os

from infer.asr_model import ASRModel

asr_model = ASRModel()

audio_sample = os.path.join(utils.get_root_path(), 'data', 'audio', 'sample_short.wav')

output = asr_model.inference(audio_sample)

print(output)import utils

from tools import slice_tool

in_path = utils.get_root_path() + '/data/audio/sample_long.wav'

out_folder = utils.get_root_path() + '/output/slice_trunks'

out_file_prefix = 'sample'

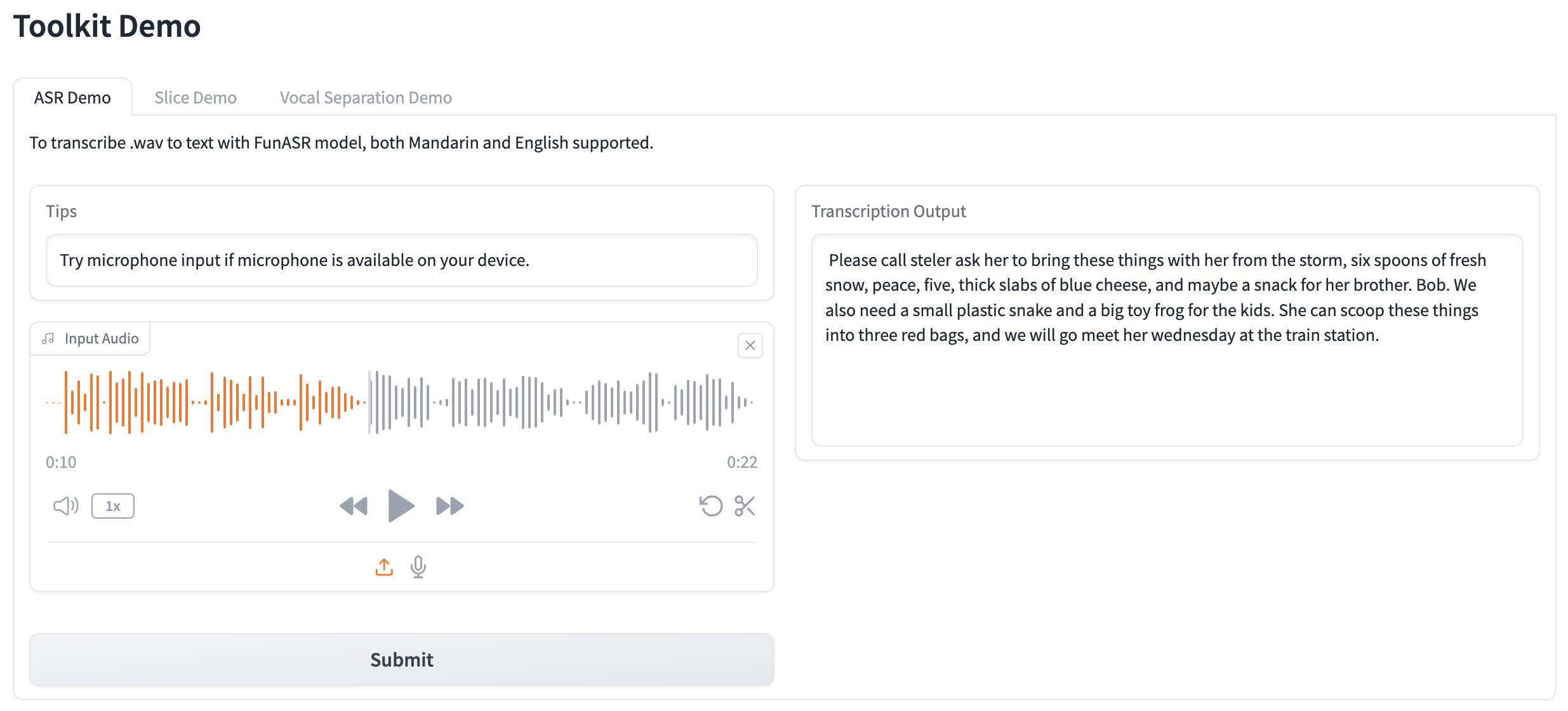

slice_tool.slice_audio(in_path, out_folder, out_file_prefix, threshold=-40) python asr_toolkit_webui.py python tts_toolkit_webui.py- [*] Toolkit - ASR

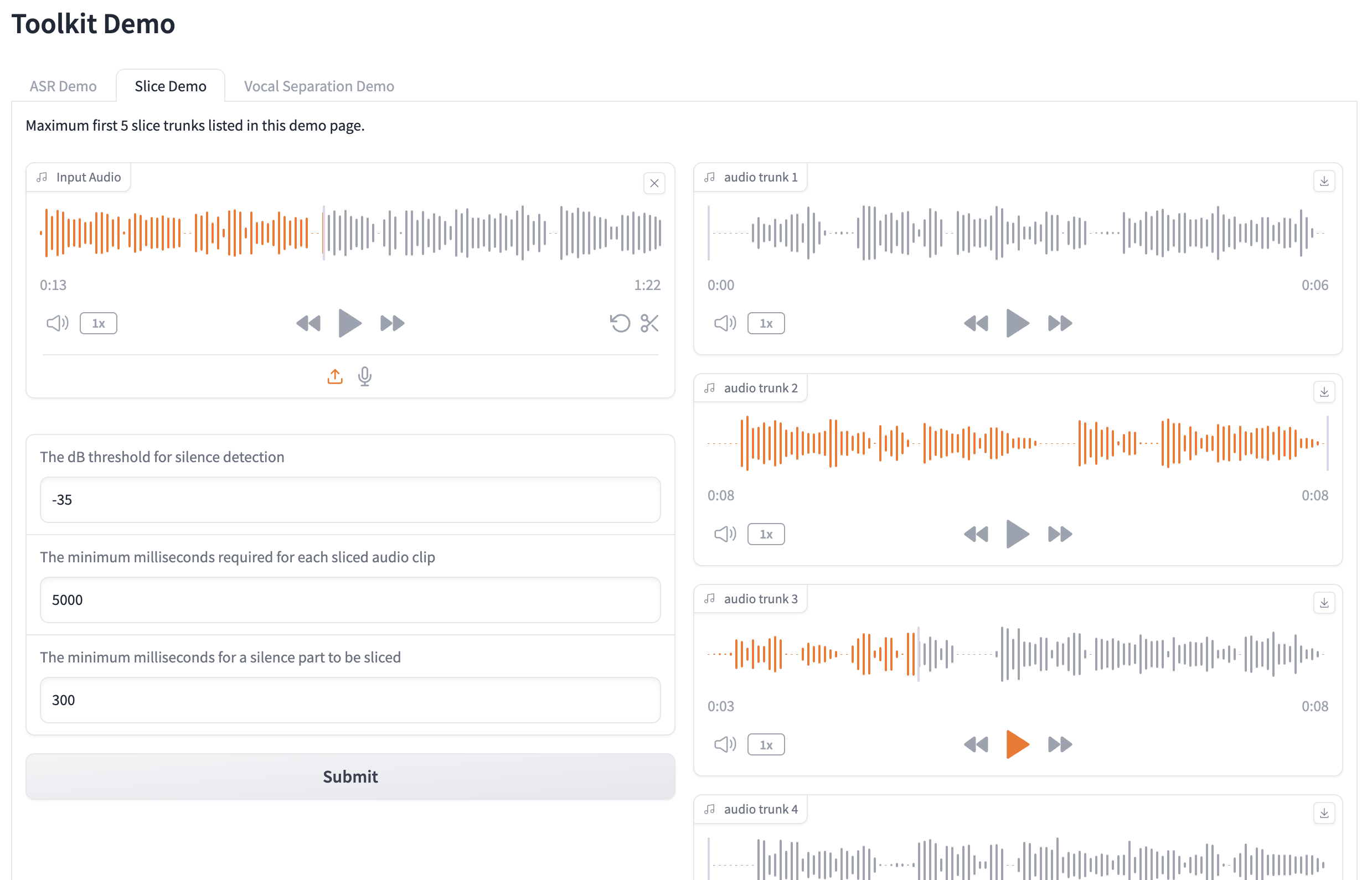

- [*] Toolkit - Audio Slicer

- Toolkit - Vocal Separation

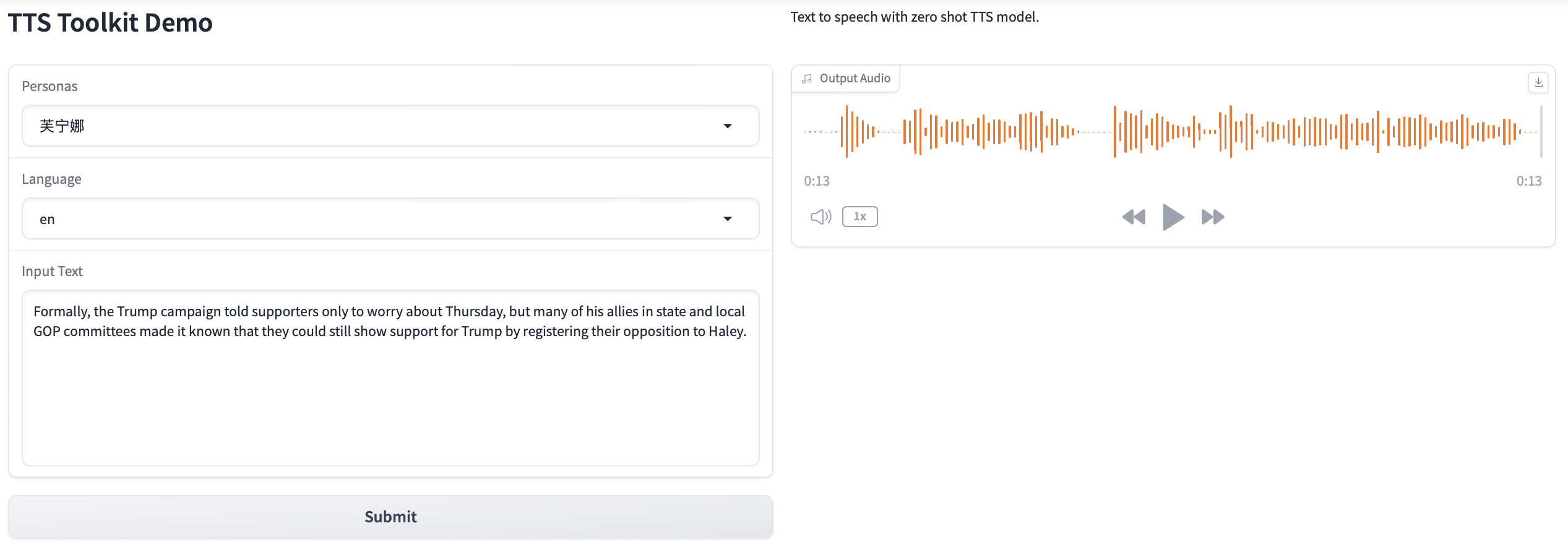

- [*] Toolkit - Zero-shot TTS

- [*] ASR Toolkit Demo WebUI

- [*] TTS Toolkit Demo WebUI

- wav2lip integration

- GFPGAN enhancement

- integration Demo WebUI

See the open issues for a full list of proposed features (and known issues).

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

- Fork the Project

- Create your Feature Branch (`git checkout -b feature/AmazingFeature`)

- Commit your Changes (`git commit -m 'Add some AmazingFeature'`)

- Push to the Branch (`git push origin feature/AmazingFeature`)

- Open a Pull Request

Distributed under the MIT License. See LICENSE.txt for more information.

Jeru Liu - @Jeru_AGI - jeru.token@gmail.com

Project Link: https://github.com/Jeru2023/ai_twins