This is a PyTorch implementation of Deep Deterministic Policy Gradients (DDPG). The policy is deterministic, so exploration in the continuous action space comes from adding noise generated by an Ornstein–Uhlenbeck process to the policy's actions.
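A minimal sketch of Ornstein–Uhlenbeck exploration noise. It assumes θ = 0.15 and σ = 0.2 (the values used by Lillicrap et al.) and a unit time step. The class name `OUNoise` and its parameters are illustrative and may not match this repo's code.

```python
import numpy as np


class OUNoise:
    """Temporally correlated noise: dx = theta * (mu - x) * dt + sigma * sqrt(dt) * N(0, 1)."""

    def __init__(self, action_dim, mu=0.0, theta=0.15, sigma=0.2, dt=1.0, seed=None):
        self.mu = mu * np.ones(action_dim)
        self.theta = theta
        self.sigma = sigma
        self.dt = dt
        self.rng = np.random.default_rng(seed)
        self.reset()

    def reset(self):
        # Restart the process at its mean, typically at the start of each episode
        self.state = self.mu.copy()

    def sample(self):
        # Euler-Maruyama step: pull toward mu, then add scaled Gaussian noise
        dx = self.theta * (self.mu - self.state) * self.dt
        dx = dx + self.sigma * np.sqrt(self.dt) * self.rng.standard_normal(self.state.shape)
        self.state = self.state + dx
        return self.state.copy()
```

During training, the noisy action would be something like `np.clip(policy_action + noise.sample(), low, high)`. Consecutive samples are correlated, so the noise tends to push in one direction for several steps. Independent Gaussian noise would not do this.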

The environments are provided by OpenAI Gym.

The base environment and agent are written to the RL-Glue standard, which provides the library and the abstract classes to inherit from when setting up reinforcement learning experiments.
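In RL-Glue, the agent is an abstract class, and the experiment loop calls its methods at each stage of an episode. The sketch below assumes the `agent_init`/`agent_start`/`agent_step`/`agent_end` method names that are common in RL-Glue Python interfaces. This repo's actual base classes may use different names or signatures. `RandomAgent` is a hypothetical subclass included only to show the inheritance pattern.

```python
from abc import ABC, abstractmethod

import numpy as np


class BaseAgent(ABC):
    """RL-Glue-style agent interface (method names assumed, not taken from this repo)."""

    @abstractmethod
    def agent_init(self, agent_info):
        """Called once before any episode, with a dict of agent parameters."""

    @abstractmethod
    def agent_start(self, observation):
        """Called with the first observation of an episode; returns the first action."""

    @abstractmethod
    def agent_step(self, reward, observation):
        """Called on every later step with the last reward; returns the next action."""

    @abstractmethod
    def agent_end(self, reward):
        """Called with the final reward when the episode terminates."""


class RandomAgent(BaseAgent):
    """Hypothetical subclass that samples uniform actions in [-1, 1]."""

    def agent_init(self, agent_info):
        self.action_dim = agent_info["action_dim"]
        self.rng = np.random.default_rng(agent_info.get("seed"))

    def agent_start(self, observation):
        return self.rng.uniform(-1.0, 1.0, self.action_dim)

    def agent_step(self, reward, observation):
        return self.rng.uniform(-1.0, 1.0, self.action_dim)

    def agent_end(self, reward):
        pass
```

A DDPG agent would fill in the same four methods. It would select actions with the actor plus exploration noise, and store transitions and run updates in `agent_step`.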

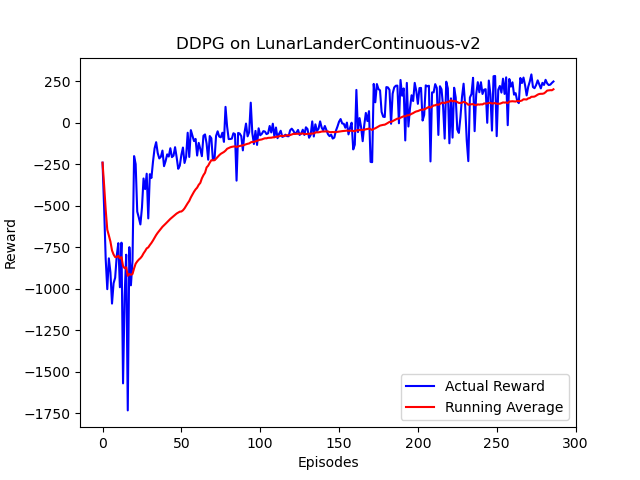

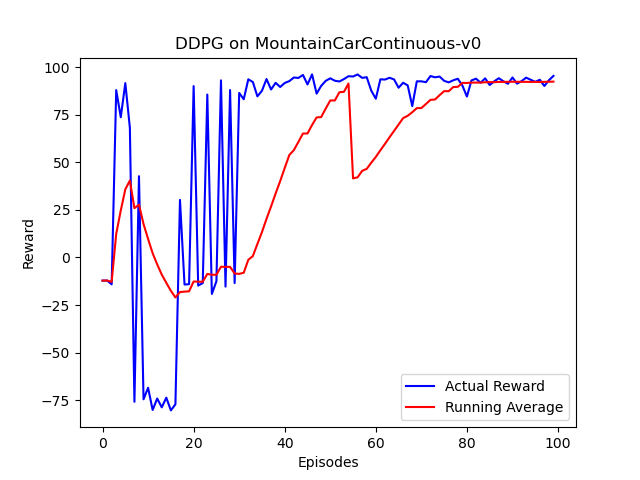

| Environment | reward sum for each episode | result |

| LunarLanderContinuous-v2 |

|

|

| MountainCarContinuous-v0 |

|

|

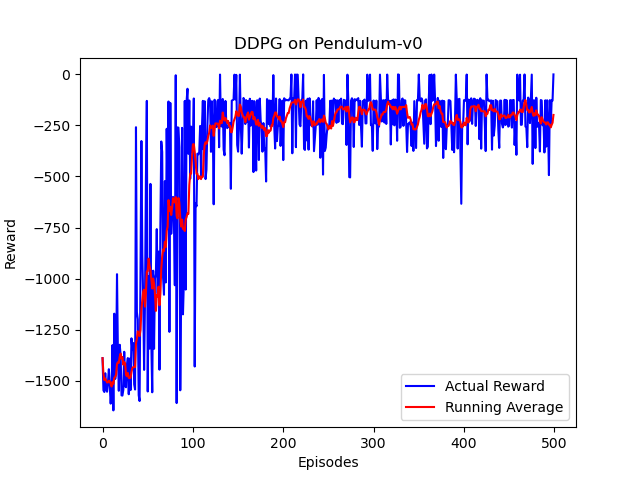

| Pendulum-v0 |

|

|

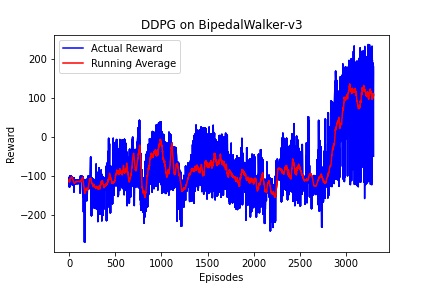

| BipedalWalker-v3 |

|

|

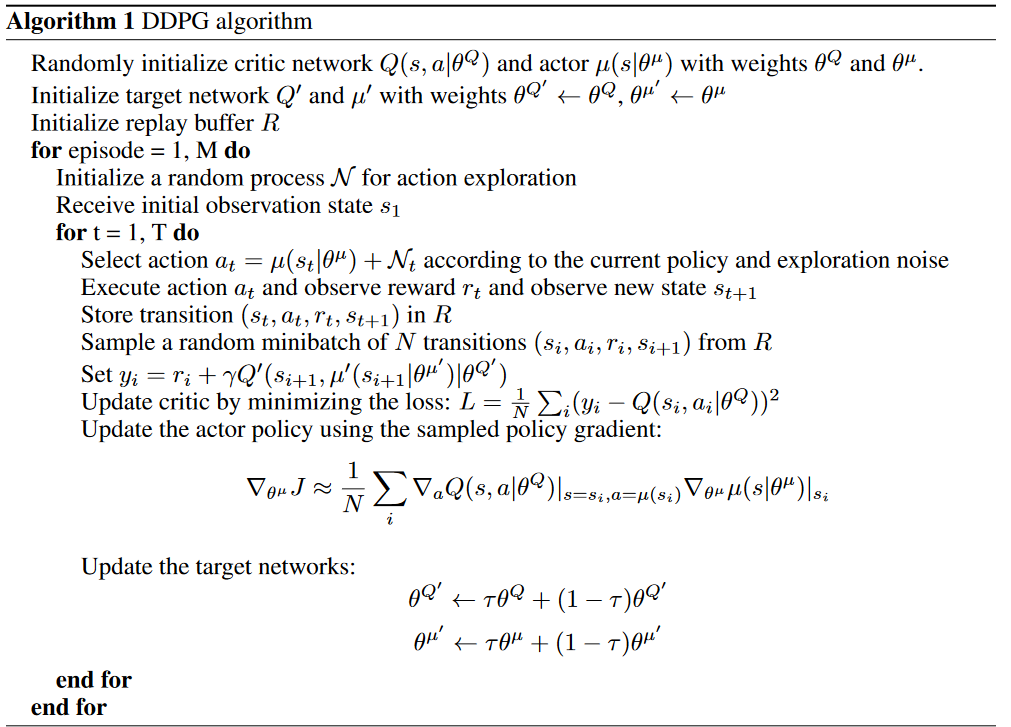

The actor network is a 3-layer neural network that takes the state s as input and outputs the action a to take, denoted Pi(s). Its two hidden layers have 400 and 300 units respectively, as in the paper by Lillicrap et al.

The input and hidden layers use ReLU activations, and the output layer uses tanh, which bounds each action component to [−1, 1].
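A minimal PyTorch sketch of the actor described above. It assumes that the state and action sizes are passed in. It also assumes that the tanh output in [−1, 1] is rescaled to the environment's action bounds somewhere else. `Actor` and its argument names are illustrative.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Actor(nn.Module):
    """Deterministic policy Pi(s): maps a batch of states to actions in [-1, 1]."""

    def __init__(self, state_dim, action_dim, hidden1=400, hidden2=300):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden1)
        self.fc2 = nn.Linear(hidden1, hidden2)
        self.out = nn.Linear(hidden2, action_dim)

    def forward(self, state):
        x = F.relu(self.fc1(state))
        x = F.relu(self.fc2(x))
        # tanh bounds every action component to [-1, 1]
        return torch.tanh(self.out(x))
```

For an environment with symmetric bounds, `actor(state) * max_action` would map the output onto the real action range. Here `max_action` is a hypothetical per-environment constant.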

The critic network is a 3-layer neural network that takes the state s and the corresponding action a as input and outputs the state-action value Q(s, a). Its two hidden layers have 400 and 300 units respectively, as in the paper by Lillicrap et al.

The input and hidden layers use ReLU activations.
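A matching sketch of the critic. Following Lillicrap et al., the action is concatenated at the second hidden layer rather than at the input. Whether this repo does the same is an assumption; concatenating state and action at the input is a common, simpler alternative. The output layer is left linear so that Q can take any real value.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class Critic(nn.Module):
    """Action-value function Q(s, a): one scalar per (state, action) pair."""

    def __init__(self, state_dim, action_dim, hidden1=400, hidden2=300):
        super().__init__()
        self.fc1 = nn.Linear(state_dim, hidden1)
        # The action joins the network at the second hidden layer
        self.fc2 = nn.Linear(hidden1 + action_dim, hidden2)
        self.out = nn.Linear(hidden2, 1)

    def forward(self, state, action):
        x = F.relu(self.fc1(state))
        x = F.relu(self.fc2(torch.cat([x, action], dim=1)))
        # Linear output: Q-values are not bounded
        return self.out(x)
```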

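The actor network is optimized by minimizing `-Q(s, Pi(s)).mean()`, i.e. by adjusting the actor so that the critic assigns a higher value to the actions it chooses.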

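The critic network is optimized by minimizing `mse_loss(Q(s, a), r + γ*Q'(s', Pi'(s')))`, the mean squared error between its prediction and the bootstrapped target. Here Q' and Pi' are the slowly updated target copies of the critic and actor.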
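The following sketch shows one possible DDPG update that combines the two losses above with the soft target update (τ = 0.001 from the hyperparameters). It makes these assumptions:

- The batch has already been sampled as float tensors, with `reward` and `done` shaped `(N, 1)`.
- `done` is 1.0 for terminal transitions, which masks out the bootstrap term. This is a standard detail, but the text above does not state it.
- The caller creates the optimizers.

`make_target`, `soft_update` and `ddpg_update` are hypothetical names.

```python
import copy

import torch
import torch.nn.functional as F


def make_target(net):
    """Target network: a frozen copy of net that starts with identical weights."""
    target = copy.deepcopy(net)
    for p in target.parameters():
        p.requires_grad_(False)
    return target


def soft_update(target, source, tau):
    """target <- tau * source + (1 - tau) * target, parameter by parameter."""
    with torch.no_grad():
        for t_param, param in zip(target.parameters(), source.parameters()):
            t_param.mul_(1.0 - tau).add_(param, alpha=tau)


def ddpg_update(batch, actor, critic, actor_target, critic_target,
                actor_opt, critic_opt, gamma=0.99, tau=0.001):
    state, action, reward, next_state, done = batch

    # Critic: regress Q(s, a) toward r + gamma * Q'(s', Pi'(s')); no gradient flows into the target
    with torch.no_grad():
        next_q = critic_target(next_state, actor_target(next_state))
        target_q = reward + gamma * (1.0 - done) * next_q
    critic_loss = F.mse_loss(critic(state, action), target_q)
    critic_opt.zero_grad()
    critic_loss.backward()
    critic_opt.step()

    # Actor: minimize -Q(s, Pi(s)).mean(); only the actor's optimizer steps here
    actor_loss = -critic(state, actor(state)).mean()
    actor_opt.zero_grad()
    actor_loss.backward()
    actor_opt.step()

    # Target networks slowly track the learned networks
    soft_update(critic_target, critic, tau)
    soft_update(actor_target, actor, tau)
    return critic_loss.item(), actor_loss.item()
```

The actor loss also writes gradients into the critic's parameters. Those gradients are harmless because `critic_opt.zero_grad()` clears them before the next critic step. The weight decay listed below would go on the critic's optimizer, e.g. `torch.optim.Adam(critic.parameters(), weight_decay=1e-2)`. Learning rates are left to the caller.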

- Replay buffer size of 1,000,000.

- Weight decay = 10⁻²

- Discount factor γ = 0.99

- Soft target updates τ = 0.001

- Final layer weights and biases initialized from the uniform distribution [−3×10⁻³, 3×10⁻³]

- All other layers' weights initialized from the uniform distribution [−1/√f, 1/√f], where f is the fan-in of the layer

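The following sketch covers the initialization scheme and the replay buffer from the list above. The fan-in of an `nn.Linear` layer is its number of input features (`weight.shape[1]`). The list only mentions weights for the hidden layers, so initializing their biases from the same range is an assumption. `init_weights` and `ReplayBuffer` are hypothetical names. A `deque` keeps the buffer short, although a preallocated array would sample faster at a capacity of 1,000,000.

```python
import math
import random
from collections import deque

import numpy as np
import torch
import torch.nn as nn


def init_weights(layers, final_layer, final_bound=3e-3):
    """Fan-in uniform init for hidden layers, small uniform init for the output layer."""
    with torch.no_grad():
        for layer in layers:
            bound = 1.0 / math.sqrt(layer.weight.shape[1])  # fan-in = number of input features
            layer.weight.uniform_(-bound, bound)
            layer.bias.uniform_(-bound, bound)
        # Keeps the initial outputs (actions / Q-values) close to zero
        final_layer.weight.uniform_(-final_bound, final_bound)
        final_layer.bias.uniform_(-final_bound, final_bound)


def _to_tensor(values):
    return torch.as_tensor(np.asarray(values), dtype=torch.float32)


class ReplayBuffer:
    """Fixed-capacity FIFO store of (s, a, r, s', done) transitions."""

    def __init__(self, capacity=1_000_000):
        self.buffer = deque(maxlen=capacity)  # the oldest transitions are dropped first

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement, stacked into float tensors
        batch = random.sample(self.buffer, batch_size)
        states, actions, rewards, next_states, dones = zip(*batch)
        return (_to_tensor(states), _to_tensor(actions),
                _to_tensor(rewards).unsqueeze(1), _to_tensor(next_states),
                _to_tensor(dones).unsqueeze(1))

    def __len__(self):
        return len(self.buffer)
```

PyTorch's default `nn.Linear` initialization already draws weights and biases from [−1/√f, 1/√f]. In practice, the explicit call mostly matters for the final layer's narrower range.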

- Instructions for installing the OpenAI Gym environment on Windows

- tqdm

- ffmpeg (`conda install -c conda-forge ffmpeg`)

- PyTorch (`conda install pytorch torchvision cudatoolkit=10.2 -c pytorch`)

- numpy

- argparse (part of the Python standard library)

- moviepy

`git clone https://github.com/Jason-CKY/pytorch_DDPG.git`

`cd pytorch_DDPG`

Edit the experiment parameters in `main.py`:

* Set `environment_parameters['gym_environment']` to the environment you want to train in.

Train with:

`python main.py`

Test a trained agent with:

`python test.py`

Usage: `test.py [-h] [--env ENV] [--checkpoint CHECKPOINT] [--gif]`

Optional arguments:

- `-h`, `--help`: show this help message and exit
- `--env ENV`: environment name
- `--checkpoint CHECKPOINT`: name of the `.pth` checkpoint file under `model_weights/env/`
- `--gif`: save the rendered episode as a GIF to `model_weights/env/recording.gif`
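The following is a hypothetical reconstruction of how `test.py` could declare these flags with `argparse` and resolve the checkpoint path. Only the flag names and help strings come from the usage text above. The absence of defaults and the `checkpoint_path` helper are assumptions. The helper also assumes that `env` in `model_weights/env/` stands for the name passed via `--env`, so it needs both `--env` and `--checkpoint` to be set.

```python
import argparse
import os


def build_parser():
    parser = argparse.ArgumentParser(prog="test.py")
    parser.add_argument("--env", help="Environment name")
    parser.add_argument("--checkpoint",
                        help="Name of checkpoint.pth file under model_weights/env/")
    parser.add_argument("--gif", action="store_true",
                        help="Save rendered episode as a gif to model_weights/env/recording.gif")
    return parser


def checkpoint_path(args):
    # Hypothetical helper: model_weights/<env name>/<checkpoint file>
    return os.path.join("model_weights", args.env, args.checkpoint)
```

For example, `python test.py --env Pendulum-v0 --checkpoint best.pth --gif` would resolve to `model_weights/Pendulum-v0/best.pth` under this sketch, where `best.pth` is a made-up file name.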