Official Python implementation of ASGRL from the ICML 2022 paper: Leveraging Approximate Symbolic Models for Reinforcement Learning via Skill Diversity

Note: The code has been refactored for better readability. If you encounter any problems, feel free to email lguan9@asu.edu or submit an issue on GitHub.

- Q-Learning Agent

- Tabular Q-Learning is used to implement the low-level policy for each skill.

- File: q_learning.py
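As a rough illustration of what a tabular Q-Learning skill policy involves, here is a minimal sketch. The class name `TabularQAgent`, its parameters, and its defaults are assumptions made for this example and are not taken from q_learning.py.

```python
import random
from collections import defaultdict


class TabularQAgent:
    """Hypothetical minimal tabular Q-learning agent (not the repository's class)."""

    def __init__(self, n_actions, alpha=0.1, gamma=0.99, epsilon=0.1, seed=0):
        self.n_actions = n_actions
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration probability
        self.rng = random.Random(seed)
        # Q-table: state -> list of action values, zero-initialised on first access.
        self.q = defaultdict(lambda: [0.0] * n_actions)

    def act(self, state):
        # Epsilon-greedy action selection; ties go to the lowest action index.
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        return values.index(max(values))

    def update(self, state, action, reward, next_state, done):
        # One-step Q-learning target; no bootstrapping from terminal states.
        target = reward if done else reward + self.gamma * max(self.q[next_state])
        self.q[state][action] += self.alpha * (target - self.q[state][action])
```

States only need to be hashable (for example tuples of grid coordinates), which is what makes a dictionary-backed table convenient here.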

- Diversity Q-Learning

- A Diversity-Q-Learning agent maintains a set of skill policies (i.e., a set of Q-Learning agents) to reach the same subgoal.

- The diversity rewards are computed in this agent.

- File: diversity_q_learning.py

- The curriculum-based version can be found in: curriculum_diversity_learning.py
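To make the idea of a diversity reward more concrete, the sketch below uses a count-based, DIAYN-style bonus: a skill is rewarded when the subgoal state it ends in is one that mostly that skill reaches. This is an assumption chosen for illustration; the exact reward computed in diversity_q_learning.py may differ, and the class name `DiversityBonus` is hypothetical.

```python
import math
from collections import defaultdict


class DiversityBonus:
    """Hypothetical count-based diversity reward over K skills sharing one subgoal."""

    def __init__(self, n_skills, smoothing=1.0):
        self.n_skills = n_skills
        self.smoothing = smoothing
        # counts[state][k]: how often skill k completed the subgoal in `state`.
        self.counts = defaultdict(lambda: [0] * n_skills)

    def record(self, skill, subgoal_state):
        self.counts[subgoal_state][skill] += 1

    def reward(self, skill, subgoal_state):
        c = self.counts[subgoal_state]
        # Smoothed estimate of p(skill | subgoal_state).
        p = (c[skill] + self.smoothing) / (sum(c) + self.smoothing * self.n_skills)
        # log p(skill | state) - log(1 / K): positive when the state identifies the skill.
        return math.log(p) + math.log(self.n_skills)
```

Under this assumption, each skill policy would add the bonus to its environment reward on reaching the subgoal, which pushes the skills toward different subgoal states.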

- Hierarchical-Diversity-RL (Meta-Controller)

- The Hierarchical-Diversity-RL agent implements the meta-controller.

- A Hierarchical-Diversity-RL agent maintains a set of Diversity-Q-Learning agents to achieve different subgoals.

- Given a list of linearizations of the landmark sequence (i.e., a list of subgoal sequences), the Hierarchical-Diversity-RL agent is responsible for finding the right skill to achieve each subgoal and the final goal. Note that a set of relative landmark orderings can admit multiple linearizations.

- File: hierarchical_diversity_rl.py

- The curriculum-based version: hierarchical_diversity_rl_curriculum.py
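The remark about multiple linearizations can be illustrated with a small sketch that enumerates every total order consistent with a set of pairwise "must come before" constraints. The function name and the landmark names in the usage note are hypothetical; in this repository the sequences are provided manually in the config rather than computed.

```python
def linearizations(landmarks, before):
    """Yield every ordering of `landmarks` consistent with the pairs in `before`.

    `before` is a collection of (a, b) pairs meaning landmark a must precede b.
    """
    def extend(prefix, remaining):
        if not remaining:
            yield list(prefix)
            return
        for cand in remaining:
            # cand may come next only if none of its predecessors is still pending.
            if all(not (b == cand and a in remaining) for a, b in before):
                rest = [x for x in remaining if x != cand]
                yield from extend(prefix + [cand], rest)

    yield from extend([], list(landmarks))
```

For example, with landmarks `get-key`, `get-tool`, `open-door` and the single constraint that `get-key` precedes `open-door`, three of the six permutations are valid subgoal sequences.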

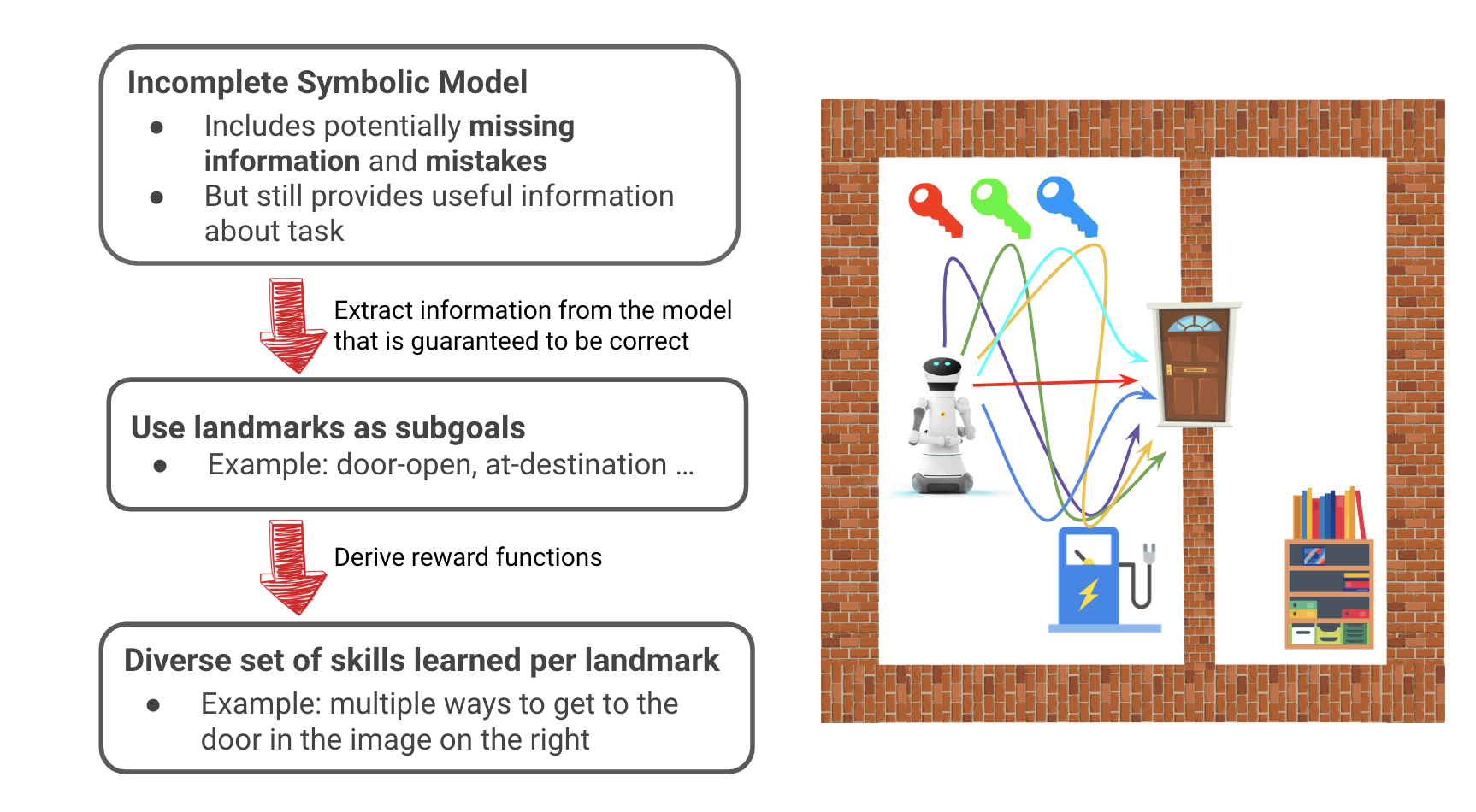

- Summary

- A Hierarchical-Diversity-RL agent (Meta-Controller) maintains a set of Diversity-Q-Learning agents

- A Diversity-Q-Learning agent maintains a set of Q-Learning agents

- Scripts

- q_train_mario.py

- q_curriculum_train_mario.py

- The list of available command-line arguments can be found in the class Agent_Config

- Logging

- We implement the logging system (in the class Wandb_Logger) with the powerful experiment tracking tool Weights & Biases.

- The logging system is only activated when the flag `--use-wandb` is added, e.g., `python q_train_mario.py --use-wandb`
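A flag-gated logger of this kind could look roughly like the sketch below. `OptionalLogger`, `parse_args`, and the project name are hypothetical and do not reproduce the repository's Wandb_Logger; only `wandb.init` and `wandb.log` are real Weights & Biases calls.

```python
import argparse


class OptionalLogger:
    """Hypothetical logger that only talks to Weights & Biases when enabled."""

    def __init__(self, use_wandb, project="asgrl-demo"):
        self.history = []  # local copy of logged metrics, kept in both modes
        self._wandb = None
        if use_wandb:
            import wandb  # imported lazily so the package stays optional
            self._wandb = wandb
            wandb.init(project=project)  # project name is an assumption

    def log(self, metrics, step):
        self.history.append((step, dict(metrics)))
        if self._wandb is not None:
            self._wandb.log(metrics, step=step)


def parse_args(argv=None):
    parser = argparse.ArgumentParser()
    # store_true makes the flag default to False, so logging is opt-in.
    parser.add_argument("--use-wandb", action="store_true",
                        help="enable Weights & Biases logging")
    return parser.parse_args(argv)
```

Importing `wandb` only inside the enabled branch means that runs without the flag do not require the package to be installed.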

- General Instructions:

- To customize the system, you can take our current code (based on the Mario environment) as an example.

- The example config can be found in the config directory.

- Note that our implementation separates the symbolic planning part from the reinforcement learning part, and this repository only contains the code for the reinforcement learning part. The extracted landmark sequences therefore need to be provided manually in the config class.

- To apply ASGRL in a new environment, you also need to provide a function that tells whether a given subgoal/landmark is satisfied. See the `check_skill_success` function in the class `Hierarchical_Diversity_RL` or `Curriculum_Hierarchical_Diversity_RL` for our Mario domain.
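For a new environment, such a subgoal check might be organised as a table of predicates over a symbolic state, as in the sketch below. The state layout, the landmark names, and the function `landmark_satisfied` are assumptions for illustration; the actual signature of `check_skill_success` should be taken from the repository.

```python
def _at_goal(state):
    # True only when both positions are known and equal.
    pos = state.get("agent_pos")
    return pos is not None and pos == state.get("goal_pos")


# Hypothetical mapping from landmark name to a predicate over a state dict.
LANDMARK_CHECKS = {
    "has-key": lambda s: s.get("carrying") == "key",
    "door-open": lambda s: bool(s.get("door_open", False)),
    "at-goal": _at_goal,
}


def landmark_satisfied(landmark, state):
    """Return True if `landmark` holds in `state`; unknown landmarks raise KeyError."""
    return bool(LANDMARK_CHECKS[landmark](state))
```

Keeping the predicates in one table makes it easy to check that every landmark named in the configured sequences has a corresponding test.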

- An Example of Customizing the System for a New Household Environment

- An example of how to apply ASGRL to a new environment can be found in the example directory.

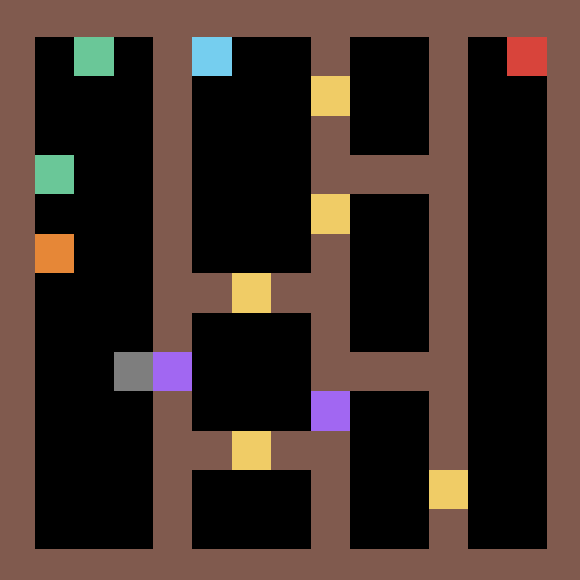

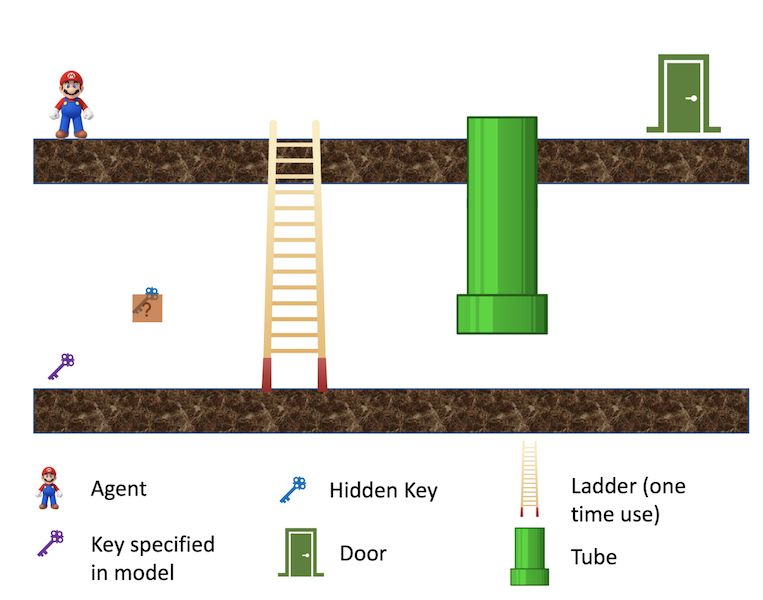

- Note that this new Household environment is more challenging than the one(s) in the paper:

- This new Household environment has more rooms, which makes it more difficult to explore.

- As in the paper, the robot's task is to reach the destination (i.e., the red block). The difference is that the new environment has two locked doors and more restrictions.

- There are three keys. Two of them (i.e., the green ones) can be used to open the corresponding locked door, and one (i.e., the orange one) is only for distraction.

- Locked doors are marked as purple blocks and unlocked doors are marked as yellow blocks.

- The robot (i.e., the gray block) can only carry one key at a time.

- There is a charging dock (i.e., the blue block). However, the robot must choose carefully when to recharge itself. More specifically, the robot should only recharge itself after it unlocks the two purple doors and before it enters the final room.

- Hence, the optimal policy for this task is: pick up the key for the first locked door, unlock the first locked door, return and pick up the key for the second door, unlock the second door, head to the charging dock, go to the final room, and perform the final task at the destination.

- We assume the human knows the structure of this task but doesn't know that there are multiple keys or which key opens which door. So the extracted landmark sequence is: `pickup-first-key`, `unlock-door-0`, `pickup-second-key`, `unlock-door-1`, `recharge`, `at-final-room`, `at-destination`.

- We also provide a reference implementation of the baselines Landmark-Shaping and Vanilla Q-Learning. (Note that the code has been refactored for better readability; please contact the authors if you encounter any runtime problems.)

- We ran each algorithm 10 times (not a formal experiment). Vanilla Q-Learning never succeeded within 500k environment steps, highlighting the need for human symbolic advice. Landmark-Shaping never succeeded either: it is biased by the shaping rewards and gets stuck in several local minima, such as always picking up the nearest (wrong) key, or always heading to the final room without recharging.

- ASGRL solved the task in 6 of the 10 runs. We observed some factors that negatively affect the performance: (a) In a couple of runs, ASGRL found a goal-achieving policy for a while, but as training proceeded it forgot this policy and eventually converged to a "bad" policy. One possible reason is that the meta-controller failed to adapt effectively to changes in the low-level skills. (b) Some easier-to-learn skills had already converged before some preceding skills discovered new low-level subgoal state(s). As a result, ASGRL may occasionally suffer from insufficient exploration.