![[PePy - Downloads]](https://static.pepy.tech/badge/hebg)

![[PePy - Downloads per week]](https://static.pepy.tech/badge/hebg/week)

![[CodeStyle - Black]](https://img.shields.io/badge/code%20style-black-000000.svg)

![[Licence - GPLv3]](https://img.shields.io/github/license/MathisFederico/Crafting?style=plastic)

This package is meant to build programatic hierarchical behaviors as graphs to compare them to human explanations of behavior.

We take the definition of "behavior" as a function from observation to action.

pip install hebgHere is an example to show how could we hierarchicaly build an explanable behavior to pet a cat.

"""

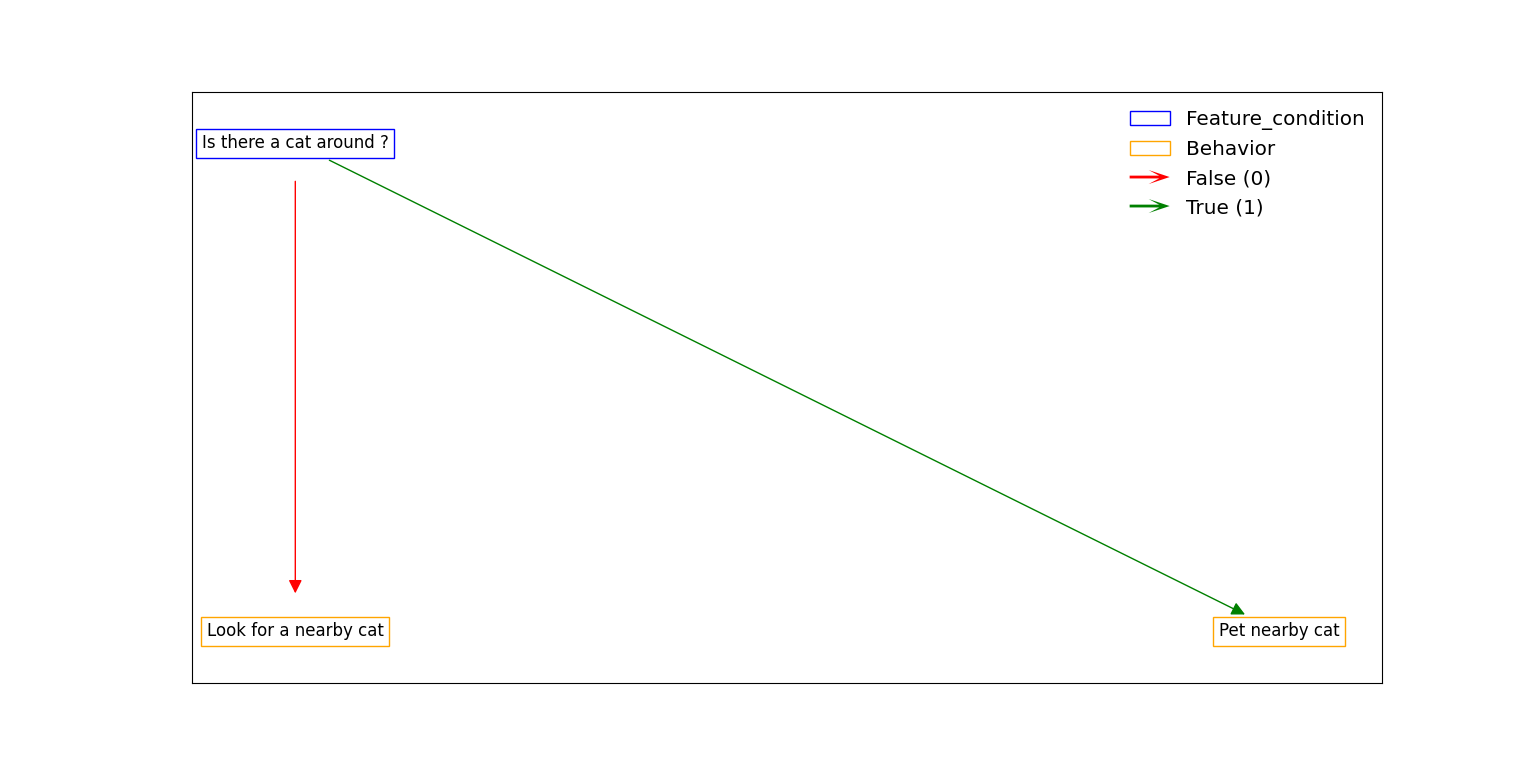

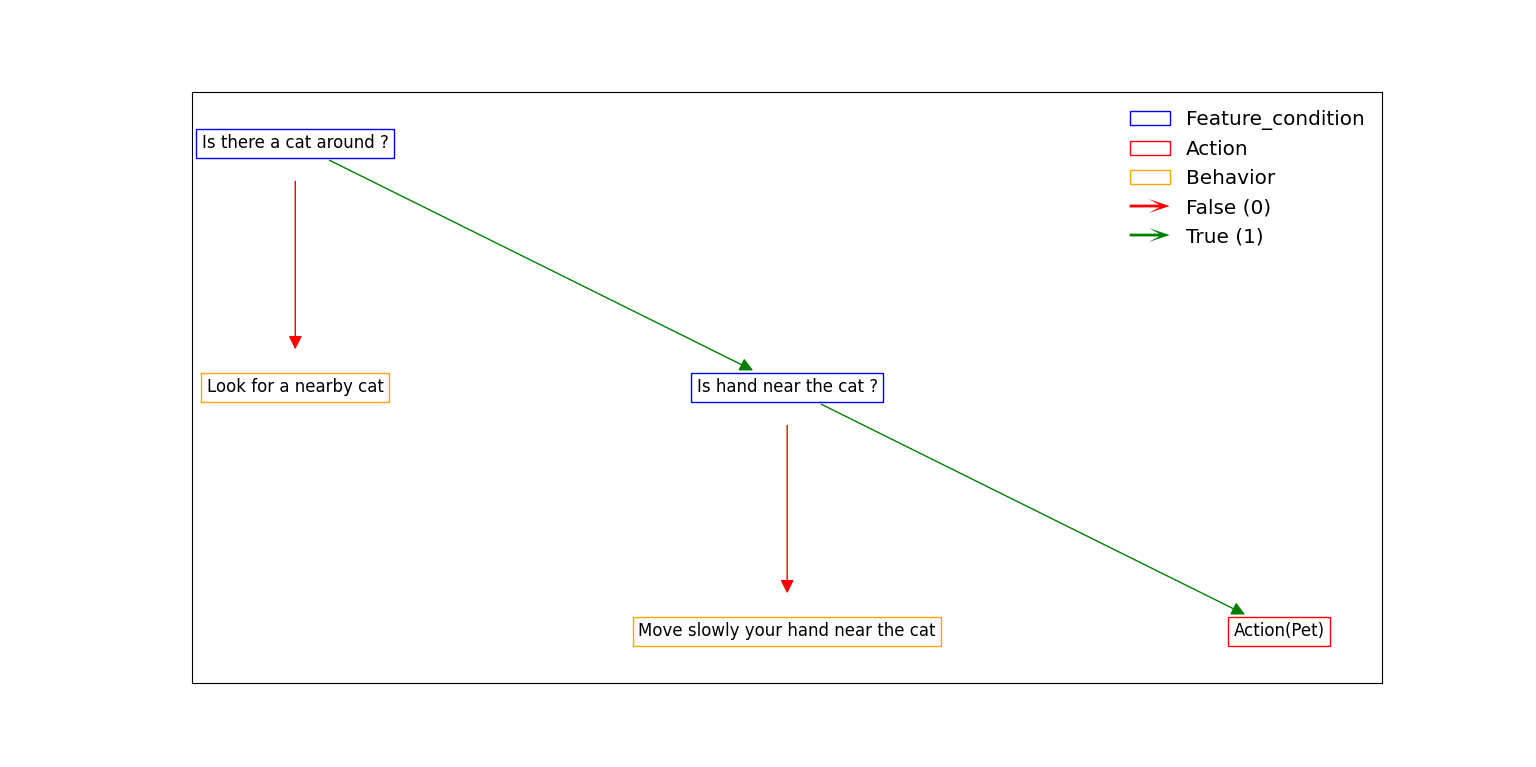

Here is the hierarchical structure that we would want:

```

PetACat:

IsThereACatAround ?

-> Yes:

PetNearbyCat

-> No:

LookForACat

PetNearbyCat:

IsYourHandNearTheCat ?

-> Yes:

Pet

-> No:

MoveYourHandNearTheCat

```

"""

from hebg import HEBGraph, Action, FeatureCondition, Behavior

from hebg.unrolling import unroll_graph

# Add a fundamental action

class Pet(Action):

def __init__(self) -> None:

super().__init__(action="Pet")

# Add a condition on the observation

class IsYourHandNearTheCat(FeatureCondition):

def __init__(self, hand) -> None:

super().__init__(name="Is hand near the cat ?")

self.hand = hand

def __call__(self, observation):

# Could be a very complex function that returns 1 is the hand is near the cat else 0.

if observation["cat"] == observation[self.hand]:

return int(True) # 1

return int(False) # 0

# Add an unexplainable Behavior (without a graph, but a function that can be called).

class MoveYourHandNearTheCat(Behavior):

def __init__(self) -> None:

super().__init__(name="Move slowly your hand near the cat")

def __call__(self, observation, *args, **kwargs) -> Action:

# Could be a very complex function that returns actions from any given observation

return Action("Move hand to cat")

# Add a sub-behavior

class PetNearbyCat(Behavior):

def __init__(self) -> None:

super().__init__(name="Pet nearby cat")

def build_graph(self) -> HEBGraph:

graph = HEBGraph(self)

is_hand_near_cat = IsYourHandNearTheCat(hand="hand")

graph.add_edge(is_hand_near_cat, MoveYourHandNearTheCat(), index=int(False))

graph.add_edge(is_hand_near_cat, Pet(), index=int(True))

return graph

# Add an other condition on observation

class IsThereACatAround(FeatureCondition):

def __init__(self) -> None:

super().__init__(name="Is there a cat around ?")

def __call__(self, observation):

# Could be a very complex function that returns 1 is there is a cat around else 0.

if "cat" in observation:

return int(True) # 1

return int(False) # 0

# Add an other unexplainable Behavior (without a graph, but a function that can be called).

class LookForACat(Behavior):

def __init__(self) -> None:

super().__init__(name="Look for a nearby cat")

def __call__(self, observation, *args, **kwargs) -> Action:

# Could be a very complex function that returns actions from any given observation

return Action("Move to a cat")

# Finally, add the main Behavior

class PetACat(Behavior):

def __init__(self) -> None:

super().__init__(name="Pet a cat")

def build_graph(self) -> HEBGraph:

graph = HEBGraph(self)

is_a_cat_around = IsThereACatAround()

graph.add_edge(is_a_cat_around, LookForACat(), index=int(False))

graph.add_edge(is_a_cat_around, PetNearbyCat(), index=int(True))

return graph

if __name__ == "__main__":

pet_a_cat_behavior = PetACat()

observation = {

"cat": "sofa",

"hand": "computer",

}

# Call on observation

action = pet_a_cat_behavior(observation)

print(action) # Action("Move hand to cat")

# Obtain networkx graph

graph = pet_a_cat_behavior.graph

print(list(graph.edges(data="index")))

# Draw graph using matplotlib

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

graph.draw(ax)

plt.show()When ploting an HEBGraph of a behavior, only the graph of the behavior itself is shown. To see the full hierarchical graph (including sub-behaviors), we need to unroll the graph as such:

from hebg.unrolling import unroll_graph

unrolled_graph = unroll_graph(pet_a_cat_behavior.graph, add_prefix=False)

# Is also a networkx graph

print(list(unrolled_graph.edges(data="index")))

# Draw graph using matplotlib

import matplotlib.pyplot as plt

fig, ax = plt.subplots()

unrolled_graph.draw(ax)

plt.show()Note that unexplainable behaviors (the one without graphs) are kept as is.

Once you have a HEBGraph, you can use it to generate a working python code that replicates the HEBGraph's behavior:

code = pet_a_cat_behavior.graph.generate_source_code()

with open("pet_a_cat.py", "w") as pyfile:

pyfile.write(code)Will generate the code bellow:

from hebg.codegen import GeneratedBehavior

# Require 'Look for a nearby cat' behavior to be given.

# Require 'Move slowly your hand near the cat' behavior to be given.

class PetTheCat(GeneratedBehavior):

def __call__(self, observation):

edge_index = self.feature_conditions['Is there a cat around ?'](observation)

if edge_index == 0:

return self.known_behaviors['Look for a nearby cat'](observation)

if edge_index == 1:

edge_index_1 = self.feature_conditions['Is hand near the cat ?'](observation)

if edge_index_1 == 0:

return self.known_behaviors['Move slowly your hand near the cat'](observation)

if edge_index_1 == 1:

return self.actions['Action(Pet)'](observation)Whenever you encounter a 🐛 bug or have 🎉 feature request, report this via Github issues.

If you wish to contribute directly, see CONTRIBUTING