This code pattern is the third part of the series Extracting Textual Insights from Videos with IBM Watson. Before continuing, complete the Extract audio from video and Build custom Speech to Text model with speaker diarization capabilities code patterns of the series, because all three code patterns are linked.

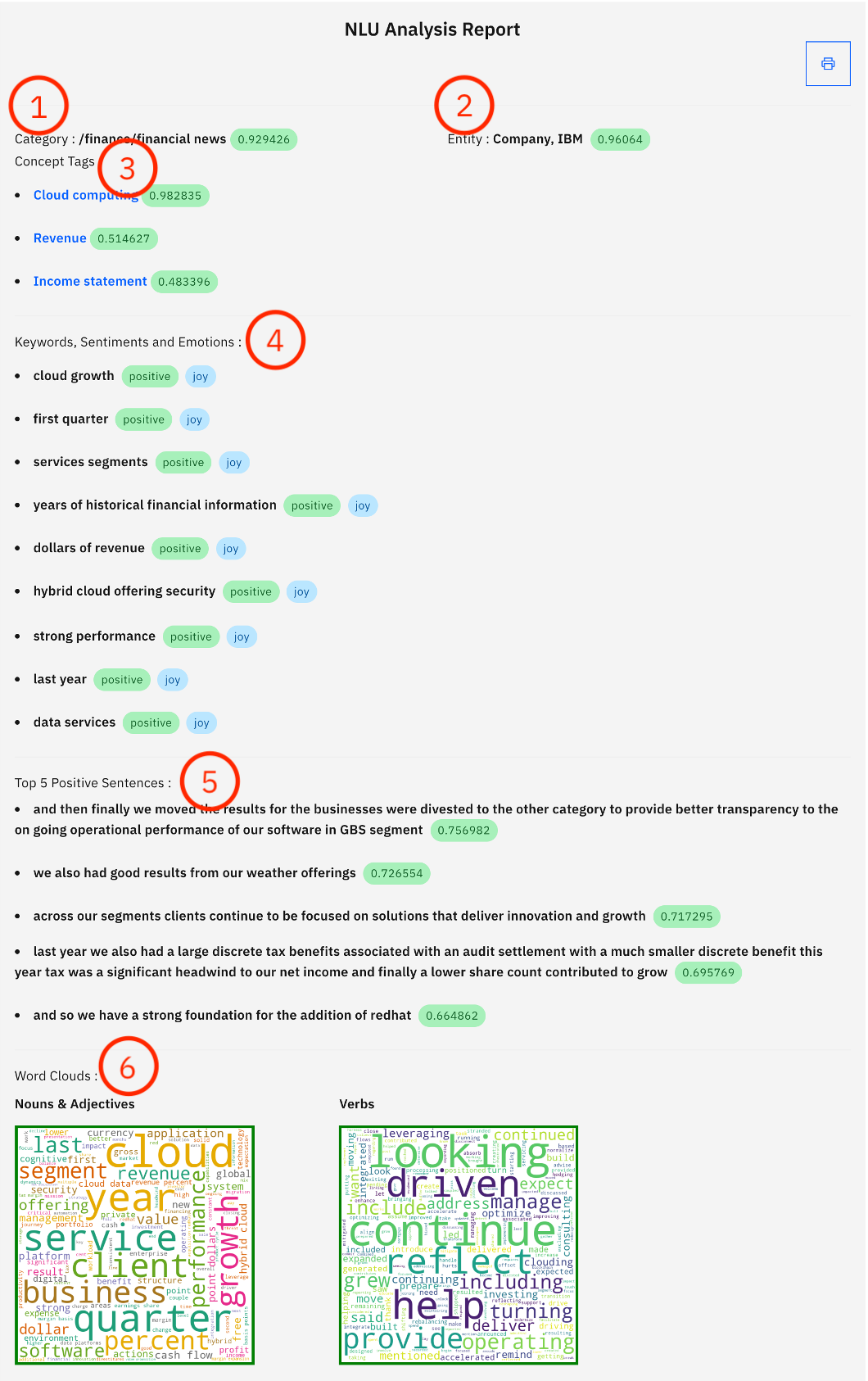

Natural Language Understanding includes a set of text analytics features that can extract meaning from unstructured data such as a text file. Tone Analyzer, on the other hand, detects emotions and communication styles in text. This code pattern combines the capabilities of both services in a Python Flask application. The application produces an NLU Analysis Report from a transcript of the IBM Q1 2019 earnings call video recording. The report consists of a sentiment analysis of the meeting, the top positive sentences spoken in the meeting, and word clouds based on keywords.

In this code pattern, given a text file, we learn how to extract keywords, emotions, sentiments, positive sentences, and much more using Watson Natural Language Understanding and Tone Analyzer.

When you have completed this code pattern, you will understand how to:

- Use advanced NLP to analyze text and extract meta-data from content such as concepts, entities, keywords, categories, sentiment and emotion.

- Leverage Tone Analyzer's cognitive linguistic analysis to identify a variety of tones at both the sentence and document level.

- Connect applications directly to Cloud Object Storage.

-

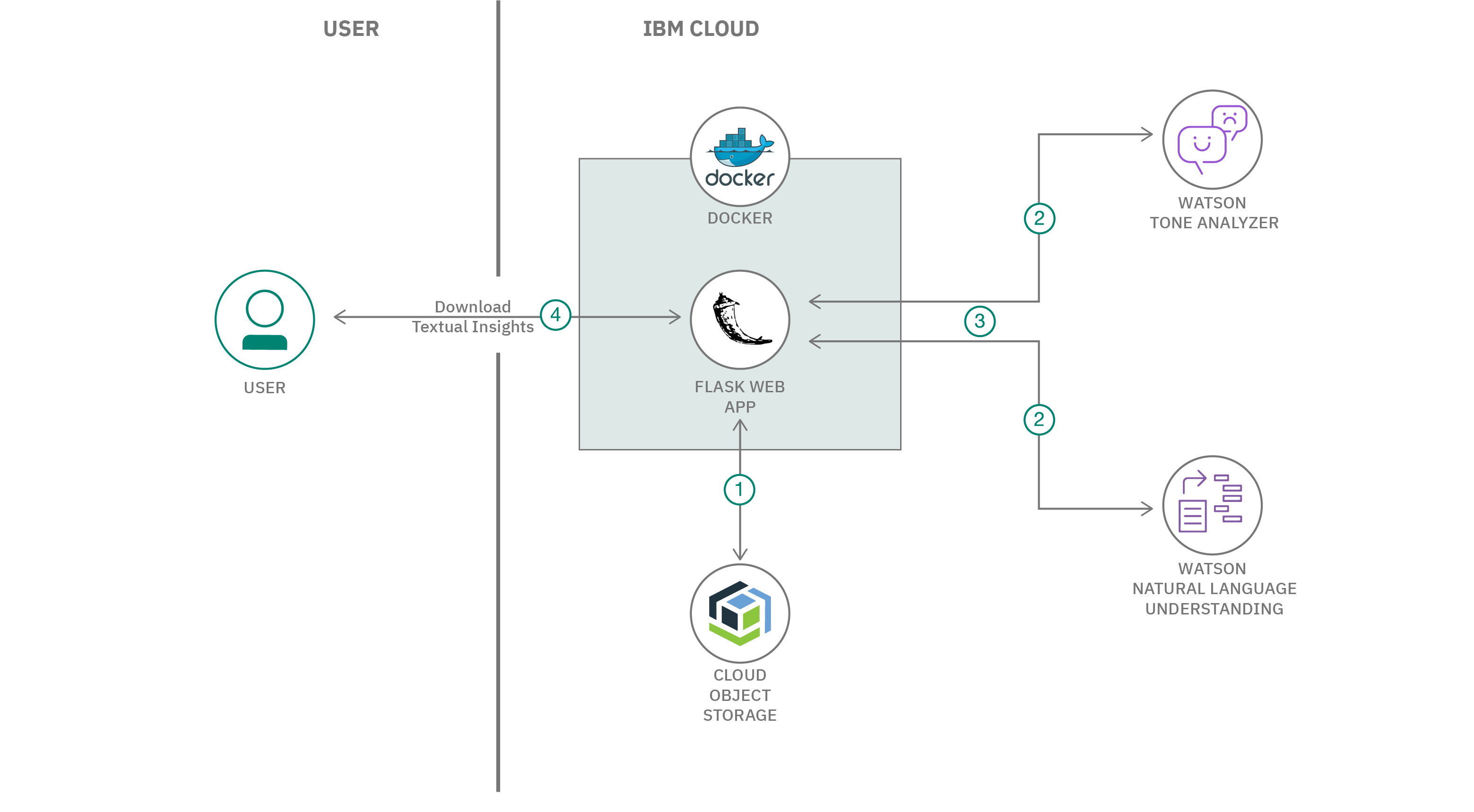

The transcribed text from the previous code pattern of the series is retrived from Cloud Object Storage

-

Watson Natural Language Understanding and Watson Tone Analyzer are used to extract insights from the text.

-

The response from Natural Language Understanding and Watson Tone Analyzer is analyzed by the application and a report is generated.

-

User can download the report which consists of the textual insights.

Clone the use-advanced-nlp-and-tone-analyser-to-analyse-speaker-insights repo locally. In a terminal, run:

$ git clone https://github.com/IBM/use-advanced-nlp-and-tone-analyser-to-analyse-speaker-insights

We will be using the following datasets from Cloud Object Storage:

Note: These files were uploaded to Cloud Object Storage in the previous code pattern of the series.

- `earnings-call-test-data.txt`: used to extract categories, concept tags, entities, keywords, sentiments, emotions, positive sentences, and word clouds.

- `earnings-call-Q-and-A.txt`: used to extract categories, concept tags, entities, keywords, sentiments, emotions, positive sentences, and word clouds.
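The application reads these transcripts from Cloud Object Storage before analyzing them. The sketch below shows one way to do that retrieval in Python. It assumes an S3-compatible client such as the one returned by `ibm_boto3.client("s3", ...)` from the IBM COS SDK for Python; the function name `fetch_transcript` and any bucket name you pass are hypothetical and not taken from the repo.

```python
def fetch_transcript(cos_client, bucket: str, key: str, encoding: str = "utf-8") -> str:
    """Download one transcript object from Cloud Object Storage and decode it.

    Assumption: `cos_client` is an ibm_boto3 S3 client (or anything with the
    same get_object(Bucket=..., Key=...) method); the function is hypothetical.
    """
    response = cos_client.get_object(Bucket=bucket, Key=key)
    # The object body is a stream: read all bytes, then decode them to text.
    return response["Body"].read().decode(encoding)
```

With the IBM COS SDK installed, a client can be created with `ibm_boto3.client("s3", ibm_api_key_id=..., ibm_service_instance_id=..., config=Config(signature_version="oauth"), endpoint_url=...)`, where `Config` comes from `ibm_botocore.client`, using your Cloud Object Storage credentials and endpoint. Passing the client in as a parameter keeps the function easy to test with a stand-in object.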

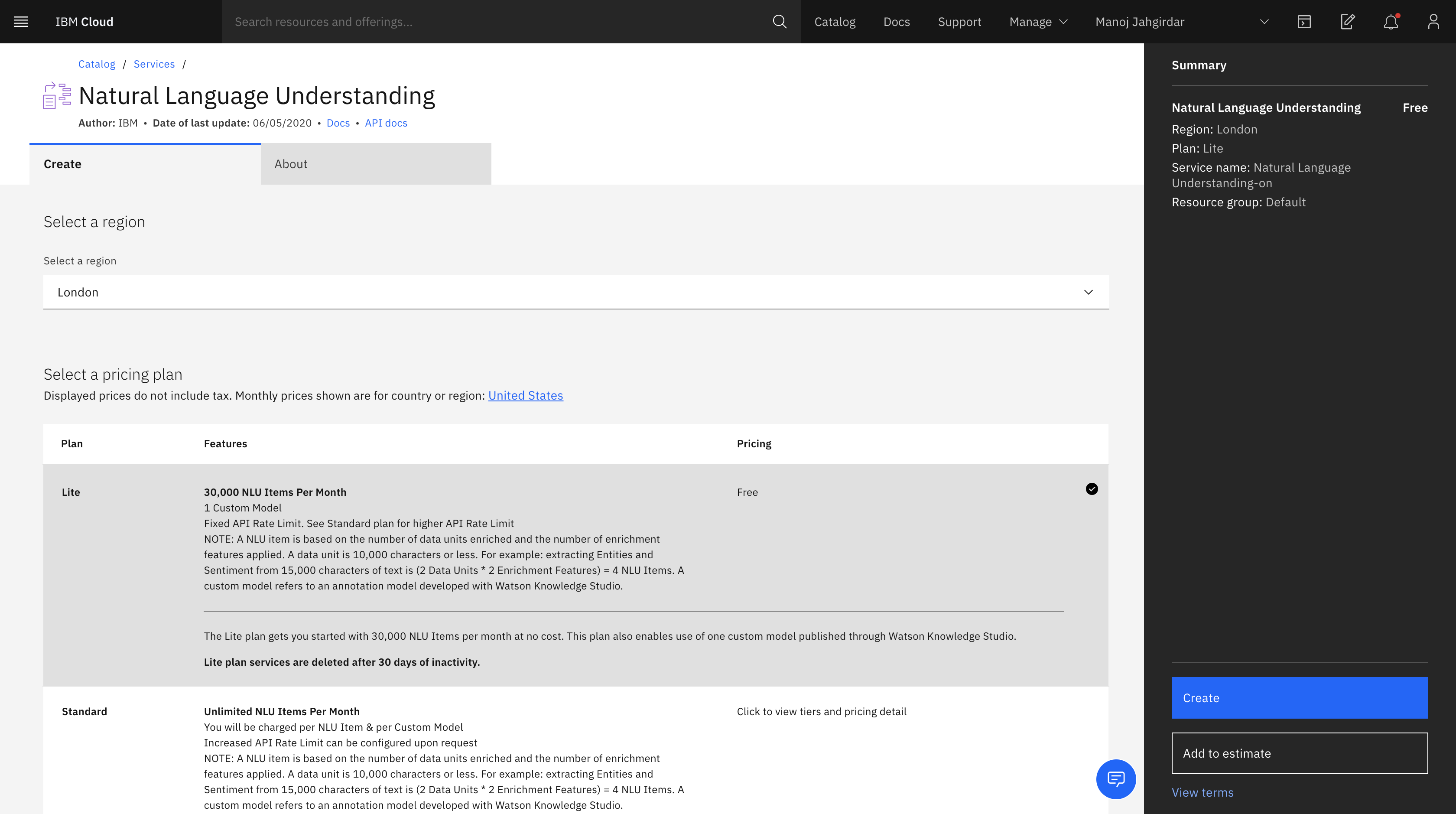

- On IBM Cloud, create a Natural Language Understanding service. Under **Select a pricing plan**, select **Lite** and click **Create** as shown.

- In the Natural Language Understanding dashboard, click **Service credentials**.

- Click **New credential** and add a service credential as shown. Once the credential is created, copy it using the copy icon (two small overlapping squares) and save it in a text file; you will need it in later steps of this code pattern.

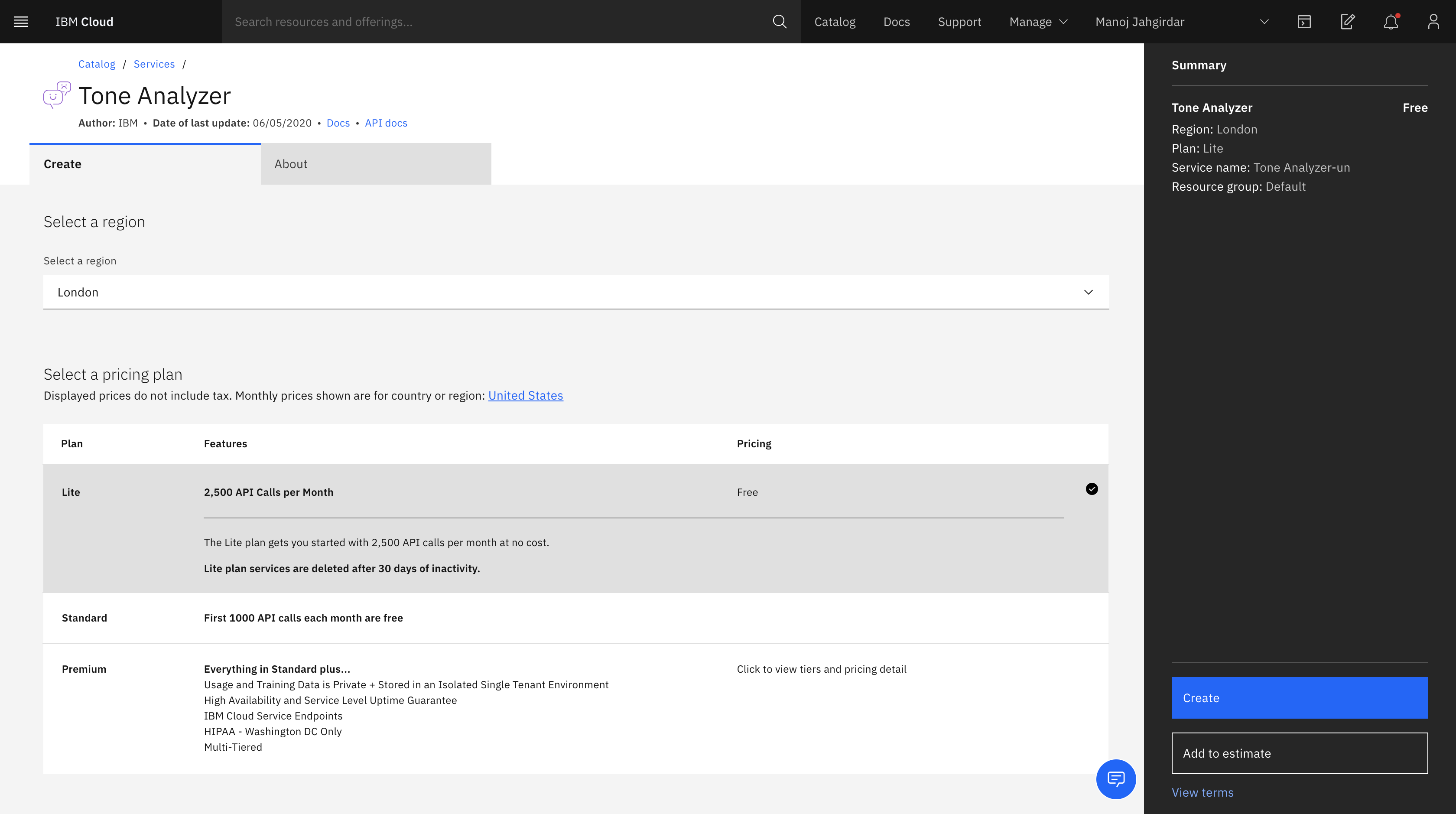

- On IBM Cloud, create a Tone Analyzer service. Under **Select a pricing plan**, select **Lite** and click **Create** as shown.

- In the Tone Analyzer dashboard, click **Service credentials**.

- Click **New credential** and add a service credential as shown. Once the credential is created, copy it using the copy icon (two small overlapping squares) and save it in a text file; you will need it in later steps of this code pattern.

- In the repo you cloned for the first code pattern of the series, you updated the `credentials.json` file with your Cloud Object Storage credentials. Copy that file into the parent folder of the repo you cloned for this code pattern.

- In the repo parent folder, open the `naturallanguageunderstanding.json` file, paste the Natural Language Understanding credentials you saved earlier, and save the file.

- Similarly, in the repo parent folder, open the `toneanalyzer.json` file, paste the Tone Analyzer credentials you saved earlier, and save the file.
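Before starting the app, it can help to check that the two Watson credential files were actually filled in. The following is a minimal sketch. It assumes each file contains the JSON copied from the service's credentials page and that the application needs the `apikey` and `url` fields from it. The helper name is hypothetical. `credentials.json` for Cloud Object Storage uses different fields, so this sketch does not check it.

```python
import json
from pathlib import Path

# Assumption: each Watson credentials file holds the JSON copied from the
# service's "Service credentials" page, including "apikey" and "url".
REQUIRED_KEYS = ("apikey", "url")


def load_service_credentials(path: str) -> dict:
    """Load one Watson credentials file and fail early if a required field is empty."""
    with Path(path).open(encoding="utf-8") as fh:
        creds = json.load(fh)
    # A field counts as missing if it is absent or left empty.
    missing = [key for key in REQUIRED_KEYS if not creds.get(key)]
    if missing:
        raise ValueError(f"{path} is missing: {', '.join(missing)}")
    return creds
```

For example, `load_service_credentials("toneanalyzer.json")` raises a `ValueError` that names the missing field if the file still lacks its `url`. Otherwise, it returns the parsed credentials as a dict.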

With Docker Installed

- Build the Docker image from the Dockerfile as follows:

$ docker image build -t use-advanced-nlp-to-extract-insights .

- Once the image is built, run it as follows:

$ docker run -p 8080:8080 use-advanced-nlp-to-extract-insights

- The application will be available at http://localhost:8080.

Without Docker

- Change directory to the repo parent folder:

$ cd use-advanced-nlp-and-tone-analyser-to-analyse-speaker-insights/

- Install the Python libraries with pip:

$ pip install -r requirements.txt

- Finally, run the application as follows:

$ python app.py

- The application will be available at http://localhost:8080.

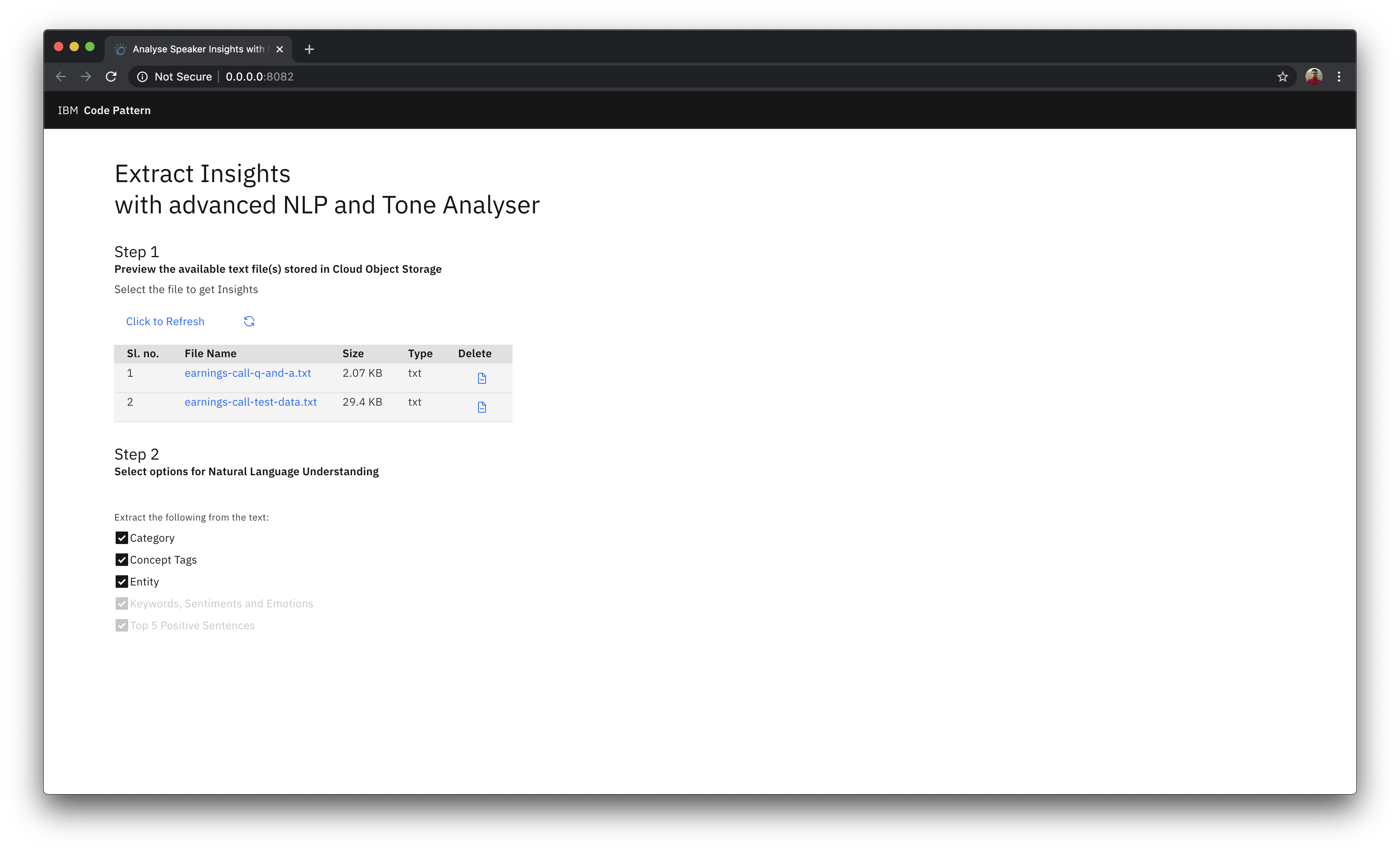

- Visit http://localhost:8080 in your browser to run the application.

We extract categories, concept tags, entities, keywords, sentiments, emotions, the top 5 positive sentences, and word clouds from the text in just two steps:

- Click `earnings-call-test-data.txt` to choose it as the text file from which to extract insights.

Note: These files are present in Cloud Object Storage; they were uploaded in the previous code pattern of the series.

- Select the entities you want to extract from the text and click **Analyze** as shown. The selected entities are extracted and an analysis report is generated.

Note: Analyzing the text takes about 2 minutes, so please be patient.

- More about the entities:

  - Category: Categorize your content using a five-level classification hierarchy. View the complete list of categories here.
  - Concept Tags: Identify high-level concepts that aren't necessarily directly referenced in the text.
  - Entity: Find people, places, events, and other types of entities mentioned in your content. View the complete list of entity types and subtypes here.
  - Keywords: Search your content for relevant keywords.
  - Sentiments: Analyze the sentiment toward specific target phrases and the sentiment of the document as a whole.
  - Emotions: Analyze emotion conveyed by specific target phrases or by the document as a whole.
  - Positive sentences: The Watson Tone Analyzer service uses linguistic analysis to detect emotional and language tones in written text.

- Learn more about the features of:

- Watson Natural Language Understanding service. Learn more.

- Watson Tone Analyzer service. Learn more.
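The Natural Language Understanding options above correspond to entries in the `features` object of the service's `/v1/analyze` request. Presumably, the options you select before clicking **Analyze** end up there. The sketch below builds a request body of that kind and posts it using only the standard library. It authenticates with HTTP basic auth, using `apikey` as the username. The following are assumptions for illustration and may differ from the repo's `app.py`: the mapping from the report's labels to feature options, the limits, and the version date. "Positive sentences" is left out because it comes from Tone Analyzer, not NLU.

```python
import base64
import json
import urllib.request

# Hypothetical mapping from the report's option labels to NLU v1 feature
# names and options; the limits are illustrative, not taken from the repo.
FEATURE_OPTIONS = {
    "Category": ("categories", {"limit": 3}),
    "Concept Tags": ("concepts", {"limit": 3}),
    "Entity": ("entities", {"limit": 10}),
    "Keywords": ("keywords", {"limit": 10, "sentiment": True, "emotion": True}),
    "Sentiments": ("sentiment", {"document": True}),
    "Emotions": ("emotion", {"document": True}),
}


def build_analyze_payload(text: str, selected: list) -> dict:
    """Build a /v1/analyze request body for the selected report options."""
    unknown = [name for name in selected if name not in FEATURE_OPTIONS]
    if unknown:
        raise ValueError(f"unsupported option(s): {unknown}")
    features = {}
    for name in selected:
        feature, options = FEATURE_OPTIONS[name]
        features[feature] = dict(options)  # copy so the defaults stay untouched
    return {"text": text, "features": features}


def analyze(payload: dict, creds: dict, version: str = "2022-04-07") -> dict:
    """POST the payload to NLU using basic auth with the username "apikey"."""
    url = f"{creds['url'].rstrip('/')}/v1/analyze?version={version}"
    token = base64.b64encode(f"apikey:{creds['apikey']}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Usage example: `analyze(build_analyze_payload(text, ["Category", "Keywords"]), creds)` returns the parsed JSON response. Here, `creds` is a dict holding the `apikey` and `url` from `naturallanguageunderstanding.json`. The payload builder is kept separate from the network call so you can inspect or test it without contacting the service.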

- Once the NLU Analysis Report is generated, you can review it. The report consists of:

  - Features extracted by Watson Natural Language Understanding
  - Features extracted by Watson Tone Analyzer
  - Other features

- Category: Because we used the IBM Q1 2019 earnings call recording as the dataset, the category is extracted as **finance**, specifically **financial news**.

  Note: The model's confidence scores are shown in the green bubble tags.

- Entity: The extracted entity is **Company**, specifically **IBM**, indicating that most of the emphasis in the video recording was on the company, IBM.

- Concept Tags: The top 3 concept tags extracted from the video are **Cloud computing**, **Revenue**, and **Income statement**, indicating that the speakers discussed these topics most often.

- Keywords, Sentiments, and Emotions: The top keywords are extracted along with their sentiments and emotions, giving a sentiment analysis of the entire meeting.

- Top Positive Sentences: Based on emotional tone and language tone, the positive sentences spoken in the video are extracted, limited to the top 5.

- Word Clouds: Based on the keywords, nouns and adjectives as well as verbs are analyzed, and the results are turned into word clouds.

- The report can be printed by clicking the **print** button as shown.
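The Tone Analyzer response lists the tones for each sentence under `sentences_tone`. Each entry has a `tones` array whose items carry a `tone_id` and a `score`. Tone IDs include `joy` (an emotional tone) and `confident` (a language tone). The following is a minimal sketch of selecting the top 5 positive sentences from that response. It assumes "positive" means the sum of a sentence's Joy and Confident scores. The repo may weight or combine tones differently, and the function name is hypothetical.

```python
# Assumption: a sentence's positivity is its Joy (emotional tone) score plus
# its Confident (language tone) score; the repo may rank sentences differently.
POSITIVE_TONES = ("joy", "confident")


def top_positive_sentences(tone_response: dict, limit: int = 5) -> list:
    """Return up to `limit` sentence texts, most positive first."""
    scored = []
    for sentence in tone_response.get("sentences_tone", []):
        score = sum(
            tone["score"]
            for tone in sentence.get("tones", [])
            if tone["tone_id"] in POSITIVE_TONES
        )
        if score > 0:  # skip sentences with neither positive tone
            scored.append((score, sentence["text"]))
    # sort() is stable, even with reverse=True, so ties keep transcript order.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [text for _, text in scored[:limit]]
```

Sentences that carry neither tone are dropped entirely. A response with no `sentences_tone` key yields an empty list instead of raising an error.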

We have seen how to extract meaningful insights from the transcribed text files. In the next code pattern of the series, we will learn how to plug these three code patterns together. The result is a single application in which uploading any video extracts the audio, transcribes it, and extracts meaningful insights.

This code pattern is licensed under the Apache License, Version 2. Separate third-party code objects invoked within this code pattern are licensed by their respective providers pursuant to their own separate licenses. Contributions are subject to the Developer Certificate of Origin, Version 1.1 and the Apache License, Version 2.