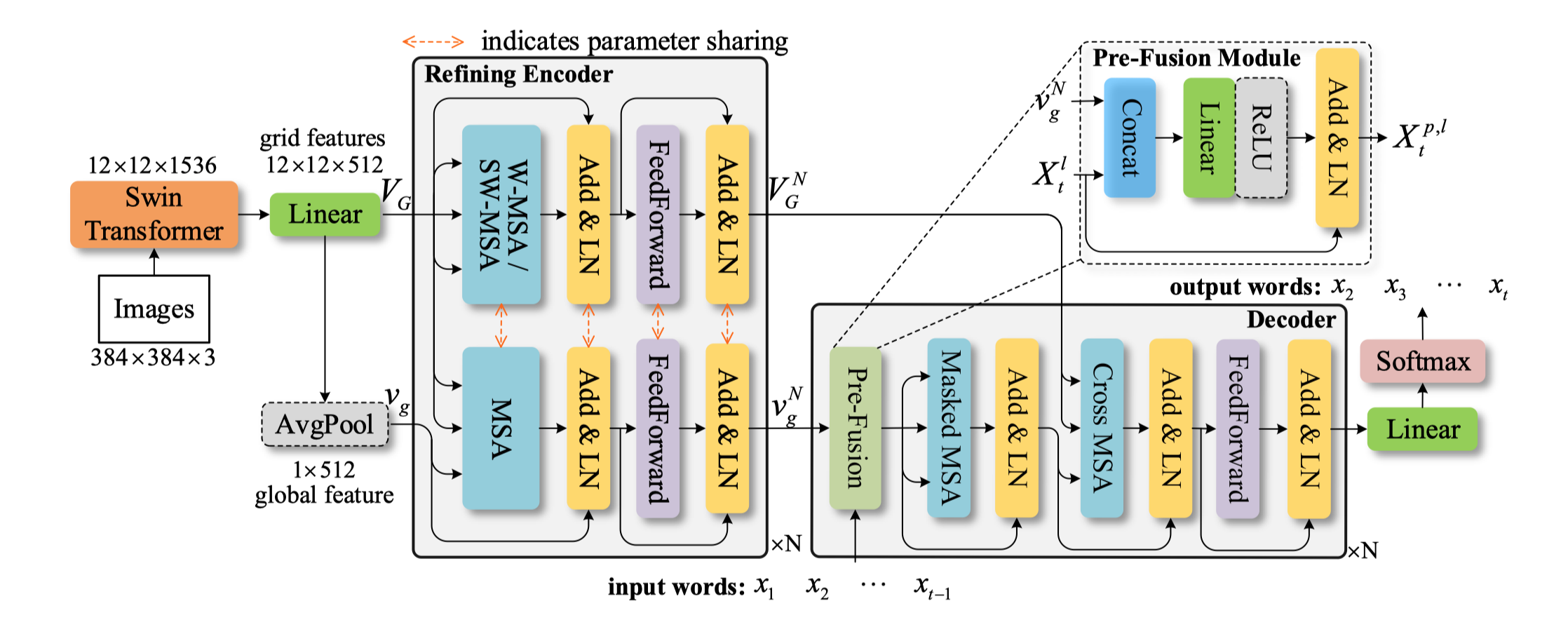

Implementation of End-to-End Transformer Based Model for Image Captioning [PDF/AAAI] [PDF/Arxiv] [AAAI 2022]

For an introduction in Chinese, see README_CN.md.

- Python 3.7.4

- PyTorch 1.5.1

- TorchVision 0.6.0

- coco-caption

- numpy

- tqdm

Following the coco-caption README.md, first download the Stanford CoreNLP 3.6.0 code and models, which SPICE requires. To do this, run:

cd coco_caption

bash get_stanford_models.sh

The files needed for training and evaluation are stored in the mscoco folder, which is organized as follows:

    mscoco/
    |--feature/
        |--coco2014/
           |--train2014/
           |--val2014/
           |--test2014/
           |--annotations/
    |--misc/
    |--sent/
    |--txt/

where the mscoco/feature/coco2014 folder contains the raw image and annotation files of the MSCOCO 2014 dataset. The remaining files can be downloaded from Google Drive or Baidu Netdisk (extraction code: hryh).
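Before starting a run, it can help to confirm that the folder matches the layout described above. The following is a minimal sketch, not part of the repository: the helper `missing_dirs` and the list `EXPECTED_DIRS` are hypothetical names, and the nesting is an assumption read off the tree and the sentence about mscoco/feature/coco2014.

```python
from pathlib import Path

# Assumed layout, read off the tree above; adjust if your copy differs.
EXPECTED_DIRS = [
    "feature/coco2014/train2014",
    "feature/coco2014/val2014",
    "feature/coco2014/test2014",
    "feature/coco2014/annotations",
    "misc",
    "sent",
    "txt",
]


def missing_dirs(root):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    # "mscoco" is the folder name used in this README.
    for d in missing_dirs("mscoco"):
        print("missing:", d)
```

If nothing is printed, every expected folder is present under mscoco/.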

NOTE: To speed up training, you can also pre-extract image features of MSCOCO 2014 with Swin-Transformer (or another backbone) and save them as ***.npz files in mscoco/feature. See coco_dataset.py and data_loader.py for how features are read and prepared.

In this case, you need to modify pure_transformer.py to delete the backbone module. This should be a straightforward change.
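As a rough illustration of the pre-extraction idea, the sketch below writes one feature array per image to a .npz file and reads it back with NumPy. It is an assumption-laden example rather than the repository's code: the key name `features`, the helper names, the file name, and the array shape are all placeholders. Check coco_dataset.py and data_loader.py for the key and shape the loader actually expects.

```python
import os
import tempfile

import numpy as np


def save_features(path, features):
    """Save one image's feature array to a compressed .npz file.

    The key "features" is a placeholder, not necessarily the key
    that coco_dataset.py reads.
    """
    np.savez_compressed(path, features=np.asarray(features, dtype=np.float32))


def load_features(path):
    """Load the feature array written by save_features."""
    with np.load(path) as data:
        return data["features"]


if __name__ == "__main__":
    # Placeholder array standing in for backbone output;
    # the shape (144, 1536) is illustrative only.
    fake = np.random.rand(144, 1536)
    with tempfile.TemporaryDirectory() as tmp:
        out = os.path.join(tmp, "example_image_id.npz")
        save_features(out, fake)
        print(load_features(out).shape)
```

Storing features as float32 roughly halves the file size compared with NumPy's default float64.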

Note: this repository is mainly based on JDAI-CV/image-captioning, and we directly reused their config.yml files, so the configuration contains many parameters that our model does not use. (Cleanup is pending.)

Download the pre-trained backbone model (Swin-Transformer) from Google Drive or Baidu Netdisk (extraction code: hryh) and save it in the root directory.

Before training, you may need to check and modify the parameters in the config.yml and train.sh files. Then run the script:

# for XE training

bash experiments_PureT/PureT_XE/train.sh

Copy the model pre-trained under XE loss into the experiments_PureT/PureT_SCST/snapshot/ folder and modify the config.yml and train.sh files accordingly. Then run the script:

# for SCST training

bash experiments_PureT/PureT_SCST/train.sh

You can download the pre-trained model from Google Drive or Baidu Netdisk (extraction code: hryh).

CUDA_VISIBLE_DEVICES=0 python main_test.py --folder experiments_PureT/PureT_SCST/ --resume 27

| BLEU-1 | BLEU-2 | BLEU-3 | BLEU-4 | METEOR | ROUGE-L | CIDEr | SPICE |
|---|---|---|---|---|---|---|---|
| 82.1 | 67.3 | 52.0 | 40.9 | 30.2 | 60.1 | 138.2 | 24.2 |

If you find this repo useful, please consider citing (no obligation at all):

@inproceedings{wangyiyu2022PureT,

title={End-to-End Transformer Based Model for Image Captioning},

author={Yiyu Wang and Jungang Xu and Yingfei Sun},

booktitle={AAAI},

year={2022}

}

This repository is based on JDAI-CV/image-captioning, ruotianluo/self-critical.pytorch and microsoft/Swin-Transformer.