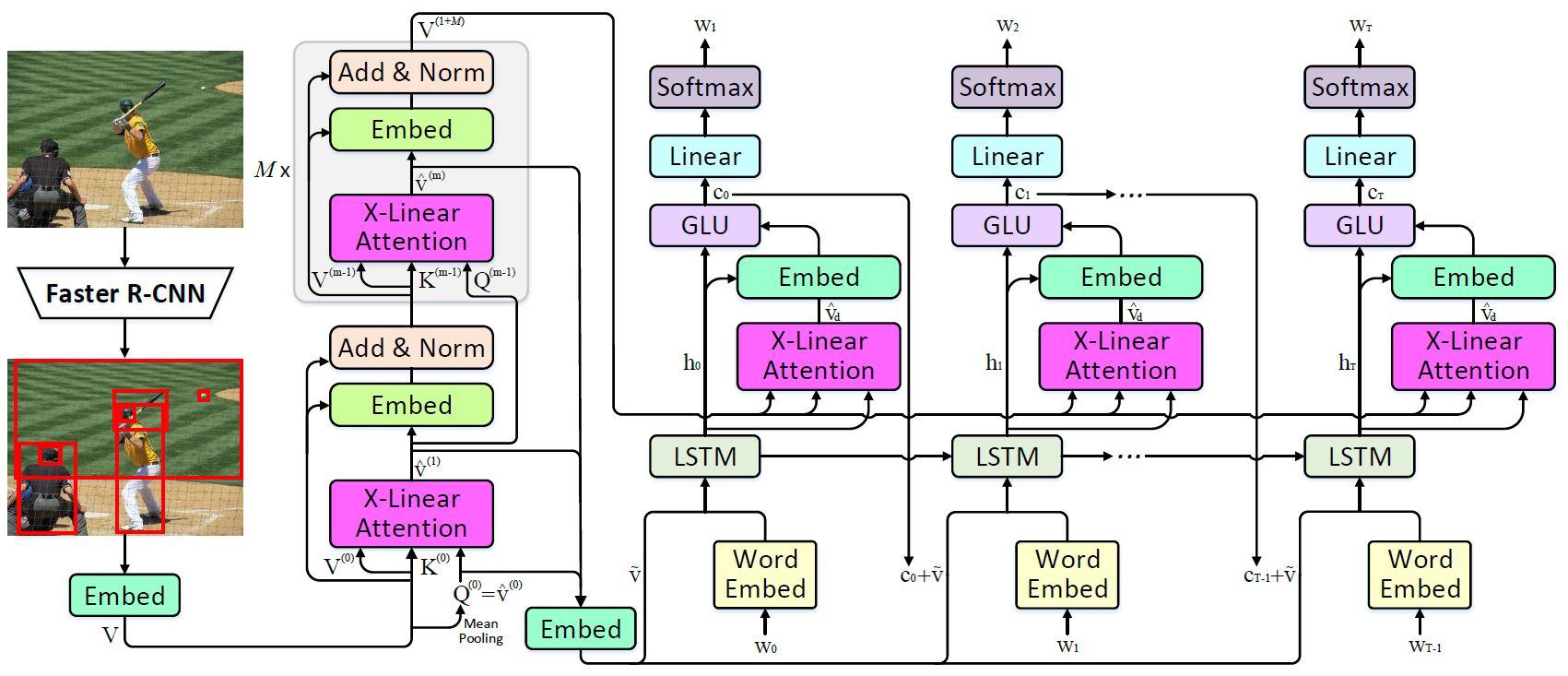

This repository contains the code for X-Linear Attention Networks for Image Captioning (CVPR 2020). The original paper can be found here.

Please cite with the following BibTeX:

    @inproceedings{xlinear2020cvpr,
      title={X-Linear Attention Networks for Image Captioning},
      author={Pan, Yingwei and Yao, Ting and Li, Yehao and Mei, Tao},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      year={2020}
    }

Requirements:

- Python 3
- CUDA 10
- numpy
- tqdm
- easydict
- PyTorch (> 1.0)
- torchvision
- coco-caption
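Before preparing data, it can help to confirm that the Python packages above are importable. The sketch below is a hypothetical helper, not part of the repository; it only checks that each module can be found and that the interpreter is Python 3.

```python
import importlib.util
import sys

# Import names of the Python packages listed above. coco-caption is not
# checked here: it is located through __C.INFERENCE.COCO_PATH in lib/config.py.
REQUIRED_MODULES = ("numpy", "tqdm", "easydict", "torch", "torchvision")


def missing_modules(names):
    """Return the module names that cannot be found by the import system."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def check_environment(names=REQUIRED_MODULES):
    """Report problems; return True only on Python 3 with all modules present."""
    ok = sys.version_info[0] == 3
    if not ok:
        print("Python 3 is required, found", sys.version.split()[0])
    missing = missing_modules(names)
    for name in missing:
        print("missing module:", name)
    return ok and not missing
```

Calling `check_environment()` prints each missing package. It does not verify the CUDA 10 or PyTorch > 1.0 version requirements; those still need a manual check, for example via `torch.__version__` and `torch.version.cuda`.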

Data preparation:

- Download the bottom-up features and convert them to npz files:

      python2 tools/create_feats.py --infeats bottom_up_tsv --outfolder ./mscoco/feature/up_down_10_100

- Download the annotations into the `mscoco` folder. For more details on data preparation, refer to self-critical.pytorch.

- Download coco-caption and set up the path `__C.INFERENCE.COCO_PATH` in `lib/config.py`.

- The pretrained models and results can be downloaded here.

- The pretrained SENet-154 model can be downloaded here.
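The feature conversion itself is done by `tools/create_feats.py`. The Python 3 sketch below only illustrates the idea and is not that script: it assumes the TSV layout of the bottom-up-attention release (tab-separated columns `image_id, image_w, image_h, num_boxes, boxes, features`, with `boxes` and `features` stored as base64-encoded float32 arrays) and writes one `.npz` file per image under a hypothetical `feat` key. The real script's fields and output keys may differ.

```python
import base64
import csv
import os

import numpy as np

# Assumed column order of the bottom-up-attention TSV files.
FIELDNAMES = ["image_id", "image_w", "image_h", "num_boxes", "boxes", "features"]

# Base64-encoded feature columns are far longer than csv's default field limit.
csv.field_size_limit(2**31 - 1)


def decode_array(b64_text, num_boxes):
    """Decode a base64 string of float32 values into a (num_boxes, -1) array."""
    buf = base64.b64decode(b64_text)
    return np.frombuffer(buf, dtype=np.float32).reshape(num_boxes, -1)


def tsv_to_npz(tsv_path, out_folder):
    """Write one compressed .npz file per image row; return the number written."""
    os.makedirs(out_folder, exist_ok=True)
    count = 0
    with open(tsv_path, newline="") as f:
        reader = csv.DictReader(f, delimiter="\t", fieldnames=FIELDNAMES)
        for row in reader:
            num_boxes = int(row["num_boxes"])
            feats = decode_array(row["features"], num_boxes)
            out_path = os.path.join(out_folder, row["image_id"] + ".npz")
            # "feat" is a hypothetical key name chosen for this sketch.
            np.savez_compressed(out_path, feat=feats)
            count += 1
    return count
```

For example, `tsv_to_npz("path/to/features.tsv", "./mscoco/feature/up_down_10_100")` would fill the output folder used in the command above; the released features are split across several TSV files, so the call would be repeated per file.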

To train the X-LAN model with cross-entropy loss, run:

    bash experiments/xlan/train.sh

To fine-tune X-LAN with self-critical training, copy the pretrained X-LAN model into `experiments/xlan_rl/snapshot` and run:

    bash experiments/xlan_rl/train.sh
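The `xlan_rl` stage follows the self-critical approach of self-critical.pytorch: captions sampled from the model are scored with a caption metric (typically CIDEr-D), and the score of the greedy-decoded caption is used as the baseline. The NumPy sketch below shows only the resulting policy-gradient loss; sampling and reward computation are omitted, and the function name and array shapes are assumptions for illustration rather than this repository's code.

```python
import numpy as np


def scst_loss(sample_logprobs, mask, sample_rewards, greedy_rewards):
    """Self-critical policy-gradient loss (illustrative sketch).

    sample_logprobs: (batch, time) log-probabilities of the sampled tokens.
    mask:            (batch, time) 1.0 for real tokens, 0.0 for padding.
    sample_rewards:  (batch,) reward of each sampled caption.
    greedy_rewards:  (batch,) reward of the greedy caption, used as baseline.
    """
    sample_logprobs = np.asarray(sample_logprobs, dtype=np.float64)
    mask = np.asarray(mask, dtype=np.float64)
    advantage = np.asarray(sample_rewards, dtype=np.float64) - np.asarray(
        greedy_rewards, dtype=np.float64
    )
    # Broadcast each caption's advantage over all of its time steps.
    weighted = -sample_logprobs * advantage[:, None] * mask
    # Average over non-padding tokens, in the style of self-critical.pytorch.
    return weighted.sum() / mask.sum()
```

Minimizing this loss raises the probability of sampled captions that score above the greedy baseline and lowers it for those that score below, so no separate value network is needed.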

To train the X-Transformer model with cross-entropy loss, run:

    bash experiments/xtransformer/train.sh

To fine-tune X-Transformer with self-critical training, copy the pretrained X-Transformer model into `experiments/xtransformer_rl/snapshot` and run:

    bash experiments/xtransformer_rl/train.sh
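Both `_rl` stages start from a pretrained model placed in the experiment's `snapshot` folder. If you prefer to script that copy, a minimal sketch follows; the helper is hypothetical and keeps the checkpoint's original filename. It cannot know which filename or epoch the `train.sh` scripts expect to resume from, so check the script before relying on it.

```python
import os
import shutil


def copy_pretrained(checkpoint_path, rl_experiment_dir):
    """Copy a pretrained checkpoint into <rl_experiment_dir>/snapshot/.

    The file keeps its original name; returns the destination path.
    """
    if not os.path.isfile(checkpoint_path):
        raise FileNotFoundError(checkpoint_path)
    snapshot_dir = os.path.join(rl_experiment_dir, "snapshot")
    # Create the snapshot folder if the experiment has not been run yet.
    os.makedirs(snapshot_dir, exist_ok=True)
    dest = os.path.join(snapshot_dir, os.path.basename(checkpoint_path))
    # copy2 also preserves file metadata such as modification time.
    shutil.copy2(checkpoint_path, dest)
    return dest
```

For example, `copy_pretrained("path/to/pretrained_model.pth", "experiments/xtransformer_rl")`, where the checkpoint path is a placeholder for the file produced by the cross-entropy stage or downloaded above.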

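To evaluate a trained model, run the following, replacing `model_folder` with the experiment folder and `model_epoch` with the epoch of the snapshot to load:

    CUDA_VISIBLE_DEVICES=0 python3 main_test.py --folder experiments/model_folder --resume model_epoch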
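Evaluation depends on the coco-caption toolkit located through `__C.INFERENCE.COCO_PATH` in `lib/config.py`, so a wrong path only shows up when scoring starts. The hypothetical helper below catches that early. It assumes the path points at the root of a coco-caption checkout, which contains the `pycocotools` and `pycocoevalcap` packages, and that the root must be on `sys.path` before they are imported.

```python
import os
import sys

# Package directories expected at the root of a coco-caption checkout.
EXPECTED_PACKAGES = ("pycocotools", "pycocoevalcap")


def add_coco_caption_path(coco_path):
    """Validate a coco-caption root and prepend it to sys.path.

    Raises FileNotFoundError naming any expected package directory that is
    missing; returns the absolute root path on success.
    """
    root = os.path.abspath(os.path.expanduser(coco_path))
    missing = [p for p in EXPECTED_PACKAGES
               if not os.path.isdir(os.path.join(root, p))]
    if missing:
        raise FileNotFoundError(
            "coco-caption path %r lacks: %s" % (root, ", ".join(missing)))
    if root not in sys.path:
        sys.path.insert(0, root)
    return root
```

Assuming the config object is exposed as `cfg`, a call such as `add_coco_caption_path(cfg.INFERENCE.COCO_PATH)` followed by `from pycocoevalcap.eval import COCOEvalCap` would fail fast on a mis-set path instead of after decoding the test set.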

Thanks to the contributors of self-critical.pytorch and to the awesome PyTorch team.