- Put more details in the README.

- Add support for FlashAttn.

- Add support for efficient processing of batched input.

- Add an example of HEPT with minimal code.

- 2024.06: HEPT has been accepted to ICML 2024 and is selected as an oral presentation (144/9473, 1.5% acceptance rate)!

- 2024.04: HEPT now supports efficient processing of batched input by this commit. This is implemented via integrating batch indices in the computation of AND hash codes, which is more efficient than naive padding, especially for batches with imbalanced point cloud sizes. Note:
  - Only the code in `./example` is updated to support batched input, and the original implementation in `./src` is not updated.
  - The current implementation for batched input is not yet fully tested. Please feel free to open an issue if you encounter any problems.

- 2024.04: An example of HEPT with minimal code is added in `./example` by this commit. It's a good starting point for users who want to use HEPT in their own projects. There are minor differences between the example and the original implementation in `./src/models/attention/hept.py`, but they should not affect the performance of the model.
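To make the batched-input note above more concrete, here is a minimal sketch of one way batch indices could be folded into AND hash codes so that clouds of different sizes can be sorted together without padding. It is written in plain Python for readability and is not the code from `./example`. The function names (`e2lsh_codes`, `batched_order`), the bucket width, and the toy points are all assumptions made for illustration.

```python
import math
import random

def e2lsh_codes(points, n_and, bucket_width, rng):
    """Return one tuple of n_and E2LSH values per point: floor((a.x + b) / r)."""
    dim = len(points[0])
    # One Gaussian projection vector and one uniform offset per AND hash.
    projections = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_and)]
    offsets = [rng.uniform(0.0, bucket_width) for _ in range(n_and)]
    codes = []
    for x in points:
        code = tuple(
            math.floor((sum(a_i * x_i for a_i, x_i in zip(a, x)) + b) / bucket_width)
            for a, b in zip(projections, offsets)
        )
        codes.append(code)
    return codes

def batched_order(points, batch_index, n_and=2, bucket_width=1.0, seed=0):
    """Sort all points of a batch at once, keyed by (batch index, AND code)."""
    rng = random.Random(seed)
    codes = e2lsh_codes(points, n_and, bucket_width, rng)
    # Prefixing the batch index keeps each cloud contiguous after sorting,
    # so no padding to a common length is needed (illustrative assumption).
    keys = [(b,) + c for b, c in zip(batch_index, codes)]
    return sorted(range(len(points)), key=keys.__getitem__)

# Two point clouds of different sizes (3 and 5 points), flattened into one list.
points = [(0.1, 0.2), (0.15, 0.22), (3.0, 3.1),
          (0.1, 0.2), (5.0, 5.0), (5.1, 4.9), (0.12, 0.21), (9.0, 1.0)]
batch_index = [0, 0, 0, 1, 1, 1, 1, 1]
order = batched_order(points, batch_index)
sorted_batches = [batch_index[i] for i in order]
```

After sorting, points from the same cloud stay adjacent and, within a cloud, points that share an AND code are grouped together. This is the property a block-wise attention step would rely on.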

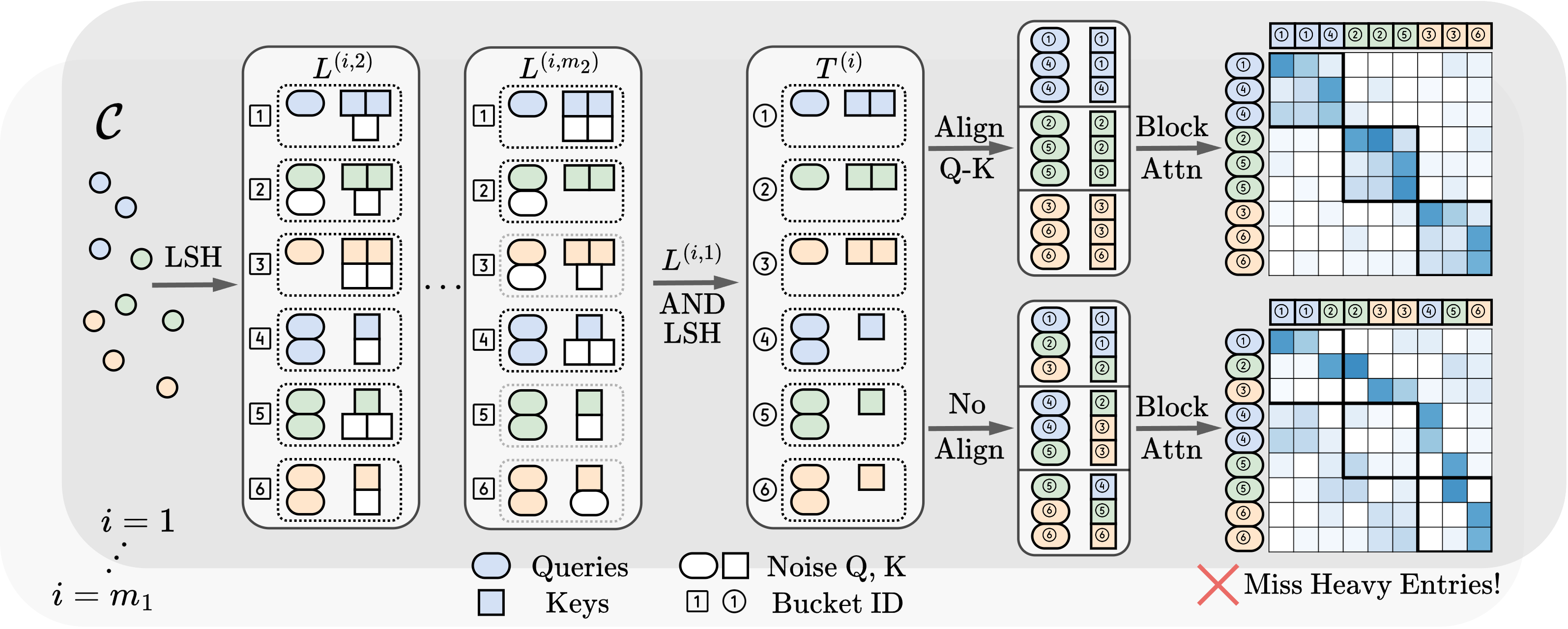

This study introduces a novel transformer model optimized for large-scale point cloud processing in scientific domains such as high-energy physics (HEP) and astrophysics. Addressing the limitations of graph neural networks and standard transformers, our model integrates local inductive bias and achieves near-linear complexity with hardware-friendly regular operations. One contribution of this work is the quantitative analysis of the error-complexity tradeoff of various sparsification techniques for building efficient transformers. Our findings highlight the superiority of using locality-sensitive hashing (LSH), especially OR & AND-construction LSH, in kernel approximation for large-scale point cloud data with local inductive bias. Based on this finding, we propose LSH-based Efficient Point Transformer (HEPT), which combines E2LSH with OR & AND constructions and is built upon regular computations. HEPT demonstrates remarkable performance in two critical yet time-consuming HEP tasks, significantly outperforming existing GNNs and transformers in accuracy and computational speed, marking a significant advancement in geometric deep learning and large-scale scientific data processing.

Figure 1. Pipeline of HEPT.
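For intuition on why combining OR and AND constructions is useful, the standard LSH amplification argument can be written as follows. The symbols are notation chosen for this sketch and are not taken from the paper: $p$ is the probability that a single hash function maps a given pair of points to the same bucket, $k$ is the number of hash functions combined by AND, and $L$ is the number of hash tables combined by OR. Hash functions are assumed to be drawn independently.

```latex
P_{\text{AND}} = p^{k},
\qquad
P_{\text{OR \& AND}} = 1 - \left(1 - p^{k}\right)^{L}
```

Raising $k$ suppresses collisions between distant points, while raising $L$ recovers collisions between nearby points that a single table would miss.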

All the datasets can be downloaded and processed automatically by running the scripts in ./src/datasets, i.e.,

cd ./src/datasets

python pileup.py

python tracking.py -d tracking-6k

python tracking.py -d tracking-60k

We use torch 2.3.1 and pyg 2.5.3 with python 3.10.14 and cuda 12.1. Use the following commands to install the required packages:

conda env create -f environment.yml

pip install torch_geometric==2.5.3

pip install torch_scatter==2.1.2 torch_cluster==1.6.3 -f https://data.pyg.org/whl/torch-2.3.0+cu121.html

To run the code, you can use the following command:

python tracking_trainer.py -m hept

Or

python pileup_trainer.py -m hept

Configurations are loaded from the ./configs/ directory.

There are three key hyperparameters in HEPT:

- `block_size`: block size for attention computation
- `n_hashes`: the number of hash tables, i.e., OR LSH
- `num_regions`: number of regions HEPT will randomly divide the input space into (Sec. 4.3 in the paper)

We suggest first determining block_size and n_hashes according to the computational budget; in general, n_hashes should be greater than 1. num_regions should then be tuned according to the local inductive bias of the dataset.
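When budgeting for `block_size` and `n_hashes`, a rough cost estimate may help. The sketch below counts query-key pairs under the assumption that each hash table sorts the points, splits them into blocks of `block_size`, and computes attention only within each block. The helper names and the example values (including the cloud size of 60,000) are hypothetical and not recommendations from the authors.

```python
import math

def attention_pairs(n_points, block_size, n_hashes):
    """Count query-key pairs scored under block-wise attention.

    Assumes each of the n_hashes tables sorts the points and splits them
    into blocks of block_size (the last block padded), with attention
    computed only inside each block.
    """
    n_blocks = math.ceil(n_points / block_size)
    return n_hashes * n_blocks * block_size ** 2

def dense_pairs(n_points):
    """Count query-key pairs scored under full (dense) attention."""
    return n_points ** 2

n_points = 60_000  # hypothetical cloud size, for illustration only
for block_size, n_hashes in [(50, 2), (100, 3), (200, 4)]:
    sparse = attention_pairs(n_points, block_size, n_hashes)
    ratio = sparse / dense_pairs(n_points)
    print(f"block_size={block_size:>3} n_hashes={n_hashes} "
          f"pairs={sparse:,} ({ratio:.2%} of dense)")
```

Under this assumption the cost grows linearly in the number of points for fixed `block_size` and `n_hashes`, so both can be chosen against a compute budget before tuning `num_regions`.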

@article{miao2024locality,

title = {Locality-Sensitive Hashing-Based Efficient Point Transformer with Applications in High-Energy Physics},

author = {Miao, Siqi and Lu, Zhiyuan and Liu, Mia and Duarte, Javier and Li, Pan},

journal = {International Conference on Machine Learning},

year = {2024}

}