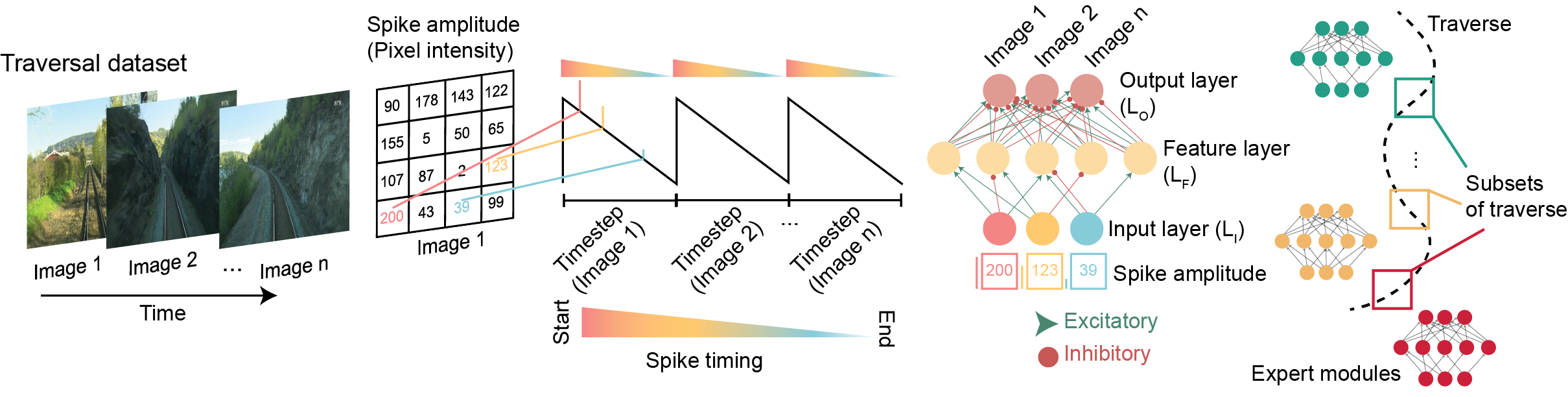

This repository contains code for VPRTempo, a spiking neural network that uses temporal encoding to perform visual place recognition tasks. The network is based on BLiTNet and adapted to the VPRSNN framework.
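To make "temporal encoding" concrete, the sketch below shows a generic latency code in which brighter pixels emit their spike earlier than darker ones. It is only an illustration under our own assumptions (intensities normalized to [0, 1] and a hypothetical time window `t_max`). It is not necessarily the encoding that VPRTempo itself uses.

```python
from typing import List


def latency_encode(intensities: List[float], t_max: float = 1.0) -> List[float]:
    """Map pixel intensities in [0, 1] to spike times in [0, t_max].

    Generic latency code (illustrative assumption, not VPRTempo's scheme):
    intensity 1.0 spikes at t = 0 and intensity 0.0 spikes at t = t_max.
    """
    times = []
    for x in intensities:
        if not 0.0 <= x <= 1.0:
            raise ValueError(f"intensity {x} is outside [0, 1]")
        times.append((1.0 - x) * t_max)
    return times


# Three pixels of decreasing brightness spike progressively later.
spike_times = latency_encode([1.0, 0.5, 0.0], t_max=10.0)
print(spike_times)  # [0.0, 5.0, 10.0]
```

Because the information is carried by *when* each input spikes rather than by how many spikes it emits, a single spike per input can be enough to represent an image.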

This repository is licensed under the MIT License.

If you use our code, please cite the following paper:

    @misc{hines2023vprtempo,
        title={VPRTempo: A Fast Temporally Encoded Spiking Neural Network for Visual Place Recognition},
        author={Adam D. Hines and Peter G. Stratton and Michael Milford and Tobias Fischer},
        year={2023},
        eprint={2309.10225},
        archivePrefix={arXiv},
        primaryClass={cs.RO}
    }

We recommend installing VPRTempo's dependencies with Mambaforge; however, conda may also be used. VPRTempo uses PyTorch and can use CUDA for GPU acceleration. Follow the installation instructions for your operating system and hardware.

For CUDA GPU acceleration, use conda/mamba to create a new environment and install Python, the CUDA tools, and the dependencies:

    conda create -n vprtempo -c pytorch -c nvidia python torchvision torchaudio pytorch-cuda=11.7 cudatoolkit opencv matplotlib

Note: install the version of PyTorch-CUDA that is compatible with your graphics card; see the "Start Locally" page on the PyTorch website for more details.
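Once the environment exists, a quick check can confirm that the packages import and whether PyTorch can see a GPU. The helper below is a hypothetical sketch (`check_environment` is not part of VPRTempo); it relies only on the standard library's `importlib.util.find_spec` and PyTorch's `torch.cuda.is_available()`. Note that OpenCV is imported as `cv2`.

```python
import importlib.util

# OpenCV is imported as "cv2"; the other names match their conda packages.
DEFAULT_MODULES = ("torch", "torchvision", "torchaudio", "cv2", "matplotlib")


def check_environment(modules=DEFAULT_MODULES) -> dict:
    """Report missing modules and whether PyTorch can use a CUDA device."""
    report = {
        "missing": [m for m in modules if importlib.util.find_spec(m) is None],
        "cuda_available": False,
        "torch_cuda_build": None,
    }
    if importlib.util.find_spec("torch") is not None:
        import torch  # imported lazily so the check still runs without PyTorch

        report["cuda_available"] = torch.cuda.is_available()
        # torch.version.cuda is None for CPU-only builds
        report["torch_cuda_build"] = torch.version.cuda
    return report


if __name__ == "__main__":
    print(check_environment())
```

Run it inside the activated environment. A CPU-only or macOS install is expected to report `cuda_available` as `False`.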

To install for CPU only, install Python and the dependencies:

    conda create -n vprtempo python pytorch torchvision torchaudio cpuonly opencv matplotlib -c pytorch

CUDA acceleration is not available on macOS, so the network runs on the CPU only and you just need to install Python and the dependencies:

    conda create -n vprtempo -c conda-forge python opencv matplotlib -c pytorch pytorch::pytorch torchvision torchaudio

Activate the environment and clone the GitHub repository:

    conda activate vprtempo
    git clone https://github.com/QVPR/VPRTempo.git
    cd VPRTempo

VPRTempo was designed to make it simple to train and test on a variety of datasets. The information below describes how to run a test with the Nordland traversal dataset and how to organize custom datasets.

VPRTempo was developed and tested using the Nordland traversal dataset. The software works with either the full-resolution or the down-sampled datasets; however, our paper uses the full-resolution datasets.

To simplify first use, the defaults in VPRTempo.py are set to train and test on a small subset of the Nordland data. We recommend downloading Nordland and using the ./src/nordland.py script to unzip the images and organize them into the correct folder and naming structure.

In general, data should be organized as follows to train the network on multiple traversals of the same location:

    --dataset
      |--training
      |  |--traversal_1
      |  |--traversal_2
      |
      |--testing
         |--test_traversal
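For a custom dataset, the empty folder structure above can be created with a few lines of Python. This is a hypothetical helper (`make_dataset_skeleton` is our own name, not part of VPRTempo) that only creates folders; copying in the images, and naming them consistently across traversals, is still up to you.

```python
from pathlib import Path
from typing import Iterable


def make_dataset_skeleton(root: str, train_traversals: Iterable[str],
                          test_traversal: str) -> Path:
    """Create <root>/training/<traversal>/ for each training traversal and
    <root>/testing/<test_traversal>/. Existing folders are left untouched."""
    root_path = Path(root)
    for name in train_traversals:
        (root_path / "training" / name).mkdir(parents=True, exist_ok=True)
    (root_path / "testing" / test_traversal).mkdir(parents=True, exist_ok=True)
    return root_path
```

For the layout above, `make_dataset_skeleton("<path_to_data>", ["traversal_1", "traversal_2"], "test_traversal")` would create the training and testing folders under `<path_to_data>`. Using `exist_ok=True` makes the call safe to repeat when adding another traversal later.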

Specify the data paths by changing self.trainingPath and self.testPath in VPRTempo.py. You can choose which traversals to train and test on by changing self.locations and self.test_location. For the layout above, these would be:

    self.trainingPath = '<path_to_data>/training/'
    self.testPath = '<path_to_data>/testing/'
    self.locations = ["traversal_1", "traversal_2"]
    self.test_location = "test_traversal"

Images of the same location must have the same file name in every traversal (training and testing), because the images are imported based on a .txt file.
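Because images are matched across traversals by file name, it can help to compare the names before training. The sketch below is a hypothetical helper (not part of VPRTempo) that takes the same four values set in VPRTempo.py and reports, for each traversal, the image names that appear elsewhere but are missing from it. The accepted image extensions are an assumption.

```python
from pathlib import Path
from typing import Dict, List, Set

# Assumption: adjust to match the image format of your dataset.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def image_names(folder: Path) -> Set[str]:
    """File names (not full paths) of the images directly inside one traversal folder."""
    return {p.name for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES}


def mismatched_names(training_path: str, locations: List[str],
                     test_path: str, test_location: str) -> Dict[str, Set[str]]:
    """For each traversal, list image names present in some other traversal
    but missing from this one. An empty dict means all names match."""
    folders = {f"training/{loc}": Path(training_path) / loc for loc in locations}
    folders[f"testing/{test_location}"] = Path(test_path) / test_location
    names = {label: image_names(folder) for label, folder in folders.items()}
    all_names = set().union(*names.values())
    return {label: all_names - found
            for label, found in names.items() if all_names - found}
```

Whether a reported difference actually matters may depend on how many images VPRTempo.py is configured to use, so treat a non-empty result as a prompt to check the data rather than as a definite error.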

Both training and testing are handled by the VPRTempo.py script. A fresh install does not contain any pre-trained networks, so a network must be trained before it can be used. Before running the script, make sure that:

- Training and testing data are organized as described above (for either the Nordland or a custom dataset)
- The vprtempo conda environment has been activated

Once both of these are set up, run VPRTempo.py to train and test your first network with the default settings.
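If you launch runs from your own scripts, the environment condition can be checked first. The sketch below is hypothetical (neither function is part of VPRTempo) and assumes the environment was named vprtempo as in the installation commands; it reads the `CONDA_DEFAULT_ENV` variable that conda sets when an environment is activated.

```python
import os
from typing import Optional


def active_conda_env() -> Optional[str]:
    """Name of the currently activated conda environment, or None if none is active.

    conda sets CONDA_DEFAULT_ENV when an environment is activated.
    """
    return os.environ.get("CONDA_DEFAULT_ENV")


def require_env(expected: str = "vprtempo") -> None:
    """Raise a readable error if the expected environment is not active."""
    current = active_conda_env()
    if current != expected:
        raise RuntimeError(
            f"Expected conda environment '{expected}' but found '{current}'. "
            f"Run 'conda activate {expected}' first."
        )
```

Failing early with a clear message is usually easier to diagnose than an ImportError partway through training.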

If you encounter problems whilst running the code or if you have a suggestion for a feature or improvement, please report it as an issue.