Off-policy reinforcement learning approach for the Lux AI competition on Kaggle

For details of the competition, please check this page -> Lux AI

I tried off-policy reinforcement learning with V-trace/UPGO. Trained from random weights, my agent scores ~1200, but I think more training or other simple modifications would improve the score, because only a day or half a day was left when I confirmed clear convergence of my RL algorithm.

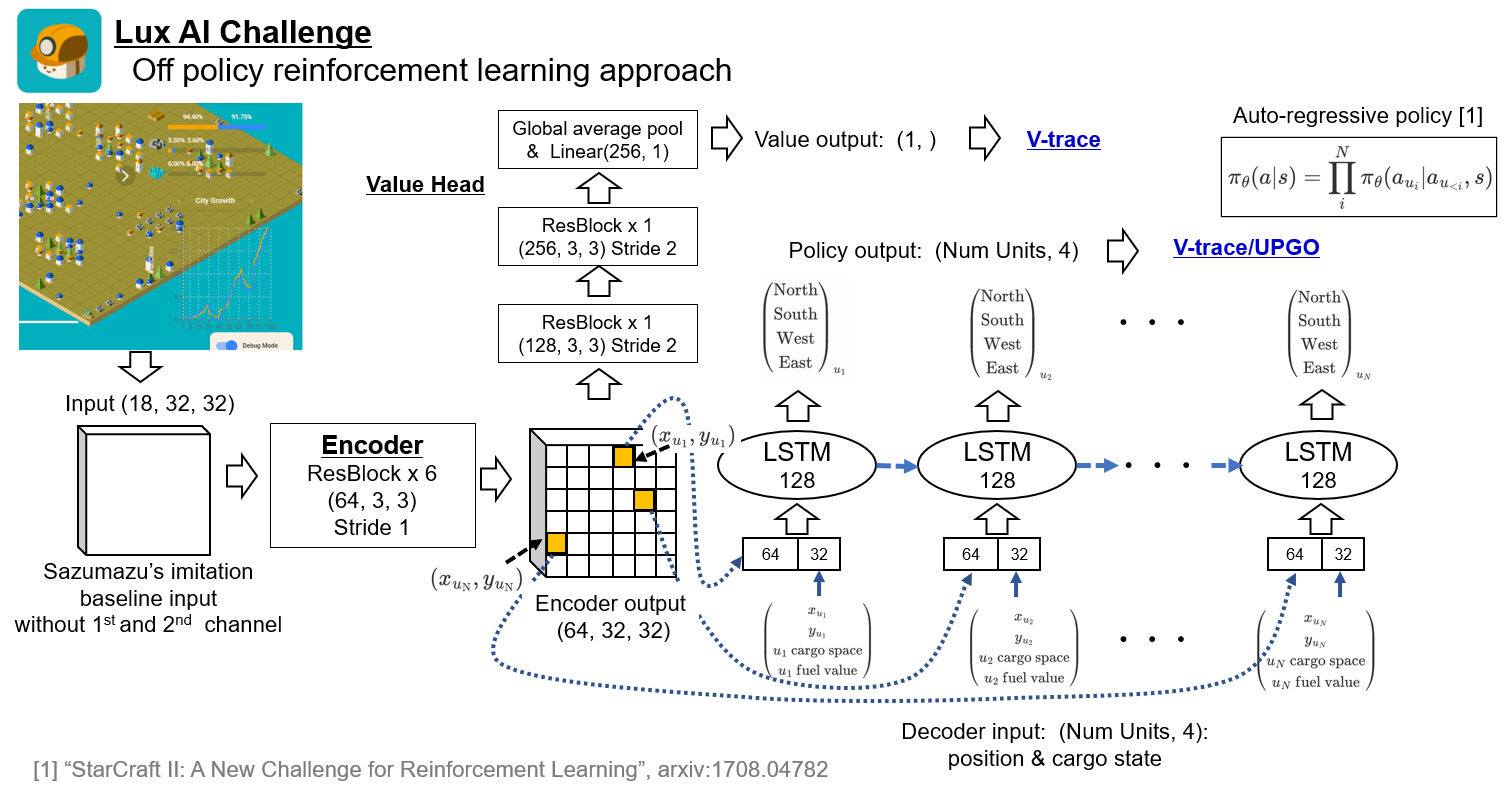

- model: based on the image captioning task, with an encoder and LSTM decoder architecture.

- multi-agent action space: uses an auto-regressive policy (arxiv)

- Extending HandyRL: extended HandyRL from a single-agent policy to a multi-agent policy. Specifically, all tensors are reshaped from (episodes, steps, players, actions) to (episodes, steps, players, units, actions), and the loss is modified for the auto-regressive style.

- Decoder input:

  - unique unit order: start from the unit with the minimum y, then repeatedly pick the nearest neighbor unit.

  - (x, y, cargo_space, fuel) for each unit. These 4 dimensions are projected to 32 dimensions through a linear layer.

  - unlike an image captioning model, we know the exact position of each unit, so I directly use the encoder feature at the unit's position instead of the pooled feature.

- UPGO converges much faster than V-trace.

- Reward: uses an intermediate reward borrowed from glmcdona's implementation, with the unit part scaled by 1/2 or 1/4.

- Kaggle notebook: here, you can check my agent at epoch 1035 (500,000 episodes).
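The unit ordering described under "Decoder input" can be sketched roughly as below. This is only an illustration, not the code from this repository: the function name `order_units`, the squared Euclidean distance, and the tie-breaking by (y, x) are all assumptions. Each unit is represented by the same 4 values (x, y, cargo_space, fuel) that feed the linear layer, which is not shown here.

```python
from typing import List, Tuple

Unit = Tuple[int, int, int, int]  # (x, y, cargo_space, fuel)


def order_units(units: List[Unit]) -> List[Unit]:
    """Greedy nearest-neighbor ordering, starting from the min-y unit."""
    if not units:
        return []
    remaining = list(units)
    # Start from the unit with the smallest y; ties broken by x (assumption).
    current = min(remaining, key=lambda u: (u[1], u[0]))
    remaining.remove(current)
    ordered = [current]
    while remaining:
        cx, cy = current[0], current[1]
        # Pick the closest remaining unit to the last ordered one.
        # Squared Euclidean distance and the (y, x) tie-break are assumptions.
        current = min(
            remaining,
            key=lambda u: ((u[0] - cx) ** 2 + (u[1] - cy) ** 2, u[1], u[0]),
        )
        remaining.remove(current)
        ordered.append(current)
    return ordered


units = [(5, 5, 0, 0), (0, 0, 10, 3), (1, 0, 0, 0), (0, 3, 0, 0)]
ordered = order_units(units)
```

With a fixed ordering like this, the same board state always yields the same decoder sequence, which is presumably the point of making the order unique.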
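To illustrate what the extra `units` axis means for the loss, here is a minimal sketch of the joint log-probability of one player's actions at one step under an auto-regressive policy. It is not taken from this repository: the function name `joint_log_prob`, the `unit_mask` argument for padded unit slots, and the plain-list representation are assumptions. The logits for each unit are assumed to be already conditioned on the actions of the preceding units, so the joint log-probability is simply the sum over units.

```python
import math
from typing import List


def joint_log_prob(
    unit_logits: List[List[float]],
    actions: List[int],
    unit_mask: List[bool],
) -> float:
    """Sum per-unit log-probabilities of the chosen actions over real units."""
    total = 0.0
    for logits, action, is_real in zip(unit_logits, actions, unit_mask):
        if not is_real:
            continue  # padded unit slot (assumption), contributes nothing
        # Numerically stable log-softmax over this unit's action logits.
        peak = max(logits)
        log_z = peak + math.log(sum(math.exp(x - peak) for x in logits))
        total += logits[action] - log_z
    return total


# Two real units with uniform logits plus one padded slot.
example = joint_log_prob(
    [[0.0, 0.0], [0.0, 0.0], [5.0, 1.0]],
    [0, 1, 0],
    [True, True, False],
)
```

In the batched setting this sum would run over the `units` axis of a (episodes, steps, players, units, actions) tensor.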

This is my first reinforcement learning project, so there might be some misunderstandings. Please feel free to ask me if you find something weird.

This code was written for a Kaggle competition and some parts are not so clean right now. I will clean them up little by little.

- Ubuntu 18.04

- Python with Anaconda/Mamba

- NVIDIA GPU x1

```bash
# clone project
PROJECT=kaggle_lux_ai
CONDA_NAME=lux_ai
git clone https://github.com/Fkaneko/$PROJECT

# install project
cd $PROJECT
conda create -n $CONDA_NAME python=3.7
conda activate $CONDA_NAME
yes | bash install.sh
```

Simply run the following:

```bash
# for reinforcement learning from random weights
python handyrl_main.py -t
```

Please check src/config/handyrl_config.yaml for the default training configuration.

Please also check the original HandyRL documentation.

You can check your agent in a Kaggle environment like this one.

After training you will find the checkpoint under ./models.

- evaluate every 100 epochs

- play 50 episodes against an imitation learning baseline model (score ~1350) without internal unit move resolution.

- 100, 300 and 800 epoch models are visualized here

| epoch | win rate | tile diff |
|---|---|---|
| 100 | 0.058 | -28.4 |
| 200 | 0.212 | -13.0 |
| 300 | 0.442 | -0.0 |
| 400 | 0.615 | 10.4 |
| 500 | 0.731 | 15.4 |
| 600 | 0.673 | 9.7 |
| 700 | 0.712 | 14.1 |
| 800 | 0.750 | 15.1 |
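For readers who want to reproduce numbers like the ones in the table, here is a minimal sketch of how the two columns could be aggregated from a batch of evaluation episodes. It is not the evaluation code of this repository: the function name `summarize_matches` is hypothetical, "tile diff" is assumed to mean the average of (own final city tiles minus opponent final city tiles), and ties are assumed to count as non-wins.

```python
from typing import List, Tuple


def summarize_matches(results: List[Tuple[int, int]]) -> Tuple[float, float]:
    """Return (win rate, mean tile difference) from (own_tiles, opponent_tiles) pairs."""
    if not results:
        raise ValueError("no episodes to summarize")
    # A win is assumed to mean strictly more city tiles than the opponent.
    wins = sum(1 for own, opp in results if own > opp)
    tile_diff = sum(own - opp for own, opp in results) / len(results)
    return wins / len(results), tile_diff


# Four made-up episodes, for illustration only.
win_rate, tile_diff = summarize_matches([(10, 5), (3, 8), (7, 7), (12, 2)])
```

The example input is invented for illustration and is unrelated to the results in the table.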

There are forked repositories inside this repository. Please also check NOTICE.

- LuxPythonEnvGym: MIT, https://github.com/glmcdona/LuxPythonEnvGym

- HandyRL: MIT, https://github.com/DeNA/HandyRL

MIT