This repository documents a deep neural network (DNN) implementation based on the Multi-Layer Perceptron (MLP) architecture, which can be used to learn any 2-input boolean function, such as XOR.

I started this project because I wanted to improve my understanding of machine learning and to gain some experience in designing DNN accelerators in VHDL. I selected an MLP architecture because it is simple but efficient and forms the basis of neural networks. Initially, I created an MLP neural network using the numpy library in Python to simulate and train the network as a proof of concept. The weights generated by a fully trained network can be used as a starting point for our accelerator.

In my research on DNNs, I've come across two predominant methodologies: (1) using pre-trained weights for inference, and (2) combining inference with backpropagation for weight adjustment. In the first scenario, the accelerator relies on pre-trained weights to execute inference tasks. In the second scenario, it performs inference and then backpropagation to correct errors within the network. This project focuses on implementing scenario 2, which occurs as a 2-stage process: a "forward pass" followed by a "backward pass".

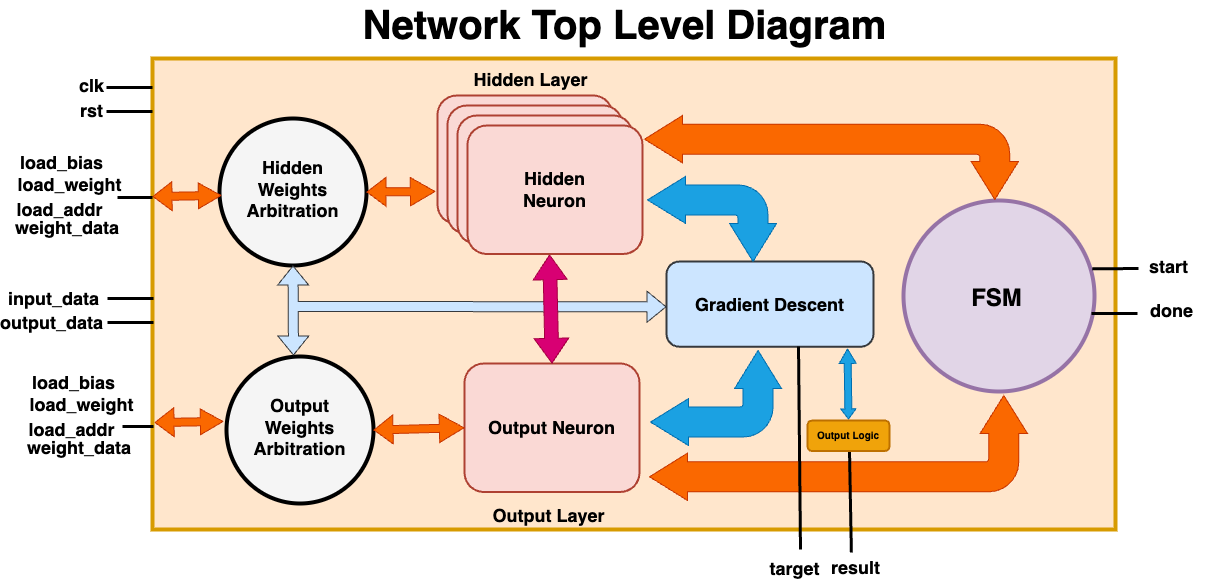

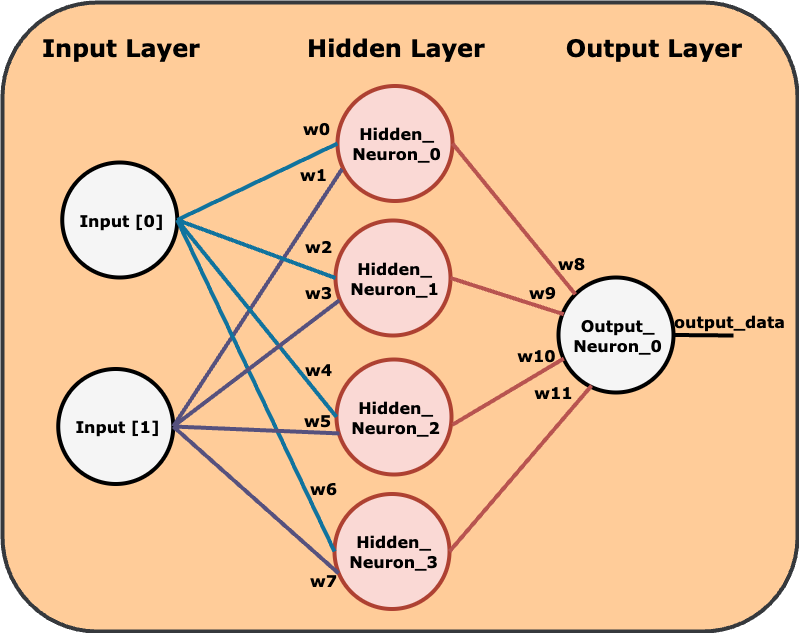

The best way to understand the purpose of this project is to visualize the neural network through an abstracted diagram.

The diagram showcases the network's 3-layer architecture, starting with the input layer, which is where our data enters the system. The hidden layer is where the network begins to process the data through neurons that are each linked to every input node by a weight. Finally, the output layer consists of a single neuron, which gives us the final result. Because this result is a real-valued number rather than a binary value, some additional logic (such as a threshold comparison) is needed to interpret it as a boolean.
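As a minimal sketch of that interpretation step, the snippet below maps a real-valued output to a logic level with a threshold comparison. The function name `to_boolean`, the 0.5 threshold, and the sample output values are all assumptions chosen for illustration; they are not measurements from this project.

```python
def to_boolean(output: float, threshold: float = 0.5) -> int:
    """Map a real-valued network output to a logic level (0 or 1).

    The 0.5 threshold is an assumed value, not one taken from the design.
    """
    return 1 if output >= threshold else 0


# Hypothetical raw outputs for the four XOR input pairs (illustrative only).
raw_outputs = {(0, 0): 0.03, (0, 1): 0.97, (1, 0): 0.91, (1, 1): 0.08}

decoded = {inputs: to_boolean(y) for inputs, y in raw_outputs.items()}
print(decoded)
```

In hardware, the same idea would reduce to a single comparator on the output neuron's result.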

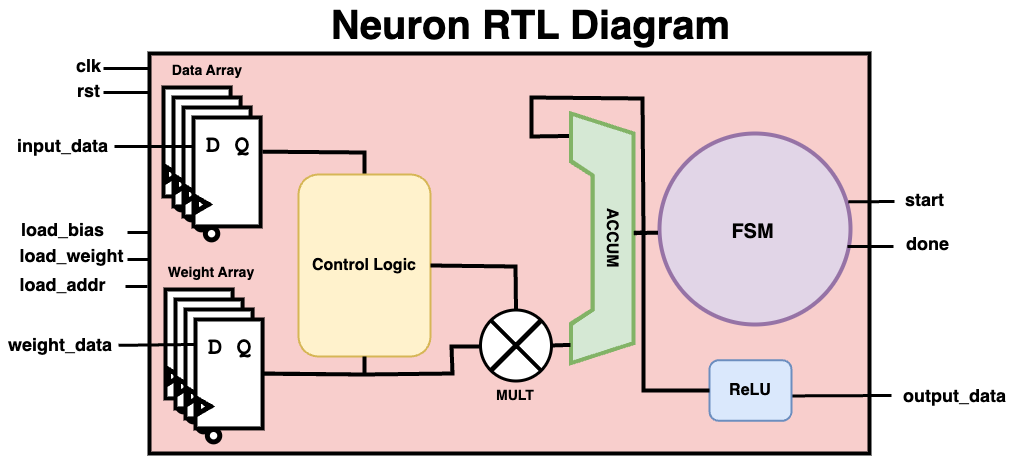

The diagram showcases the neuron architecture with components corresponding to:

- Data Array: Represents the input vector $x$.
- Weight Array: Stores the weights $W$ and bias $b$.
- Control Logic: Manages state transitions and data flow.
- FSM: Orchestrates the sequence of operations.
- ACCUM: Accumulates the weighted inputs into $z$.
- MULT: Multiplies inputs by their weights.
- ReLU: Activation function applied to $z$.

The operations performed by the neuron can be mathematically expressed as:

$$z = \sum_{n=0}^{\text{NUM\_IN}-1} w_n x_n + b$$

Where `NUM_IN` represents the number of inputs our neuron will process. In our design, there are two inputs per neuron for the hidden layer neurons and four inputs for our output layer neuron. A hidden neuron calculation can then be expressed like this:

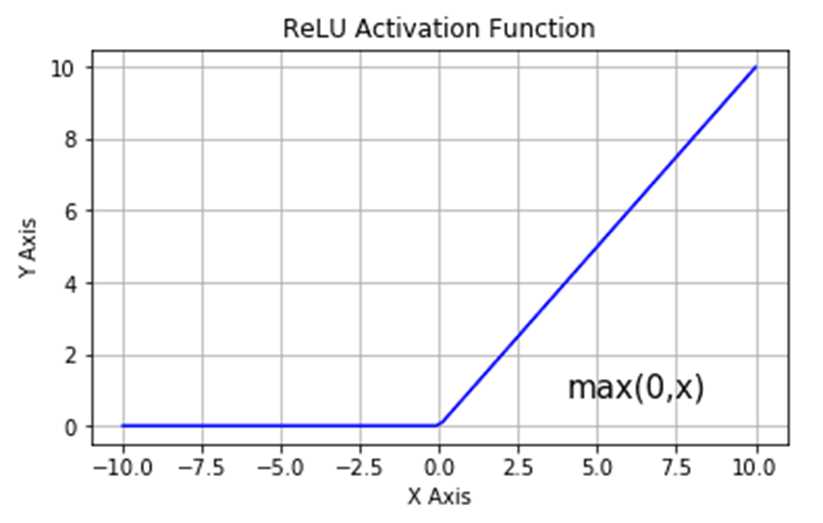

Here, the ACCUM output is fed through the ReLU activation function, whose result is denoted by $a^{(i)}$:

$$a^{(i)} = \max\left(0,\ z^{(i)}\right)$$

The Rectified Linear Unit (ReLU) function was selected because it is easy to implement in VHDL and provides non-linear characteristics to our circuit. Because ReLU outputs zero for every negative input, it also leads to model sparsity, which is marked by inactive neurons and can improve the model's predictive power.
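The neuron behavior described above can be sketched in a few lines of plain Python. This is only an illustration: the weights below are hand-picked so that a 2-input, 4-hidden-neuron, 1-output network reproduces XOR, and they are not the trained weights from this project. Seeding the accumulator with the bias and applying ReLU on the output neuron are also assumptions.

```python
from typing import List, Sequence


def relu(z: float) -> float:
    """ReLU: pass positive values through, clamp everything else to zero."""
    return z if z > 0.0 else 0.0


def neuron(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """One neuron: multiply (MULT), accumulate (ACCUM), then activate (ReLU)."""
    z = b  # assumption: the accumulator starts from the bias
    for x_n, w_n in zip(x, w):
        z += w_n * x_n
    return relu(z)


# Hand-picked illustrative parameters (NOT trained values from the project).
HIDDEN_W: List[List[float]] = [[1.0, 1.0], [1.0, 1.0], [0.0, 0.0], [0.0, 0.0]]
HIDDEN_B: List[float] = [0.0, -1.0, 0.0, 0.0]
OUTPUT_W: List[float] = [1.0, -2.0, 0.0, 0.0]
OUTPUT_B: float = 0.0


def forward(x: Sequence[float]) -> float:
    """Forward pass: four 2-input hidden neurons feed one 4-input output neuron."""
    hidden = [neuron(x, w, b) for w, b in zip(HIDDEN_W, HIDDEN_B)]
    return neuron(hidden, OUTPUT_W, OUTPUT_B)


for pair in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pair, forward(pair))
```

Two of the four hidden neurons have all-zero weights here, so they stay inactive for every input, which is a small example of the sparsity mentioned above.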

The algorithm that we use to correct the error in each layer of our neural network is known as gradient descent. After the forward pass stage, we quantify the error for each neuron in the output layer:

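for j in 0 to OUTPUT_NEURONS-1:

$$\delta^{\text{out}}_j = y_j - t_j$$

Where $y$ is the output vector and $t$ is the target vector, introduced during training. The hidden layer error can then be calculated as follows:

for i in 0 to HIDDEN_NEURONS-1:

$$\delta^{\text{hid}}_i = \left(W_2^{T}\,\delta^{\text{out}}\right)_i \, a'_i$$

Where $W_2^{T}$ denotes the transposed weight matrix for the hidden-to-output layer connections, and $a'_i$ is the derivative of the activation function evaluated at the weighted sum input of hidden neuron $i$. Now that we know the error, we proceed to update the weights associated with each connection, with $a$ denoting the vector of hidden layer activations and $x$ the input vector:

Hidden-Output Layer Weights and Biases:

$$W_2 \leftarrow W_2 - \eta\,\delta^{\text{out}} a^{T}, \qquad b_2 \leftarrow b_2 - \eta\,\delta^{\text{out}}$$

Input-Hidden Layer Weights and Biases:

$$W_1 \leftarrow W_1 - \eta\,\delta^{\text{hid}} x^{T}, \qquad b_1 \leftarrow b_1 - \eta\,\delta^{\text{hid}}$$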

Where η is the learning rate, which controls how much the weights are adjusted during each update.
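To make the backward pass concrete, here is a small sketch of one training step in plain Python. It rests on several assumptions: the output error is taken as `y - t`, weights are updated by subtracting the learning rate times the error times the incoming activation, and every neuron (including the output) uses ReLU. For a ReLU output, `y - t` matches the exact gradient of the squared error whenever the output neuron is active. The `Params` container, the function names, and the starting weights are hypothetical and do not come from the VHDL design or the numpy model.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Params:
    W1: List[List[float]]  # input-hidden weights, one row per hidden neuron
    b1: List[float]        # hidden biases
    W2: List[float]        # hidden-output weights, one per hidden neuron
    b2: float              # output bias


def relu(z: float) -> float:
    return z if z > 0.0 else 0.0


def relu_grad(z: float) -> float:
    """Derivative of ReLU with respect to its weighted-sum input."""
    return 1.0 if z > 0.0 else 0.0


def forward(p: Params, x: Sequence[float]) -> Tuple[List[float], List[float], float]:
    """Forward pass; returns hidden sums, hidden activations, and the output."""
    z1 = [b + sum(w_n * x_n for w_n, x_n in zip(row, x))
          for row, b in zip(p.W1, p.b1)]
    a1 = [relu(z) for z in z1]
    y = relu(p.b2 + sum(w * a for w, a in zip(p.W2, a1)))
    return z1, a1, y


def train_step(p: Params, x: Sequence[float], t: float, eta: float) -> None:
    """One forward pass followed by one backward pass, updating p in place."""
    z1, a1, y = forward(p, x)
    d_out = y - t  # output error (assumed sign convention)
    # Hidden errors use the weights from BEFORE this step's update.
    d_hid = [w * d_out * relu_grad(z) for w, z in zip(p.W2, z1)]
    for i in range(len(p.W2)):
        p.W2[i] -= eta * d_out * a1[i]
        for n in range(len(x)):
            p.W1[i][n] -= eta * d_hid[i] * x[n]
        p.b1[i] -= eta * d_hid[i]
    p.b2 -= eta * d_out


# Hypothetical starting weights, chosen only to keep the arithmetic easy to follow.
params = Params(
    W1=[[0.5, 0.5], [0.5, -0.5], [-0.5, 0.5], [0.25, 0.25]],
    b1=[0.0, 0.0, 0.0, 0.0],
    W2=[0.5, 0.5, 0.5, 0.5],
    b2=0.0,
)
x, t = (1.0, 0.0), 1.0  # one XOR sample: 1 XOR 0 = 1

err_before = abs(forward(params, x)[2] - t)
train_step(params, x, t, eta=0.1)
err_after = abs(forward(params, x)[2] - t)
print(err_before, err_after)
```

Note that the third hidden neuron is inactive for this sample, so its weights are left untouched by the update. Only the connections that contributed to the output are adjusted.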

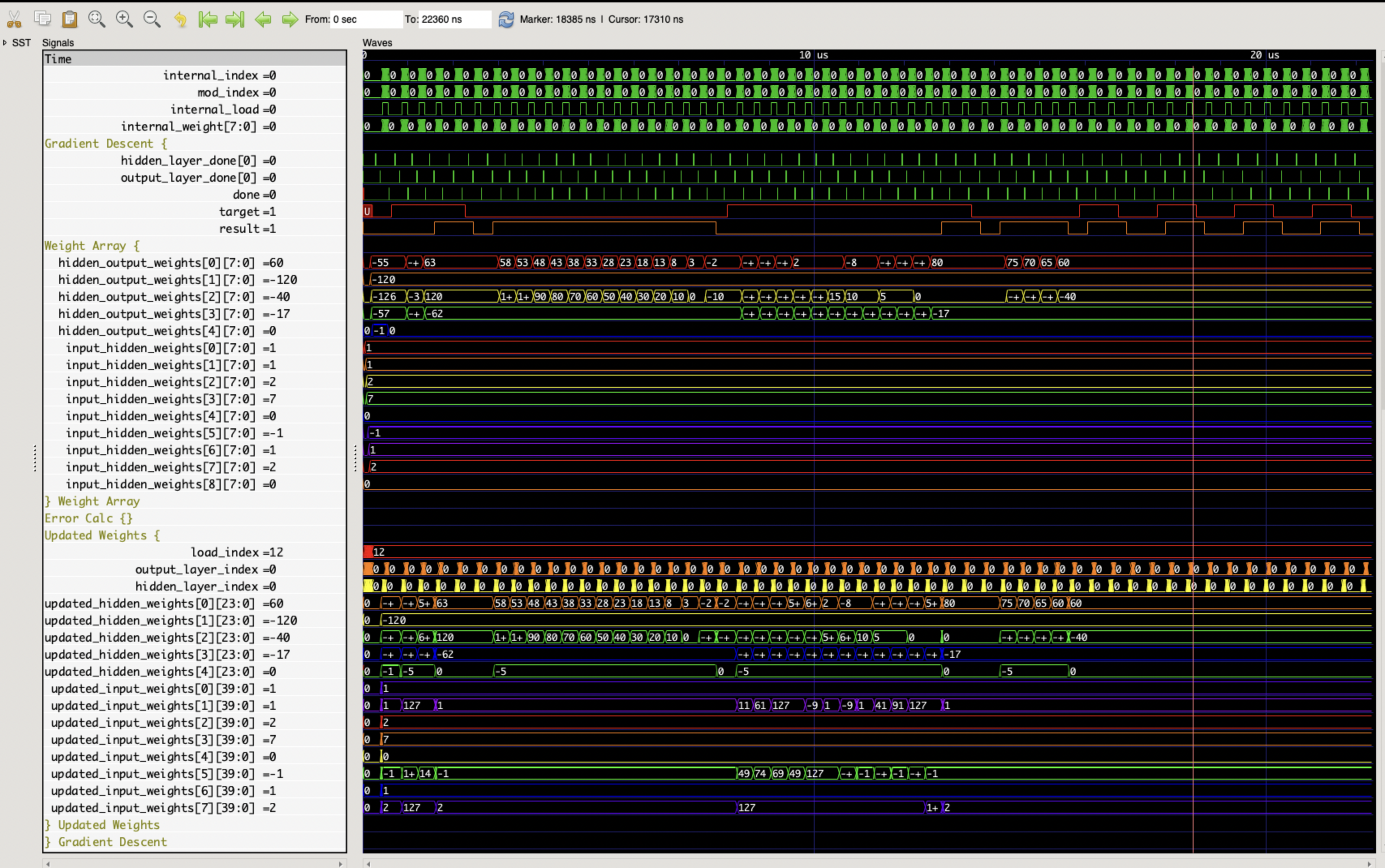

The waveforms show that after ~16 microseconds, the network is able to match its results to the expected target for an arbitrary test vector. While this result isn't useful as a metric on its own, it demonstrates the neural network's selective weight adjustment process. While the model was able to learn the XOR function, improvements in configurability and performance are needed for further applications.