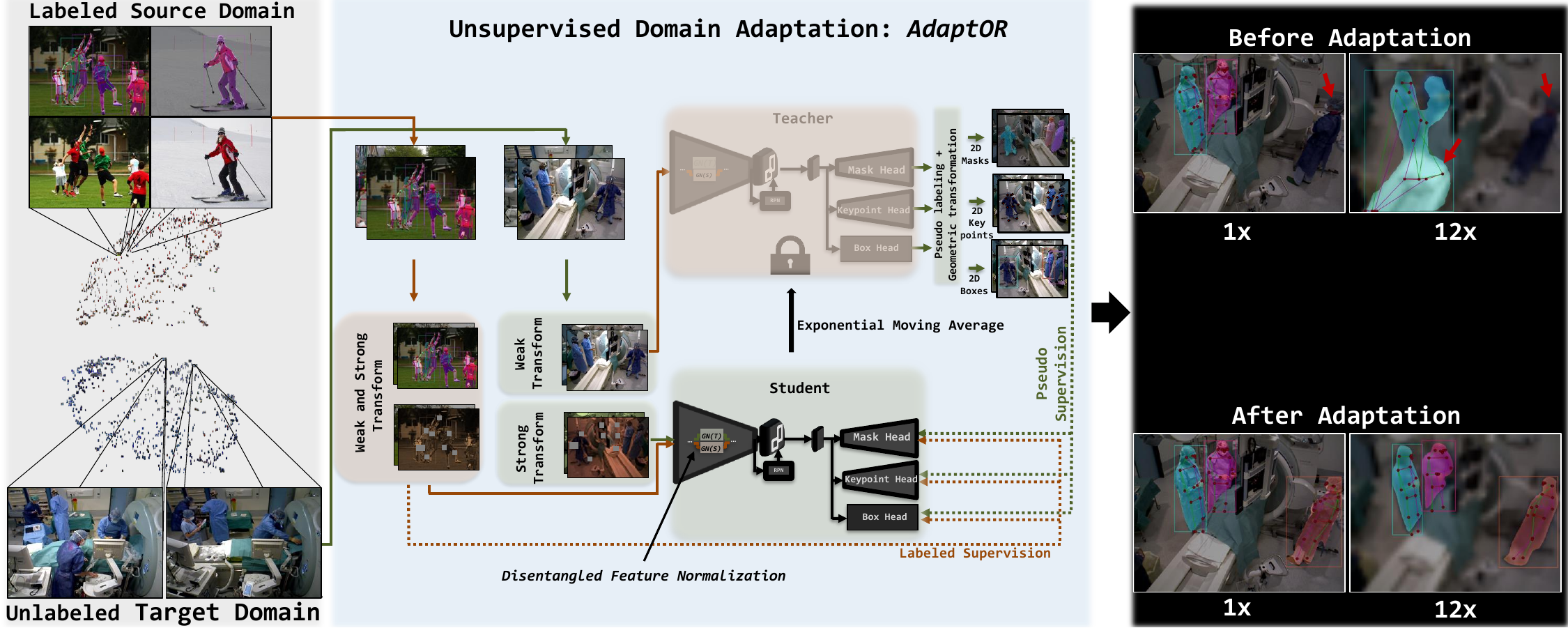

Unsupervised domain adaptation for clinician pose estimation and instance segmentation in the operating room

Vinkle Srivastav, Afshin Gangi, Nicolas Padoy, Medical Image Analysis (2022)

- Python ≥ 3.6, PyTorch ≥ 1.5, and a torchvision version matching the installed PyTorch.

- Detectron2: follow detectron2's INSTALL.md to install it.

- Gradio, for the demo:

  ```bash
  pip install gradio
  ```

- We used Python 3.7.8, PyTorch 1.6.0, torchvision 0.7, detectron2 0.3, CUDA 10.2, and cuDNN 7.6.5 in our experiments.

- Run the following commands to download the repository.

  ```bash
  AdaptOR=/path/to/the/repository
  git clone https://github.com/CAMMA-public/adaptor.git $AdaptOR
  cd $AdaptOR
  ```

- Follow Datasets.md to download the COCO dataset. The directory structure should look as follows.

  ```text
  adaptor/
  └── datasets/
      └── coco/
          ├── train2017/
          ├── val2017/
          └── annotations/
              ├── person_keypoints_train2017.json
              └── person_keypoints_val2017.json
  ...
  ```

- We will soon release the TUM-OR dataset needed for the domain adaptation experiments of AdaptOR.
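The version requirements and the COCO layout listed above can be sanity-checked before running anything. The following is a minimal sketch, not something shipped with AdaptOR: `version_ge`, `require`, and `check_coco_layout` are hypothetical helper names, it assumes `sort -V` (GNU coreutils) is available, and it assumes it is run from the repository root (`$AdaptOR`) so that the datasets live under `./datasets`.

```bash
#!/usr/bin/env bash
# Pre-flight sketch (hypothetical helpers, not part of the AdaptOR repository).
# Run from the repository root, i.e. $AdaptOR.

# Succeeds if version $1 >= version $2, using sort's version ordering
# (so 1.10.0 correctly sorts after 1.5).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Report one requirement; fails when the version is missing or too old.
require() {  # usage: require NAME INSTALLED_VERSION MINIMUM_VERSION
  local name="$1" have="${2%%+*}" need="$3"  # strip local suffixes such as "+cu102"
  if [ -n "$have" ] && version_ge "$have" "$need"; then
    echo "ok: $name $have (>= $need)"
  else
    echo "missing or too old: $name '$have' (need >= $need)" >&2
    return 1
  fi
}

# Check the COCO directories and keypoint annotation files from the tree above.
check_coco_layout() {  # usage: check_coco_layout DATASETS_DIR
  local root="$1/coco" missing=0 p
  for p in train2017 val2017 annotations; do
    [ -d "$root/$p" ] || { echo "missing directory: $root/$p" >&2; missing=1; }
  done
  for p in person_keypoints_train2017.json person_keypoints_val2017.json; do
    [ -f "$root/annotations/$p" ] || { echo "missing file: $root/annotations/$p" >&2; missing=1; }
  done
  return "$missing"
}

# Minimum versions from the requirements list (Python >= 3.6, PyTorch >= 1.5).
if command -v python >/dev/null 2>&1; then
  require Python "$(python -c 'import platform; print(platform.python_version())')" 3.6
  require PyTorch "$(python -c 'import torch; print(torch.__version__)' 2>/dev/null)" 1.5
fi
check_coco_layout ./datasets && echo "ok: COCO layout under ./datasets"
```

Each check prints its own diagnostic and returns non-zero on failure, so the helpers can also be chained with `&&` in a larger setup script.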

| Model | Supervision | AP<sup>bb</sup><sub>person</sub> | AP<sup>kp</sup><sub>person</sub> | AP<sup>mask</sup><sub>person</sub> | Model Weights |
|---|---|---|---|---|---|
| MoCo-v2_R_50_detectron2 | - | - | - | - | download |
| kmrcnn_R_50_FPN_3x_gn_amp_sup1 | 1% | 42.57 | 38.22 | 36.06 | download |
| kmrcnn_R_50_FPN_3x_gn_amp_sup2 | 2% | 45.37 | 44.08 | 38.96 | download |
| kmrcnn_R_50_FPN_3x_gn_amp_sup5 | 5% | 49.90 | 49.79 | 43.10 | download |
| kmrcnn_R_50_FPN_3x_gn_amp_sup10 | 10% | 52.70 | 56.65 | 45.46 | download |
| kmrcnn_R_50_FPN_3x_gn_amp_sup100 | 100% | 57.50 | 66.20 | 49.90 | download |

First, download the pre-trained models and copy them into `$AdaptOR/models`. Then run `eval_ssl_coco.sh`, or use the following bash script, to evaluate AdaptOR-SSL on the COCO dataset at each percentage of supervision.

```bash
PERCENTAGE_SUPERVISION=1  # or 2, 5, 10, 100
CONFIG_FILE=./configs/ssl_coco/kmrcnn_R_50_FPN_3x_gn_amp_sup${PERCENTAGE_SUPERVISION}.yaml
MODEL_WEIGHTS=./models/kmrcnn_R_50_FPN_3x_gn_amp_sup${PERCENTAGE_SUPERVISION}.pth

# Evaluate the checkpoint; the trailing KEY VALUE pair overrides the config option.
python train_net.py \
    --eval-only \
    --config ${CONFIG_FILE} \
    MODEL.WEIGHTS ${MODEL_WEIGHTS}
```

Run `train_ssl_coco.sh`, or use the following bash script, to train AdaptOR-SSL on the COCO dataset at each percentage of supervision on 4 V100 GPUs. To train on more or fewer GPUs, follow the linear scaling rule.

```bash
PERCENTAGE_SUPERVISION=1  # or 2, 5, 10, 100
CONFIG_FILE=./configs/ssl_coco/kmrcnn_R_50_FPN_3x_gn_amp_sup${PERCENTAGE_SUPERVISION}.yaml

# Train with the selected config on 4 GPUs.
python train_net.py \
    --num-gpus 4 \
    --config ${CONFIG_FILE}
```

Training on the TUM-OR dataset for the domain adaptation experiments of AdaptOR is coming soon.
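The linear scaling rule mentioned above keeps the per-GPU batch size fixed: when the number of GPUs changes by a factor k, the total batch size and the base learning rate are multiplied by k, and the iteration schedule (maximum iterations and each learning-rate decay step) is divided by k so that the number of epochs stays the same. The sketch below only does that arithmetic. The helper names are hypothetical, and the reference values in the example (LR 0.01, batch 16, 180000 iterations) are placeholders, not AdaptOR's settings; read the real ones from the YAML config. `SOLVER.BASE_LR`, `SOLVER.IMS_PER_BATCH`, and `SOLVER.MAX_ITER` are detectron2's standard solver keys, but semi-supervised configs may define their batch sizes under different keys, so check the file.

```bash
#!/usr/bin/env bash
# Linear scaling rule sketch (hypothetical helpers, not part of AdaptOR).
# Per-GPU batch stays fixed; with k = NEW_GPUS / REF_GPUS:
#   total batch * k, base LR * k, iteration schedule / k.

# Scale the base learning rate (floating point, computed with awk).
scale_lr() {     # usage: scale_lr BASE_LR REF_GPUS NEW_GPUS
  awk -v lr="$1" -v g0="$2" -v g1="$3" 'BEGIN { printf "%g\n", lr * g1 / g0 }'
}

# Scale the total number of images per batch (integer arithmetic).
scale_batch() {  # usage: scale_batch BASE_BATCH REF_GPUS NEW_GPUS
  echo $(( $1 * $3 / $2 ))
}

# Scale an iteration count (MAX_ITER or one LR-decay step) inversely.
scale_iters() {  # usage: scale_iters BASE_ITERS REF_GPUS NEW_GPUS
  echo $(( $1 * $2 / $3 ))
}

# Illustrative only: placeholder 4-GPU reference values moved to 8 GPUs.
# Replace 0.01 / 16 / 180000 with the values from your YAML config.
REF_GPUS=4
NEW_GPUS=8
echo "SOLVER.BASE_LR $(scale_lr 0.01 "$REF_GPUS" "$NEW_GPUS")"
echo "SOLVER.IMS_PER_BATCH $(scale_batch 16 "$REF_GPUS" "$NEW_GPUS")"
echo "SOLVER.MAX_ITER $(scale_iters 180000 "$REF_GPUS" "$NEW_GPUS")"
```

The printed `KEY VALUE` pairs can be appended after `--config ${CONFIG_FILE}` in the training command, the same way `MODEL.WEIGHTS` is passed in the evaluation command, together with the new `--num-gpus` value. The integer helpers truncate, so pick GPU counts that divide the batch and schedule evenly.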

To run the demo, download the pre-trained models and copy them into `$AdaptOR/models`.
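Before launching the demo or the evaluation script, it can help to confirm that the checkpoints actually landed in `$AdaptOR/models`. This is a minimal sketch: `list_missing_weights` is a hypothetical helper, the file names are the ones used by the evaluation script, and the demo may only need some of these files.

```bash
#!/usr/bin/env bash
# Sketch: list the released AdaptOR-SSL checkpoints missing from a models directory.
# list_missing_weights is a hypothetical helper, not part of the repository.

list_missing_weights() {  # usage: list_missing_weights MODELS_DIR
  local dir="$1" p f missing=0
  for p in 1 2 5 10 100; do
    f="$dir/kmrcnn_R_50_FPN_3x_gn_amp_sup${p}.pth"
    if [ ! -f "$f" ]; then
      echo "$f"   # print each missing checkpoint path on its own line
      missing=1
    fi
  done
  return "$missing"
}

# Falls back to the current directory when $AdaptOR is not set.
list_missing_weights "${AdaptOR:-.}/models" || echo "some checkpoints are missing (listed above)" >&2
```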

```bash
python AdaptOR-SSL_demo_image.py
```

Sample output of AdaptOR comparing the model before and after domain adaptation on images downsampled by a factor of 8x (80x60).

Sample output of AdaptOR-SSL comparing the model trained with 1% vs. 100% supervision

```bibtex
@article{srivastav2021adaptor,
  title={Unsupervised domain adaptation for clinician pose estimation and instance segmentation in the operating room},
  author={Srivastav, Vinkle and Gangi, Afshin and Padoy, Nicolas},
  journal={Medical Image Analysis},
  year={2022},
}
```

The project uses detectron2. We thank the authors of detectron2 for releasing the library. If you use detectron2, consider citing it using the following BibTeX entry.

```bibtex
@misc{wu2019detectron2,
  author = {Yuxin Wu and Alexander Kirillov and Francisco Massa and
            Wan-Yen Lo and Ross Girshick},
  title = {Detectron2},
  howpublished = {\url{https://github.com/facebookresearch/detectron2}},
  year = {2019}
}
```

The project also builds on the following research works. We thank the authors for releasing their code.

- Unbiased-Teacher

- Rand-Augment and Rand-Cut

- PyTorch Image Models

- MoCo-v2 pre-trained weights are used to initialize the AdaptOR-SSL models. The MoCo-v2 weights in the detectron2 format are available here: MoCo-v2-detectron2. By default, the config files download these weights from our S3 server. You can also use this script to convert the MoCo-v2 weights to the detectron2 format.

This code and the released models and datasets are available for non-commercial scientific research purposes as defined in the CC BY-NC-SA 4.0 license. By downloading and using this code, you agree to the terms in the LICENSE. Third-party code is subject to its respective license.