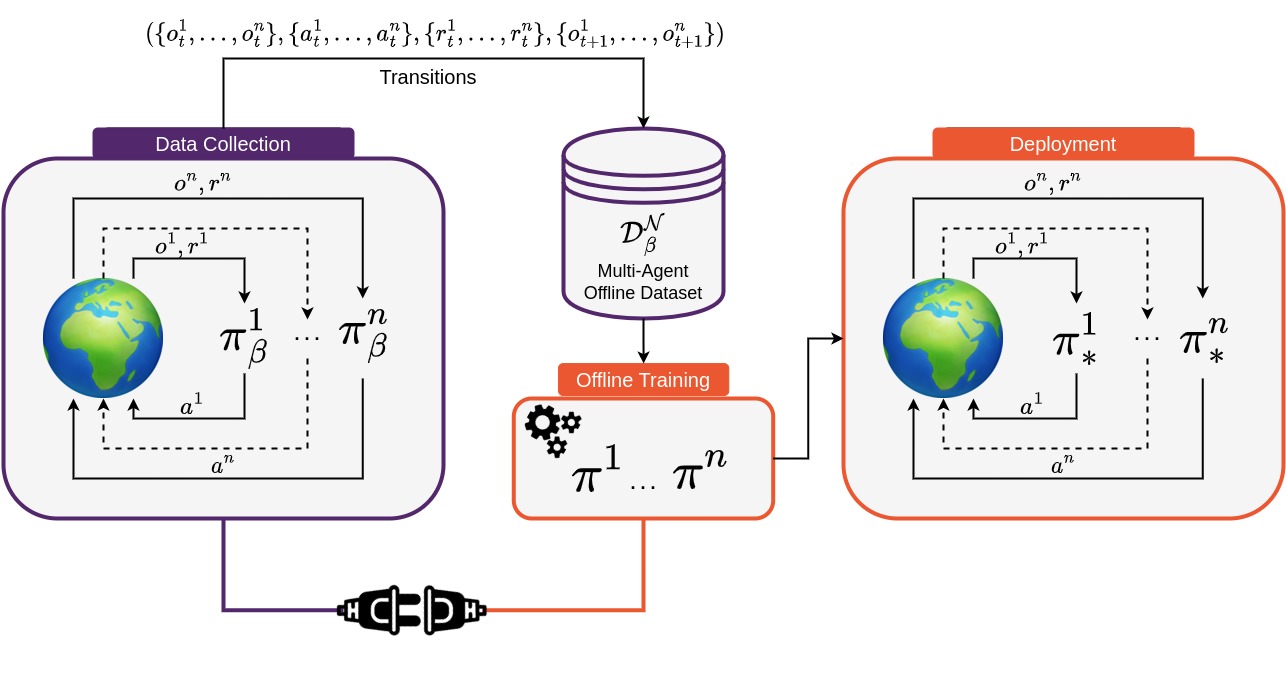

Offline MARL holds great promise for real-world applications by utilising static datasets to build decentralised controllers of complex multi-agent systems. However, offline MARL currently lacks a standardised benchmark for measuring meaningful research progress. Off-the-Grid MARL (OG-MARL) fills this gap by providing, in one place, a diverse suite of datasets with baselines on popular MARL benchmark environments, together with a unified API and an easy-to-use set of tools.

OG-MARL forms part of the InstaDeep MARL ecosystem, developed jointly with the open-source community. To join us in these efforts, reach out, raise issues and read our contribution guidelines or just 🌟 to stay up to date with the latest developments!

We have generated datasets on a diverse set of popular MARL environments. A list of currently supported environments is included in the table below. It is well known from the single-agent offline RL literature that the quality of experience in offline datasets can play a large role in the final performance of offline RL algorithms. Therefore in OG-MARL, for each environment and scenario, we include a range of dataset distributions including Good, Medium, Poor and Replay datasets in order to benchmark offline MARL algorithms on a range of different dataset qualities. For more information on why we chose to include each environment and its task properties, please read our accompanying paper.

| Environment | Scenario | Agents | Act | Obs | Reward | Types | Repo |

|---|---|---|---|---|---|---|---|

| 🔫SMAC v1 | 3m 8m 2s3z 5m_vs_6m 27m_vs_30m 3s5z_vs_3s6z 2c_vs_64zg |

3 8 5 5 27 8 2 |

Discrete | Vector | Dense | Homog Homog Heterog Homog Homog Heterog Homog |

source |

| 💣SMAC v2 | terran_5_vs_5 zerg_5_vs_5 terran_10_vs_10 |

5 5 10 |

Discrete | Vector | Dense | Heterog | source |

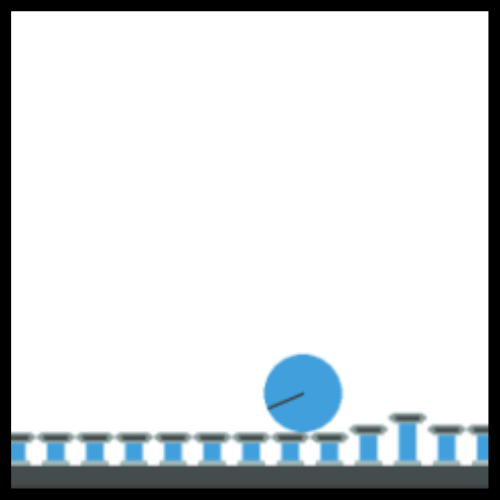

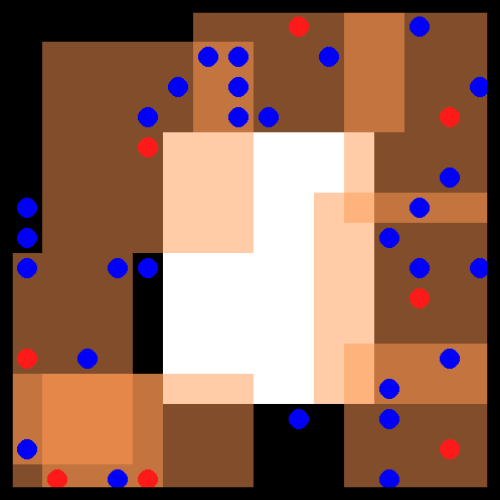

| 🐻PettingZoo | Pursuit Co-op Pong PistonBall |

8 2 15 |

Discrete Discrete Cont. |

Pixels | Dense | Homog Heterog Homog |

source |

| 🚅Flatland | 3 Trains 5 Trains |

3 5 |

Discrete | Vector | Dense | Homog | source |

| 🐜MAMuJoCo | 2-HalfCheetah 2-Ant 4-Ant |

2 2 4 |

Cont. | Vector | Dense | Heterog Homog Homog |

source |

| 🏙️CityLearn | 2022_all_phases | 17 | Cont. | Vector | Dense | Homog | source |

| 🔌Voltage Control | case33_3min_final | 6 | Cont. | Vector | Dense | Homog | source |

To install og-marl, run the following command from the root of the cloned repository.

    pip install -e ".[datasets,baselines]"

Depending on the environment you want to use, you should also install that environment's dependencies. We provide convenient shell scripts for this.

    bash install_environments/<environment_name>.sh

Replace `<environment_name>` with the name of the environment you want to install.
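
As a rough sketch, the same step could be driven from Python. The helper names `install_script_for` and `install_environment` are hypothetical and not part of OG-MARL; the only detail taken from the instructions above is the `install_environments/<environment_name>.sh` path pattern.

```python
import subprocess
from pathlib import Path


def install_script_for(environment_name: str, repo_root: Path = Path(".")) -> Path:
    """Build the path of the install script for one environment (hypothetical helper)."""
    return repo_root / "install_environments" / f"{environment_name}.sh"


def install_environment(environment_name: str, repo_root: Path = Path(".")) -> None:
    """Run the install script for one environment, failing early if it is missing."""
    # Resolve to an absolute path so that changing the working directory below is safe.
    script = install_script_for(environment_name, repo_root).resolve()
    if not script.is_file():
        raise FileNotFoundError(f"No install script found at {script}")
    # Equivalent to running `bash install_environments/<environment_name>.sh`
    # from the repository root.
    subprocess.run(["bash", str(script)], check=True, cwd=repo_root)
```

Called from the repository root, `install_environment(name)` behaves like the `bash` command above, but raises a clear error when no script exists for `name`.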

Installing the dependencies of several different environments in the same Python virtual environment (or conda environment) may work in some cases, but in others they may have conflicting requirements. We therefore recommend maintaining a separate virtual environment for each environment.
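
One way to follow this recommendation is Python's standard `venv` module. The sketch below creates one virtual environment per OG-MARL environment; the `.venvs/` location and the helper name `create_venv_for` are assumptions made for illustration, not something OG-MARL prescribes.

```python
import venv
from pathlib import Path


def create_venv_for(
    environment_name: str,
    root: Path = Path(".venvs"),
    with_pip: bool = True,
) -> Path:
    """Create an isolated virtual environment dedicated to one environment."""
    env_dir = root / environment_name
    # venv.create builds the directory tree and, if requested, bootstraps pip.
    venv.create(env_dir, with_pip=with_pip)
    return env_dir
```

After creating an environment this way, activate it (on Linux or macOS, `source .venvs/<environment_name>/bin/activate`) before running the `pip install` command and the matching install script, so that each set of dependencies stays isolated.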

Next, you need to download the dataset you want to use and add it to the correct file path. Go to the OG-MARL website (https://sites.google.com/view/og-marl) and download the dataset. Once the zip file is downloaded, add it to a directory called `datasets` on the same level as the `og_marl/` directory. The folder structure should look like this:

    examples/
        |_> ...
    og_marl/
        |_> ...
    datasets/
        |_> smacv1/
            |_> 3m/
            |   |_> Good/
            |   |_> Medium/
            |   |_> Poor/
            |_> ...
        |_> smacv2/
            |_> terran_5_vs_5/
            |   |_> Good/
            |   |_> Medium/
            |   |_> Poor/
            |_> ...
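
A quick way to confirm that a dataset landed in the right place is to check for the expected folders. The following is a minimal sketch, assuming the `datasets/<environment>/<scenario>/<quality>/` layout shown above; the helper names are hypothetical, and the default quality list only covers the three folders that appear in the tree.

```python
from pathlib import Path

# Quality folders shown in the layout above; adjust if a scenario ships other sets.
QUALITIES = ("Good", "Medium", "Poor")


def expected_dataset_dirs(datasets_root, environment, scenario, qualities=QUALITIES):
    """List the directories a scenario is expected to have under datasets/."""
    root = Path(datasets_root)
    return [root / environment / scenario / quality for quality in qualities]


def missing_dataset_dirs(datasets_root, environment, scenario, qualities=QUALITIES):
    """Return the expected dataset directories that do not exist on disk."""
    return [
        path
        for path in expected_dataset_dirs(datasets_root, environment, scenario, qualities)
        if not path.is_dir()
    ]
```

For example, `missing_dataset_dirs("datasets", "smacv1", "3m")` returns an empty list once the `Good`, `Medium` and `Poor` folders for `3m` are all in place.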

Note: because we support many different environments, each with its own set of dependencies that often conflict, you may need to follow slightly different installation instructions for each environment. For this, we recommend reading the detailed installation guide.

Below we provide a code snippet demonstrating the ease of use of OG-MARL. The code shows how to record and load datasets in a simple example using the 3m scenario from SMAC. We also provide a detailed tutorial for a step-by-step guide across multiple environments.

    from og_marl import SMAC
    from og_marl import QMIX
    from og_marl import OfflineLogger

    # Instantiate environment
    env = SMAC("3m")

    # Wrap env in offline logger
    env = OfflineLogger(env)

    # Make multi-agent system
    system = QMIX(env)

    # Collect data
    system.run_online()

    # Load dataset
    dataset = env.get_dataset("Good")

    # Train offline
    system.run_offline(dataset)

We are currently working on a large refactor of OG-MARL to get rid of the dependency on reverb and launchpad. This will make the code a lot easier to work with. The current progress on the refactor can be followed on the branch `refactor/remove-reverb-and-launchpad`.

Offline MARL also lends itself well to the new wave of hardware-accelerated research and development in the field of RL. In the near future, we plan to release a JAX version of OG-MARL.

If you use OG-MARL in your work, please cite the library using:

    @misc{formanek2023offthegrid,
        title={Off-the-Grid MARL: a Framework for Dataset Generation with Baselines for Cooperative Offline Multi-Agent Reinforcement Learning},
        author={Claude Formanek and Asad Jeewa and Jonathan Shock and Arnu Pretorius},
        year={2023},
        eprint={2302.00521},
        archivePrefix={arXiv},
        primaryClass={cs.LG}
    }

Other works also form part of InstaDeep's MARL ecosystem in JAX. In particular, we suggest users check out the following sister repositories: