MoPA: Multi-Modal Prior Aided Domain Adaptation for 3D Semantic Segmentation

Haozhi Cao1,*,

Yuecong Xu2,

Jianfei Yang2,

Pengyu Yin1,

Shenghai Yuan1,

Lihua Xie1

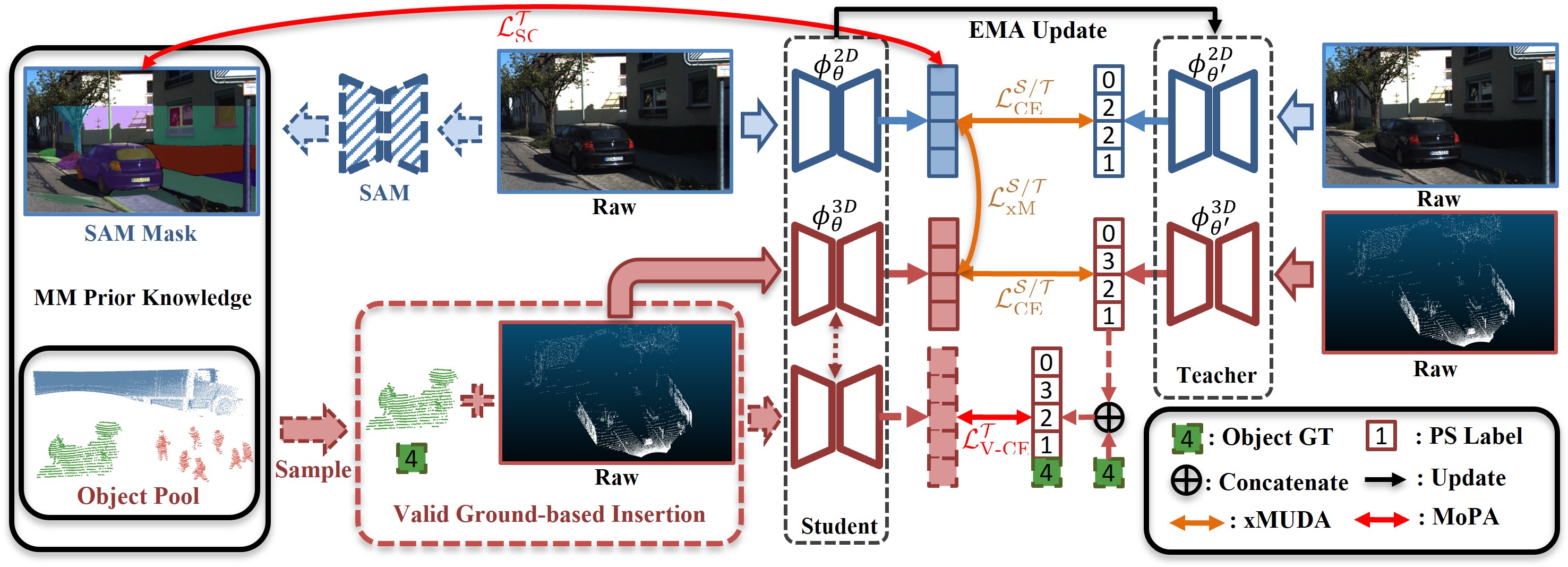

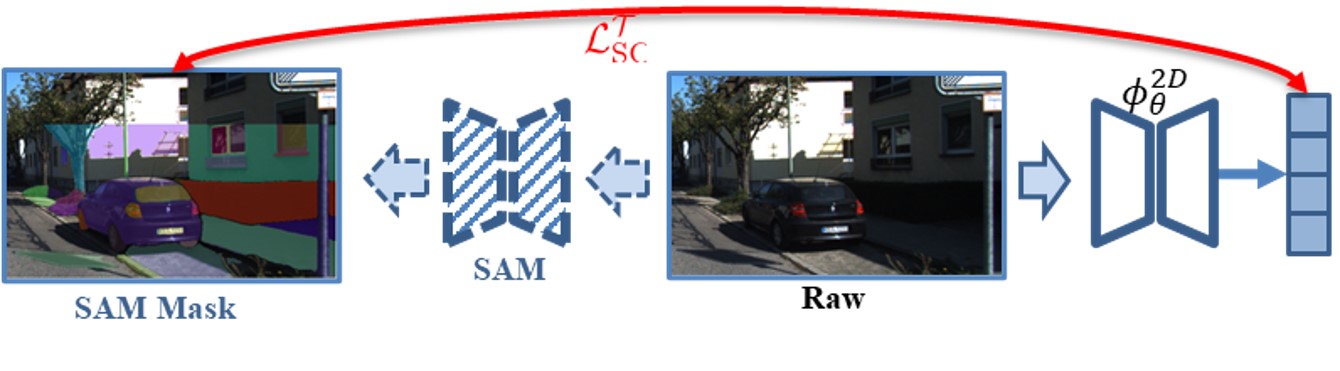

MoPA is a multi-modal unsupervised domain adaptation (MM-UDA) method that aims to alleviate the imbalanced class-wise performance on Rare Objects (ROs) and the lack of 2D dense supervision signals through Valid Ground-based Insertion (VGI) and Segment Anything Mask consistency (SAM consistency). The overall structure is as follows.

Specifically, VGI inserts more ROs from the wild, together with their ground truth, to guide the recognition of ROs during the UDA process without introducing artificial artifacts, while SAM consistency leverages image masks from the Segment Anything Model to encourage mask-wise prediction consistency.
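To make the idea of mask-wise prediction consistency more concrete, here is a minimal sketch in plain Python. It is not the loss used in MoPA: the function name `mask_consistency_loss`, the flattened per-pixel inputs, the `-1` convention for pixels outside any mask, and the squared-deviation penalty are all assumptions chosen for illustration. The sketch only shows the general pattern of pulling every pixel's class probabilities toward the average prediction of the mask it falls in.

```python
from collections import defaultdict


def mask_consistency_loss(probs, mask_ids):
    """Illustrative mask-wise consistency penalty (hypothetical, not MoPA's loss).

    probs:    per-pixel class-probability lists, e.g. flattened 2D network output.
    mask_ids: per-pixel mask index (assumed convention: -1 = not in any mask).
    Returns the mean squared deviation of each masked pixel's probabilities
    from the mean probabilities of its mask.
    """
    if len(probs) != len(mask_ids):
        raise ValueError("probs and mask_ids must have the same length")

    # Group pixel predictions by the mask they belong to.
    groups = defaultdict(list)
    for pixel_probs, mask_id in zip(probs, mask_ids):
        if mask_id >= 0:
            groups[mask_id].append(pixel_probs)

    total, count = 0.0, 0
    for pixels in groups.values():
        num_classes = len(pixels[0])
        # Average prediction over all pixels inside this mask.
        mean = [sum(p[c] for p in pixels) / len(pixels) for c in range(num_classes)]
        # Penalise each pixel's deviation from the mask average.
        for p in pixels:
            total += sum((p[c] - mean[c]) ** 2 for c in range(num_classes))
            count += 1

    return total / count if count else 0.0
```

The penalty is zero when all pixels inside a mask agree and grows as predictions within a mask diverge. In a real training setup the same pattern would typically be written with tensor operations so that gradients can flow back to the network.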

- [2024.03] We are now refactoring our code and evaluating its feasibility. Code will be available shortly.

- [2024.01] Our paper has been accepted to ICRA 2024! Check our paper on arXiv here.

- Initial release. 🚀

- Add installation and prerequisite details.

- Add data preparation details.

- Add training details.

- Add evaluation details.

We greatly appreciate the contributions of the following public repos:

@article{cao2023mopa,
  title={Mopa: Multi-modal prior aided domain adaptation for 3d semantic segmentation},
  author={Cao, Haozhi and Xu, Yuecong and Yang, Jianfei and Yin, Pengyu and Yuan, Shenghai and Xie, Lihua},
  journal={arXiv preprint arXiv:2309.11839},
  year={2023}
}