This code is part of the supplementary materials for our method, which achieved 11th place among 170 teams in the ISBI 2020 MoNuSAC Workshop.

A joint paper summarizing the dataset and the methods developed by the participants, titled MoNuSAC2020: A Multi-organ Nuclei Segmentation and Classification Challenge, is published in IEEE Transactions on Medical Imaging (TMI).

- TMI Paper

- Technical Report (PDF)

- Leaderboard

- Slides

- ISBI 2020 MoNuSAC Workshop Oral Presentation (Time - 1:58:46)

- Participant Manuscripts

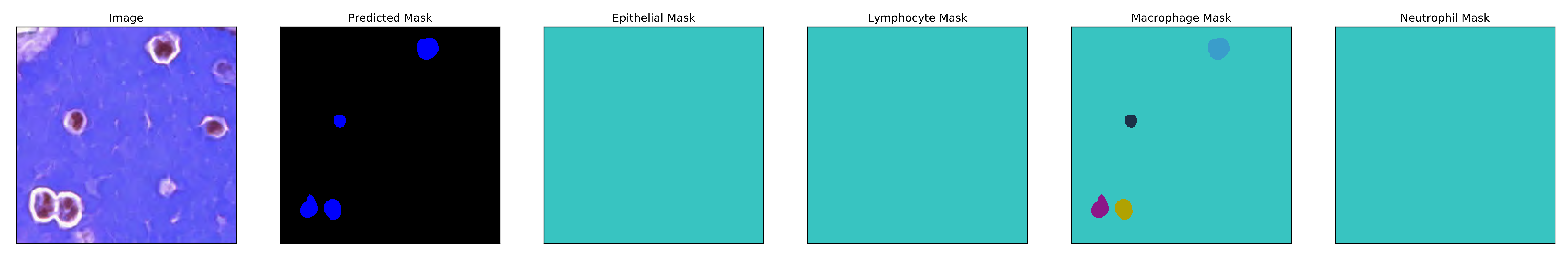

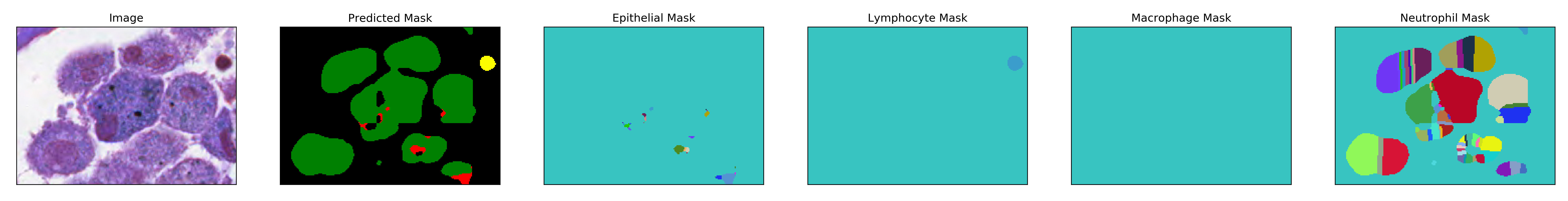

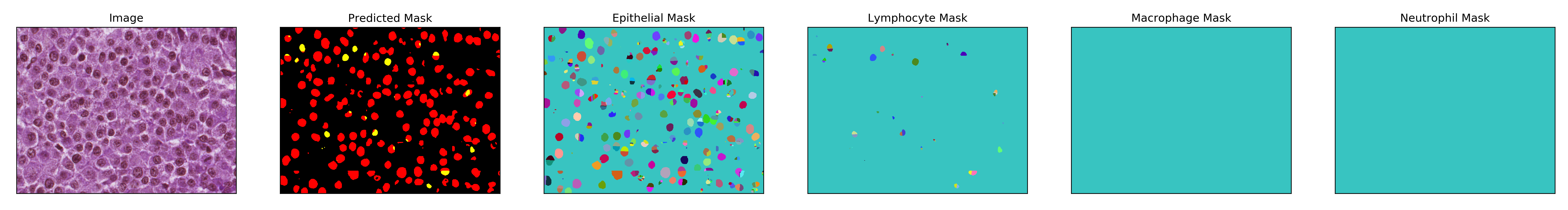

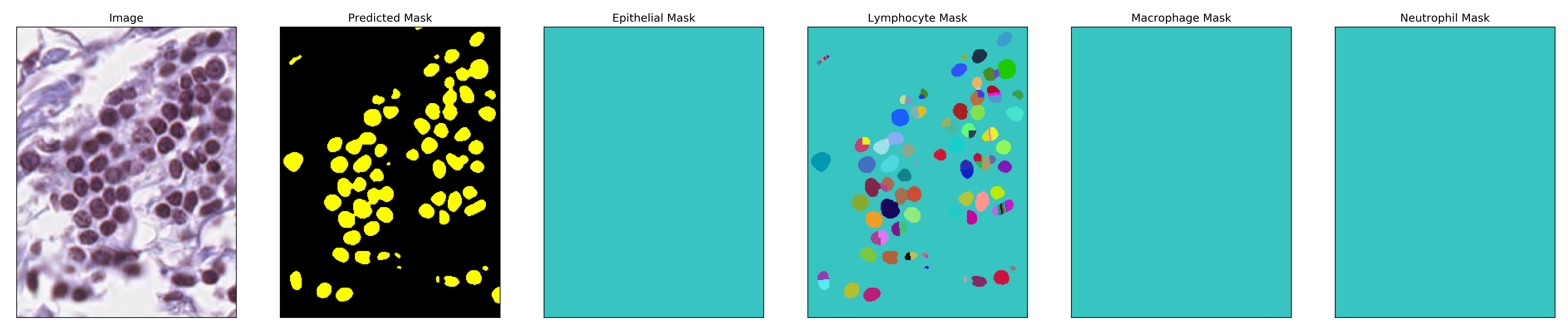

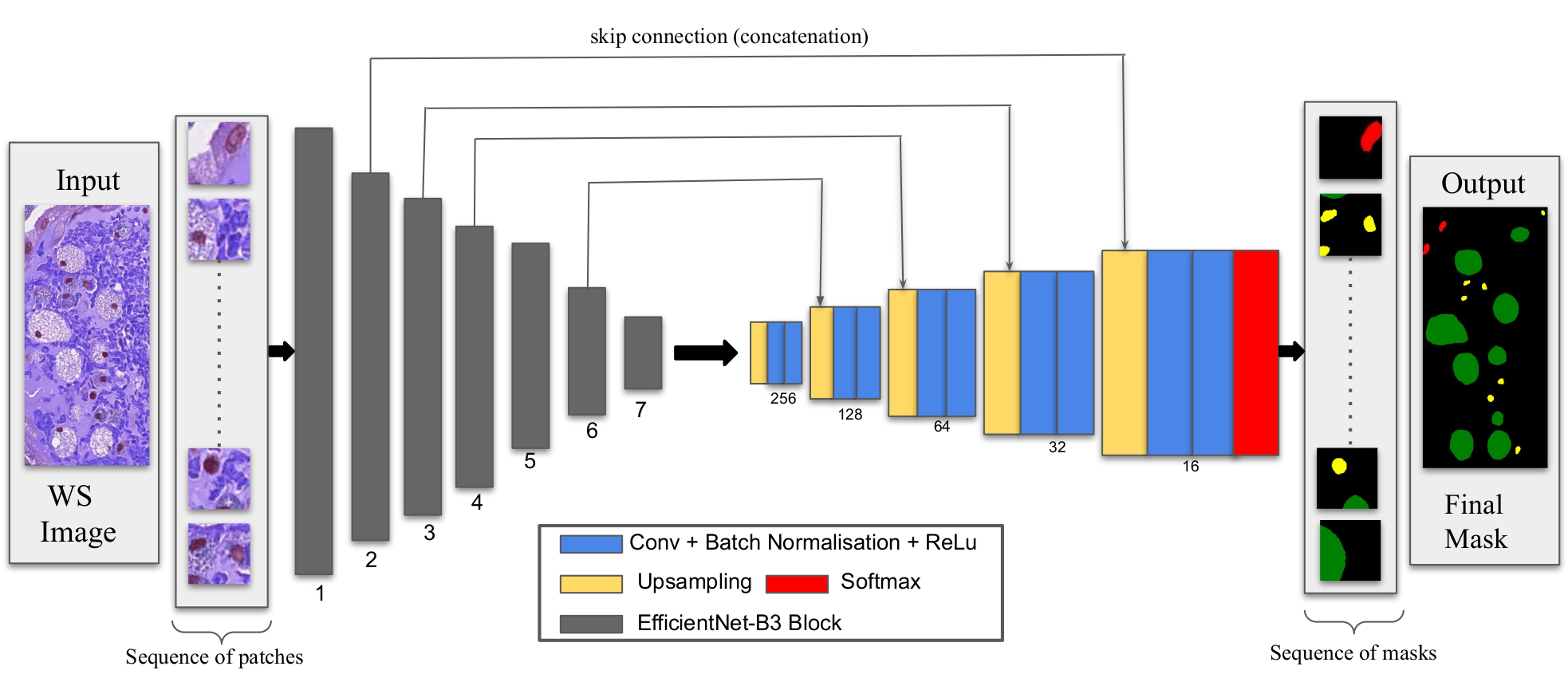

In this work, we implement an end-to-end deep learning framework for automatic nuclei segmentation and classification from H&E-stained whole slide images (WSI) of multiple organs (breast, kidney, lung and prostate). The proposed approach, called PatchEUNet, is a fully convolutional neural network of the U-Net family in which the U-Net encoder is replaced by an EfficientNet architecture initialized with ImageNet weights.

Because the whole slide images of the MoNuSAC 2020 Challenge vary widely in scale, we use a patchwise training scheme to mitigate the problems of multiple scales and limited training data. To address class imbalance, we design an objective function defined as a weighted sum of a focal loss and the Jaccard distance, resulting in significantly improved performance. During inference, we apply a median filter to the predicted masks to refine the segmented outputs. Finally, for each class mask, we apply the watershed algorithm to obtain the individual instances of that class.
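The combined objective described above can be sketched in NumPy as follows. This is a minimal illustration, not the training code from the notebooks: the function names, the focal parameters `gamma` and `alpha`, and the weights `w_focal` and `w_jaccard` are all assumptions, since the actual values are not stated here.

```python
import numpy as np

def focal_loss(y_true, y_pred, gamma=2.0, alpha=0.25, eps=1e-7):
    """Categorical focal loss averaged over pixels.

    y_true: one-hot array of shape (..., C); y_pred: class probabilities,
    same shape. gamma and alpha are assumed defaults, not the repo's values.
    """
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    # Down-weight well-classified pixels with the (1 - p)^gamma factor
    per_class = -alpha * y_true * (1.0 - y_pred) ** gamma * np.log(y_pred)
    return float(per_class.sum(axis=-1).mean())

def jaccard_distance(y_true, y_pred, smooth=1e-5):
    """1 - soft IoU, computed over all pixels and classes at once."""
    intersection = np.sum(y_true * y_pred)
    union = np.sum(y_true) + np.sum(y_pred) - intersection
    return float(1.0 - (intersection + smooth) / (union + smooth))

def combined_loss(y_true, y_pred, w_focal=1.0, w_jaccard=1.0):
    """Weighted sum of focal loss and Jaccard distance (weights are assumed)."""
    return (w_focal * focal_loss(y_true, y_pred)
            + w_jaccard * jaccard_distance(y_true, y_pred))

# Four pixels, three classes: compare a perfect and a uniform prediction
y = np.eye(3)[[0, 1, 2, 1]]
uniform = np.full_like(y, 1.0 / 3.0)
print(combined_loss(y, y), combined_loss(y, uniform))
```

The focal term concentrates the gradient on hard, often minority-class pixels, while the Jaccard term rewards overlap directly, which is one common motivation for combining the two under class imbalance.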

The results in the table below are from a validation set split from the training set provided for the challenge. They cover multi-class segmentation of the 4 cell types.

| Config | IoU (%) | Dice (%) |
|---|---|---|
| U-Net | 77 | 78 |
| Proposed | 84 | 87 |
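For reference, IoU and Dice for multi-class label masks can be computed along the following lines. This is a sketch under assumptions: the helper name `iou_and_dice` is hypothetical, and averaging per class over the classes present is one possible convention; how the numbers in the table were averaged is not stated here.

```python
import numpy as np

def iou_and_dice(y_true, y_pred, num_classes):
    """Mean IoU and Dice over the classes present in either label mask.

    y_true, y_pred: integer label maps of identical shape.
    """
    ious, dices = [], []
    for c in range(num_classes):
        t = (y_true == c)
        p = (y_pred == c)
        inter = np.logical_and(t, p).sum()
        total = t.sum() + p.sum()
        if total == 0:
            continue  # class absent from both masks, skip it
        union = total - inter
        ious.append(inter / union)
        dices.append(2 * inter / total)
    return float(np.mean(ious)), float(np.mean(dices))

# Tiny 2x2 example with two classes
gt = np.array([[0, 0], [1, 1]])
pred = np.array([[0, 1], [1, 1]])
print(iou_and_dice(gt, pred, num_classes=2))
```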

- Python: 3.6

- TensorFlow: 2.0.0

- Keras: 2.3.1

- segmentation_models

- OpenCV

Run this command to create the environment:

conda env create -f environment.yml

Or you can create your own environment with:

conda create -n yourenvname python=3.6 anaconda

Then install the packages

conda install -c anaconda tensorflow-gpu=2.0.0

conda install -c conda-forge keras

conda install -c conda-forge opencv

conda install -c conda-forge tqdm

Then run `conda activate yourenvname`.

NOTE: segmentation_models does not have a conda distribution. You can install it by running `pip install -U --pre segmentation-models --user` inside your environment. More details at segmentation_models.

- `MoNuSAC_images_and_annotations`: original dataset which has the patients' whole slide images (WSI) and ground truth. (Given by challenge organizers)

- `MoNuSAC_masks`: contains binary masks generated from `get_mask.ipynb`.

- `Testing Images`: contains test images, without annotations. (Given by challenge organizers)

- `data_processedv0`: contains all raw images and the ground truth masks.

- `data_processedv4`: sliding window patchwise data from original images and masks in `data_processedv0`.

- `data_processedv5`: 80/20 trainval split from `data_processedv4`.
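The sliding-window patching and the 80/20 split behind `data_processedv4` and `data_processedv5` can be sketched as below. This is an illustration only: the helper names, the patch size of 256, the stride of 128, the zero-padding of small images, and the random seed are all assumptions, and the notebooks may handle borders and splitting differently.

```python
import random
import numpy as np

def extract_patches(image, patch_size=256, stride=128):
    """Cut an image of shape (H, W, ...) into square patches with a sliding window."""
    h, w = image.shape[:2]
    # Zero-pad images that are smaller than a single patch (assumed behaviour)
    pad_h, pad_w = max(patch_size - h, 0), max(patch_size - w, 0)
    if pad_h or pad_w:
        pad = [(0, pad_h), (0, pad_w)] + [(0, 0)] * (image.ndim - 2)
        image = np.pad(image, pad, mode="constant")
        h, w = image.shape[:2]
    # Window origins; append a final origin so the bottom/right border is covered
    ys = list(range(0, h - patch_size + 1, stride))
    xs = list(range(0, w - patch_size + 1, stride))
    if ys[-1] != h - patch_size:
        ys.append(h - patch_size)
    if xs[-1] != w - patch_size:
        xs.append(w - patch_size)
    return [image[y:y + patch_size, x:x + patch_size] for y in ys for x in xs]

def train_val_split(items, val_fraction=0.2, seed=0):
    """Shuffle items reproducibly and split them into (train, val) lists."""
    order = list(range(len(items)))
    random.Random(seed).shuffle(order)
    n_val = int(round(len(items) * val_fraction))
    val = [items[i] for i in order[:n_val]]
    train = [items[i] for i in order[n_val:]]
    return train, val

patches = extract_patches(np.zeros((300, 500, 3), dtype=np.uint8))
train, val = train_val_split(patches)
print(len(patches), len(train), len(val))
```

The same window origins would be applied to an image and its mask so that each image patch stays aligned with its ground truth patch.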

- Clone repository (obviously!)

- Make a `dataset` folder.

- Put `MoNuSAC_images_and_annotations` in the `dataset` folder.

- Run `0_get_masks.ipynb`. You should get the `MoNuSAC_masks` folder in `dataset`.

- Run `1_data_process_MoNuSAC.ipynb` to get raw images and their ground truth masks in `data_processedv0`.

- Run `2_extract_patches.ipynb` to extract patches of images and ground truth masks from the previous raw version, producing `data_processedv4` and the 80/20 split `data_processedv5`.

- Run `3_train.ipynb`. It trains PatchEUNet on `data_processedv5`.

- Put `Testing Images` in the `dataset` folder.

- Run `3c_load_test_data.ipynb`. It outputs a `test_images` folder with all test images.

- Run `4_inference.ipynb` to get final prediction masks from `test_images`. (For visualization)

- Run `4b_inference.ipynb` to get final prediction masks according to the MoNuSAC submission format.
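As described earlier, predicted masks are refined with a median filter at inference time before instances are separated. A minimal NumPy sketch of that smoothing step on a single binary class mask is shown below. The helper name, the 3x3 window, and the edge-replication padding are assumptions; the notebooks may instead use a library routine such as OpenCV's `cv2.medianBlur`. The watershed step is not shown.

```python
import numpy as np

def median_filter(mask, size=3):
    """Apply a size x size median filter to a 2-D mask (size must be odd).

    Borders are handled by replicating edge pixels (an assumed choice).
    """
    r = size // 2
    padded = np.pad(mask, r, mode="edge")
    h, w = mask.shape
    # Stack shifted views so each pixel's neighbourhood lies along axis 0
    windows = np.stack([padded[dy:dy + h, dx:dx + w]
                        for dy in range(size) for dx in range(size)])
    return np.median(windows, axis=0).astype(mask.dtype)

# An isolated false-positive pixel is removed, while a solid blob keeps its core
noisy = np.zeros((7, 7), dtype=np.uint8)
noisy[0, 6] = 1          # speckle
noisy[2:5, 2:5] = 1      # 3x3 blob
clean = median_filter(noisy)
print(clean)
```

Removing isolated speckles before instance separation helps avoid tiny spurious regions being reported as separate nuclei.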

The weight file for the multiclass segmentation model is available at releases.

Model definition and baseline training scripts are based on https://segmentation-models.readthedocs.io/en/latest/install.html.

MIT