Code release for SceneReplica paper: ArXiv | Webpage

- Data Setup: Setup the data files, object models and create a ros workspace with source for the different algorithms

- Scene Setup: Setup the Scene in either simulation or real world

- Experiments: Run experiments from different algorithms listed

- Misc: Scene Generation, Video recording, ROS workspace setup

For ease of use, the code assumes that you have the data files (model meshes, generated scenes etc) under a single directory.

As an example it can be something simple like: ~/Datasets/benchmarking/

    ~/Datasets
       |--benchmarking
          |--models/
          |--grasp_data
             |--refined_grasps
                |-- fetch_gripper-{object_name}.json
             |--sgrasps.pk
          |--final_scenes
             |--scene_data/
                |-- scene_id_*.pk scene pickle files
             |--metadata/
                |-- meta-00*.mat metadata .mat files
                |-- color-00*.png color images for scene
                |-- depth-00*.png depth images for scene
             |--scene_ids.txt : selected scene ids on each line
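Before running anything, it can help to confirm that the data directory matches the layout above. The following is a minimal sketch, not part of the released code: the `EXPECTED` list and the `missing_entries` helper are hypothetical, and the sketch assumes that `sgrasps.pk` sits inside `grasp_data/` and `scene_ids.txt` inside `final_scenes/`.

```python
from pathlib import Path

# Relative paths assumed from the layout above (hypothetical list; adjust to your setup).
# "models" is only needed for the optional simulation setup.
EXPECTED = [
    "models",
    "grasp_data/refined_grasps",
    "grasp_data/sgrasps.pk",
    "final_scenes/scene_data",
    "final_scenes/metadata",
    "final_scenes/scene_ids.txt",
]


def missing_entries(root):
    """Return the expected relative paths that do not exist under root."""
    root = Path(root).expanduser()
    return [rel for rel in EXPECTED if not (root / rel).exists()]


if __name__ == "__main__":
    # Example root taken from the text; change it if your data lives elsewhere.
    for rel in missing_entries("~/Datasets/benchmarking"):
        print("missing:", rel)
```

An empty result means every listed entry was found; anything printed points at a download or extraction step that still needs to be done.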

Follow the steps below, download and extract the zip files:

- Download and extract Graspit generated grasps for YCB models: `grasp_data.zip` as `grasp_data/`

- Download the successful grasps file: `sgrasps.pk`. This file contains a list of possible successful grasp indices for each object.

  - Each object in each scene is checked for a motion plan to the standoff position, using all the grasps generated in grasp_data.

  - Grasps that provide a valid motion plan are saved w.r.t. that object and the corresponding scene.

  - In the actual experiments, the algorithm first iterates through these successful grasps and then through the rest.

- Download and extract Scenes Data: `final_scenes.zip` as `final_scenes/`

- [Optional] Download and extract YCB models for Gazebo and Isaac Sim (using textured_simple meshes): `models.zip`

  - Only needed if you want to play around with the YCB models in Gazebo or Isaac Sim simulation.

  - This already includes the edited model for the cafe table under the name `cafe_table_org`.

  - For Gazebo, create a symlink to the gazebo models into your Fetch Gazebo src/models/: `~/src/fetch_gazebo/fetch_gazebo/models`
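The grasp ordering described for `sgrasps.pk` (known-successful grasps first, then the remaining ones) can be sketched as follows. This is an illustrative sketch only: `order_grasps` is a hypothetical helper, it works on plain index lists, and it makes no assumption about how `sgrasps.pk` is actually structured or loaded.

```python
def order_grasps(num_grasps, successful_indices):
    """Return grasp indices with the known-successful ones first, then the rest.

    num_grasps: total number of grasps available for the object.
    successful_indices: indices that had a valid motion plan for this object/scene.
    """
    seen = set()
    first = []
    for idx in successful_indices:
        # Skip out-of-range or repeated indices so every grasp appears once.
        if 0 <= idx < num_grasps and idx not in seen:
            seen.add(idx)
            first.append(idx)
    rest = [idx for idx in range(num_grasps) if idx not in seen]
    return first + rest


print(order_grasps(6, [4, 1]))  # [4, 1, 0, 2, 3, 5]
```

Trying the pre-validated grasps first means the motion planner is most likely to succeed early, while the remaining grasps stay available as a fallback.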

Scene Idxs: 10, 25, 27, 33, 36, 38, 39, 48, 56, 65, 68, 77, 83, 84, 104, 122, 130, 141, 148, 161
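A minimal sketch for reading a `scene_ids.txt` file, which is described above as holding one selected scene id per line. The `read_scene_ids` helper is hypothetical and assumes the ids are plain integers.

```python
from pathlib import Path


def read_scene_ids(path):
    """Read one integer scene id per line, skipping blank lines."""
    text = Path(path).expanduser().read_text()
    return [int(line) for line in text.splitlines() if line.strip()]
```

For example, `read_scene_ids("~/Datasets/benchmarking/final_scenes/scene_ids.txt")` would return the ids as a list of integers, assuming the file sits at that location.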

Setup the data folders as described and follow the steps below:

- Set up the YCB scene in the real world as follows. `--datadir` is the directory containing the `color-*.png`, `depth-*.png`, `pose-*.png` and `*.mat` info files. Using the above Data Setup, it should be the folder `final_scenes/metadata/`.

  `cd src`

  `python setup_robot.py` (will raise the torso and adjust the head camera lookat)

  `python setup_ycb_scene.py --index [SCENE_IDX] --datadir [PATH TO DIR]`

- `setup_ycb_scene.py` starts publishing an overlay image, which we can use as a reference for real-world object placement.

- Run rviz to visualize the overlay image and adjust the objects in the real world:

  `rosrun rviz rviz -d config/scene_setup.rviz`

Details about real world setup:

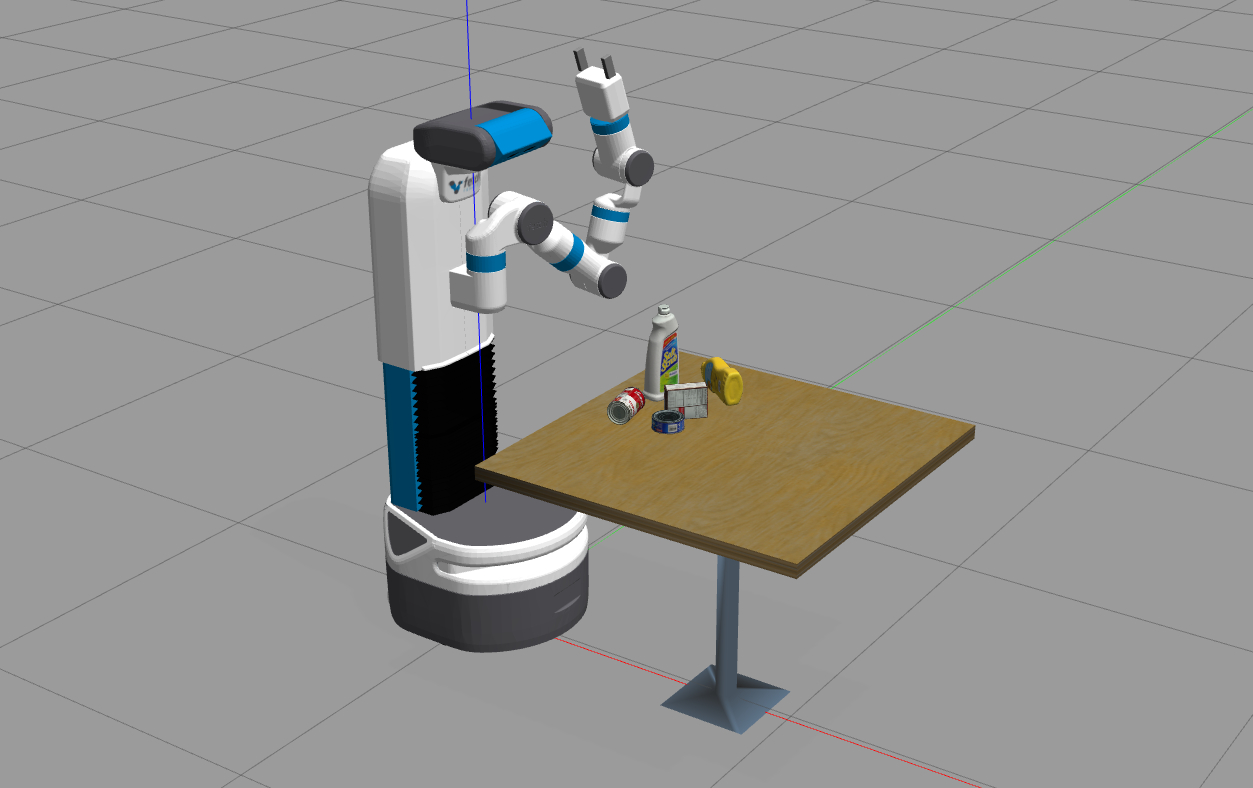

- We use a Fetch Mobile Manipulator for the experiments

- Table height used for the experiments was 0.75 meters

- Using a height adjustable table is preferred to ensure this match

- Sufficient lighting for the scene is also assumed. The performance of perception algorithms may be affected by different lighting conditions

Usage with other robots: The metadata files (specifically the color scene-overlay reference images) are obtained using a Fetch robot in simulation. So the same images will not work for scene replication unless your robot's camera (either internal or external) has the same pose. In order to recreate the same scene and generate new overlay images for your setup, you can follow these steps:

- Recreate the setup in simulation, where you can fix the camera (same pose as will be used in the real world). If possible, try to match the Fetch robot setup which we use (reference: `src/setup_robot.py`, which adjusts the camera lookat position).

- Spawn the desired scene in simulation using `setup_scene_sim.py` as a reference. This should spawn the objects in the correct locations in the simulation.

- Use `src/scene_generation/save_pose_results.py` as a reference to save your own version of the overlay images. They might look a bit different, but the end goal of recreating the scene in the real world should work as before.

- Once you have the overlay images, the entire pipeline works as documented: you re-create the scene in the real world using the overlay images (make sure you are not using the ones for the Fetch robot). This assumes that the camera pose in the real world is the same as the one used in simulation.

[Optional]

- Download the models of YCB objects as described above. Make sure to create a symlink to the gazebo models into your Fetch Gazebo src/models/: `~/src/fetch_gazebo/fetch_gazebo/models`

- Launch the tabletop YCB scene with only the robot:

  `roslaunch launch/just_robot.launch`

- Set up the desired scene in Gazebo:

  `cd src/`

  `python setup_scene_sim.py`

  Preferably, all data is under `~/Datasets/benchmarking/{scene_dir}/` (e.g. "final_scenes"). The script runs in a loop and asks you to enter the scene id at each iteration. It loads the objects in Gazebo and waits for user confirmation before the next scene.

- To use MoveIt with Gazebo for the Fetch robot, start MoveIt by

  `roslaunch launch/moveit_sim.launch`

- Try the model-based grasping as described below in Gazebo

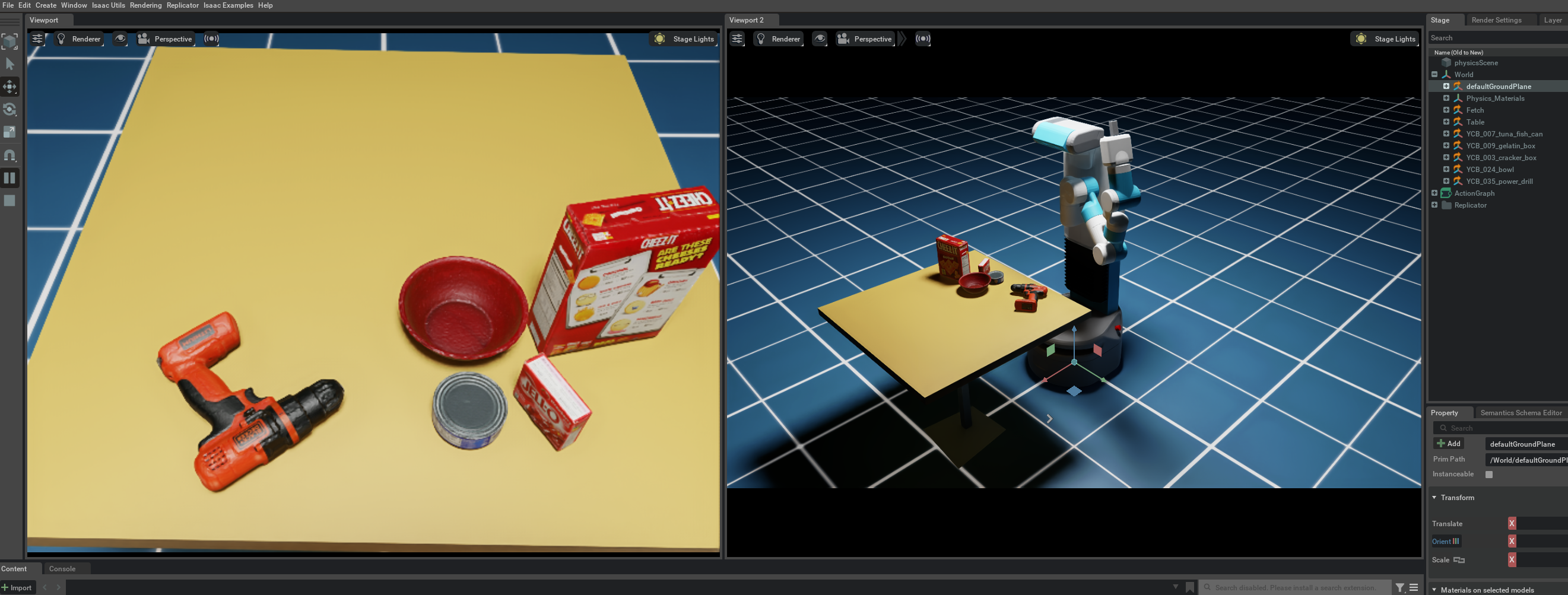

[Optional]

- Download the models of YCB objects as described above, and make sure you have isaac_sim correctly installed

- Set up the desired scene in Isaac Sim:

  `cd src/`

  `./$ISAAC_ROOT/python setup_scene_isaac.py --scene_index $scene_index`

- To use MoveIt with Isaac Sim for the Fetch robot, download and set up the isaac_sim_moveit package. Start the controllers and MoveIt by

  `cd isaac_sim_moveit/launch`

  `roslaunch fetch_isaac_controllers.launch`

  `roslaunch fetch_isaac_moveit.launch`

- Try the model-based grasping as described below in Isaac Sim. For example,

  `cd src`

  `python3 bench_model_based_grasping.py --data_dir=../data/ --scene_idx 68 --pose_method isaac --obj_order nearest_first`

- We use a multi-terminal setup for ease of use (example: Terminator)

- In one part, we have the perception repo and run bash scripts to publish the required perception components (e.g., 6D Object Pose or Segmentation Masks)

- We also run a MoveIt motion planning launch file, since the initial experiments are benchmarked only against MoveIt

- Lastly, we have a rviz visualization terminal and a terminal to run the main grasping script.

- Ensure proper sourcing of ROS workspace and conda environment activation.

- Start the appropriate algorithm from below in a separate terminal and verify that the required topics are being published.

- Once verified, `cd src/` and start the grasping script: run `python bench_model_based_grasping.py`. See its command line args for more info.

  - `--pose_method`: From {"gazebo", "isaac", "posecnn", "poserbpf"}

  - `--obj_order`: From {"random", "nearest_first"}

  - `--scene_idx`: Scene id for which you'll test the algorithm
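To make the accepted values concrete, here is a minimal `argparse` sketch that mirrors the three flags listed above. It is not the parser used by `bench_model_based_grasping.py`: treating every flag as required and `--scene_idx` as an integer are assumptions made for illustration, and the real script may define defaults or further options.

```python
import argparse


def build_parser():
    """Build a parser that accepts only the documented flag values (illustrative sketch)."""
    parser = argparse.ArgumentParser(
        description="Sketch of the documented model-based grasping flags"
    )
    parser.add_argument(
        "--pose_method",
        choices=["gazebo", "isaac", "posecnn", "poserbpf"],
        required=True,  # assumption: the real script may provide a default
    )
    parser.add_argument(
        "--obj_order",
        choices=["random", "nearest_first"],
        required=True,  # assumption
    )
    parser.add_argument("--scene_idx", type=int, required=True)  # assumption: integer id
    return parser


# Parse an explicit argument list so the example does not depend on sys.argv.
args = build_parser().parse_args(
    ["--pose_method", "isaac", "--obj_order", "nearest_first", "--scene_idx", "68"]
)
print(args.pose_method, args.obj_order, args.scene_idx)  # isaac nearest_first 68
```

Restricting values with `choices` makes a mistyped method name fail immediately instead of partway through an experiment.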

Reference repo: IRVLUTD/PoseCNN-PyTorch-NV-Release

- `cd PoseCNN-PyTorch-NV-Release`

- `conda activate benchmark`

- term1: `rosrun rviz rviz -d ./ros/posecnn.rviz`

- term2: `./experiments/scripts/ros_ycb_object_test_fetch.sh $GPU_ID`

- More info: check out README

Reference repo: IRVLUTD/posecnn-pytorch

- `cd posecnn-pytorch`

- `conda activate benchmark`

- term1: `./experiments/scripts/ros_ycb_object_test_subset_poserbpf_realsense_ycb.sh $GPU_ID $INSTANCE_ID`

- term2: `./experiments/scripts/ros_poserbpf_ycb_object_test_subset_realsense_ycb.sh $GPU_ID $INSTANCE_ID`

- Rviz visualization: `rviz -d ros/posecnn_fetch.rviz`

- Check out README for more info

- Start the appropriate algorithm from below in a separate terminal and verify that the required topics are being published.

  - First run the segmentation script

  - Next run the 6dof grasping script

  - Finally run the grasping pipeline script

- Once verified, you can start the grasping script: `cd src/` and run `python bench_6dof_segmentation_grasping.py`. See its command line args for more info.

  - `--grasp_method`: From {"graspnet", "contact_gnet"}

  - `--seg_method`: From {"uois", "msmformer"}

  - `--obj_order`: From {"random", "nearest_first"}

  - `--scene_idx`: Scene id for which you'll test the algorithm
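When covering several method combinations, it can be convenient to list the command lines up front. The sketch below enumerates every combination of the documented flag values for a set of scene ids. The `sweep_commands` helper is hypothetical and only builds strings; it does not launch anything, and the matching segmentation and grasping nodes still have to be started separately for each combination.

```python
import itertools
import shlex

# Flag values as documented above.
GRASP_METHODS = ["graspnet", "contact_gnet"]
SEG_METHODS = ["uois", "msmformer"]
OBJ_ORDERS = ["random", "nearest_first"]


def sweep_commands(scene_ids):
    """Return one command line per (scene, grasp method, seg method, object order)."""
    commands = []
    for scene, grasp, seg, order in itertools.product(
        scene_ids, GRASP_METHODS, SEG_METHODS, OBJ_ORDERS
    ):
        argv = [
            "python", "bench_6dof_segmentation_grasping.py",
            "--grasp_method", grasp,
            "--seg_method", seg,
            "--obj_order", order,
            "--scene_idx", str(scene),
        ]
        commands.append(shlex.join(argv))
    return commands


for command in sweep_commands([10]):
    print(command)
```

Each scene id yields 2 x 2 x 2 = 8 command lines, so the 20 selected scenes would give 160 runs in total.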

Reference repo: IRVLUTD/UnseenObjectClustering

- `cd UnseenObjectClustering`

- seg code: `./experiments/scripts/ros_seg_rgbd_add_test_segmentation_realsense.sh $GPU_ID`

- rviz: `rosrun rviz rviz -d ./ros/segmentation.rviz`

Reference repo: YoungSean/UnseenObjectsWithMeanShift

- `cd UnseenObjectsWithMeanShift`

- seg code: `./experiments/scripts/ros_seg_transformer_test_segmentation_fetch.sh $GPU_ID`

- rviz: `rosrun rviz rviz -d ./ros/segmentation.rviz`

Reference repo: IRVLUTD/pytorch_6dof-graspnet

- `cd pytorch_6dof-graspnet`

- grasping code: `./exp_publish_grasps.sh` (`chmod +x` if needed)

Reference repo: IRVLUTD/contact_graspnet

NOTE: This has a different conda environment (contact_graspnet) than others due to a tensorflow dependency.

Check the env_cgnet.yml env file in the reference repo.

- `cd contact_graspnet`

- `conda activate contact_graspnet`

- Run `run_ros_fetch_experiment.sh` in a terminal.

  - In case GPU usage is too high (check via `nvidia-smi`): reduce the number for the `--forward_passes` flag in the shell script

  - If you don't want to see the generated grasp viz, remove the `--viz` flag from the shell script


We utilize a Gazebo simulation environment to generate the scenes. The gazebo_models meshes and the offline grasp dataset (grasp_data) are used in this process. All the scripts for scene generation and final scene selection are located in src/scene_generation/ with a detailed README for the process.

- Launch the RealSense camera

  `roslaunch realsense2_camera rs_aligned_depth.launch tf_prefix:=measured/camera`

- Run the video recording script `src/video_recorder.py`:

  `cd src/`

  `python3 video_recorder.py -s SCENE_ID -m METHOD -o ORDER [-f FILENAME_SUFFIX] [-d SAVE_DIR]`

  To stop capture, `Ctrl + C` should work as a keyboard interrupt.

We used the following steps to setup our ROS workspace. Install the following dependencies into a suitable workspace folder, e.g. ~/bench_ws/

1. Install ROS Noetic

Install using the instructions from here - http://wiki.ros.org/noetic/Installation/Ubuntu

2. Setup ROS workspace

Create and build a ROS workspace using the instructions from here - http://wiki.ros.org/catkin/Tutorials/create_a_workspace

3. Install fetch ROS, Gazebo packages and custom scripts

Install packages using following commands inside the src folder

`git clone -b melodic-devel https://github.com/ZebraDevs/fetch_ros.git`

`git clone --branch gazebo11 https://github.com/fetchrobotics/fetch_gazebo.git`

`git clone https://github.com/ros/urdf_tutorial`

`git clone https://github.com/IRVLUTD/SceneReplica.git`

If you see missing package errors, use `apt install` for `ros-noetic-robot-controllers` and `ros-noetic-rgbd-launch`.

Gazebo or MoveIt Issues:

- `alias killgazebo="killall -9 gazebo & killall -9 gzserver & killall -9 gzclient"` and then use the `killgazebo` command to restart

- Similarly for MoveIt, you can use `killall -9 move_group` if `Ctrl-C` on the moveit launch process is unresponsive