Distracted Drivers Starter Project

This starter project currently ranks in the top 15% of submissions and, with a few minor changes, can reach the top 10%.

Follow the Fomoro cloud setup instructions to get started with AWS spot instances without the hassle of setting up infrastructure. Fomoro provisions GPU instances, tracks model and dataset versions, and allows you to focus on iteration.

Training

Before using this code you must agree to the Kaggle competition's terms of use.

Setup

Feel free to email me or reach out on Twitter if you need help getting started.

- Follow the installation guide for Fomoro.

- Fork this repo.

- Clone the forked repo.

Dataset Preparation

In this step we'll upload the dataset to Fomoro for training.

- Download the images and drivers list into the "dataset" folder:

- Unzip both into the "dataset" folder so it looks like this:
  - distracted-drivers-keras/
    - dataset/
      - imgs/
        - train/
        - test/
      - driver_imgs_list.csv

- From inside the dataset folder, run `python prep_dataset.py`. This generates a pickled dataset of NumPy arrays.

- Again from inside the dataset folder, run `fomoro data publish`. This uploads the dataset to Fomoro for training.
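The preparation step can be sketched roughly as follows. This is not the repo's `prep_dataset.py`; it assumes `driver_imgs_list.csv` has `subject`, `classname` and `img` columns, and `load_image` is a hypothetical caller-supplied function that returns a fixed-shape NumPy array for each file (for example, a resized image). The function names here are illustrative only.

```python
import csv
import pickle

import numpy as np


def read_driver_rows(csv_path):
    """Return the rows of the drivers list as dicts keyed by column name."""
    with open(csv_path, newline="") as f:
        return list(csv.DictReader(f))


def build_arrays(rows, load_image):
    """Collect images, integer class labels and driver IDs into NumPy arrays.

    load_image(classname, filename) is a hypothetical helper; it must return
    arrays of identical shape so they can be stacked into one array.
    """
    images, labels, drivers = [], [], []
    for row in rows:
        images.append(load_image(row["classname"], row["img"]))
        # Class folders are named "c0".."c9"; drop the "c" to get an int label.
        labels.append(int(row["classname"][1:]))
        drivers.append(row["subject"])
    return np.stack(images), np.array(labels), np.array(drivers)


def serialize_dataset(X, y, drivers):
    """Pickle the arrays together so training code can load them in one call."""
    return pickle.dumps(
        {"X": X, "y": y, "drivers": drivers},
        protocol=pickle.HIGHEST_PROTOCOL,
    )
```

Writing the returned bytes to a file and reading them back with `pickle.load` gives the training script all three arrays at once, which avoids decoding thousands of image files on every run.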

Model Training

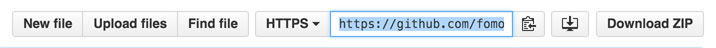

- Copy the clone URL from your fork on GitHub.

- Insert the clone URL into the "repo" line of the `fomoro.toml` config in the root of the repo.

- Insert your Fomoro username as described in the `fomoro.toml` config.

- Add the model to Fomoro: `fomoro model add`.

- Start a training session: `fomoro session start -f`.

Training should take a little over 30 minutes.

Making Changes

When you want to train new code, commit the changes, push them to GitHub, then run `fomoro session start -f`.

Run `fomoro help` for more commands.

Running Locally

To run the code locally, install the dependencies and run `python main.py`.

Model Development

- Tune your learning rate. If the loss diverges, the learning rate is probably too high. If the loss barely decreases, it might be too low.

- Good initialization is important. The initial values of weights can have a significant impact on learning. In general, you want the weights initialized based on the input and/or output dimensions to the layer (see Glorot or He initialization).

- Early stopping can help prevent overfitting, but good regularization is also beneficial. L1 and L2 penalties can be hard to tune, whereas batch normalization and dropout are usually much easier to work with.
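A toy illustration of the learning-rate advice: plain gradient descent on `f(w) = w**2`, whose gradient is `2*w`, so each step multiplies `w` by `(1 - 2*lr)`. This one-dimensional example is chosen for clarity and is not the project's training loop; the three rates are arbitrary.

```python
def gradient_descent(lr, w0=1.0, steps=100):
    """Minimize f(w) = w**2 with fixed-step gradient descent and return the final w."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * w        # derivative of w**2
        w = w - lr * grad     # same as w *= (1 - 2 * lr)
    return w


# Too high, reasonable, and too low learning rates.
for lr in (1.1, 0.1, 1e-4):
    print(f"lr={lr:g}: final |w| = {abs(gradient_descent(lr)):.3g}")
```

With these settings the first rate makes `|w|` grow without bound (the loss diverges), the second drives `w` to almost exactly zero, and the third barely moves it away from its starting value of 1.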
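Glorot and He initialization scale the random weights by the layer's fan-in (number of inputs) and fan-out (number of outputs). The NumPy sketch below shows Glorot (Xavier) uniform and He normal initialization for a dense layer's weight matrix. Keras provides built-in versions of both, so this is only meant to make the arithmetic concrete; the 512 x 256 layer size is an arbitrary example.

```python
import numpy as np


def glorot_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform: U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out))."""
    limit = np.sqrt(6.0 / (fan_in + fan_out))
    return rng.uniform(-limit, limit, size=(fan_in, fan_out))


def he_normal(fan_in, fan_out, rng):
    """He normal: N(0, std**2) with std = sqrt(2 / fan_in), commonly paired with ReLU."""
    std = np.sqrt(2.0 / fan_in)
    return rng.normal(0.0, std, size=(fan_in, fan_out))


rng = np.random.default_rng(0)
W_glorot = glorot_uniform(512, 256, rng)
W_he = he_normal(512, 256, rng)

# The variance of U(-a, a) is a**2 / 3, i.e. 2 / (fan_in + fan_out) for Glorot.
print(W_glorot.var(), 2.0 / (512 + 256))
print(W_he.var(), 2.0 / 512)
```

In both cases the empirical variance lands close to its target, which keeps activations from shrinking or exploding as they pass through many layers.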
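Early stopping is usually implemented with a patience counter: training stops once the validation loss has not improved for a set number of epochs, and the weights from the best epoch are kept. The framework-free sketch below uses a made-up loss sequence and `patience` value for illustration; Keras offers an `EarlyStopping` callback for the same purpose.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of per-epoch validation losses.

    Training stops after `patience` consecutive epochs without an improvement
    larger than `min_delta`; best_epoch is the epoch whose weights you would keep.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # New best: remember it and reset the patience counter.
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    # Never ran out of patience: training runs to the last epoch.
    return best_epoch, len(val_losses) - 1


# Validation loss improves, then starts rising as the model overfits.
losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
print(early_stopping_epoch(losses, patience=3))
```

Here the loss bottoms out at epoch 3, so training halts three epochs later at epoch 6 and the epoch-3 weights are the ones to restore.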