Welcome to the official repository of VLM-Eval: A General Evaluation on Video Large Language Models, a framework for the comprehensive evaluation of video large language models (video LLMs). It provides a single, consistent benchmark for comparing video LLMs across tasks.

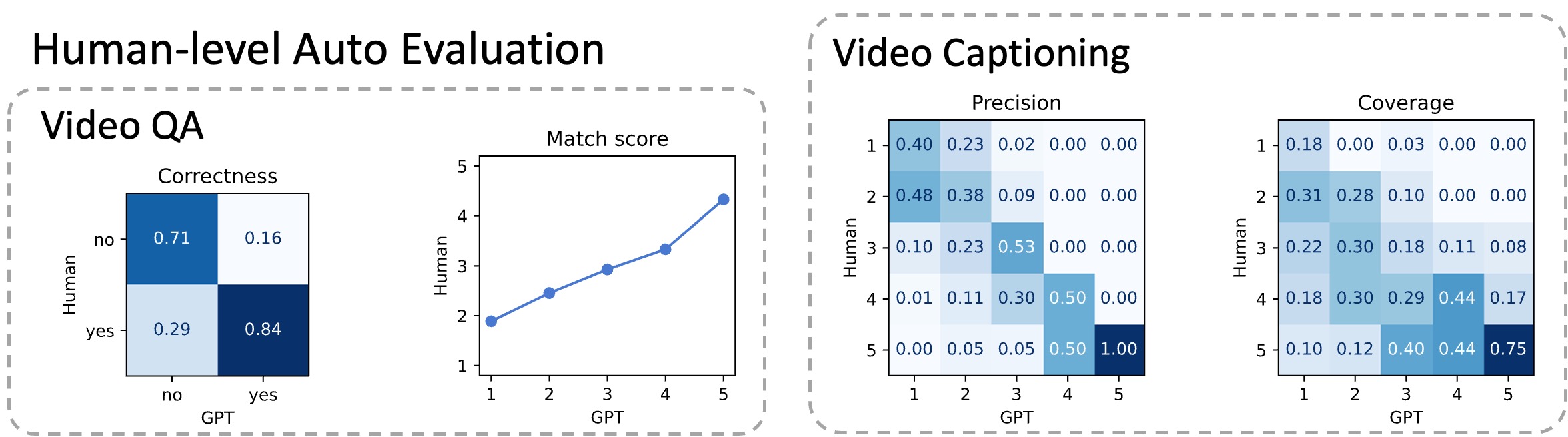

VLM-Eval combines human-verified GPT-based evaluation with retrieval-based evaluation, covering tasks that range from video captioning and question answering to text-video retrieval and action recognition.

The framework's distinguishing feature is this dual evaluation approach. GPT-based evaluation uses a Generative Pre-trained Transformer (GPT) to analyze and judge the captions and answers a model generates, with human-verified prompts keeping the assessment reliable and efficient. Retrieval-based evaluation, in turn, focuses on how the models perform in practical, real-world scenarios, emphasizing their usefulness in downstream applications.

We welcome additions to the list of papers and models, as well as evaluation results.

- Video-LLaMA: An Instruction-tuned Audio-Visual Language Model for Video Understanding Paper Github Demo

- VideoChat: Chat-Centric Video Understanding Paper Github

- Video-ChatGPT: Towards Detailed Video Understanding via Large Vision and Language Models Paper Github

- Video-LLaVA: Learning United Visual Representation by Alignment Before Projection Paper Github Demo

- VideoChat2: MVBench: A Comprehensive Multi-modal Video Understanding Benchmark Paper Github Demo

- LLaMA-VID: An Image is Worth 2 Tokens in Large Language Models Paper Github Demo

The following datasets are used in our training.

| Dataset | Task Domain | Scale |
|---|---|---|
| WebVid | Video captioning | 10M |
| NExT-QA | Video QA | 5K |
| DiDeMo | Video captioning, temporal localization | 10K |
| MSRVTT | Video QA, video captioning | 10K |
| MSVD | Video QA, video captioning | 2K |
| TGIF-QA | Video QA | 72K |
| HMDB51 | Action recognition | 7K |
| UCF101 | Action recognition | 13K |
| VideoInstruct-100K | Chat | 100K |

Other datasets:

| Dataset | Task Domain | Scale |
|---|---|---|
| VideoChatInstruct-11K | Chat | 11K |
| Valley-webvid2M-Pretrain-703K | Video captioning | 703K |
| Valley-Instruct-73k | Chat | 73K |
| Kinetics-400 | Action recognition | 650K |

The benchmark results below compare the capabilities of different video LLMs under VLM-Eval.

| Method | QA Acc | QA Match | Cap Prec | Cap Cov | T2V Acc5 | V2T Acc5 | Act Acc1 | Act Acc5 |
|---|---|---|---|---|---|---|---|---|
| Video-LLaMA | 32.2 | 2.26 | 1.99 | 2.08 | 23.4 | 23.4 | 28.2 | 47.6 |
| VideoChat | 41.6 | 2.63 | 2.02 | 2.16 | 25.8 | 30.3 | 36.0 | 56.8 |
| Video-ChatGPT | 46.2 | 2.84 | 2.49 | 2.65 | 28.2 | 32.8 | 40.3 | 64.5 |
| Video-LLaVA (VLM-Eval) | 48.8 | 2.98 | 2.75 | 2.86 | 33.5 | 31.9 | 43.0 | 64.3 |
| Video-LLaVA (Lin et al.) | 48.0 | 2.90 | 2.18 | 2.29 | 29.7 | 31.4 | 41.3 | 63.9 |
| VideoChat2 | 44.6 | 2.80 | 2.29 | 2.42 | 28.9 | 28.7 | 35.6 | 55.4 |
| LLaMA-VID | 50.1 | 2.97 | 2.18 | 2.25 | 29.4 | 30.1 | 45.1 | 67.4 |

QA Acc and QA Match are the GPT-judged accuracy and answer-match score for video question answering; Cap Prec and Cap Cov are GPT-judged caption precision and coverage; T2V Acc5 and V2T Acc5 are text-to-video and video-to-text retrieval accuracy at top 5; Act Acc1 and Act Acc5 are top-1 and top-5 action recognition accuracy.
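The retrieval and action-recognition columns are top-k accuracies. The sketch below is a generic illustration, not VLM-Eval's implementation, and the helpers `top_k_accuracy` and `transpose` are hypothetical. It assumes a similarity matrix (for example, CLIP similarities) where row `i` is a query and `targets[i]` is the index of that query's correct candidate. For paired text-to-video retrieval the targets are the diagonal, video-to-text uses the transposed matrix, and for action recognition the candidates would be the class names.

```python
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], targets: Sequence[int], k: int) -> float:
    """Return the percentage of queries whose correct candidate ranks in the top k.

    scores[i][j] is the similarity between query i and candidate j;
    targets[i] is the index of the correct candidate for query i.
    """
    if len(scores) != len(targets) or not targets:
        raise ValueError("need one target per query and at least one query")
    hits = 0
    for row, target in zip(scores, targets):
        # Rank candidate indices by descending similarity and keep the k best.
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        if target in ranked[:k]:
            hits += 1
    return 100.0 * hits / len(targets)


def transpose(matrix: Sequence[Sequence[float]]) -> list:
    """Swap the query and candidate axes (e.g. text-to-video -> video-to-text)."""
    return [list(column) for column in zip(*matrix)]


if __name__ == "__main__":
    # Toy text-to-video similarities: caption i belongs to video i (diagonal targets).
    sim = [
        [0.9, 0.1, 0.3],
        [0.2, 0.4, 0.8],
        [0.1, 0.7, 0.6],
    ]
    diagonal = list(range(len(sim)))
    print(top_k_accuracy(sim, diagonal, k=1))             # T2V Acc@1
    print(top_k_accuracy(sim, diagonal, k=2))             # T2V Acc@2
    print(top_k_accuracy(transpose(sim), diagonal, k=1))  # V2T Acc@1
```

On this toy matrix, T2V Acc@1 and V2T Acc@1 are both about 33.3 and T2V Acc@2 is 100.0; the table's Acc5 columns use k=5 over the full candidate set.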

This section explains how to get started with VLM-Eval: setting up the environment and running the evaluation scripts on example prediction files. At present, you must download the videos from the original datasets yourself. We also encourage you to ask us to evaluate your open-source video LLM.

First, set your OpenAI API key as an environment variable:

```bash
export OPENAI_API_KEY="your api key here"
```

The following command uses GPT-3.5 to evaluate the video captions generated for the MSVD dataset. A full run uses roughly 62K prompt tokens and 14K completion tokens, which costs only about $0.09.
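The $0.09 figure follows directly from the token counts. The sketch below assumes GPT-3.5 Turbo prices of $0.001 per 1K prompt tokens and $0.002 per 1K completion tokens (the `gpt-3.5-turbo-1106` list prices announced in November 2023). These prices are our assumption rather than something VLM-Eval states, and they may have changed since; `estimate_cost` is a hypothetical helper.

```python
# Assumed GPT-3.5 Turbo list prices in USD per 1K tokens (gpt-3.5-turbo-1106,
# late 2023); check OpenAI's current pricing before relying on them.
PROMPT_PRICE_PER_1K = 0.001
COMPLETION_PRICE_PER_1K = 0.002


def estimate_cost(prompt_tokens: int, completion_tokens: int) -> float:
    """Estimated USD cost of a run, given its total token usage."""
    prompt_cost = (prompt_tokens / 1000) * PROMPT_PRICE_PER_1K
    completion_cost = (completion_tokens / 1000) * COMPLETION_PRICE_PER_1K
    return prompt_cost + completion_cost


if __name__ == "__main__":
    # Token counts quoted for the MSVD caption evaluation.
    print(f"${estimate_cost(62_000, 14_000):.2f}")
```

Running it prints `$0.09` (0.062 for prompts plus 0.028 for completions).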

Some errors during GPT evaluation are normal; the evaluation code automatically retries failed requests.
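Automatic retrying of this kind is commonly implemented as a bounded retry loop with exponential backoff. The following is a minimal, generic sketch, not the repository's code: `call_with_retries` and its parameters are hypothetical, and the attempt limit is named `max_try_times` only to mirror the `--max_try_times` flag in the commands, which we assume caps the number of attempts.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retries(request: Callable[[], T], max_try_times: int = 15, base_delay: float = 1.0) -> T:
    """Call `request` until it succeeds, trying at most `max_try_times` times.

    Waits with exponential backoff plus jitter between attempts and re-raises
    the last error if every attempt fails.
    """
    if max_try_times < 1:
        raise ValueError("max_try_times must be at least 1")
    for attempt in range(1, max_try_times + 1):
        try:
            return request()
        except Exception:  # In practice, catch only transient API errors.
            if attempt == max_try_times:
                raise
            # Wait 1x, 2x, 4x, ... base_delay, scaled by random jitter in [0.5, 1.5].
            time.sleep(base_delay * 2 ** (attempt - 1) * random.uniform(0.5, 1.5))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    attempts = {"n": 0}

    def flaky_request() -> str:
        # Simulated API call that fails twice before succeeding.
        attempts["n"] += 1
        if attempts["n"] < 3:
            raise ConnectionError("simulated transient failure")
        return "ok"

    print(call_with_retries(flaky_request, max_try_times=5, base_delay=0.0))
```

The jitter spreads out retries from parallel workers so they do not all hit the API at the same moment.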

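Download the prediction file `msvd_cap_results_final.json` from here and the ground-truth (GT) file `MMGPT_evalformat_MSVD_Caption_test.json` from here. In the commands below, replace `path/to/` and the `--gt` path with the locations of your downloaded files.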

```bash
python -m eval_bench.gpt_eval.evaluate_cap_pr_v2 \
    --pred_path path/to/msvd_cap_results_final.json \
    --gt /data/data/labels/test/MMGPT_evalformat_MSVD_Caption_test.json \
    --output_dir results/ours/msvd_cap_pr/ \
    --output_json results/ours/acc_msvd_cap_pr.json \
    --out_path results/ours/cap_pr_metric_msvd.json \
    --num_tasks 8 \
    --kernel_size 5 \
    --max_try_times 15
```

Retrieval-based evaluation runs on the same predictions and ground truth:

```bash
python -m eval_bench.eval_cap_ret \
    --eval_task retrieval \
    --pred path/to/msvd_cap_results_final.json \
    --gt /data/data/labels/test/MMGPT_evalformat_MSVD_Caption_test.json \
    --clip_model ViT-B/32
```

The same script also runs the caption task on these files:

```bash
python -m eval_bench.eval_cap_ret \
    --eval_task caption \
    --pred path/to/msvd_cap_results_final.json \
    --gt /data/data/labels/test/MMGPT_evalformat_MSVD_Caption_test.json
```

For video QA, download the prediction file `msvd_qa_preds_final.json` from here. No separate ground-truth file is needed, because the ground truth is already embedded in the prediction file.
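For intuition about the QA Acc and QA Match columns, here is one way per-question GPT judgments could be aggregated. This is an assumption-based sketch, not the `evaluate_qa_msvd` implementation: it assumes, as in GPT-assisted QA protocols such as Video-ChatGPT's, that GPT returns a yes/no verdict and a numeric match score for each question, stored under the hypothetical field names `pred` and `score`.

```python
from typing import Dict, List, Tuple


def aggregate_qa(judgments: List[Dict]) -> Tuple[float, float]:
    """Return (accuracy in %, mean match score) over per-question GPT judgments.

    Each judgment is assumed to look like {"pred": "yes" | "no", "score": number}.
    """
    if not judgments:
        raise ValueError("no judgments to aggregate")
    # A question counts as correct when GPT's verdict is "yes".
    correct = sum(1 for j in judgments if str(j["pred"]).strip().lower() == "yes")
    # The match score is averaged over all questions, correct or not.
    mean_score = sum(float(j["score"]) for j in judgments) / len(judgments)
    return 100.0 * correct / len(judgments), mean_score


if __name__ == "__main__":
    sample = [
        {"pred": "yes", "score": 4},
        {"pred": "no", "score": 1},
        {"pred": "yes", "score": 5},
        {"pred": "no", "score": 2},
    ]
    accuracy, match = aggregate_qa(sample)
    print(f"QA Acc: {accuracy:.1f}  QA Match: {match:.2f}")
```

This toy sample prints `QA Acc: 50.0  QA Match: 3.00`.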

```bash
python -m eval_bench.gpt_eval.evaluate_qa_msvd \
    --pred_path path/to/msvd_qa_preds_final.json \
    --output_dir results/ours/msvd_qa/ \
    --output_json results/ours/acc_msvd_qa.json \
    --out_path results/ours/qa_metric_msvd.json \
    --num_tasks 8 \
    --task_name MSVD \
    --kernel_size 10 \
    --max_try_times 15
```

Action recognition (here on UCF101) is evaluated with the `eval_cap_ret` script:

```bash
python -m eval_bench.eval_cap_ret \
    --eval_task action \
    --pred_path mmgpt/0915_act/ucf101_action_preds_final.json \
    --out_path results/mmgpt/0915_act/ucf101_action_preds_final.json \
    --clip_model ViT-B/32
```

Enjoy exploring and implementing VLM-Eval! 💡👩‍💻🌍

This project is licensed under the terms of the MIT License.

VLM-Eval encourages active community participation. Researchers and developers are invited to contribute their papers, models, and evaluation results, fostering a collaborative environment. This section also highlights significant contributions from the community, showcasing a diverse range of insights and advancements in the field.

Contributions from the following sources have shaped this project:

If VLM-Eval helps your research, please cite it using the BibTeX entry below, and consider starring our repository!

```bibtex
@article{li2023vlm,
  title={VLM-Eval: A General Evaluation on Video Large Language Models},
  author={Li, Shuailin and Zhang, Yuang and Zhao, Yucheng and Wang, Qiuyue and Jia, Fan and Liu, Yingfei and Wang, Tiancai},
  journal={arXiv preprint arXiv:2311.11865},
  year={2023}
}
```