SemCity : Semantic Scene Generation with Triplane Diffusion

Jumin Lee*, Sebin Lee*, Changho Jo, Woobin Im, Juhyeong Seon and Sung-Eui Yoon*

We test our code on Ubuntu 20.04 with a single RTX 3090 or 4090 GPU.

git clone https://github.com/zoomin-lee/SemCity.git

conda create -n semcity

conda activate semcity

conda install pytorch==2.1.0 torchvision==0.16.0 torchaudio==2.1.0 pytorch-cuda=11.8 -c pytorch -c nvidia

pip install blobfile matplotlib prettytable tensorboard tensorboardX scikit-learn tqdm

pip install --user -e .

We use the SemanticKITTI and CarlaSC datasets. See dataset.md for detailed data structure.

Please adjust the sequences folder path in dataset/path_manager.py.

Train the Triplane Autoencoder and then the Triplane Diffusion.

You can set dataset using --dataset kitti or --dataset carla.

In/outpainting and semantic scene completion refinement are only possible with SemanticKITTI datasets.

python scripts/train_ae_main.py --save_path exp/ae

When you are finished training the triplane autoencoder, save the triplane. The triplane is a proxy representation of the scene for triplane diffusion training.

python scripts/save_triplane.py --data_name voxels --save_tail .npy --resume {ae.pt path}

If you want to train semantic scene completion refinement, also save the triplane of the result of the ssc method (e.g. monoscene).

python scripts/save_triplane.py --data_name monoscene --save_tail _monoscene.npy --resume {ae.pt path}

For training for semantic scene generation or in/outpainting,

python scripts/train_diffusion_main.py --triplane_loss_type l2 --save_path exp/diff

For training semantic scene completion refinement,

python scripts/train_diffusion_main.py --ssc_refine --refine_dataset monoscene --triplane_loss_type l1 --save_path exp/diff

In dataset/path_manager.py, adjust the triplane autoencoder and triplane diffusion .pt paths to AE_PATH and DIFF_PATH.

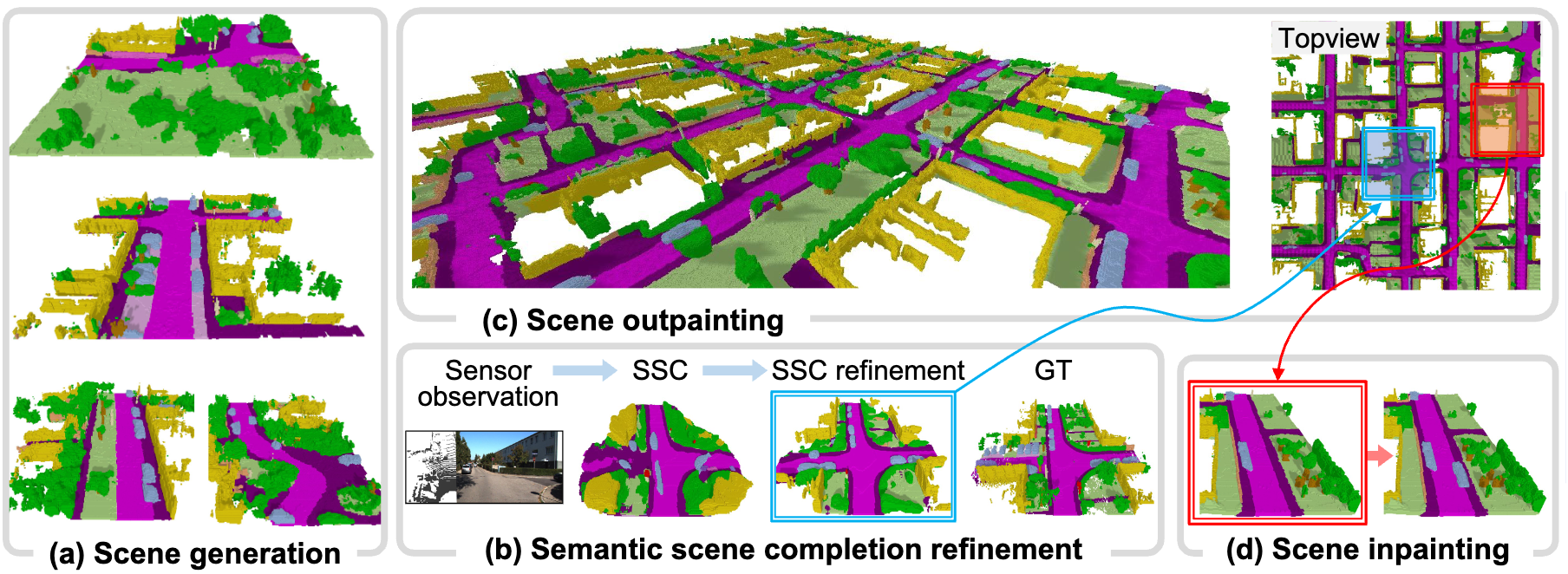

To generate 3D semantic scene like fig(a),

python sampling/generation.py --num_samples 10 --save_path exp/gen

For semantic scene completion refinement like fig(b),

python sampling/ssc_refine.py --refine_dataset monoscene --save_path exp/ssc_refine

Currently, we're only releasing the code to outpaint twice the original scene.

python sampling/outpainting.py --load_path figs/000840.label --save_path exp/out

For inpainting, as in fig(d), you can define the region (top right, top left, bottom right, bottom left) where you want to regenerate.

python sampling/inpainting.py --load_path figs/000840.label --save_path exp/in

We render our scene with pyrender and then evaluate it using torch-fidelity.

The code is partly based on guided-diffusion, Sin3DM and scene-scale-diffusion.

If you find this code useful for your research, please consider citing our paper:

@inproceedings{lee2024semcity,

title={SemCity: Semantic Scene Generation with Triplane Diffusion},

author={Lee, Jumin and Lee, Sebin and Jo, Changho and Im, Woobin and Seon, Juhyeong and Yoon, Sung-Eui},

booktitle={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},

year={2024}

}