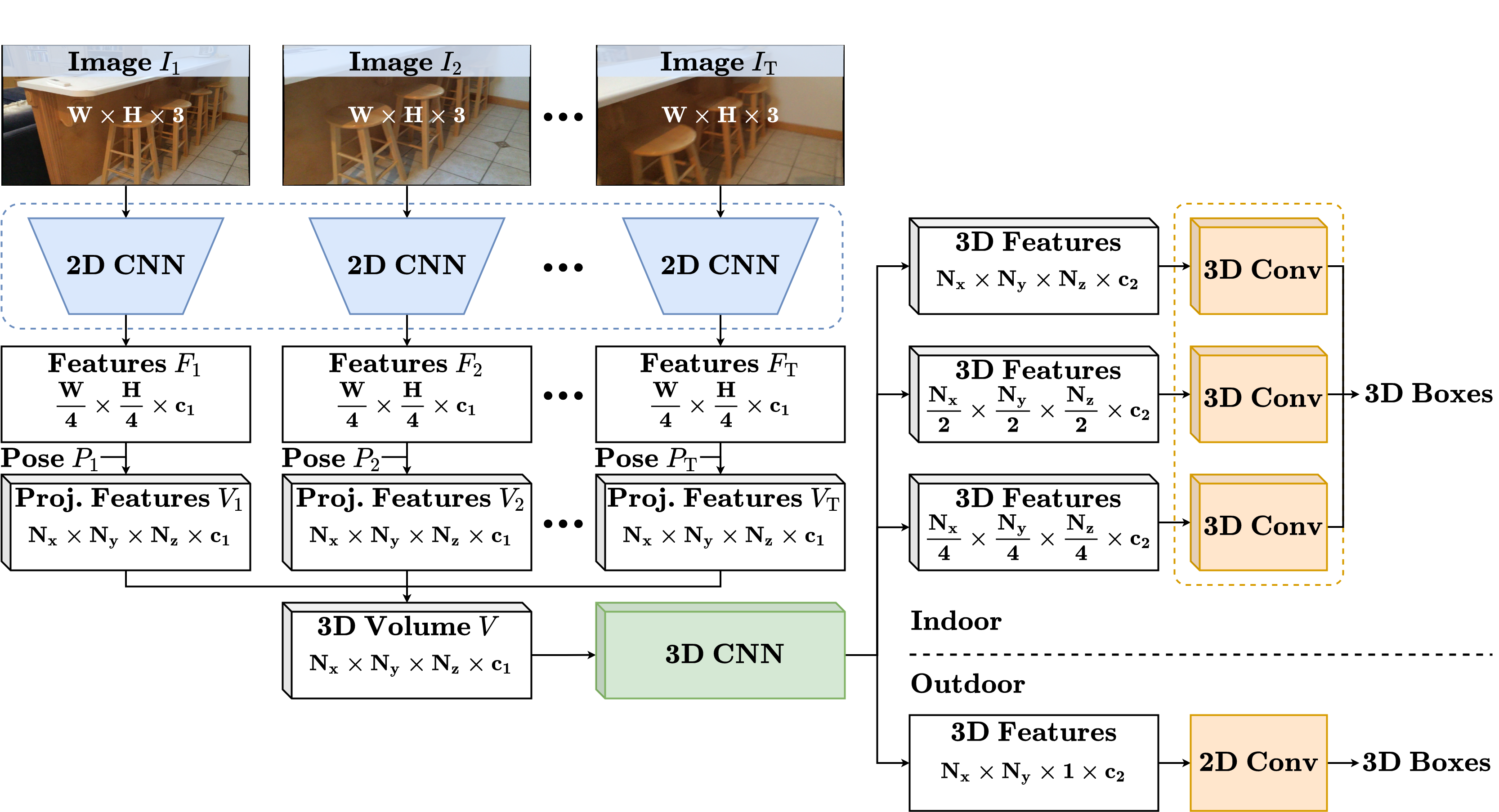

ImVoxelNet: Image to Voxels Projection for Monocular and Multi-View General-Purpose 3D Object Detection

This repository contains an implementation of the monocular/multi-view 3D object detector ImVoxelNet, introduced in our paper:

ImVoxelNet: Image to Voxels Projection for Monocular and Multi-View General-Purpose 3D Object Detection

Danila Rukhovich, Anna Vorontsova, Anton Konushin

Samsung AI Center Moscow

https://arxiv.org/abs/2106.01178

For convenience, we provide a Dockerfile. Alternatively, you can install all required packages manually.

This implementation is based on the mmdetection3d framework. Please refer to the original installation guide, install.md. In addition, rotated_iou must be installed.

Most of the ImVoxelNet-related code is located in the following files:

detectors/imvoxelnet.py,

necks/imvoxelnet.py,

dense_heads/imvoxel_head.py,

pipelines/multi_view.py.

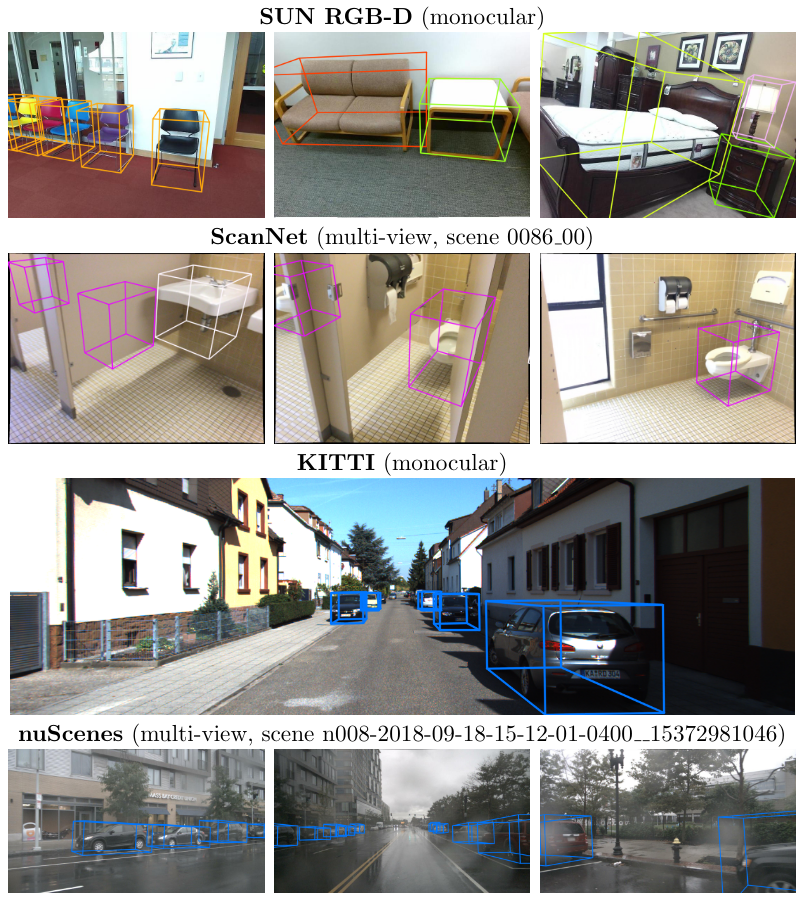

We support three benchmarks based on the SUN RGB-D dataset.

- For the VoteNet benchmark with 10 object categories, follow the instructions in sunrgbd.

- For the PerspectiveNet benchmark with 30 object categories, the same instructions apply; you only need to pass --dataset sunrgbd_monocular when running create_data.py.

- The Total3DUnderstanding benchmark involves detecting objects of 37 categories along with camera pose and room layout estimation. Download the preprocessed data as train.json and val.json and put them in ./data/sunrgbd. Then run:

      python tools/data_converter/sunrgbd_total.py
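Before running the converter for the Total3DUnderstanding benchmark, it may help to confirm that both preprocessed files are in place and parse as JSON. The following is a minimal sketch, not part of this repository; the function name `check_total3d_files` is hypothetical, and it makes no assumption about what the files contain beyond being valid JSON.

```python
import json
from pathlib import Path


def check_total3d_files(data_root="./data/sunrgbd"):
    """Return a list of problems; an empty list means both files look usable."""
    problems = []
    for name in ("train.json", "val.json"):
        path = Path(data_root) / name
        if not path.is_file():
            problems.append(f"missing: {path}")
            continue
        try:
            # Only checks that the file parses; the schema is not validated.
            with path.open() as f:
                json.load(f)
        except json.JSONDecodeError as err:
            problems.append(f"not valid JSON: {path} ({err})")
    return problems
```

Calling `check_total3d_files()` with no arguments inspects ./data/sunrgbd, the location named above.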

ScanNet. Please follow the instructions in scannet.

Note that create_data.py works with point clouds, not RGB images; thus, you should do some preprocessing before running create_data.py.

- First, you should obtain RGB images. We recommend using a script from SensReader.

- Then, put the camera poses and JPG images in the folder with the other ScanNet data:

    scannet
    ├── sens_reader
    │   ├── scans
    │   │   ├── scene0000_00
    │   │   │   ├── out
    │   │   │   │   ├── frame-000001.color.jpg
    │   │   │   │   ├── frame-000001.pose.txt
    │   │   │   │   ├── frame-000002.color.jpg
    │   │   │   │   ├── ....
    │   │   ├── ...

Now, you may run create_data.py with --dataset scannet_monocular.
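Since every image in the layout above is expected to have a matching pose file, a quick consistency check before running create_data.py can save a failed run. The sketch below is illustrative only and not part of this repository: the helper names are hypothetical, and it assumes each pose file holds a matrix written as whitespace-separated numbers, one row per line, which should be verified against your SensReader output.

```python
import math
from pathlib import Path


def find_frame_pairs(out_dir):
    """Return (image, pose) path pairs for frames that have both files."""
    out_dir = Path(out_dir)
    pairs = []
    for image in sorted(out_dir.glob("frame-*.color.jpg")):
        # frame-000001.color.jpg -> frame-000001.pose.txt
        frame_id = image.name[: -len(".color.jpg")]
        pose = out_dir / f"{frame_id}.pose.txt"
        if pose.is_file():
            pairs.append((image, pose))
    return pairs


def read_pose(pose_path):
    """Parse a pose file into a list of rows of floats (assumed text layout)."""
    rows = []
    for line in Path(pose_path).read_text().splitlines():
        if line.strip():
            rows.append([float(value) for value in line.split()])
    return rows


def pose_is_finite(rows):
    """True if every entry is a finite number (no inf or nan)."""
    return all(math.isfinite(value) for row in rows for value in row)
```

For example, `find_frame_pairs("scannet/sens_reader/scans/scene0000_00/out")` lists the frames of one scene that are complete; images without a pose file are skipped rather than reported.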

For KITTI and nuScenes, please follow the instructions in getting_started.md.

For nuScenes, set --dataset nuscenes_monocular.

Please see getting_started.md for basic usage examples.

Training

To start training, run dist_train with ImVoxelNet configs:

    bash tools/dist_train.sh configs/imvoxelnet/imvoxelnet_kitti.py 8

Testing

To test a pre-trained model, run dist_test with ImVoxelNet configs:

    bash tools/dist_test.sh configs/imvoxelnet/imvoxelnet_kitti.py \
        work_dirs/imvoxelnet_kitti/latest.pth 8 --eval mAP

Visualization

Visualizations can be created with the test script.

For better visualizations, you may set score_thr in configs to 0.15 or more:

    python tools/test.py configs/imvoxelnet/imvoxelnet_kitti.py \
        work_dirs/imvoxelnet_kitti/latest.pth --show

| Dataset | Object Classes | Download Link | Log |
|---|---|---|---|
| SUN RGB-D | 37 from Total3DUnderstanding | total_sunrgbd.pth | total_sunrgbd.log |
| SUN RGB-D | 30 from PerspectiveNet | perspective_sunrgbd.pth | perspective_sunrgbd.log |
| SUN RGB-D | 10 from VoteNet | sunrgbd.pth | sunrgbd.log |
| ScanNet | 18 from VoteNet | scannet.pth | scannet.log |
| KITTI | Car | kitti.pth | kitti.log |
| nuScenes | Car | nuscenes.pth | nuscenes.log |

If you find this work useful for your research, please cite our paper:

@article{rukhovich2021imvoxelnet,
  title={ImVoxelNet: Image to Voxels Projection for Monocular and Multi-View General-Purpose 3D Object Detection},
  author={Danila Rukhovich and Anna Vorontsova and Anton Konushin},
  journal={arXiv preprint arXiv:2106.01178},
  year={2021}
}