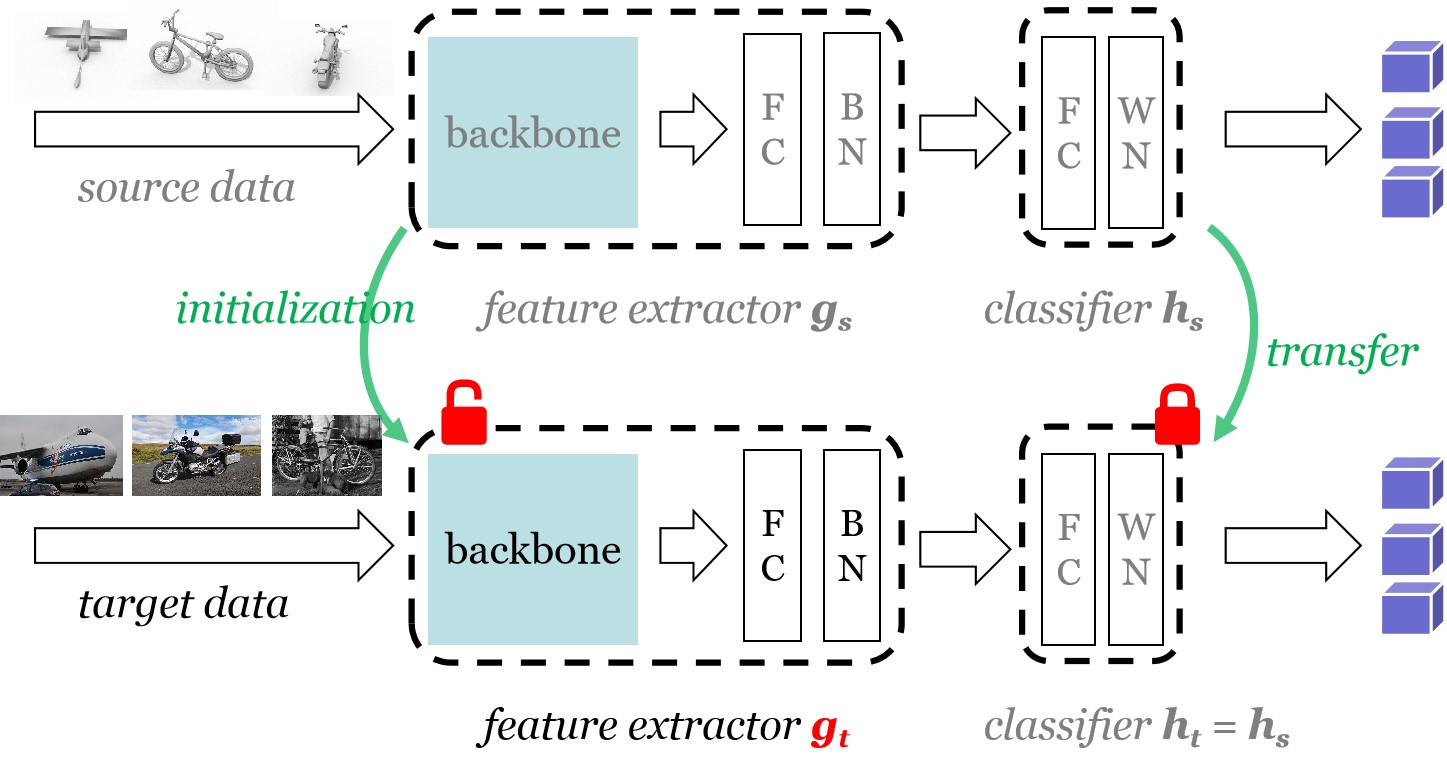

[ICML-2020] Do We Really Need to Access the Source Data? Source Hypothesis Transfer for Unsupervised Domain Adaptation

Attention: A stronger extension (https://arxiv.org/pdf/2012.07297.pdf) of SHOT will be released in a new repository (https://github.com/tim-learn/SHOT-plus).

Note that we have updated the code to also consider the standard learning rate scheduler used in DANN, and we report the new results in the final camera-ready version. Please refer to results.md for detailed results on the various datasets.

We have updated the results for Digits. The results of SHOT-IM for Digits are now stable and promising. (Thanks to wengzejia1 for pointing out the bugs in uda_digit.py.)

- python == 3.6.8
- pytorch == 1.1.0
- torchvision == 0.3.0
- numpy, scipy, sklearn, PIL, argparse, tqdm

- Please manually download the Office, Office-Home, VisDA-C, and Office-Caltech datasets from their official websites, and modify the image paths in each '.txt' file under the folder './object/data/'.

- For the Digits datasets, the code will automatically download the three digit datasets (i.e., MNIST, USPS, and SVHN) into './digit/data/'.
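The manual path edit for the object datasets can be scripted. The sketch below assumes each line of a list file has the form `<image path> <label>` and that all paths share a common prefix to be replaced; both are assumptions about the file layout, and `rewrite_image_list` and `rewrite_all` are hypothetical helper names, not part of this repository.

```python
from pathlib import Path


def rewrite_image_list(text, old_prefix, new_prefix):
    """Replace old_prefix with new_prefix at the start of every image path.

    Assumed line layout: "<image path> <label>". Lines that do not match
    this layout are left unchanged.
    """
    out_lines = []
    for line in text.splitlines():
        # Split from the right so that paths containing spaces survive.
        parts = line.rsplit(" ", 1)
        if len(parts) != 2:
            out_lines.append(line)
            continue
        path, label = parts
        if path.startswith(old_prefix):
            path = new_prefix + path[len(old_prefix):]
        out_lines.append(path + " " + label)
    trailing = "\n" if text.endswith("\n") else ""
    return "\n".join(out_lines) + trailing


def rewrite_all(list_dir, old_prefix, new_prefix):
    """Apply rewrite_image_list in place to every .txt file under list_dir."""
    for txt in Path(list_dir).rglob("*.txt"):
        txt.write_text(rewrite_image_list(txt.read_text(), old_prefix, new_prefix))


# Example call (the prefixes are placeholders for your own setup):
# rewrite_all("./object/data/", "/old/dataset/root", "/my/dataset/root")
```

It is worth backing up the list files first, since `rewrite_all` overwrites them.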

- Unsupervised Domain Adaptation (UDA) on the Digits dataset

- MNIST -> USPS (m2u) SHOT (cls_par = 0.1) and SHOT-IM (cls_par = 0.0)

      cd digit/
      python uda_digit.py --dset m2u --gpu_id 0 --output ckps_digits --cls_par 0.0
      python uda_digit.py --dset m2u --gpu_id 0 --output ckps_digits --cls_par 0.1
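The two runs above differ only in `--cls_par`. As a rough sketch of how that weight is understood to enter the adaptation objective described in the SHOT paper (an information-maximization term plus a pseudo-label cross-entropy term scaled by `cls_par`), the pure-Python example below may help. It is not the repository's implementation: pseudo-labels are assumed to be given, and `shot_objective` and `ent_weight` are hypothetical names used only for illustration.

```python
import math

EPS = 1e-5  # small constant to keep log() finite (an assumed value)


def softmax(logits):
    """Numerically stable softmax over one row of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy of a probability vector."""
    return -sum(p * math.log(p + EPS) for p in probs)


def im_loss(batch_logits):
    """Information maximization: confident per-sample outputs, diverse on average."""
    probs = [softmax(row) for row in batch_logits]
    n, k = len(probs), len(probs[0])
    mean_sample_entropy = sum(entropy(p) for p in probs) / n
    mean_probs = [sum(p[j] for p in probs) / n for j in range(k)]
    # Minimizing this lowers per-sample entropy and raises the entropy
    # of the batch-averaged prediction.
    return mean_sample_entropy - entropy(mean_probs)


def shot_objective(batch_logits, pseudo_labels, cls_par, ent_weight=1.0):
    """cls_par * cross-entropy on pseudo-labels + ent_weight * IM loss."""
    probs = [softmax(row) for row in batch_logits]
    ce = sum(-math.log(p[y] + EPS) for p, y in zip(probs, pseudo_labels)) / len(probs)
    return cls_par * ce + ent_weight * im_loss(batch_logits)
```

With `cls_par = 0.0` the pseudo-label term vanishes and only the information-maximization term remains, which corresponds to the SHOT-IM setting named above.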

- Unsupervised Domain Adaptation (UDA) on the Office dataset

- Train the model on the source domain A (s = 0)

      cd object/
      python image_source.py --trte val --da uda --output ckps/source/ --gpu_id 0 --dset office --max_epoch 100 --s 0

- Adaptation to other target domains D and W, respectively

python image_target.py --cls_par 0.3 --da uda --output_src ckps/source/ --output ckps/target/ --gpu_id 0 --dset office --s 0

- Unsupervised Domain Adaptation (UDA) on the VisDA-C dataset

- Synthetic-to-real

      cd object/
      python image_source.py --trte val --output ckps/source/ --da uda --gpu_id 0 --dset VISDA-C --net resnet101 --lr 1e-3 --max_epoch 10 --s 0
      python image_target.py --cls_par 0.3 --da uda --dset VISDA-C --gpu_id 0 --s 0 --output_src ckps/source/ --output ckps/target/ --net resnet101 --lr 1e-3

- Unsupervised Partial Domain Adaptation (PDA) on the Office-Home dataset

- Train the model on the source domain A (s = 0)

      cd object/
      python image_source.py --trte val --da pda --output ckps/source/ --gpu_id 0 --dset office-home --max_epoch 50 --s 0

- Adaptation to the other target domains C, P, and R, respectively

python image_target.py --cls_par 0.3 --threshold 10 --da pda --dset office-home --gpu_id 0 --s 0 --output_src ckps/source/ --output ckps/target/

- Unsupervised Open-set Domain Adaptation (ODA) on the Office-Home dataset

- Train the model on the source domain A (s = 0)

      cd object/
      python image_source.py --trte val --da oda --output ckps/source/ --gpu_id 0 --dset office-home --max_epoch 50 --s 0

- Adaptation to the other target domains C, P, and R, respectively

python image_target_oda.py --cls_par 0.3 --da oda --dset office-home --gpu_id 0 --s 0 --output_src ckps/source/ --output ckps/target/

- Unsupervised Multi-source Domain Adaptation on the Office-Caltech dataset

- Train the model on the source domains A (s = 0), C (s = 1), and D (s = 2), respectively

      cd object/
      python image_source.py --trte val --da uda --output ckps/source/ --gpu_id 0 --dset office-caltech --max_epoch 100 --s 0
      python image_source.py --trte val --da uda --output ckps/source/ --gpu_id 0 --dset office-caltech --max_epoch 100 --s 1
      python image_source.py --trte val --da uda --output ckps/source/ --gpu_id 0 --dset office-caltech --max_epoch 100 --s 2

- Adaptation to the target domain W (t = 3)

      python image_target.py --cls_par 0.3 --da uda --output_src ckps/source/ --output ckps/target/ --gpu_id 0 --dset office-caltech --s 0
      python image_target.py --cls_par 0.3 --da uda --output_src ckps/source/ --output ckps/target/ --gpu_id 0 --dset office-caltech --s 1
      python image_target.py --cls_par 0.3 --da uda --output_src ckps/source/ --output ckps/target/ --gpu_id 0 --dset office-caltech --s 2
      python image_multisource.py --cls_par 0.0 --da uda --dset office-caltech --gpu_id 0 --t 3 --output_src ckps/source/ --output ckps/target/
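The three source-training commands for this scenario differ only in the `--s` index, so they can be generated in a loop. The sketch below only builds and prints the command lines; the helper name `source_training_commands` and its default arguments are hypothetical conveniences, while the flags themselves are copied from the commands in this README.

```python
import shlex


def source_training_commands(dset, sources, max_epoch, da="uda",
                             gpu_id=0, output="ckps/source/"):
    """Build one image_source.py command (as an argument list) per source index."""
    commands = []
    for s in sources:
        commands.append([
            "python", "image_source.py",
            "--trte", "val",
            "--da", da,
            "--output", output,
            "--gpu_id", str(gpu_id),
            "--dset", dset,
            "--max_epoch", str(max_epoch),
            "--s", str(s),
        ])
    return commands


if __name__ == "__main__":
    # Source domains A (s = 0), C (s = 1), and D (s = 2) of Office-Caltech.
    for cmd in source_training_commands("office-caltech", [0, 1, 2], 100):
        print(" ".join(shlex.quote(arg) for arg in cmd))
```

To actually launch the runs, each argument list could be passed to `subprocess.run(cmd, check=True, cwd="object")`, assuming the script is started from the repository root.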

- Unsupervised Multi-target Domain Adaptation on the Office-Caltech dataset

- Train the model on the source domain A (s = 0)

      cd object/
      python image_source.py --trte val --da uda --output ckps/source/ --gpu_id 0 --dset office-caltech --max_epoch 100 --s 0

- Adaptation to the multiple target domains C, D, and W at the same time

python image_multitarget.py --cls_par 0.3 --da uda --dset office-caltech --gpu_id 0 --s 0 --output_src ckps/source/ --output ckps/target/

- Unsupervised Partial Domain Adaptation (PDA) on the ImageNet-Caltech dataset, without training a source model ourselves (the downloaded PyTorch ResNet50 model is used directly)

- ImageNet -> Caltech (84 classes) [following the protocol in PADA]

      cd object/
      python image_pretrained.py --gpu_id 0 --output ckps/target/ --cls_par 0.3

Please refer to run.sh for all the settings for different methods and scenarios.

If you find this code useful for your research, please cite our paper:

    @inproceedings{liang2020shot,
      title={Do We Really Need to Access the Source Data? Source Hypothesis Transfer for Unsupervised Domain Adaptation},
      author={Liang, Jian and Hu, Dapeng and Feng, Jiashi},
      booktitle={International Conference on Machine Learning (ICML)},
      pages={6028--6039},
      month={July 13--18},
      year={2020}
    }