This repo releases:

- LLaMA 7B ONNX models

- a 400-line Python script that runs them without torch

So you can quantize parts of the model and optimize kernels step by step.

Download the models here:

- huggingface https://huggingface.co/tpoisonooo/llama.onnx/tree/main

- BaiduYun https://pan.baidu.com/s/195axYNz79U6YkJLETNJmXw?pwd=onnx

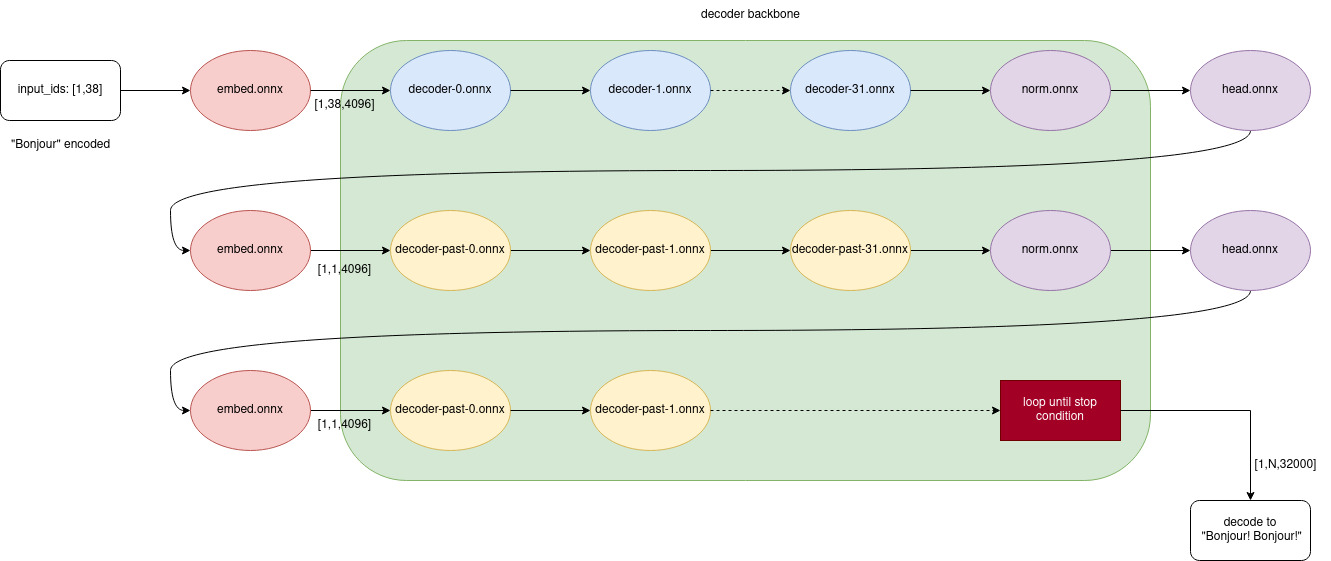

These models converted from alpaca huggingface, here is the graph to call them:

Try the onnxruntime demo. No torch is required, and the precision has been checked.

$ python3 -m pip install -r requirements.txt

$ python3 demo-single.py ${ONNX_DIR} "bonjour"

..

Bonjour.

2023/04/?? add memory plan, add temperature warp

2023/04/07 add onnxruntime demo and tokenizer.model (don't forget to download it)

2023/04/05 init project
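A precision check like the one mentioned for the demo boils down to a maximum absolute difference between two sets of outputs. Below is a minimal sketch, not the script actually used for this project: `max_abs_error` is a hypothetical helper, and the numbers are made-up stand-ins for flattened logits from the two runtimes.

```python
def max_abs_error(reference, candidate):
    """Largest element-wise absolute difference between two equal-length sequences."""
    if len(reference) != len(candidate):
        raise ValueError("outputs must have the same length")
    return max(abs(r - c) for r, c in zip(reference, candidate))


# Made-up values standing in for flattened outputs of the two runtimes.
torch_out = [0.1250, -1.5000, 3.2500]
onnx_out = [0.1260, -1.5005, 3.2490]

print("max abs error:", max_abs_error(torch_out, onnx_out))
```

In practice you would flatten the real output tensors of both runtimes for the same input and compare them the same way.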

- Any `logits_warper`, `logits_processor`, or `BeamSearch` is not implemented yet, so the results will not be good. Please wait for the next version!

- I have compared the output values of `onnxruntime-cpu` and `torch-cuda`; the maximum error is 0.002, which is not bad.

- The current state is equivalent to these configurations:

  - `temperature=1.0`

  - `total_tokens=2000`

  - `top_p=1.0`

  - `top_k=None`

  - `repetition_penalty=1.0`
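To see why these values amount to unmodified decoding, here is a minimal sketch of the usual logits warpers in plain Python. It is not code from the release script: `warp_logits` and `softmax` are hypothetical helpers, and the formulas follow one common formulation of each warper. With the settings listed above, every branch is skipped and the logits come back unchanged. `total_tokens` only limits length, so it is not modeled here.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def warp_logits(logits, prev_tokens, temperature=1.0, top_k=None,
                top_p=1.0, repetition_penalty=1.0):
    """Apply common sampling warpers to raw logits (illustrative only)."""
    out = list(logits)

    # Repetition penalty: push down tokens that were already generated.
    if repetition_penalty != 1.0:
        for t in set(prev_tokens):
            if out[t] > 0:
                out[t] /= repetition_penalty
            else:
                out[t] *= repetition_penalty

    # Temperature: rescale logits; 1.0 leaves them untouched.
    if temperature != 1.0:
        out = [x / temperature for x in out]

    # Top-k: mask everything below the k-th largest logit.
    if top_k is not None and top_k < len(out):
        threshold = sorted(out, reverse=True)[top_k - 1]
        out = [x if x >= threshold else -math.inf for x in out]

    # Top-p: keep the smallest set of tokens whose probability mass reaches top_p.
    if top_p < 1.0:
        probs = softmax(out)
        order = sorted(range(len(out)), key=lambda i: probs[i], reverse=True)
        kept, mass = set(), 0.0
        for i in order:
            kept.add(i)
            mass += probs[i]
            if mass >= top_p:
                break
        out = [x if i in kept else -math.inf for i, x in enumerate(out)]

    return out


logits = [2.0, 1.0, 0.5, -1.0]
# Default arguments mirror the configuration above: nothing is changed.
print(warp_logits(logits, prev_tokens=[0, 1]) == logits)
```

Changing any single argument, for example `top_k=2`, shows which tokens a given warper would mask once it is implemented.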