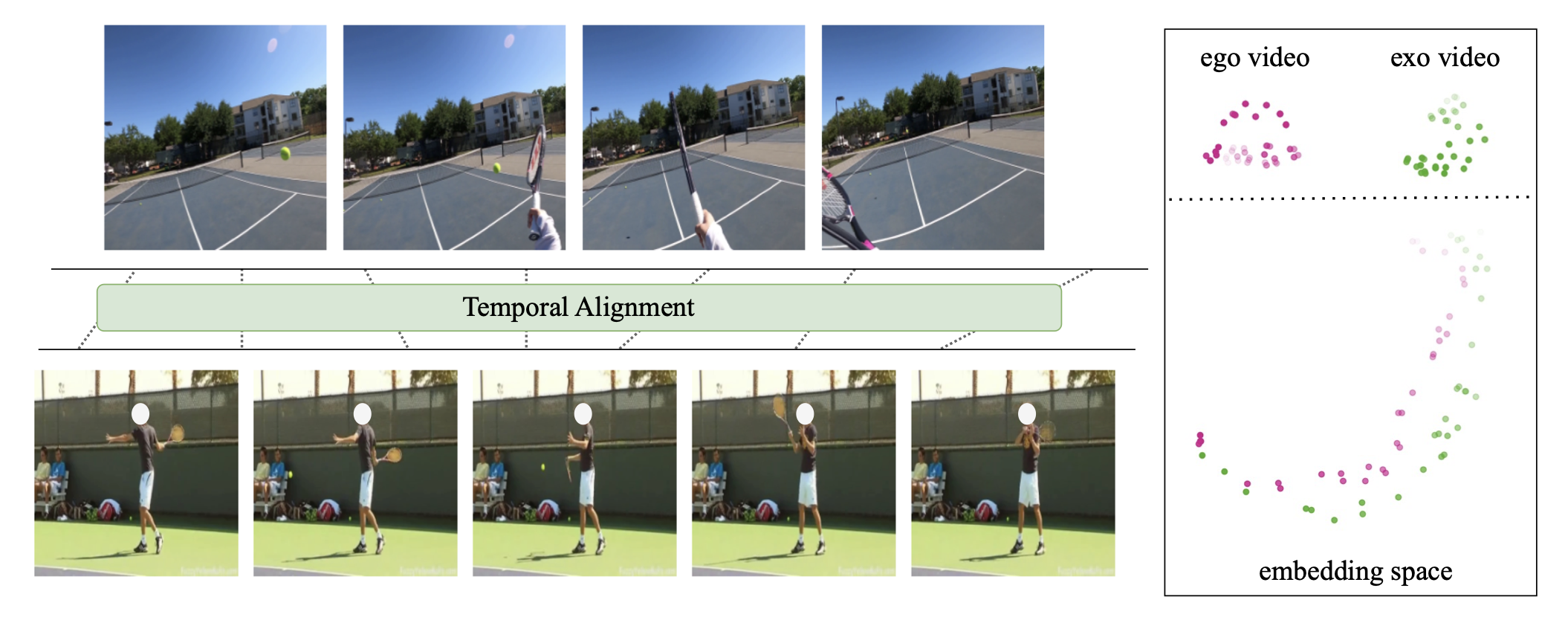

Learning Fine-grained View-Invariant Representations from Unpaired Ego-Exo Videos via Temporal Alignment

Zihui Xue, Kristen Grauman

NeurIPS, 2023

project page | arxiv | bibtex

We present AE2, a self-supervised embedding approach to learn fine-grained action representations that are invariant to the ego-exo viewpoints.

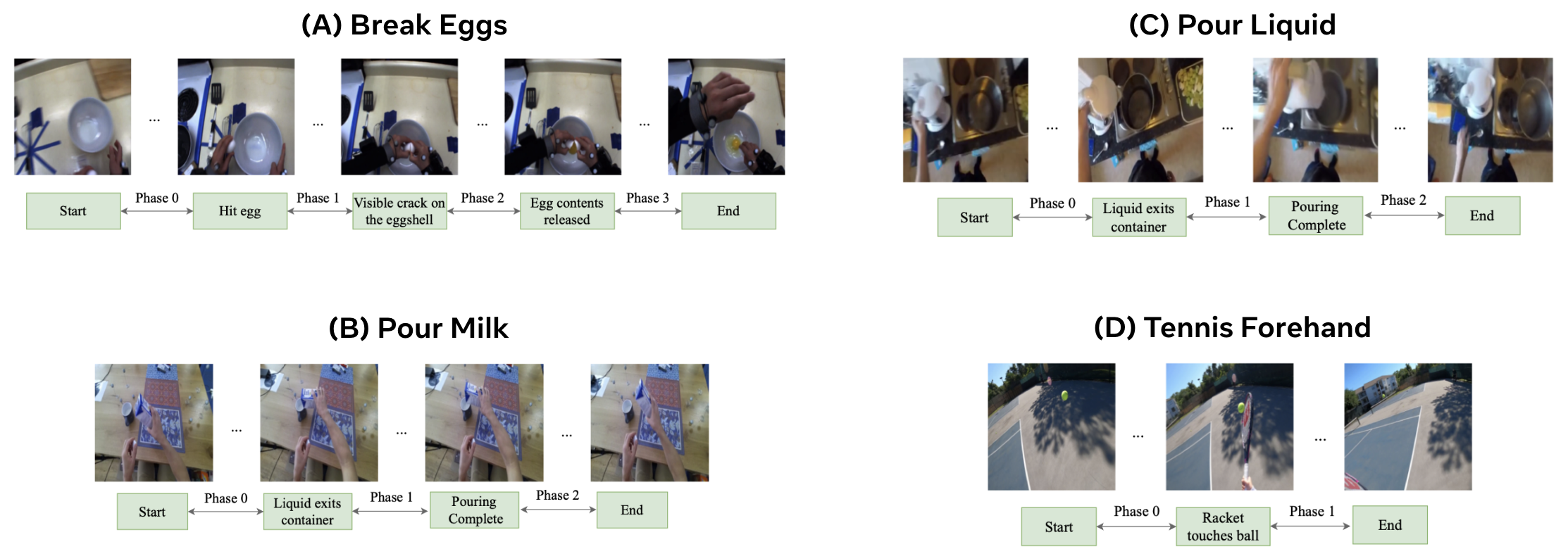

We propose a new ego-exo benchmark for fine-grained action understanding, which consists of four action-specific datasets. For evaluation, we annotate these datasets with dense per-frame labels.

Build a conda environment from environment.yml

conda env create --file environment.yml

conda activate ae2

Download AE2 data and models here and save them to your designated data path.

Modify --dataset_root in utils/config.py to be your data path.

Note: avoid having "ego" anywhere in your root data path, as this could lead to issues.
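A quick sanity check for the note above can be sketched as follows. The helper name `root_contains_ego` is hypothetical and not part of the codebase, and matching case-insensitively is a conservative assumption on our side.

```python
def root_contains_ego(dataset_root: str) -> bool:
    """Return True if the substring 'ego' appears anywhere in the path.

    Hypothetical helper: the check is case-insensitive to stay on the safe side.
    """
    return "ego" in dataset_root.lower()


if __name__ == "__main__":
    # Example paths are placeholders, not paths used by the repository.
    for path in ["/data/AE2_data", "/data/ego_exo/AE2_data"]:
        status = "rename this path" if root_contains_ego(path) else "ok"
        print(f"{path}: {status}")
```

Running this on your intended data path before editing utils/config.py should flag a problematic location early.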

We assemble ego and exo videos from five public datasets and collect an ego tennis dataset. Our benchmark consists of four action-specific ego-exo datasets:

- (A) Break Eggs: ego and exo videos from CMU-MMAC.

- (B) Pour Milk: ego and exo videos from H2O.

- (C) Pour Liquid: ego pour water videos from EPIC-Kitchens and exo pour videos from HMDB51.

- (D) Tennis Forehand: ego videos we collect and exo tennis forehand videos from Penn Action.

Important: We divide data into train/val/test splits. For a fair comparison, we recommend utilizing the validation splits for selecting the best model, and evaluating final model performance on the test data only once. This helps reduce the risk of model overfitting.
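The recommended protocol (select on validation, evaluate on test once) can be sketched as below. The function name, the checkpoint file names, and the scores are all hypothetical placeholders for illustration; they are not outputs of this codebase.

```python
from typing import Dict


def select_best_checkpoint(val_scores: Dict[str, float]) -> str:
    """Return the checkpoint name with the highest validation score.

    Assumes higher is better for the chosen validation metric.
    """
    if not val_scores:
        raise ValueError("val_scores must contain at least one checkpoint")
    return max(val_scores, key=val_scores.get)


if __name__ == "__main__":
    # Placeholder values only, not reported results.
    val_scores = {"epoch_10.ckpt": 0.61, "epoch_20.ckpt": 0.68, "epoch_30.ckpt": 0.66}
    best = select_best_checkpoint(val_scores)
    # Only this single checkpoint would then be evaluated on the test split.
    print("evaluate on test once with:", best)
```

Keeping test data out of the selection loop is what makes numbers comparable across methods.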

Evaluation of the learned representations on four downstream tasks:

- (1) Action phase classification (regular, ego2exo and exo2ego)

- (2) Frame retrieval (regular, ego2exo and exo2ego)

- (3) Action phase progression

- (4) Kendall's tau
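To make metric (4) concrete, here is a minimal sketch of Kendall's tau as it is commonly used for temporal alignment: each frame of a query video retrieves its nearest frame in a reference video, and tau measures how well the retrieved indices preserve temporal order. This is an assumption about the general form of the metric, not the repository's evaluation code; the function names and the use of Euclidean distance are ours.

```python
import numpy as np


def kendalls_tau(order: np.ndarray) -> float:
    """Kendall's tau between `order` and the increasing sequence 0..n-1.

    Tied pairs contribute 0 (no tie correction).
    """
    order = np.asarray(order)
    n = len(order)
    if n < 2:
        raise ValueError("need at least two elements")
    i, j = np.triu_indices(n, k=1)          # all index pairs with i < j
    signs = np.sign(order[j] - order[i])    # +1 concordant, -1 discordant, 0 tie
    return float(signs.sum() / len(i))


def alignment_tau(query: np.ndarray, reference: np.ndarray) -> float:
    """Tau of nearest-neighbor indices from query frames into reference frames.

    Both inputs are (num_frames, dim) arrays of per-frame embeddings.
    """
    dists = np.linalg.norm(query[:, None, :] - reference[None, :, :], axis=-1)
    nearest = dists.argmin(axis=1)
    return kendalls_tau(nearest)
```

A value of 1 means the retrieved frames are in perfect temporal order, and -1 means they are fully reversed.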

We provide pretrained AE2 checkpoints and embeddings on test data here. Modify ckpt_dir in scripts/eval.sh or scripts/eval_extract_embed.sh to be your data path.

# We provide pre-extracted AE2 embeddings (AE2_ckpts/dataset_name_eval) for evaluation

bash scripts/eval.sh

# Additionally, you can use provided AE2 models (AE2_ckpts/dataset_name.ckpt) to extract embeddings for evaluation

bash scripts/eval_extract_embed.sh

We provide training scripts for AE2 on four datasets. Be sure to modify --dataset_root in utils/config.py to be your data path.

Training logs and checkpoints will be saved to ./logs/exp_{dataset_name}/{args.output_dir}.

bash scripts/run.sh dataset_name # choose among {break_eggs, pour_milk, pour_liquid, tennis_forehand}

--task align is for basic AE2 training; --task align_bbox integrates hand and object detection results with an object-centric encoder.

Note

UPDATE (Apr. 2024) on Bounding Box Alignment: It has been observed that the assumption of uniform video resolutions (e.g., [1024, 768] for Break Eggs) does not hold across all videos.

This discrepancy influences --task align_bbox as improper scaling factors were used, resulting in misaligned bounding boxes with hands and objects (see ego/S16_168.256_173.581.mp4 for an example).

Thanks to Mark Eric Endo for the finding!
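The scaling issue described in this note can be illustrated with a small sketch that rescales a box using each video's actual resolution instead of an assumed dataset-wide one. The function name, the (width, height) ordering, and the 224x224 target size are assumptions made for illustration; this is not the fix applied in the codebase.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def scale_bbox(box: Box, src_size: Tuple[int, int], dst_size: Tuple[int, int]) -> Box:
    """Rescale a box from src_size to dst_size, both given as (width, height)."""
    x1, y1, x2, y2 = box
    src_w, src_h = src_size
    dst_w, dst_h = dst_size
    return (x1 * dst_w / src_w, y1 * dst_h / src_h,
            x2 * dst_w / src_w, y2 * dst_h / src_h)


if __name__ == "__main__":
    box = (512.0, 384.0, 1024.0, 768.0)
    # Correct when the video really is 1024x768.
    print(scale_bbox(box, (1024, 768), (224, 224)))
    # The same box in a video of a different (hypothetical) resolution maps elsewhere,
    # which is why a single assumed resolution misplaces the box.
    print(scale_bbox(box, (1280, 960), (224, 224)))
```

Reading the resolution from each video file before computing the scaling factors avoids the mismatch.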

- We implement different alignment objectives: --loss can be set to tcc, dtw, dtw_consistency, dtw_contrastive (ours), or vava.

- Modify --ds_every_n_epoch and --save_every to control the frequency of downstream evaluation and checkpoint saving.

- During training, we only monitor downstream performance on validation data. We suggest picking the best model checkpoint based on validation performance and running evaluation on test data only once (see eval code).
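For readers unfamiliar with the idea behind the dtw-based objectives listed above, the following is a minimal sketch of classic dynamic time warping between two sequences of frame embeddings. It is the textbook, non-differentiable algorithm with a Euclidean frame cost, shown only for intuition; it is not the loss implemented in this codebase, and the function name is hypothetical.

```python
import numpy as np


def dtw_cost(x: np.ndarray, y: np.ndarray) -> float:
    """Minimum cumulative alignment cost between x (n, dim) and y (m, dim)."""
    # Pairwise Euclidean distance between every frame of x and every frame of y.
    cost = np.linalg.norm(x[:, None, :] - y[None, :, :], axis=-1)
    n, m = cost.shape
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Extend the cheapest of the three allowed predecessor alignments.
            acc[i, j] = cost[i - 1, j - 1] + min(
                acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1]
            )
    return float(acc[n, m])
```

Two videos showing the same action at different speeds yield a low cost because frames may be matched many-to-one along a monotonic path.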

If you find our work inspiring or use our codebase in your research, please consider giving a star ⭐ and a citation.

@article{xue2023learning,

title={Learning Fine-grained View-Invariant Representations from Unpaired Ego-Exo Videos via Temporal Alignment},

author={Xue, Zihui and Grauman, Kristen},

journal={NeurIPS},

year={2023}

}