Project Page | Paper | Data (coming soon!)

This repository contains the implementation of the following paper:

Motion-X: A Large-scale 3D Expressive Whole-body Human Motion Dataset

Jing Lin∗¹², Ailing Zeng∗¹, Shunlin Lu∗¹³, Yuanhao Cai², Ruimao Zhang³, Haoqian Wang², Lei Zhang¹

∗ Equal contribution. ¹ International Digital Economy Academy, ² Tsinghua University, ³ The Chinese University of Hong Kong, Shenzhen

Figure 1. Motion samples from our dataset

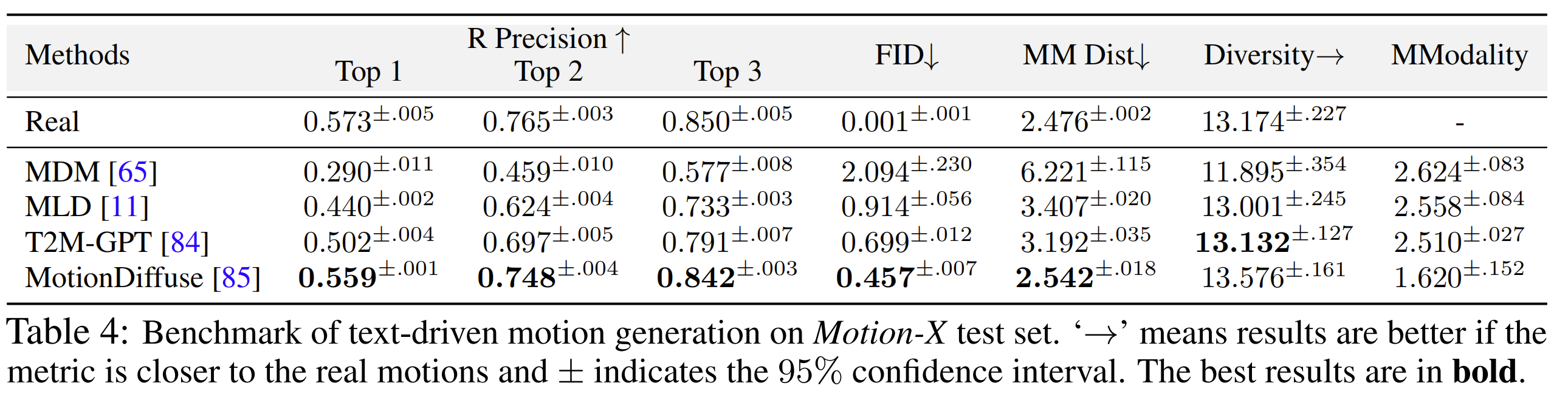

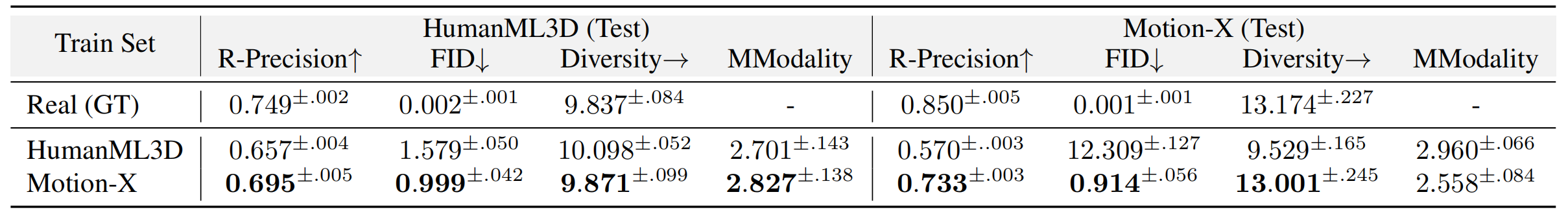

We propose an accurate and efficient annotation pipeline for whole-body motions and their corresponding text labels. Using it, we build a large-scale 3D expressive whole-body human motion dataset from massive online videos and eight existing motion datasets. We unify them into a common format, providing whole-body motion (i.e., SMPL-X) and corresponding text labels.

Labels from Motion-X:

- Motion label: 13.7M whole-body poses and 96K motion clip annotations, represented as SMPL-X parameters.
- Text label: (1) 13.7M frame-level whole-body pose descriptions and (2) 96K sequence-level semantic labels.
- Other modalities: RGB videos, audio, and music information.

Supported Tasks:

- Text-driven 3D whole-body human motion generation

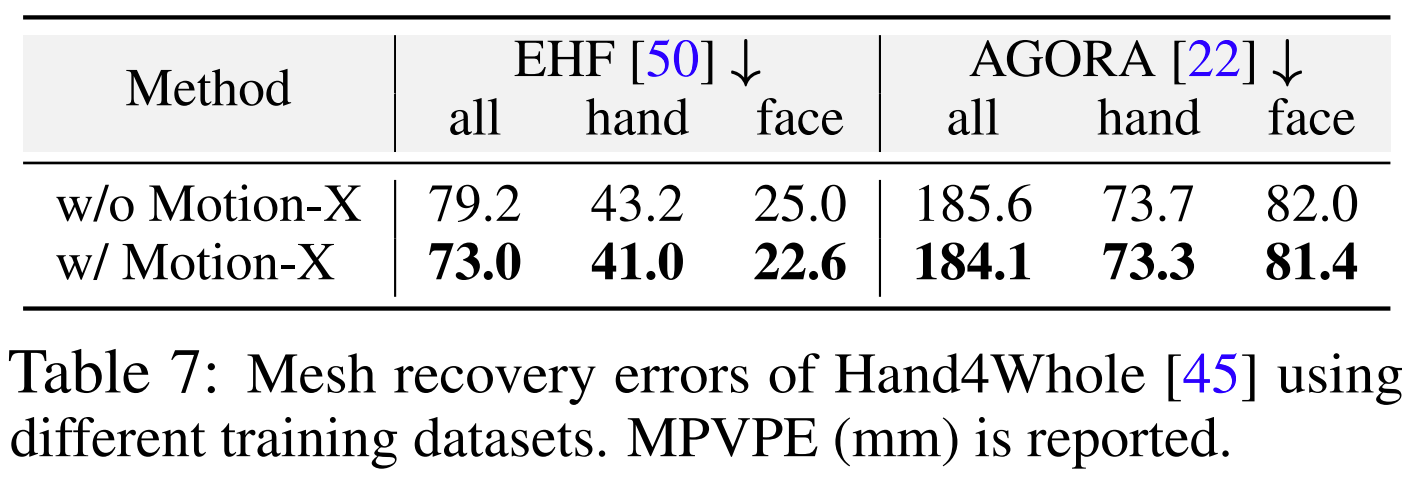

- 3D whole-body human mesh recovery

- Others: Motion pretraining, multi-modality pre-trained models for motion understanding and generation, etc.

Figure 2. Example of the RGB video and annotated motion. RGB videos are from: website1, website2, website3

We hope to disseminate Motion-X in a manner that aligns with the original data sources and complies with the necessary protocols. Here are the instructions:

- Fill out this form to request authorization to use Motion-X for non-commercial purposes. After you submit the form, an email containing the dataset will be delivered to you as soon as we release the dataset. We plan to release Motion-X by Sept. 2023.

- For the motion capture datasets (i.e., AMASS, GRAB, EgoBody),
  - We will not distribute the original motion data, so please download it from the original websites.
  - We will provide the text labels and facial expressions annotated by our team.

- For the other datasets (i.e., NTU-RGBD120, AIST++, HAA500, HuMMan),
  - Please read and acknowledge the licenses and terms of use on the original websites.
  - Once users have obtained the necessary approvals from the original institutions, we will provide the motion and text labels annotated by our team.

| Dataset | Clip Number | Frame Number | Body Motion | Hand Motion | Facial Motion | Semantic Text | Pose Text | Website |
|---|---|---|---|---|---|---|---|---|
| AMASS | 26K | 3.5M | AMASS | AMASS | Ours | HumanML3D | Ours | amass |
| NTU-RGBD120 | 38K | 2.6M | Ours | Ours | Ours | NTU | Ours | rose1 |
| AIST++ | 1.4K | 1.1M | Ours | Ours | Ours | AIST++ | Ours | aist |
| HAA500 | 9.9K | 0.6M | Ours | Ours | Ours | HAA500 | Ours | cse.ust.hk |
| HuMMan | 0.9K | 0.2M | Ours | Ours | Ours | Ours | Ours | HuMMan |
| GRAB | 1.3K | 1.6M | GRAB | GRAB | Ours | GRAB | Ours | grab |
| EgoBody | 1.0K | 0.4M | EgoBody | EgoBody | Ours | Ours | Ours | sanweiliti |
| BAUM | 1.4K | 0.2M | Ours | Ours | Ours | BAUM | Ours | mimoza |
| Online Videos | 15K | 3.4M | Ours | Ours | Ours | Ours | Ours | online |
| Motion-X (Ours) | 96K | 13.7M | Ours | Ours | Ours | Ours | Ours | motion-x |
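As a quick sanity check on the table, the per-dataset counts can be tallied and turned into an average clip length. This is only a sketch: the numbers are copied from the rounded table entries, and the names `SUBSETS`, `tally`, and `mean_clip_length` are hypothetical helpers, not part of the Motion-X release.

```python
# Clip counts (in thousands) and frame counts (in millions), copied from the
# rounded entries of the table. Names here are illustrative, not a Motion-X API.
SUBSETS = {
    "AMASS": (26.0, 3.5),
    "NTU-RGBD120": (38.0, 2.6),
    "AIST++": (1.4, 1.1),
    "HAA500": (9.9, 0.6),
    "HuMMan": (0.9, 0.2),
    "GRAB": (1.3, 1.6),
    "EgoBody": (1.0, 0.4),
    "BAUM": (1.4, 0.2),
    "Online Videos": (15.0, 3.4),
}
REPORTED_TOTAL = (96.0, 13.7)  # the "Motion-X (Ours)" row


def tally(subsets):
    """Sum clip counts (K) and frame counts (M) over all subsets."""
    clips_k = sum(clips for clips, _ in subsets.values())
    frames_m = sum(frames for _, frames in subsets.values())
    return clips_k, frames_m


def mean_clip_length(clips_k, frames_m):
    """Average number of frames per clip."""
    return frames_m * 1e6 / (clips_k * 1e3)


clips_k, frames_m = tally(SUBSETS)
print(f"sum of rows: {clips_k:.1f}K clips, {frames_m:.1f}M frames")
print(f"reported:    {REPORTED_TOTAL[0]:.1f}K clips, {REPORTED_TOTAL[1]:.1f}M frames")
for name, (clips, frames) in SUBSETS.items():
    print(f"{name:<14} ~{mean_clip_length(clips, frames):.0f} frames per clip")
```

The row sums come out slightly below the reported totals, which is presumably an effect of rounding in the per-dataset entries. The reported totals correspond to roughly 143 frames per clip on average.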

- To retrieve motion and text labels, you can simply do:

```python
import numpy as np
import torch


def read_text(path):
    # np.loadtxt parses numbers by default, so read the label files as text
    # (assumes the labels are stored as plain UTF-8 text files)
    with open(path, encoding='utf-8') as f:
        return f.read().strip()


# read motion and save as smplx representation
motion = np.load('motion_data/000001.npy')
motion = torch.tensor(motion).float()
motion_parms = {
    'root_orient': motion[:, :3],            # controls the global root orientation
    'pose_body': motion[:, 3:3+63],          # controls the body
    'pose_hand': motion[:, 66:66+90],        # controls the finger articulation
    'pose_jaw': motion[:, 66+90:66+93],      # controls the jaw pose
    'face_expr': motion[:, 159:159+50],      # controls the face expression
    'face_shape': motion[:, 209:209+100],    # controls the face shape
    'trans': motion[:, 309:309+3],           # controls the global body position
    'betas': motion[:, 312:],                # controls the body shape. Body shape is static
}

# read text labels
semantic_text = read_text('texts/semantic_texts/000001.txt')  # semantic labels
body_text = read_text('texts/body_texts/000001.txt')          # body pose description
hand_text = read_text('texts/hand_texts/000001.txt')          # hand pose description
face_text = read_text('texts/face_texts/000001.txt')          # facial expression
```
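The index ranges in the loading snippet above can also be written as a layout table, which makes it easy to validate the shape of a motion file before slicing it. The sketch below rests on two assumptions: `betas` has 10 entries (the snippet only says `312:`), giving 322 values per frame, and the poses are stored as axis-angle triplets, as is standard for SMPL-X. `LAYOUT`, `split_motion`, and `per_joint` are hypothetical names, and a zero array stands in for a real motion file.

```python
import numpy as np

# (name, width) pairs in the order used by the loading snippet.
# The width of 'betas' (10) is an assumption; the snippet only slices 312 onward.
LAYOUT = [
    ("root_orient", 3),
    ("pose_body", 63),
    ("pose_hand", 90),
    ("pose_jaw", 3),
    ("face_expr", 50),
    ("face_shape", 100),
    ("trans", 3),
    ("betas", 10),
]
MOTION_DIM = sum(width for _, width in LAYOUT)


def split_motion(motion):
    """Slice a (num_frames, MOTION_DIM) array into named parts."""
    motion = np.asarray(motion)
    if motion.ndim != 2 or motion.shape[1] != MOTION_DIM:
        raise ValueError(f"expected shape (T, {MOTION_DIM}), got {motion.shape}")
    parts, start = {}, 0
    for name, width in LAYOUT:
        parts[name] = motion[:, start:start + width]
        start += width
    return parts


def per_joint(pose):
    """Reshape a flattened axis-angle pose (T, 3*J) to (T, J, 3)."""
    return pose.reshape(pose.shape[0], -1, 3)


# Synthetic stand-in for np.load('motion_data/000001.npy')
motion = np.zeros((4, MOTION_DIM), dtype=np.float32)
parts = split_motion(motion)
print({name: part.shape for name, part in parts.items()})
print(per_joint(parts["pose_body"]).shape)  # body joints as (T, J, 3)
print(per_joint(parts["pose_hand"]).shape)  # finger joints of both hands as (T, J, 3)
```

Keeping the widths in one table avoids repeating the offsets by hand and turns a wrongly shaped file into an explicit error instead of silently misaligned slices.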

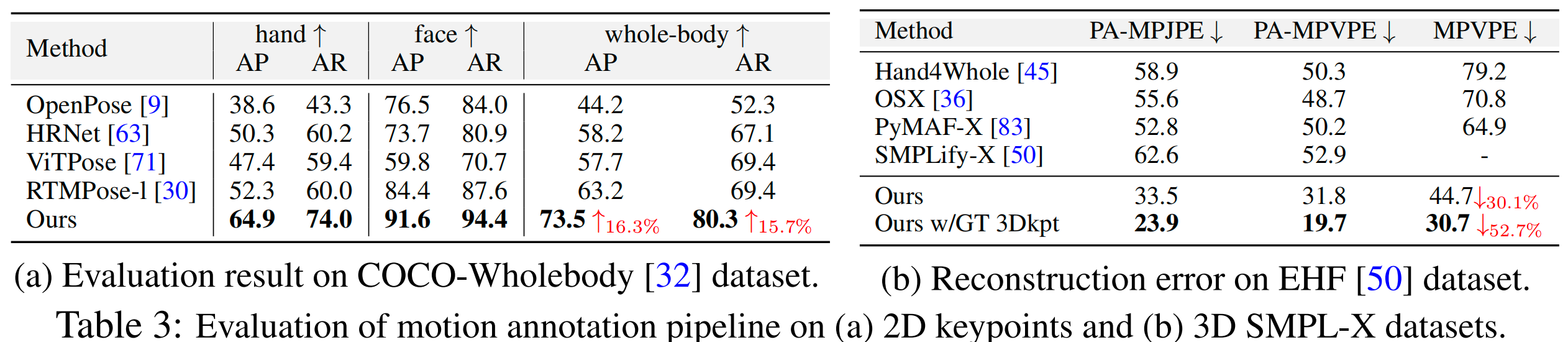

Our annotation pipeline significantly surpasses existing SOTA 2D whole-body models and mesh recovery methods.

If you find this repository useful for your work, please consider citing it as follows:

```bibtex
@article{lin2023motionx,
  title={Motion-X: A Large-scale 3D Expressive Whole-body Human Motion Dataset},
  author={Lin, Jing and Zeng, Ailing and Lu, Shunlin and Cai, Yuanhao and Zhang, Ruimao and Wang, Haoqian and Zhang, Lei},
  journal={arXiv preprint arXiv:2307.00818},
  year={2023}
}
```