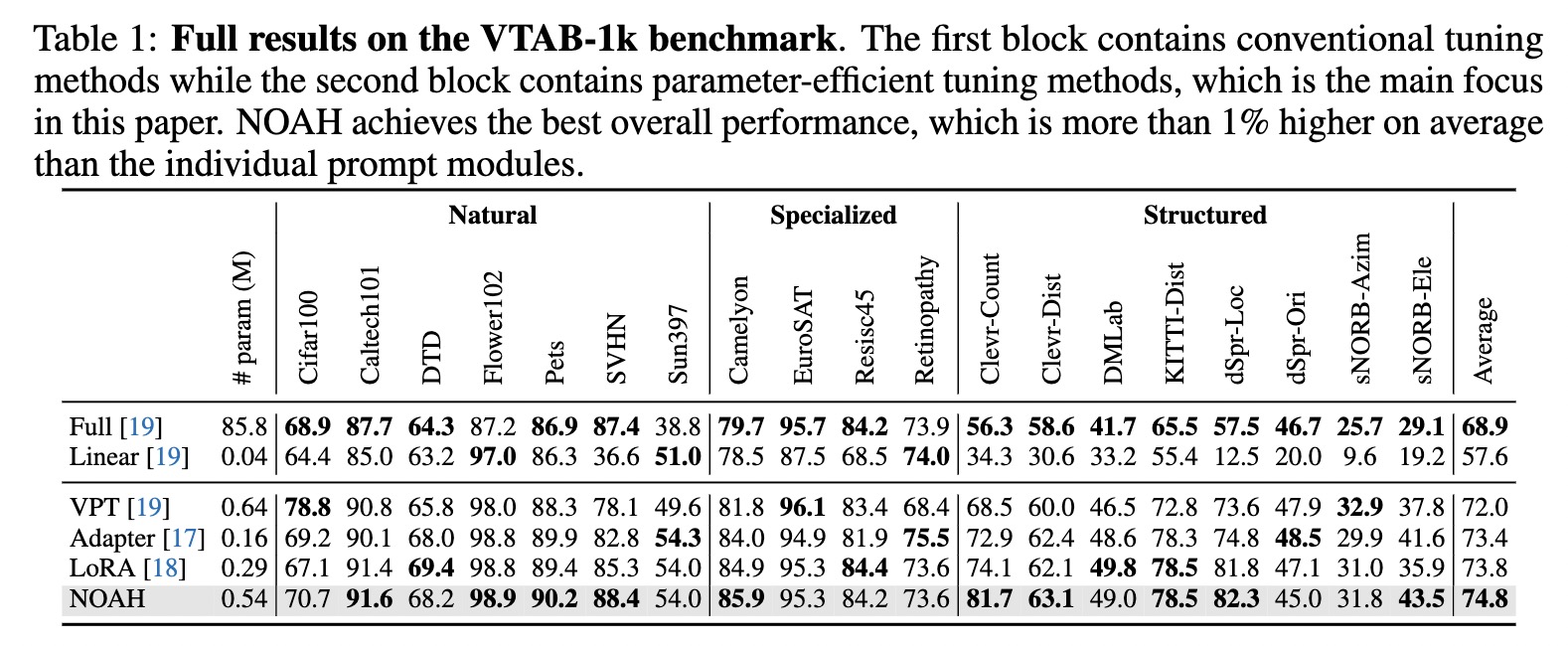

The idea is simple: we view existing parameter-efficient tuning modules, including Adapter, LoRA and VPT, as prompt modules and propose to search for their optimal configuration via neural architecture search. Our approach is named NOAH (Neural prOmpt seArcH).
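To make the idea concrete, here is a minimal sketch of what a per-layer search space over the three prompt modules could look like. The candidate sizes, the dictionary keys and the `sample_config` helper are all hypothetical and chosen only for illustration; they are not taken from this repository's code.

```python
import random

# Hypothetical search space: candidate sizes for each prompt module.
# A size of 0 means the module is switched off in that layer.
SEARCH_SPACE = {
    "adapter_dim": [0, 5, 10],  # Adapter bottleneck width
    "lora_dim": [0, 5, 10],     # LoRA rank
    "vpt_tokens": [0, 5, 10],   # number of VPT prompt tokens
}
NUM_LAYERS = 12  # ViT-B/16 has 12 Transformer blocks


def sample_config(rng, num_layers=NUM_LAYERS):
    """Draw one candidate: an independent choice per module, per layer."""
    return [
        {name: rng.choice(options) for name, options in SEARCH_SPACE.items()}
        for _ in range(num_layers)
    ]


rng = random.Random(0)
config = sample_config(rng)
print(config[0])  # the choices made for the first layer
```

Each sampled configuration describes one candidate combination of the three modules; an architecture search then has to pick among such candidates.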

[05/2022] arXiv paper has been released.

conda create -n NOAH python=3.8

conda activate NOAH

pip install -r requirements.txt

cd data/vtab-source

python get_vtab1k.py

- Images

  Please refer to DATASETS.md to download the datasets.

- Train/Val/Test splits

  Please refer to the files under `data/XXX/XXX/annotations` for detailed information.

We use the VTAB experiments as examples.

| Model | Link |
|---|---|
| ViT B/16 | link |

sh configs/NOAH/VTAB/supernet/slurm_train_vtab.sh PATH-TO-YOUR-PRETRAINED-MODEL

sh configs/NOAH/VTAB/search/slurm_search_vtab.sh PARAMETERS-LIMITS

sh configs/NOAH/VTAB/subnet/slurm_retrain_vtab.sh PATH-TO-YOUR-PRETRAINED-MODEL
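The search step above takes a parameter limit as its argument. The sketch below illustrates, under stated assumptions, how such a limit can constrain candidates: it counts the extra parameters a configuration adds and keeps only those within budget. The search space, the `count_params` and `feasible_candidates` helpers, the choice of applying LoRA to two weight matrices per layer, and the use of a raw parameter count as the unit are all assumptions made for illustration; the actual script may count parameters and search differently. Plain random sampling stands in here for whatever search algorithm the script uses, and ranking candidates by validation accuracy is left out.

```python
import random

EMBED_DIM = 768   # hidden size of ViT-B/16
NUM_LAYERS = 12   # number of Transformer blocks in ViT-B/16
CHOICES = [0, 5, 10]  # hypothetical candidate sizes; 0 disables a module


def count_params(config, d=EMBED_DIM):
    """Count the extra trainable parameters added by one configuration."""
    total = 0
    for layer in config:
        h, r, n = layer["adapter_dim"], layer["lora_dim"], layer["vpt_tokens"]
        if h > 0:
            # Adapter: down-projection (d*h + h) plus up-projection (h*d + d).
            total += 2 * d * h + h + d
        if r > 0:
            # LoRA: two rank-r factors (d*r each) per adapted d x d matrix.
            # Assumption: two matrices per layer are adapted (query and value).
            total += 2 * (2 * d * r)
        # VPT: n learnable prompt tokens of width d.
        total += n * d
    return total


def feasible_candidates(limit, trials, rng):
    """Randomly sample configurations and keep those within the budget."""
    kept = []
    for _ in range(trials):
        config = [
            {
                "adapter_dim": rng.choice(CHOICES),
                "lora_dim": rng.choice(CHOICES),
                "vpt_tokens": rng.choice(CHOICES),
            }
            for _ in range(NUM_LAYERS)
        ]
        if count_params(config) <= limit:
            kept.append(config)
    return kept


rng = random.Random(0)
candidates = feasible_candidates(limit=200_000, trials=200, rng=rng)
print(len(candidates), "of 200 sampled configurations fit the budget")
```

A tighter limit shrinks the feasible set, which is why the search and the retraining steps depend on the value passed at this stage.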

If you use this code in your research, please cite this work.

@inproceedings{zhang2022NOAH,
  title={Neural Prompt Search},
  author={Yuanhan Zhang and Kaiyang Zhou and Ziwei Liu},
  year={2022},
  archivePrefix={arXiv},
}

Part of the code is borrowed from CoOp, AutoFormer, timm and mmcv.

Thanks to Zhou Chong (https://chongzhou96.github.io/) for the code for downloading VTAB-1k.