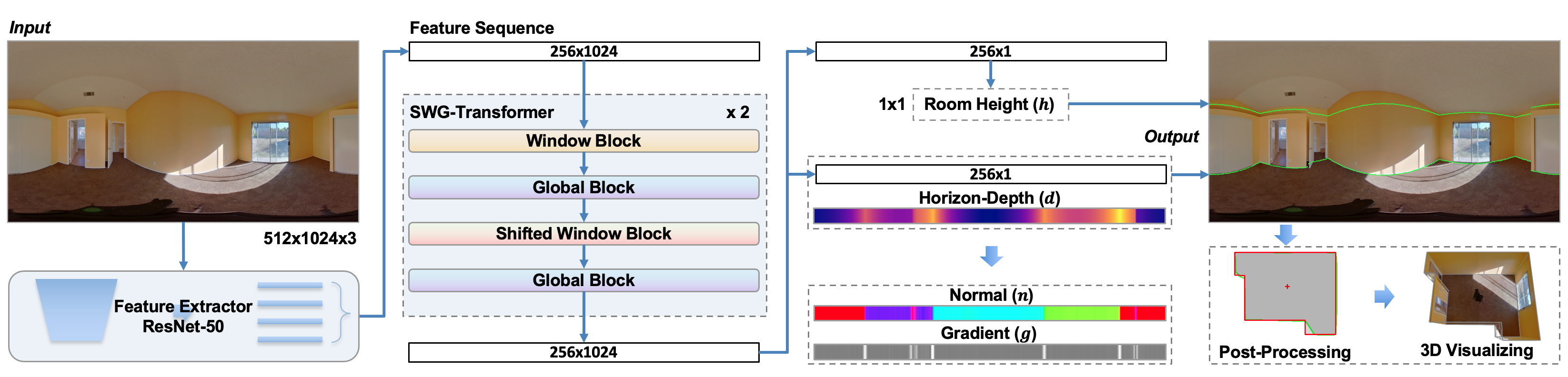

This is the PyTorch implementation of our paper "LGT-Net: Indoor Panoramic Room Layout Estimation with Geometry-Aware Transformer Network" (CVPR'22). [Supplemental Materials] [Video] [Presentation] [Poster]

- 2023.5.18: Updated post-processing. To reproduce the post-processing results reported in the paper, please switch to the old commit. Check out Post-Porcessing.md for more information.

- demo app that runs on HuggingFace Space🤗.

- demo script that runs on Google colab.

Install our dependencies:

```shell
pip install -r requirements.txt
```

The official MatterportLayout dataset is available here.

If you have problems using this dataset, refer to this issue.

Make sure the dataset files are stored as follows:

```
src/dataset/mp3d
|-- image
|   |-- 17DRP5sb8fy_08115b08da534f1aafff2fa81fc73512.png
|-- label
|   |-- 17DRP5sb8fy_08115b08da534f1aafff2fa81fc73512.json
|-- split
    |-- test.txt
    |-- train.txt
    |-- val.txt
```
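Before training, it can help to confirm that every panorama has a matching label. The sketch below relies only on the naming shown in the tree above (`image/<id>.png` paired with `label/<id>.json`); `find_unpaired` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path


def find_unpaired(root: str) -> tuple[list[str], list[str]]:
    """Return (images without labels, labels without images) for a MatterportLayout root.

    Assumes the layout above: image/<id>.png is paired with label/<id>.json.
    """
    root_path = Path(root)
    image_ids = {p.stem for p in (root_path / "image").glob("*.png")}
    label_ids = {p.stem for p in (root_path / "label").glob("*.json")}
    return sorted(image_ids - label_ids), sorted(label_ids - image_ids)


if __name__ == "__main__":
    missing_labels, missing_images = find_unpaired("src/dataset/mp3d")
    print(f"{len(missing_labels)} images lack labels, {len(missing_images)} labels lack images")
```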

Statistics

| Split | All | 4 Corners | 6 Corners | 8 Corners | >=10 Corners |
|---|---|---|---|---|---|
| All | 2295 | 1210 | 502 | 309 | 274 |
| Train | 1647 | 841 | 371 | 225 | 210 |
| Val | 190 | 108 | 46 | 21 | 15 |
| Test | 458 | 261 | 85 | 63 | 49 |
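As a quick sanity check on the table, the corner buckets in each split should add up to the split size, and the three splits should add up to the `All` row. A minimal sketch with the numbers copied from the table (the helper names are hypothetical):

```python
# Corner-count statistics copied from the MatterportLayout table above.
COLUMNS = ["4", "6", "8", ">=10"]
MP3D_STATS = {
    "Train": {"all": 1647, "corners": [841, 371, 225, 210]},
    "Val": {"all": 190, "corners": [108, 46, 21, 15]},
    "Test": {"all": 458, "corners": [261, 85, 63, 49]},
}
MP3D_TOTAL = {"all": 2295, "corners": [1210, 502, 309, 274]}


def check_consistency(splits: dict, total: dict) -> bool:
    """Raise ValueError if rows or columns of the statistics table do not add up."""
    for name, row in splits.items():
        if sum(row["corners"]) != row["all"]:
            raise ValueError(f"{name}: corner buckets do not sum to {row['all']}")
    if sum(row["all"] for row in splits.values()) != total["all"]:
        raise ValueError("split sizes do not sum to the total")
    for i, expected in enumerate(total["corners"]):
        if sum(row["corners"][i] for row in splits.values()) != expected:
            raise ValueError(f"column {COLUMNS[i]} does not sum to {expected}")
    return True


print(check_consistency(MP3D_STATS, MP3D_TOTAL))
```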

The official ZInd dataset is available here.

Make sure the dataset files are stored as follows:

```
src/dataset/zind
|-- 0000
|   |-- panos
|   |   |-- floor_01_partial_room_01_pano_14.jpg
|   |-- zind_data.json
|-- room_shape_simplicity_labels.json
|-- zind_partition.json
```
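To check a ZInd download against this layout, the hypothetical helpers below count panoramas per scene folder and list scene folders without a `zind_data.json`. They rely only on the directory names shown above and do not parse any of the JSON files.

```python
from collections import Counter
from pathlib import Path


def count_panos(root: str) -> Counter:
    """Count panorama images per scene folder, e.g. <root>/0000/panos/*.jpg."""
    counts: Counter = Counter()
    for pano in Path(root).glob("*/panos/*.jpg"):
        # pano.parent is "panos"; its parent is the scene folder such as "0000".
        counts[pano.parent.parent.name] += 1
    return counts


def scenes_missing_metadata(root: str) -> list[str]:
    """Scene folders that do not contain a zind_data.json file."""
    return sorted(
        p.name
        for p in Path(root).iterdir()
        if p.is_dir() and not (p / "zind_data.json").exists()
    )
```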

Statistics

| Split | All | 4 Corners | 5 Corners | 6 Corners | 7 Corners | 8 Corners | 9 Corners | >=10 Corners | Manhattan | Non-Manhattan (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| All | 31132 | 17293 | 1803 | 7307 | 774 | 2291 | 238 | 1426 | 26664 | 4468 (14.35%) |
| Train | 24882 | 13866 | 1507 | 5745 | 641 | 1791 | 196 | 1136 | 21228 | 3654 (14.69%) |
| Val | 3080 | 1702 | 153 | 745 | 81 | 239 | 22 | 138 | 2647 | 433 (14.06%) |
| Test | 3170 | 1725 | 143 | 817 | 52 | 261 | 20 | 152 | 2789 | 381 (12.02%) |
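The percentage in the last column is the share of non-Manhattan layouts in each split, i.e. non-Manhattan / (Manhattan + non-Manhattan). A small sketch that recomputes it from the table (the function name is hypothetical):

```python
# Manhattan / non-Manhattan counts copied from the ZInd table above.
ZIND_MANHATTAN = {
    "All": (26664, 4468),
    "Train": (21228, 3654),
    "Val": (2647, 433),
    "Test": (2789, 381),
}


def non_manhattan_percent(manhattan: int, non_manhattan: int) -> float:
    """Share of non-Manhattan layouts, in percent, rounded as in the last table column."""
    return round(100 * non_manhattan / (manhattan + non_manhattan), 2)


for split, (m, n) in ZIND_MANHATTAN.items():
    print(f"{split}: {m + n} rooms, {non_manhattan_percent(m, n):.2f}% non-Manhattan")
```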

We use the same preprocessed PanoContext/Stanford 2D-3D (pano/s2d3d) data proposed by HorizonNet. You can also download the dataset files directly here.

Make sure the dataset files are stored as follows:

```
src/dataset/pano_s2d3d
|-- test
|   |-- img
|   |   |-- camera_0000896878bd47b2a624ad180aac062e_conferenceRoom_3_frame_equirectangular_domain_.png
|   |-- label_cor
|       |-- camera_0000896878bd47b2a624ad180aac062e_conferenceRoom_3_frame_equirectangular_domain_.txt
|-- train
|   |-- img
|   |-- label_cor
|-- valid
    |-- img
    |-- label_cor
```
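The `label_cor` files hold the corner annotations. The reader below is a sketch that assumes the HorizonNet-style convention of one whitespace-separated `x y` pixel coordinate per line, with ceiling and floor corners listed in alternating pairs; this format is an assumption, so check it against your files before relying on it. Both helper names are hypothetical.

```python
from pathlib import Path


def read_label_cor(path: str) -> list[tuple[float, float]]:
    """Read corner coordinates from a label_cor .txt file.

    Assumption (HorizonNet-style, not verified against this repository):
    one corner per line as "x y" pixel coordinates, ceiling/floor pairs alternating.
    """
    corners = []
    for line in Path(path).read_text().splitlines():
        if not line.strip():
            continue  # skip blank lines
        x, y = map(float, line.split()[:2])
        corners.append((x, y))
    if len(corners) % 2 != 0:
        raise ValueError(f"{path}: expected ceiling/floor pairs, got {len(corners)} corners")
    return corners


def split_ceiling_floor(corners):
    """Split alternating corners into (ceiling, floor) lists under the assumption above."""
    return corners[0::2], corners[1::2]
```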

We provide pre-trained weights for each dataset here.

- mp3d/best.pkl: Trained on the MatterportLayout dataset

- zind/best.pkl: Trained on the ZInd dataset

- pano/best.pkl: Trained on PanoContext (train) + Stanford2D-3D (whole)

- s2d3d/best.pkl: Trained on Stanford2D-3D (train) + PanoContext (whole)

- ablation_study_full/best.pkl: Ablation study, Ours (full), on the MatterportLayout dataset

Make sure the pre-trained weight files are stored as follows:

```
checkpoints
|-- SWG_Transformer_LGT_Net
    |-- ablation_study_full
    |   |-- best.pkl
    |-- mp3d
    |   |-- best.pkl
    |-- pano
    |   |-- best.pkl
    |-- s2d3d
    |   |-- best.pkl
    |-- zind
        |-- best.pkl
```
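A hypothetical helper for locating the downloaded weights, built only from the tree above. It checks that each `best.pkl` is in place but does not load it, since the contents of the `.pkl` files are not described here.

```python
from pathlib import Path

# Sub-directories listed in the checkpoint tree above.
KNOWN_CHECKPOINTS = ("ablation_study_full", "mp3d", "pano", "s2d3d", "zind")


def checkpoint_path(name: str, root: str = "checkpoints") -> Path:
    """Return the expected location of best.pkl for one of the provided weights."""
    if name not in KNOWN_CHECKPOINTS:
        raise ValueError(f"unknown checkpoint {name!r}; expected one of {KNOWN_CHECKPOINTS}")
    return Path(root) / "SWG_Transformer_LGT_Net" / name / "best.pkl"


def missing_checkpoints(root: str = "checkpoints") -> list[str]:
    """Names whose best.pkl is not present at the expected location."""
    return [n for n in KNOWN_CHECKPOINTS if not checkpoint_path(n, root).is_file()]
```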

You can evaluate by executing the following commands:

- MatterportLayout dataset

  ```shell
  python main.py --cfg src/config/mp3d.yaml --mode test --need_rmse
  ```

- ZInd dataset

  ```shell
  python main.py --cfg src/config/zind.yaml --mode test --need_rmse
  ```

- PanoContext dataset

  ```shell
  python main.py --cfg src/config/pano.yaml --mode test --need_cpe --post_processing manhattan --force_cube
  ```

- Stanford 2D-3D dataset

  ```shell
  python main.py --cfg src/config/s2d3d.yaml --mode test --need_cpe --post_processing manhattan --force_cube
  ```

The evaluation options are:

- `--post_processing`: type of post-processing approach. For the `manhattan` constraint, we use the DuLa-Net post-processing, optimized by adding occlusion detection (described here); `manhattan_old` selects the original method. For the `atalanta` constraint, we use a DP algorithm. Default is disabled.
- `--need_rmse`: evaluate the root mean squared error and delta error. Default is disabled.
- `--need_cpe`: evaluate the corner error and pixel error. Default is disabled.
- `--need_f1`: evaluate corner metrics (Precision, Recall and F$_1$-score) with a threshold of 10 pixels (code from here). Default is disabled.
- `--force_cube`: force a cube shape when evaluating. Default is disabled.
- `--wall_num`: evaluate only layouts with the given number of corners. Default is all.
- `--save_eval`: save the visualized evaluation results of each panorama; the output is located in the corresponding checkpoint directory (e.g., `checkpoints/SWG_Transformer_LGT_Net/mp3d/results/test`). Default is disabled.
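For reference, a minimal sketch of the two quantities reported by `--need_rmse`, assuming the common definitions from depth evaluation: RMSE over paired depth samples, and delta accuracy as the fraction of samples with max(pred/gt, gt/pred) < 1.25. Which depths are compared (e.g. per-column layout depth) and the exact threshold used in this repository are assumptions; the evaluation code in this repository is the authoritative implementation. Depths must be positive for the ratio test.

```python
import math
from typing import Sequence


def rmse(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Root mean squared error between predicted and ground-truth depths."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and of equal length")
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(pred))


def delta_accuracy(pred: Sequence[float], gt: Sequence[float], threshold: float = 1.25) -> float:
    """Fraction of samples with max(pred/gt, gt/pred) < threshold (the usual delta_1)."""
    if len(pred) != len(gt) or not pred:
        raise ValueError("pred and gt must be non-empty and of equal length")
    hits = sum(1 for p, g in zip(pred, gt) if max(p / g, g / p) < threshold)
    return hits / len(pred)
```

For example, with `pred = [1.0, 2.0, 3.0]` and `gt = [1.0, 2.0, 4.0]`, only the last sample fails the ratio test (4/3 > 1.25), so the delta accuracy is 2/3.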

Execute the following command to train (e.g., on the MatterportLayout dataset):

```shell
python main.py --cfg src/config/mp3d.yaml --mode train
```

You can copy and modify the YAML configuration file for other training setups.
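To launch the training and evaluation runs described in this README from Python, the sketch below rebuilds those commands as argument lists. `EVAL_FLAGS`, `build_command` and `run` are hypothetical names; the flag sets are copied from the evaluation commands in this README, and `run` only prints the command unless `dry_run=False`.

```python
import shlex
import subprocess

# Evaluation flags copied from the commands in this README.
EVAL_FLAGS = {
    "mp3d": ["--need_rmse"],
    "zind": ["--need_rmse"],
    "pano": ["--need_cpe", "--post_processing", "manhattan", "--force_cube"],
    "s2d3d": ["--need_cpe", "--post_processing", "manhattan", "--force_cube"],
}


def build_command(dataset: str, mode: str = "test") -> list[str]:
    """Build the argv list for main.py in train or test mode."""
    if dataset not in EVAL_FLAGS:
        raise ValueError(f"unknown dataset {dataset!r}")
    cmd = ["python", "main.py", "--cfg", f"src/config/{dataset}.yaml", "--mode", mode]
    if mode == "test":
        cmd += EVAL_FLAGS[dataset]
    return cmd


def run(dataset: str, mode: str = "test", dry_run: bool = True) -> int:
    """Print the command and, unless dry_run is set, execute it and return its exit code."""
    cmd = build_command(dataset, mode)
    print(shlex.join(cmd))
    if dry_run:
        return 0
    return subprocess.run(cmd, check=False).returncode
```

Passing an argument list (rather than a shell string) to `subprocess.run` avoids shell quoting issues; `shlex.join` is used only to print a copy-pasteable version.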

We provide an inference script (`inference.py`) for predicting the layouts of your own panoramas. Run the following command (e.g., using the pre-trained weights for the MatterportLayout dataset):

```shell
python inference.py --cfg src/config/mp3d.yaml --img_glob src/demo/demo1.png --output_dir src/output --post_processing manhattan
```

It outputs JSON files (`xxx_pred.json`, in the same format as PanoAnnotator) and visualization images (`xxx_pred.png`) under `output_dir`.
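To collect the results afterwards, the hypothetical helper below groups the outputs by panorama name using only the `xxx_pred.json` / `xxx_pred.png` naming described above; it does not parse the PanoAnnotator JSON itself.

```python
from pathlib import Path


def collect_predictions(output_dir: str) -> dict[str, dict[str, Path]]:
    """Group inference outputs by panorama name: {name: {"json": ..., "png": ...}}."""
    results: dict[str, dict[str, Path]] = {}
    for path in Path(output_dir).glob("*_pred.*"):
        if path.suffix not in (".json", ".png"):
            continue  # ignore other files, e.g. the vanishing point file
        name = path.name[: -len("_pred" + path.suffix)]
        results.setdefault(name, {})[path.suffix[1:]] = path
    return results
```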

- `--img_glob`: a panorama path or a directory path for prediction.
- `--post_processing`: if `manhattan` is selected, we preprocess the panorama so that the vanishing points are aligned with the axes before post-processing. Note that after this preprocessing, the predicted results will not be aligned with your input panoramas; you can use the output vanishing point file (`vp.txt`) to reverse the alignment manually.
- `--visualize_3d`: 3D visualization of the output results (requires installing additional dependencies and a GUI desktop environment).
- `--output_3d`: output the object file of the 3D mesh reconstruction.

The code style is adapted from Swin-Transformer.

Some components are based on the following projects:

If you use this code for your research, please cite:

```
@InProceedings{jiang2022lgt,
  author    = {Jiang, Zhigang and Xiang, Zhongzheng and Xu, Jinhua and Zhao, Ming},
  title     = {LGT-Net: Indoor Panoramic Room Layout Estimation with Geometry-Aware Transformer Network},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year      = {2022}
}
```