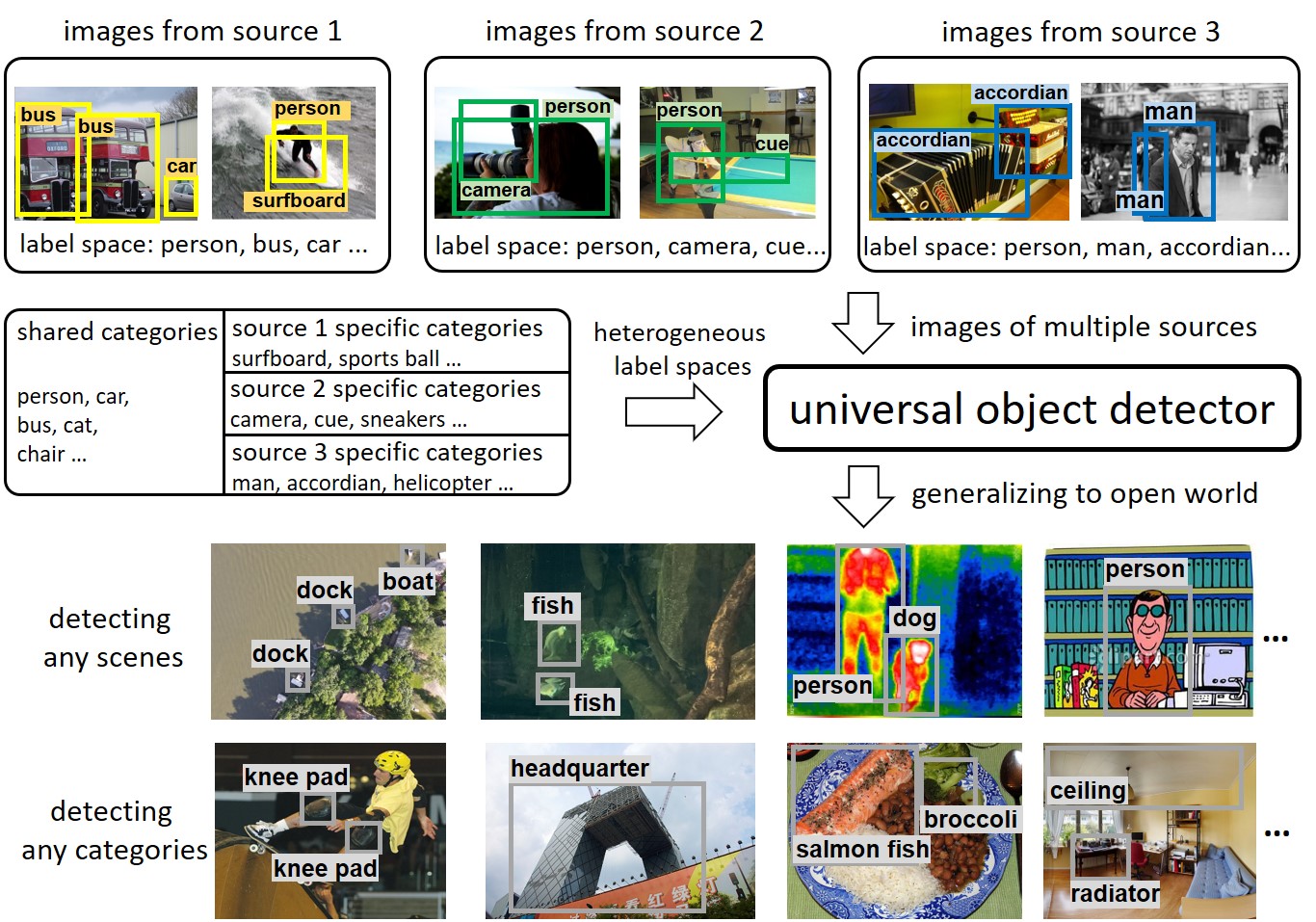

Detecting Everything in the Open World: Towards Universal Object Detection

CVPR 2023

Our code is based on mmdetection v2.18.0. See its official installation guide for setup instructions.

CLIP is also required for running the code.

Please prepare the datasets first.

Prepare the CLIP language embeddings. We have released the pre-computed embeddings in the clip_embeddings folder. You can also run the following script to generate the language embeddings yourself:

python scripts/dump_clip_features_manyprompt.py --ann path_to_annotation_for_datasets --clip_model RN50 --out_path path_to_language_embeddings
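The `--ann` argument points at a dataset annotation file, from which the category names are read. As a rough illustration of that preparation step, the sketch below pulls category names from a COCO-style annotation and expands them into text prompts. The templates and the helper names (`load_annotation`, `category_names`, `build_prompts`) are hypothetical; that the script uses several prompts per category is only inferred from its file name, and the actual encoding with CLIP is left to the released script.

```python
import json

# Hypothetical prompt templates, for illustration only; the templates used
# by the released script may differ.
TEMPLATES = ["a photo of a {}.", "there is a {} in the scene."]


def load_annotation(path):
    """Load a COCO-style annotation file (JSON) into a dict."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def category_names(annotation):
    """Return category names from a COCO-style annotation, ordered by id."""
    cats = sorted(annotation["categories"], key=lambda c: c["id"])
    return [c["name"] for c in cats]


def build_prompts(names, templates=TEMPLATES):
    """Map each category name to the list of prompts built from the templates."""
    return {name: [t.format(name) for t in templates] for name in names}
```

Each list of prompts would then be passed to the CLIP text encoder; how the per-prompt embeddings are combined is not described here and is left open.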

Prepare the pre-trained RegionCLIP parameters. We have released the RegionCLIP parameters converted to the mmdetection format on Google Drive and Baidu Drive (extraction code: bj48). The code for parameter conversion will be released soon.

Train a Faster RCNN model end-to-end on the single COCO dataset (val35k):

bash tools/dist_train.sh configs/singledataset/clip_end2end_faster_rcnn_r50_c4_1x_coco.py 8 --cfg-options load_from=regionclip_pretrained-cc_rn50_mmdet.pth

Train the region proposal stage (our CLN model) on the single COCO dataset (val35k):

bash tools/dist_train.sh configs/singledataset/clip_decouple_faster_rcnn_r50_c4_1x_coco_1ststage.py 8

Extract the pre-computed region proposals:

bash tools/dist_test.sh configs/singledataset/clip_decouple_faster_rcnn_r50_c4_1x_coco_1ststage.py [path_for_trained_checkpoints] 8 --out rp_train.pkl

Modify the datasets in the config file to extract region proposals on the COCO validation dataset as well. The default proposal file names we use are rp_train.pkl and rp_val.pkl, which are specified in the config file of the second stage.
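Before starting the second stage, it can help to sanity-check the proposal files. The following is a minimal sketch, assuming each pickle holds one entry per image and each entry is a sequence of `[x1, y1, x2, y2, score]` rows, which is the usual mmdetection layout for proposal outputs but is not confirmed for this repository. The helper names are hypothetical.

```python
import pickle


def load_proposals(path):
    """Load a proposal file such as rp_train.pkl written by --out."""
    with open(path, "rb") as f:
        return pickle.load(f)


def summarize_proposals(proposals):
    """Return (number of images, mean number of proposals per image).

    Assumes `proposals` has one entry per image and that len(entry)
    gives the number of proposal rows for that image.
    """
    num_images = len(proposals)
    total = sum(len(p) for p in proposals)
    mean = total / num_images if num_images else 0.0
    return num_images, mean
```

For example, `summarize_proposals(load_proposals("rp_train.pkl"))` should report as many images as the training set contains; a mismatch suggests the dataset in the config was not the intended one.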

Train the RoI classification stage on the single COCO dataset (val35k):

bash tools/dist_train.sh configs/singledataset/clip_decouple_faster_rcnn_r50_c4_1x_coco_2ndstage.py 8 --cfg-options load_from=regionclip_pretrained-cc_rn50_mmdet.pth

Run inference on the LVIS v0.5 dataset to evaluate the open-world performance of end-to-end models:

bash tools/dist_test.sh configs/inference/clip_end2end_faster_rcnn_r50_c4_1x_lvis_v0.5.py [path_for_trained_checkpoints] 8 --eval bbox

Extract the pre-computed region proposals:

bash tools/dist_test.sh configs/inference/clip_decouple_faster_rcnn_r50_c4_1x_lvis_v0.5_1ststage.py [path_for_trained_checkpoints] 8 --out rp_val_ow.pkl

Run inference with the pre-computed proposals and the RoI classification stage:

bash tools/dist_test.sh configs/inference/clip_decouple_faster_rcnn_r50_c4_1x_lvis_v0.5_2ndstage.py [path_for_trained_checkpoints] 8 --eval bbox

For inference with probability calibration, first run inference to obtain the detection results used to estimate the prior probability:

bash tools/dist_test.sh configs/inference/clip_decouple_faster_rcnn_r50_c4_1x_lvis_v0.5_2ndstage.py [path_for_trained_checkpoints] 8 --out raw_lvis_results.pkl --eval bbox

raw_lvis_results.pkl is the detection result file name we use by default.

Then run inference with probability calibration:

bash tools/dist_test.sh configs/inference/clip_decouple_faster_rcnn_r50_c4_1x_lvis_v0.5_2ndstage_withcalibration.py [path_for_trained_checkpoints] 8 --eval bbox
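To illustrate why the first inference pass is needed, here is a minimal sketch of one way a category prior can be estimated from raw detection results and used to rescale scores. It assumes the prior of a category is its share of the raw detections and that a score is divided by the prior raised to a power `gamma`; the function names, the `score_thr` and `eps` parameters, and the result layout (per image, one list of `[x1, y1, x2, y2, score]` rows per class) are assumptions for illustration, not the repository's implementation.

```python
def estimate_priors(results, num_classes, score_thr=0.0, eps=1e-6):
    """Estimate a per-category prior from raw detection results.

    `results` is assumed to hold, for each image, one list per class of
    [x1, y1, x2, y2, score] rows. The prior of a class is its share of
    all detections with score >= score_thr, floored at eps.
    """
    counts = [0] * num_classes
    for per_image in results:
        for cls_id, dets in enumerate(per_image):
            counts[cls_id] += sum(1 for det in dets if det[4] >= score_thr)
    total = sum(counts)
    if total == 0:
        # No detections at all: fall back to a uniform prior.
        return [1.0 / num_classes] * num_classes
    return [max(c / total, eps) for c in counts]


def calibrate_score(score, prior, gamma):
    """Divide a score by prior**gamma, boosting rarely predicted categories."""
    return score / (prior ** gamma)
```

With this form, a category that dominates the raw detections has its scores boosted less than a rarely predicted one, which is the intended rebalancing effect; `gamma` controls how strong that effect is.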

The steps for multi-dataset training are generally the same as single-dataset training. Use the config files under configs/multidataset/ for multi-dataset training. We release the config files for training with two datasets (Objects365 and COCO) and three datasets (OpenImages, Objects365 and COCO).

We will release other checkpoints soon.

| Training Data | end-to-end training | decoupled training (1st stage) | decoupled training (2nd stage) |
|---|---|---|---|
| COCO | model | model | model |
| COCO + Objects365 | | | |
| COCO + Objects365 + OpenImages | | | |