By Zhe Chen, Jing Zhang, and Dacheng Tao.

This repository is the implementation of the paper *Recurrent Glimpse-based Decoder for Detection with Transformer*.

This code is heavily based on the implementation of Deformable DETR.

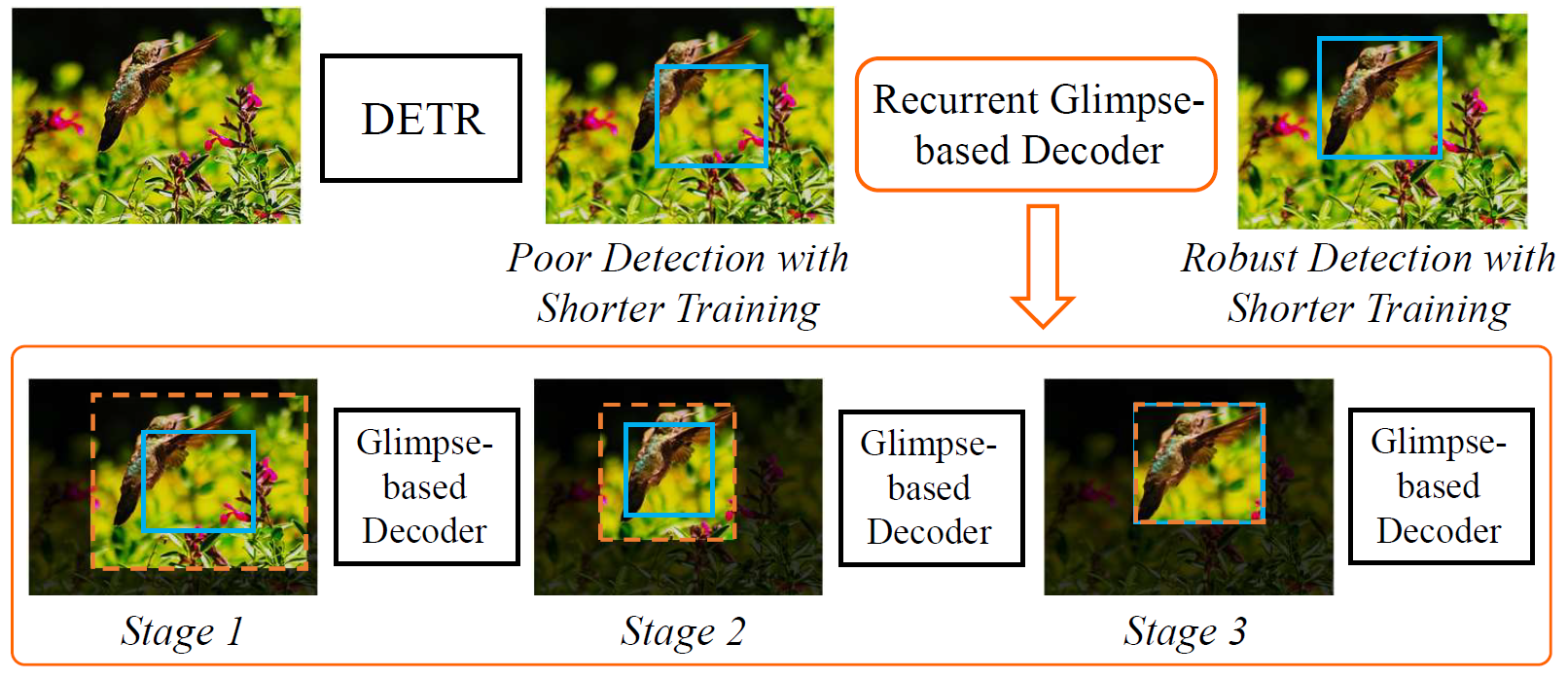

**Abstract.** Although detection with Transformer (DETR) is increasingly popular, its global attention modeling requires an extremely long training period to optimize and achieve promising detection performance. As an alternative to existing studies that mainly develop advanced feature or embedding designs to tackle the training issue, we point out that Region-of-Interest (RoI) based detection refinement can easily help mitigate the difficulty of training DETR methods. Based on this, we introduce a novel REcurrent Glimpse-based decOder (REGO) in this paper. Specifically, REGO employs a multi-stage recurrent processing structure to help the attention of DETR gradually and more accurately focus on foreground objects. In each processing stage, visual features are extracted as glimpse features from RoIs obtained by enlarging the bounding boxes detected in the previous stage. Then, a glimpse-based decoder provides refined detection results based on both the glimpse features and the attention modeling outputs of the previous stage. In practice, REGO can be easily embedded in representative DETR variants while maintaining their fully end-to-end training and inference pipelines. In particular, REGO helps Deformable DETR achieve 44.8 AP on the MSCOCO dataset with only 36 training epochs, whereas the original DETR and Deformable DETR require 500 and 50 epochs, respectively, to achieve comparable performance. Experiments also show that REGO consistently boosts the performance of different DETR detectors, with up to 7% relative gain under the same setting of 50 training epochs.
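The glimpse step described in the abstract can be illustrated with a short Python sketch. This is a minimal illustration under stated assumptions, not the repository's implementation: boxes are taken as absolute `(x1, y1, x2, y2)` pixel coordinates, "enlarging" scales a box's width and height about its center by a per-stage factor and clips it to the image, and `refine_stage` is a hypothetical placeholder for the glimpse-based decoder (no feature extraction or attention is modeled). The names `enlarge_box`, `recurrent_glimpse_refine`, and the scale values used below are illustrative only.

```python
from typing import Callable, List, Sequence, Tuple

# A box is (x1, y1, x2, y2) in absolute pixel coordinates (assumption).
Box = Tuple[float, float, float, float]


def enlarge_box(box: Box, scale: float, img_w: float, img_h: float) -> Box:
    """Scale a box's width and height by `scale` around its center, then clip to the image."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    half_w = (x2 - x1) * scale / 2
    half_h = (y2 - y1) * scale / 2
    return (
        max(0.0, cx - half_w),
        max(0.0, cy - half_h),
        min(img_w, cx + half_w),
        min(img_h, cy + half_h),
    )


# Hypothetical per-stage refinement: receives the stage index, the previous
# stage's boxes, and the enlarged glimpse RoIs, and returns refined boxes.
# In REGO this role is played by a glimpse-based decoder; here it is a placeholder.
RefineFn = Callable[[int, List[Box], List[Box]], List[Box]]


def recurrent_glimpse_refine(
    boxes: Sequence[Box],
    scales: Sequence[float],
    refine_stage: RefineFn,
    img_w: float,
    img_h: float,
) -> List[List[Box]]:
    """Run one refinement stage per entry in `scales`; return the boxes before and after every stage."""
    current = list(boxes)
    history = [current]
    for stage, scale in enumerate(scales):
        # Glimpse RoIs: enlarged versions of the previous stage's detections.
        rois = [enlarge_box(b, scale, img_w, img_h) for b in current]
        current = refine_stage(stage, current, rois)
        history.append(current)
    return history
```

For example, passing `lambda stage, prev, rois: rois` as `refine_stage` simply adopts each stage's enlarged RoI, which makes it easy to check how the glimpse regions grow across stages. In REGO itself, the decoder instead produces refined detections from the glimpse features together with the previous stage's attention outputs.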

This project is released under the Apache 2.0 license.

If you find REGO useful in your research, please consider citing:

```bibtex
@inproceedings{chen2022recurrent,
  title={Recurrent glimpse-based decoder for detection with transformer},
  author={Chen, Zhe and Zhang, Jing and Tao, Dacheng},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={5260--5269},
  year={2022}
}
```

| Method | Epochs | AP | AP<sub>S</sub> | AP<sub>M</sub> | AP<sub>L</sub> | FLOPs (G) | URL |
|---|---|---|---|---|---|---|---|
| Faster R-CNN + FPN | 109 | 42.0 | 26.6 | 45.4 | 53.4 | 180 | - |
| Deformable DETR | 50 | 44.5 | 27.1 | 47.6 | 59.6 | 173 | config, log |
| Deformable DETR \*\* | 50 | 46.2 | 28.3 | 49.2 | 61.5 | 173 | config, log |
| REGO-Deformable DETR | 50 | 46.3 | 28.7 | 49.5 | 60.9 | 190 | config, log (invalid), model (invalid) |
| REGO-Deformable DETR \*\* | 50 | 47.9 | 30.3 | 50.9 | 62.1 | 190 | config, log (invalid), model (invalid) |

Notes:

- Update: Unfortunately, the log files and checkpoints were lost when I finished my post-doctoral position. The data and the original project were stored on a Dropbox account provided by my previous university, and I forgot to transfer them. The results can still be reproduced with the code in this repository, although minor variations may occur due to randomness in training.
- \*\*: Uses the two-stage Deformable DETR with iterative bounding box refinement.
- I have cleaned up the code and re-trained the models, obtaining slightly better performance than reported in the paper.
- Performance may fluctuate between training runs.
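To put the table in the same terms as the abstract's "relative gain", the snippet below computes the relative AP improvement and the relative FLOPs overhead of REGO for the two 50-epoch Deformable DETR settings. The numbers are copied from the table; defining relative gain as `(new - baseline) / baseline` is an assumption about how the abstract's percentage is computed, and the helper name `relative_gain` is illustrative.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Relative change of `improved` with respect to `baseline`, in percent."""
    return (improved - baseline) / baseline * 100.0


# (baseline, REGO) pairs copied from the results table: AP and FLOPs (G).
ROWS = {
    "Deformable DETR": {"ap": (44.5, 46.3), "flops": (173, 190)},
    "Deformable DETR **": {"ap": (46.2, 47.9), "flops": (173, 190)},
}

for name, row in ROWS.items():
    ap_gain = relative_gain(*row["ap"])
    flops_overhead = relative_gain(*row["flops"])
    print(f"{name}: AP +{ap_gain:.1f}% relative, FLOPs +{flops_overhead:.1f}%")
```

This prints a relative AP gain of about 4.0% and 3.7% for the two settings, each at roughly 9.8% more FLOPs. The abstract's "up to 7%" figure refers to the best case across different DETR detectors, not to these two rows.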

For installation, data preparation, training, and evaluation, please follow the instructions of Deformable DETR.

To enable REGO, add `--use_rego` to the arguments of the training or testing scripts.
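`--use_rego` behaves as an on/off switch. As an assumption about how such a switch is typically wired (the actual argument parser in this repository may differ), a boolean flag can be declared with Python's standard `argparse` module using `action="store_true"`, so that omitting it presumably leaves the plain Deformable DETR decoder in place. `build_parser` is a hypothetical name used only for this sketch.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical minimal parser for illustration; the repository's real
    # argument parser defines many more options and may differ.
    parser = argparse.ArgumentParser(description="REGO flag sketch")
    parser.add_argument(
        "--use_rego",
        action="store_true",  # present -> True, absent -> False
        help="enable the recurrent glimpse-based decoder (REGO)",
    )
    return parser


if __name__ == "__main__":
    parser = build_parser()
    print(parser.parse_args(["--use_rego"]).use_rego)  # True
    print(parser.parse_args([]).use_rego)  # False
```

A `store_true` flag needs no value, which matches the usage above of simply appending `--use_rego` to an existing command.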