Official Implementation of LADS (Latent Augmentation using Domain descriptionS)

WARNING: this is still a work in progress (WIP); please raise an issue if you run into any bugs.

@article{dunlap2023lads,
  title={Using Language to Extend to Unseen Domains},
  author={Dunlap, Lisa and Mohri, Clara and Guillory, Devin and Zhang, Han and Darrell, Trevor and Gonzalez, Joseph E. and Raghunathan, Aditi and Rohrbach, Anja},
  journal={International Conference on Learning Representations (ICLR)},
  year={2023}
}

- Install the dependencies for our code using Conda. You may need to adjust the environment YAML file depending on your setup.

conda env create -f environment.yaml

- Launch your environment with

conda activate LADS

or

source activate LADS

- Compute and store CLIP embeddings for each dataset (see below).

- Create an account with Weights and Biases if you don't already have one.

- Run one of the config files and be amazed (or mildly impressed) by what LADS can do!

The configurations for each method are in the configs folder. For example, to try the baseline of plain logistic regression (LR) on the CLIP embeddings, run:

python main.py --config configs/Waterbirds/mlp.yaml

You can also override config parameters from the command line, like so:

python main.py --config configs/Waterbirds/mlp.yaml METHOD.MODEL.LR=0.1 EXP.PROJ=new_project
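The KEY=VALUE arguments after the config path appear to be dotted paths into the nested YAML config. Below is a minimal sketch of how such overrides can be merged into a nested dict, assuming values are parsed as Python literals when possible and kept as strings otherwise. `apply_overrides` and `parse_value` are hypothetical helpers, not the repo's actual parser (which may use a library such as OmegaConf), and the starting config values are made up.

```python
import ast
from typing import Any, Dict, List


def parse_value(raw: str) -> Any:
    """Interpret '0.1' as a float, 'False' as a bool, etc.; fall back to the raw string."""
    try:
        return ast.literal_eval(raw)
    except (ValueError, SyntaxError):
        return raw


def apply_overrides(config: Dict[str, Any], overrides: List[str]) -> Dict[str, Any]:
    """Merge dotted KEY=VALUE overrides (e.g. 'METHOD.MODEL.LR=0.1') into a nested dict."""
    for item in overrides:
        key, _, raw = item.partition("=")
        *parents, leaf = key.split(".")
        node = config
        for part in parents:
            # Create intermediate sections that don't exist yet.
            node = node.setdefault(part, {})
        node[leaf] = parse_value(raw)
    return config


config = {"METHOD": {"MODEL": {"LR": 0.01}}, "EXP": {"PROJ": "LADS"}}  # made-up defaults
apply_overrides(config, ["METHOD.MODEL.LR=0.1", "EXP.PROJ=new_project"])
print(config)
# {'METHOD': {'MODEL': {'LR': 0.1}}, 'EXP': {'PROJ': 'new_project'}}
```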

The supported datasets are in the helpers folder. Currently they are:

- Waterbirds (100% and 95%): our specific split, code to generate data

- ColoredMNIST (Red/Blue color): NOTEBOOK COMING SOON

- DomainNet (the version used in the paper is DATA.DATASET=DomainNetMini): full dataset

- CUB Paintings: photos dataset, paintings dataset

- OfficeHome COMING SOON

You can download the CLIP embeddings of these datasets here. We also have the embeddings for CUB, Waterbirds, and DomainNetMini in the embeddings folder.

Since computing the CLIP embeddings for each train/val/test set is time-consuming, you can store them by setting DATA.LOAD_CACHED=False; the embeddings are then saved to a file at embeddings/{dataset}/clip_{openai,LAION}_{model_name}.
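The compute-once, load-later idea behind caching the embeddings can be sketched as follows. This is a minimal sketch, not the repo's code: it assumes the cache file holds a pickled dict of all splits, and `embed_fn` is a hypothetical stand-in for running the CLIP image encoder over one split. The path only mirrors the embeddings/{dataset}/clip_{openai,LAION}_{model_name} layout above; replacing '/' in model names is an assumption of the sketch.

```python
import os
import pickle
import tempfile
from typing import Callable, Dict, List

# split name -> list of embedding vectors
Embeddings = Dict[str, List[List[float]]]


def cache_path(root: str, dataset: str, source: str, model_name: str) -> str:
    """Mirror the embeddings/{dataset}/clip_{openai,LAION}_{model_name} layout.

    '/' in names such as "ViT-L/14" is replaced so it doesn't create a subdirectory
    (an assumption of this sketch).
    """
    return os.path.join(root, dataset, f"clip_{source}_{model_name.replace('/', '-')}")


def load_or_compute(path: str, splits: List[str],
                    embed_fn: Callable[[str], List[List[float]]],
                    load_cached: bool) -> Embeddings:
    """Return cached embeddings if allowed and present; otherwise compute and store them."""
    if load_cached and os.path.exists(path):
        with open(path, "rb") as f:
            return pickle.load(f)
    # Slow path: run the (expensive) encoder once per split, then write the cache.
    result = {split: embed_fn(split) for split in splits}
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "wb") as f:
        pickle.dump(result, f)
    return result


calls = []


def fake_embed(split: str) -> List[List[float]]:
    calls.append(split)  # count how often the "encoder" runs
    return [[0.0, 1.0], [1.0, 0.0]]


with tempfile.TemporaryDirectory() as root:
    path = cache_path(root, "Waterbirds", "openai", "ViT-L/14")
    load_or_compute(path, ["train", "val", "test"], fake_embed, load_cached=False)  # computes + stores
    load_or_compute(path, ["train", "val", "test"], fake_embed, load_cached=True)   # reads the file
    print(len(calls))  # 3: the second call was served from the cache
```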

All the augmentation methods (LADS and BiasLADS) are in methods/augmentations, while the classifiers and baselines are in methods/clip_transformations.py.

Below is a more detailed description of each method and of the config files in the configs folder.

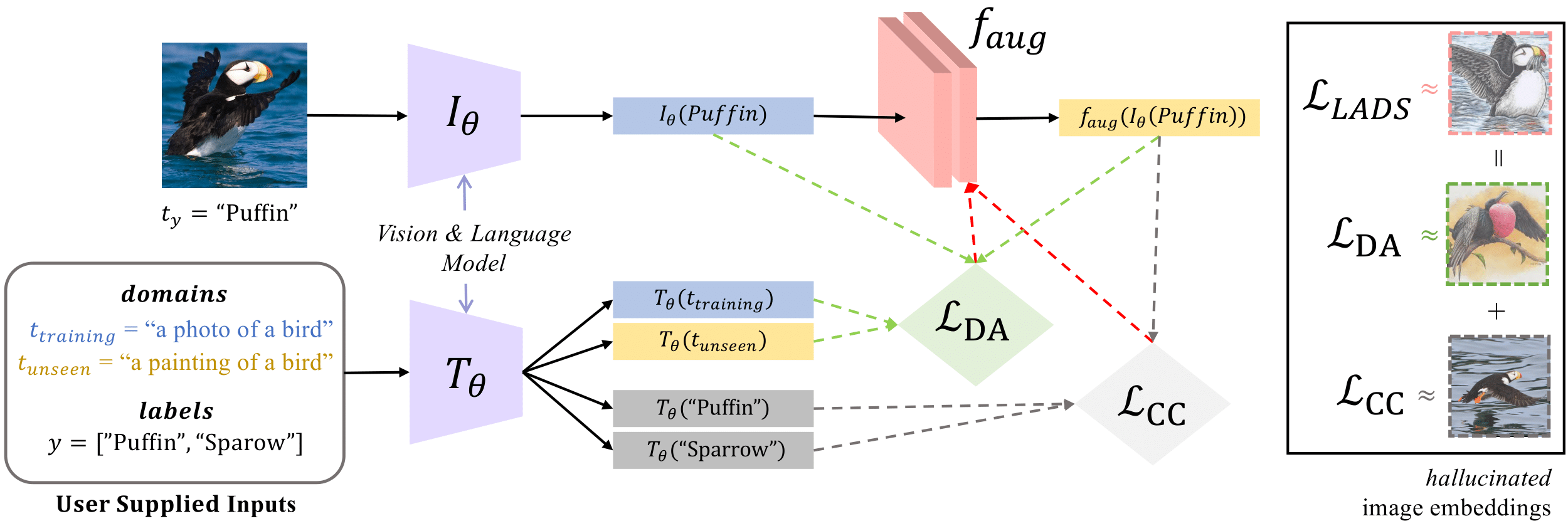

In LADS, we train an augmentation network, use it to augment the training data, and then train a linear probe on the original and augmented data. Thus we use the same ADVICE_METHOD class as the linear-probe baseline and set the EXP.AUGMENTATION parameter to LADS.
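The overall recipe (augment the training embeddings, then fit the probe on original plus augmented data) can be outlined on embeddings as below. This is a simplified sketch, not the trained augmentation network from the paper: as a stand-in for the learned augmentation, it shifts each training embedding along a fixed text direction (target-domain prompt embedding minus source-domain prompt embedding) and renormalizes. The function names and the random "embeddings" are hypothetical; only the final step, stacking original and augmented embeddings with the labels carried over, follows the description above.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize along the last axis, as is usual for CLIP embeddings."""
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def augment(image_emb: np.ndarray, source_text: np.ndarray, target_text: np.ndarray) -> np.ndarray:
    """Stand-in for the augmentation network: translate embeddings toward the target domain."""
    direction = normalize(target_text) - normalize(source_text)
    return normalize(image_emb + direction)


def build_probe_training_set(x: np.ndarray, y: np.ndarray, domain_pairs):
    """Stack the original embeddings with one augmented copy per (source, target) pair.

    Each augmented copy keeps the class label of the embedding it came from.
    """
    xs, ys = [x], [y]
    for source_text, target_text in domain_pairs:
        xs.append(augment(x, source_text, target_text))
        ys.append(y)
    return np.concatenate(xs), np.concatenate(ys)


rng = np.random.default_rng(0)
dim = 8
x_train = normalize(rng.normal(size=(5, dim)))          # fake image embeddings
y_train = np.array([0, 1, 0, 1, 1])
photo, painting, clipart = rng.normal(size=(3, dim))    # fake text embeddings
x_aug, y_aug = build_probe_training_set(x_train, y_train, [(photo, painting), (photo, clipart)])
print(x_aug.shape, y_aug.shape)  # (15, 8) (15,)
```

Any linear classifier can then be fit on `x_aug, y_aug`; in the repo that role is played by the ADVICE_METHOD class.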

To make sure everything is working, run:

python main.py --config configs/CUB/lads.yaml

and check your results with https://wandb.ai/clipinvariance/LADS_CUBPainting_Replication/runs/ok37oz5h.

For the bias datasets, the augmentation class is called BiasLADS, and you can run the lads.yaml configs as well :)

In order to run the CLIP zero-shot baseline, set EXP.ADVICE_METHOD=CLIPZS.

For example

python main.py --config configs/Waterbirds/ZS.yaml

CLIP text templates are located in helpers/text_templates.py, and you can specify which template you want with the EXP.TEMPLATES parameter.

Also note that we use the classes given in EXP.PROMPTS rather than the classes stored in the dataset object itself, so make sure to set them correctly.
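CLIP zero-shot classification, as described in the CLIP paper, fills each template with each class name, averages the normalized text embeddings over templates, and predicts the class whose averaged embedding has the highest cosine similarity with the image embedding. A minimal sketch, assuming a hypothetical `encode_text` in place of the CLIP text encoder (a deterministic toy encoder here so the example runs); the templates and class names are illustrative, not the contents of helpers/text_templates.py.

```python
import hashlib

import numpy as np

DIM = 16


def encode_text(prompts):
    """Toy stand-in for CLIP's text encoder: a fixed pseudo-random vector per string."""
    rows = []
    for p in prompts:
        seed = int.from_bytes(hashlib.sha256(p.encode()).digest()[:4], "little")
        rows.append(np.random.default_rng(seed).normal(size=DIM))
    return np.stack(rows)


def normalize(x):
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def zero_shot_weights(class_names, templates):
    """One row per class: the renormalized mean of its template embeddings."""
    weights = []
    for name in class_names:
        emb = normalize(encode_text([t.format(name) for t in templates]))
        weights.append(normalize(emb.mean(axis=0)))
    return np.stack(weights)


def zero_shot_predict(image_emb, class_names, templates):
    """Cosine similarity against every class row, then argmax."""
    scores = normalize(image_emb) @ zero_shot_weights(class_names, templates).T
    return scores.argmax(axis=1)


classes = ["landbird", "waterbird"]          # plays the role of EXP.PROMPTS
templates = ["a photo of a {}.", "a picture of a {}."]
fake_images = zero_shot_weights(classes, templates).copy()  # each "image" equals one class row
print(zero_shot_predict(fake_images, classes, templates))   # [0 1]
```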

If you simply want to run logistic regression on the embeddings, run the mlp.yaml file in any of the config folders. Some of our methods don't require any training (e.g. HardDebias); all they do is transform the embeddings before the logistic regression.

Note: you do need to save the embeddings for each model; see helpers/dataset_helpers.py.

For example, to run LR on CLIP with a resnet50 backbone on ColoredMNIST, run

python main.py --config configs/ColoredMNIST/mlp.yaml
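Training-free methods such as HardDebias transform the embeddings and then pass them to the same logistic regression. Below is a minimal sketch of one such transformation, assuming the bias is captured by a few directions (for example, differences of text embeddings): every embedding is projected onto the orthogonal complement of those directions. It illustrates the general idea of projection-based debiasing and is not necessarily identical to the repo's HardDebias; `project_out` is a hypothetical name.

```python
import numpy as np


def project_out(embeddings: np.ndarray, bias_directions: np.ndarray) -> np.ndarray:
    """Remove the components of `embeddings` that lie in the span of `bias_directions`.

    embeddings: (n, d); bias_directions: (k, d) with k < d.
    """
    # Orthonormal basis for the bias subspace (columns of q).
    q, _ = np.linalg.qr(bias_directions.T)
    # x - Q Q^T x for every row x.
    debiased = embeddings - (embeddings @ q) @ q.T
    # Renormalize, since CLIP embeddings are usually compared by cosine similarity.
    return debiased / np.linalg.norm(debiased, axis=1, keepdims=True)


rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))
bias = rng.normal(size=(2, 8))
x_debiased = project_out(x, bias)
print(np.abs(x_debiased @ bias.T).max() < 1e-10)  # True: no bias component left
```

The transformed embeddings are then used for logistic regression exactly like the untransformed ones.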

LR Initialized with the CLIP ZS Language Weights

For a small bump in OOD performance, you can run the mlpzs.yaml config to initialize the linear layer with the text embeddings of the classes. The prompts used are dictated by EXP.TEMPLATES, as in zero-shot.
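Initializing the probe with the class text embeddings means the linear layer starts out making the zero-shot predictions, and training only has to adjust them. A minimal numpy sketch, assuming the class text embeddings are already computed and normalized (random stand-ins here) and using plain full-batch gradient descent on softmax cross-entropy; the learning rate, step count, and absence of a logit scale are assumptions, not the settings in mlpzs.yaml.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)  # for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_zs_init_probe(x, y, text_weights, lr=1.0, steps=100):
    """Multinomial logistic regression whose weights start at the class text embeddings.

    x: (n, d) normalized image embeddings; y: (n,) integer labels;
    text_weights: (num_classes, d) normalized class text embeddings.
    """
    w = text_weights.copy()            # step 0 reproduces the zero-shot classifier
    b = np.zeros(len(text_weights))
    onehot = np.eye(len(text_weights))[y]
    for _ in range(steps):
        p = softmax(x @ w.T + b)
        grad = (p - onehot) / len(x)   # d(mean cross-entropy)/d(logits)
        w -= lr * grad.T @ x
        b -= lr * grad.sum(axis=0)
    return w, b


rng = np.random.default_rng(0)
text_weights = rng.normal(size=(3, 8))
text_weights /= np.linalg.norm(text_weights, axis=1, keepdims=True)  # fake class text embeddings
y = np.array([0, 1, 2, 0])
x = text_weights[y] + 0.1 * rng.normal(size=(4, 8))                 # fake image embeddings
x /= np.linalg.norm(x, axis=1, keepdims=True)
w0, b0 = train_zs_init_probe(x, y, text_weights, steps=0)           # untrained: zero-shot classifier
w, b = train_zs_init_probe(x, y, text_weights)                      # fine-tuned probe
print((x @ w.T + b).argmax(axis=1))
```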

EXP.TEXT_PROMPTS

These are the domains/biases that you want to be invariant to. They can be either class specific (e.g. ["a painting of a {}.", "clipart of a {}."]) or generic (e.g. [["painting"], ["clipart"]]). The default is class specific, so if you want to use generic prompts instead, set AUGMENTATION.GENERIC=True. For generic prompts, if you want to average the text embeddings of several phrases describing a domain, simply add them to that domain's list (e.g. [["painting", "a photo of a painting", "an image of a painting"], ["clipart", "clipart of an object"]]).
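The two prompt formats produce differently shaped text embeddings. A minimal sketch, assuming an encoder that returns one normalized vector per string (a toy hash-based encoder is included so it runs): class-specific templates give one embedding per (domain, class) pair, while each generic phrase list is averaged into one embedding per domain. Renormalizing the average is an assumption of the sketch, and `domain_text_embeddings` is a hypothetical name, not the repo's API.

```python
import hashlib
from typing import Callable, List, Sequence, Union

import numpy as np


def toy_encode_text(prompts: Sequence[str]) -> np.ndarray:
    """Stand-in for a CLIP text encoder: a fixed pseudo-random unit vector per string."""
    rows = []
    for p in prompts:
        seed = int.from_bytes(hashlib.sha256(p.encode()).digest()[:4], "little")
        v = np.random.default_rng(seed).normal(size=16)
        rows.append(v / np.linalg.norm(v))
    return np.stack(rows)


def domain_text_embeddings(text_prompts: Union[List[str], List[List[str]]],
                           class_names: List[str],
                           generic: bool,
                           encode_text: Callable = toy_encode_text) -> np.ndarray:
    if generic:
        # [["painting", "a photo of a painting"], ["clipart"]] -> (num_domains, d)
        means = np.stack([encode_text(phrases).mean(axis=0) for phrases in text_prompts])
        return means / np.linalg.norm(means, axis=1, keepdims=True)
    # ["a painting of a {}.", "clipart of a {}."] -> (num_domains, num_classes, d)
    return np.stack([encode_text([t.format(c) for c in class_names]) for t in text_prompts])


classes = ["bird", "plane"]
print(domain_text_embeddings(["a painting of a {}.", "clipart of a {}."], classes, generic=False).shape)
# (2, 2, 16)
print(domain_text_embeddings([["painting", "a photo of a painting"], ["clipart"]], classes, generic=True).shape)
# (2, 16)
```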

EXP.NEUTRAL_PROMPTS

If you want to take the difference in text embeddings (for things like the directional loss, most of the augmentations, and the embedding debiasing methods), you can set a neutral prompt (e.g. ["a sketch of a {}."] or [["a photo of a sketch"]]). Like TEXT_PROMPTS, it can be class specific or generic, but if TEXT_PROMPTS is class specific, NEUTRAL_PROMPTS must be too, and vice versa.
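Subtracting the neutral prompt embedding gives one direction per domain (and per class, for class-specific prompts) pointing from the neutral description toward the domain description. A minimal sketch, assuming both sets of embeddings are already computed; normalizing the directions is a choice made here for convenience and may differ from the repo. `text_directions` is hypothetical, and its shape check encodes the rule above that both prompt types must be class specific or both generic.

```python
import numpy as np


def text_directions(domain_emb: np.ndarray, neutral_emb: np.ndarray) -> np.ndarray:
    """Unit vectors pointing from the neutral prompt embedding to each domain embedding.

    Generic prompts:        domain_emb (num_domains, d),    neutral_emb (1, d)
    Class-specific prompts: domain_emb (num_domains, C, d), neutral_emb (1, C, d)
    """
    if domain_emb.ndim != neutral_emb.ndim:
        raise ValueError("TEXT_PROMPTS and NEUTRAL_PROMPTS must both be generic or both class specific")
    diff = domain_emb - neutral_emb   # broadcasts the single neutral prompt over the domains
    return diff / np.linalg.norm(diff, axis=-1, keepdims=True)


rng = np.random.default_rng(0)
domains = rng.normal(size=(2, 3, 8))   # 2 domains x 3 classes, class specific
neutral = rng.normal(size=(1, 3, 8))   # e.g. ["a sketch of a {}."]
print(text_directions(domains, neutral).shape)  # (2, 3, 8)
```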

EXP.ADVICE_METHOD

This sets the type of linear probing you are doing. Set it to LR to use scikit-learn's logistic regression (as in the CLIP repo), or to ClipMLP for a PyTorch MLP (if METHOD.MODEL.NUM_LAYERS=1, this is LR). ClipMLP typically runs a lot faster than LR.
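Why a one-layer MLP is logistic regression: with no hidden layers, the model is a single affine map from embeddings to class logits, and training it with softmax cross-entropy is multinomial logistic regression. A small numpy sketch of the forward pass, assuming ReLU hidden layers of a fixed width (384 is an arbitrary choice); `mlp_init` and `mlp_forward` are hypothetical and not the repo's ClipMLP class. The two options can still differ in regularization and optimizer; for example, scikit-learn's LogisticRegression applies L2 regularization by default.

```python
import numpy as np


def mlp_init(in_dim, num_classes, num_layers, hidden_dim=384, seed=0):
    """Weights for `num_layers` linear layers; hidden layers use width `hidden_dim`."""
    rng = np.random.default_rng(seed)
    dims = [in_dim] + [hidden_dim] * (num_layers - 1) + [num_classes]
    return [(rng.normal(scale=d_in ** -0.5, size=(d_in, d_out)), np.zeros(d_out))
            for d_in, d_out in zip(dims[:-1], dims[1:])]


def mlp_forward(params, x):
    """Class logits; ReLU between layers but not after the last one."""
    for i, (w, b) in enumerate(params):
        x = x @ w + b
        if i < len(params) - 1:
            x = np.maximum(x, 0.0)
    return x


x = np.random.default_rng(1).normal(size=(4, 768))
lr_params = mlp_init(768, 2, num_layers=1)
print(len(lr_params))  # 1: a single (768 x 2) weight matrix plus bias
w, b = lr_params[0]
print(np.allclose(mlp_forward(lr_params, x), x @ w + b))  # True: plain affine logits, as in LR
```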

The main results and checkpoints of LADS and other baselines can be accessed on wandb.