Compositional Inversion for Stable Diffusion Models

Xu-Lu Zhang1,2, Xiao-Yong Wei1,3, Jin-Lin Wu2,4, Tian-Yi Zhang1, Zhao-Xiang Zhang2,4, Zhen Lei2,4, Qing Li1

1Department of Computing, Hong Kong Polytechnic University,

2Center for Artificial Intelligence and Robotics, HKISI, CAS,

3College of Computer Science, Sichuan University,

4State Key Laboratory of Multimodal Artificial Intelligence Systems, CASIA

Abstract:

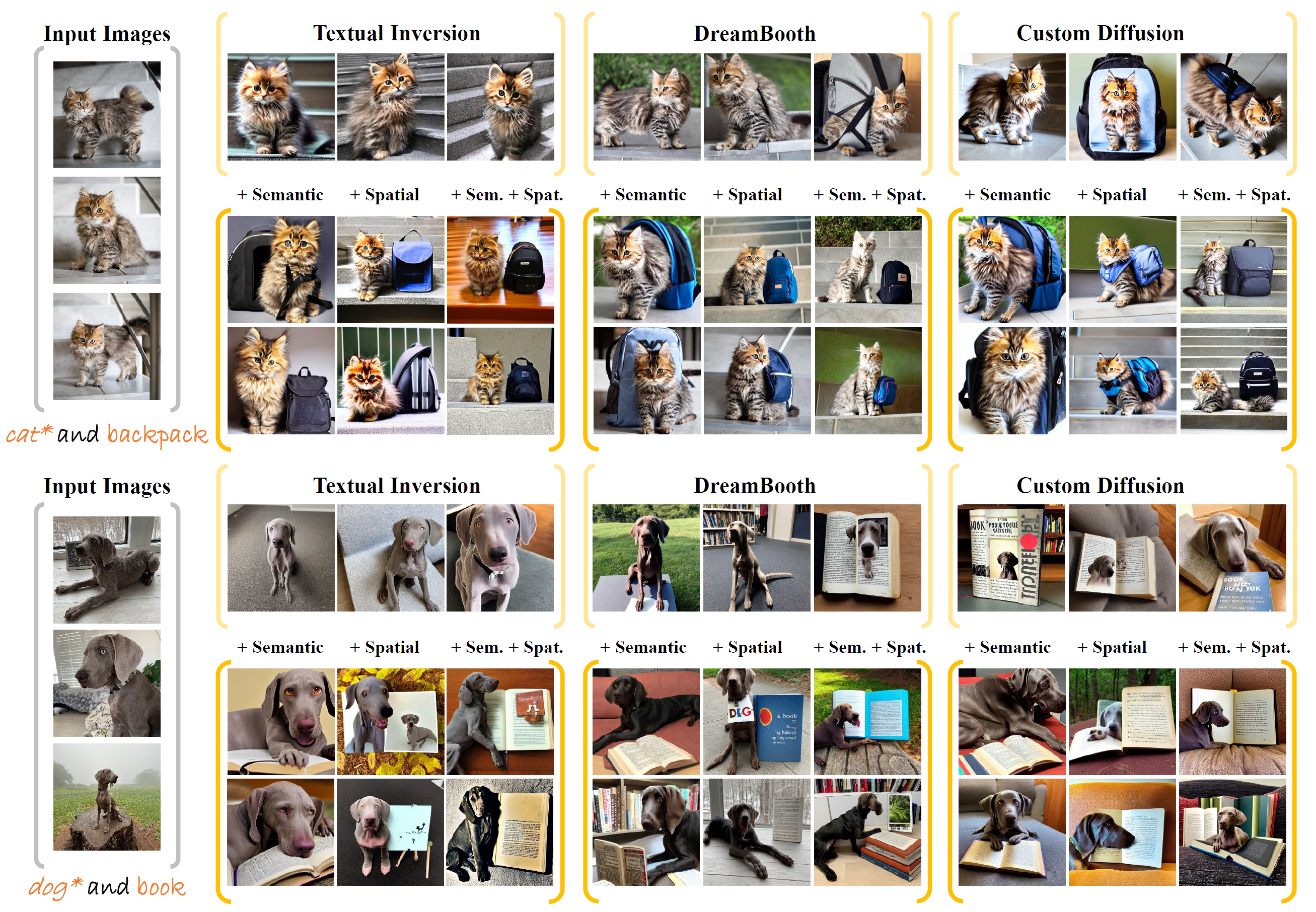

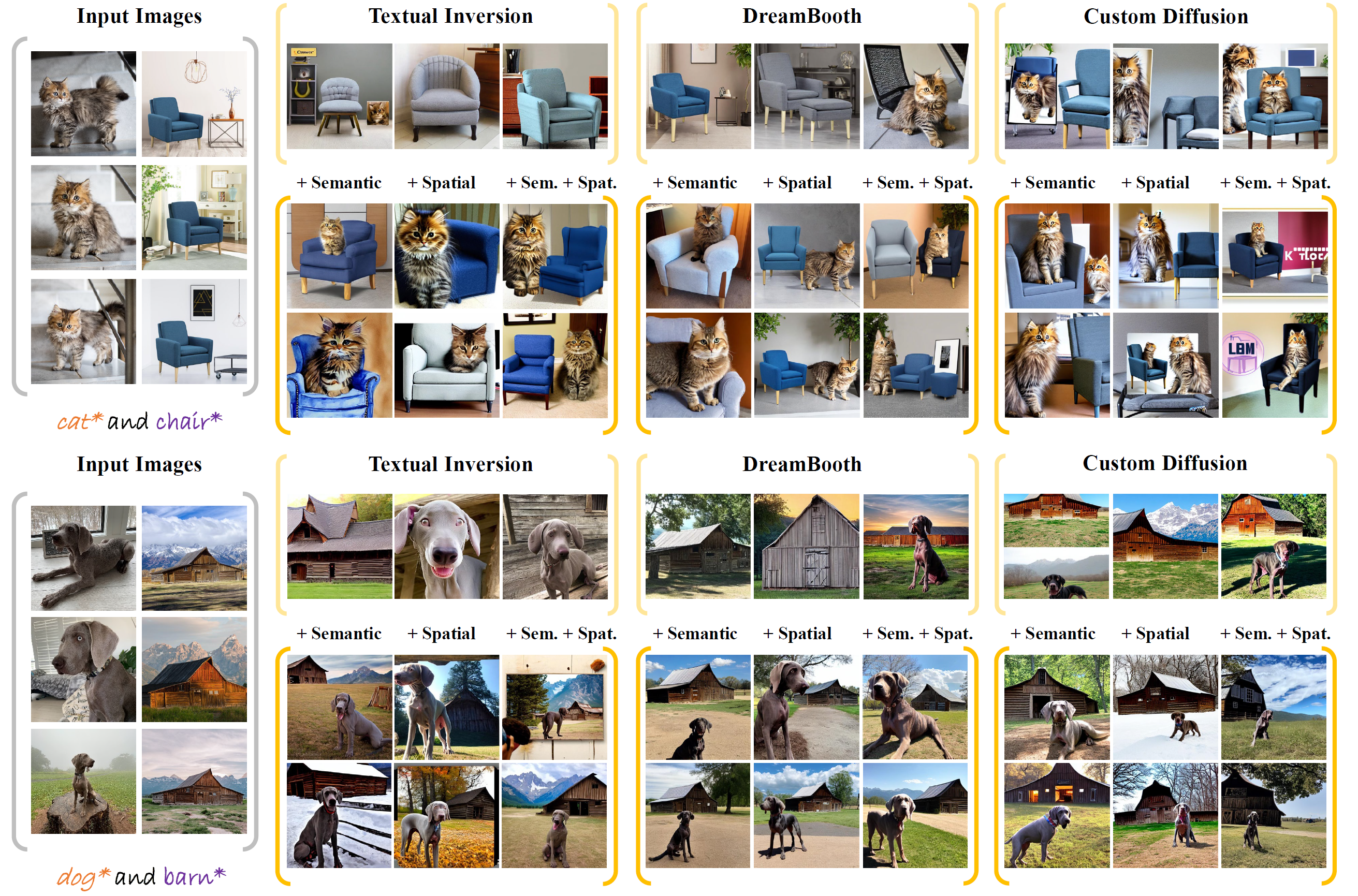

This paper explores the use of inversion methods, specifically Textual Inversion, to generate personalized images by incorporating concepts of interest provided by user images, such as pets or portraits. However, existing inversion methods often suffer from overfitting issues, where the dominant presence of inverted concepts leads to the absence of other desired concepts in the generated images. This overfitting stems from the fact that during inversion, the irrelevant semantics in the user images are also encoded, forcing the inverted concepts to occupy locations far from the core distribution in the embedding space. To address this issue, we propose a method that guides the inversion process towards the core distribution for compositional embeddings. Additionally, we introduce a spatial regularization approach to balance the attention on the concepts being composed. Our method is designed as a post-training approach and can be seamlessly integrated with other inversion methods. Experimental results demonstrate the effectiveness of our proposed approach in mitigating the overfitting problem and generating more diverse and balanced compositions of concepts in the synthesized images.

This repo contains the official implementation of Compositional Inversion. Our code is built on diffusers.

- Release code!

- Support multiple anchors for semantic inversion

- Support automatic layout generation for spatial inversion

- Release pre-trained embeddings

To set up the environment, please run:

git clone https://github.com/zhangxulu1996/Compositional-Inversion.git

cd Compositional-Inversion

conda create -n compositonal_inversion python=3.9

conda activate compositonal_inversion

pip install -r requirements.txt

We conduct experiments on the concepts used in previous studies. You can find the code and resources for the "Custom Diffusion" concept here and for the "Textual Inversion" concept here.

DreamBooth and Custom Diffusion use a small set of real images to prevent overfitting. You can refer to this guidance to prepare the regularization dataset.

The data directory structure should look as follows:

├── real_images
│   └── [concept name]
│   │   ├── images
│   │   │   └── [regularization images]
│   │   └── images.txt
├── reference_images
│   └── [concept name]
│   │   └── [reference images]
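The layout above can be created for a new concept with a few lines of Python. This is a minimal sketch, not part of the repository: the function name `scaffold_data_dirs` and the choice of root folder are assumptions for illustration, and the training scripts may expect the data under a specific path, so check them before relying on it.

```python
from pathlib import Path


def scaffold_data_dirs(root, concept):
    """Create the empty folder layout shown above for one concept.

    `root` is a hypothetical data root; adjust it to whatever the
    training scripts are configured to read from.
    """
    root = Path(root)
    created = [
        root / "real_images" / concept / "images",  # regularization images
        root / "reference_images" / concept,        # user-provided images
    ]
    for directory in created:
        directory.mkdir(parents=True, exist_ok=True)
    # images.txt sits next to the regularization image folder;
    # create it empty if it does not exist yet.
    (root / "real_images" / concept / "images.txt").touch(exist_ok=True)
    return created
```

For example, `scaffold_data_dirs("data", "cat")` would create `data/real_images/cat/images`, `data/real_images/cat/images.txt`, and `data/reference_images/cat`, leaving existing files untouched.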

You can download the regularization dataset we used from Google Drive. Note that these images are from LAION-400M. Remember to check the image paths in "images.txt". Alternatively, you can use synthetic images generated by pre-trained diffusion models as the regularization set.
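Checking the paths in "images.txt" by hand is error-prone, so a small script can help. The sketch below assumes that "images.txt" holds one image path per line and that relative paths are resolved against a base directory you pass in; both are assumptions, so confirm the actual file format before using it. The function name `find_missing_images` is hypothetical.

```python
from pathlib import Path


def find_missing_images(list_file, base_dir="."):
    """Return the entries of list_file that do not point to an existing file.

    Assumes one path per line; blank lines are skipped. Relative paths
    are resolved against base_dir.
    """
    base = Path(base_dir)
    missing = []
    for line in Path(list_file).read_text(encoding="utf-8").splitlines():
        entry = line.strip()
        if not entry:
            continue
        path = Path(entry)
        if not path.is_absolute():
            path = base / path
        if not path.is_file():
            missing.append(entry)
    return missing
```

An empty return value means every listed image was found; otherwise the returned entries are the lines that need to be corrected.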

To invert an image based on Textual Inversion, run:

sh scripts/compositional_textual_inversion.sh

To invert an image based on Custom Diffusion, run:

sh scripts/compositional_custom_diffusion.sh

To invert an image based on DreamBooth, run:

sh scripts/compositional_dreambooth.sh

To generate new images of the learned concept, run:

python inference.py \
    --model_name="custom_diffusion" \
    --spatial_inversion \
    --checkpoint="snapshot/compositional_custom_diffusion/cat" \
    --file_names="<cute-cat>.bin"

Additionally, if you prefer a Jupyter Notebook interface, you can refer to the demo file. This notebook provides a demonstration on generating new images using the semantic inversion and spatial inversion.

To invert two concepts based on Textual Inversion, you should separately invert each concept according to the script provided above.

To invert two concepts based on Custom Diffusion, run:

sh scripts/compositional_custom_diffusion_multi.sh

To invert two concepts based on DreamBooth, run:

sh scripts/compositional_dreambooth_multi.sh

To generate new images of two learned concepts, run:

python inference.py \
    --model_name="custom_diffusion" \
    --spatial_inversion \
    --checkpoint="snapshot/compositional_custom_diffusion/cat+chair" \
    --file_names="<cute-cat>.bin,<blue-chair>.bin"
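If you want to launch inference from Python, for instance to loop over several checkpoints, the command can be assembled as an argument list. This is a hedged sketch: it uses only the flags shown in the commands above, and the helper name `build_inference_command` is hypothetical rather than part of the repository. Passing a list to `subprocess.run` also avoids the shell treating the `<` and `>` in the embedding file names as redirections.

```python
import shlex


def build_inference_command(model_name, checkpoint, file_names,
                            spatial_inversion=True):
    """Build the argv list for inference.py from the flags shown above.

    file_names may be a single name or a list; multiple learned
    concepts are joined with commas, as in the two-concept command.
    """
    if isinstance(file_names, str):
        file_names = [file_names]
    cmd = ["python", "inference.py", f"--model_name={model_name}"]
    if spatial_inversion:
        cmd.append("--spatial_inversion")
    cmd.append(f"--checkpoint={checkpoint}")
    cmd.append("--file_names=" + ",".join(file_names))
    return cmd


cmd = build_inference_command(
    "custom_diffusion",
    "snapshot/compositional_custom_diffusion/cat+chair",
    ["<cute-cat>.bin", "<blue-chair>.bin"],
)
# Print a shell-quoted version for inspection. To execute it, pass the
# list itself to subprocess.run(cmd, check=True) from the repository root.
print(shlex.join(cmd))
```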

To reproduce the results in the paper, please refer to the reproduce notebook. It contains the necessary code and instructions.

The sample results obtained from our proposed method:

If you make use of our work, please cite our paper:

@inproceedings{zhang2024compositional,
  title={Compositional inversion for stable diffusion models},
  author={Zhang, Xulu and Wei, Xiao-Yong and Wu, Jinlin and Zhang, Tianyi and Zhang, Zhaoxiang and Lei, Zhen and Li, Qing},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  volume={38},
  number={7},
  pages={7350--7358},
  year={2024}
}